script

stringlengths 113

767k

|

|---|

import numpy as np

import pandas as pd

import seaborn as sns

import matplotlib.pyplot as plt

import time

data = pd.read_csv("../input/featureselection/data.csv")

data.head()

col = data.columns

print(col)

y = data.diagnosis

list = ["Unnamed: 32", "id", "diagnosis"]

x = data.drop(list, axis=1)

x.head()

ax = sns.countplot(y, label="Count")

B, M = y.value_counts()

print("Number of Benign:", B)

print("Number of Malignant: ", M)

x.describe()

data_dia = y

data = x

data_n_2 = (data - data.mean()) / (data.std())

data = pd.concat([y, data_n_2.iloc[:, 0:10]], axis=1)

data = pd.melt(data, id_vars="diagnosis", var_name="features", value_name="value")

plt.figure(figsize=(12, 5))

sns.violinplot(

x="features", y="value", hue="diagnosis", data=data, split=True, inner="quart"

)

plt.xticks(rotation=90)

data = pd.concat([y, data_n_2.iloc[:, 10:20]], axis=1)

data = pd.melt(data, id_vars="diagnosis", var_name="features", value_name="value")

plt.figure(figsize=(12, 5))

sns.violinplot(

x="features", y="value", hue="diagnosis", data=data, split=True, inner="quart"

)

plt.xticks(rotation=60)

data = pd.concat([y, data_n_2.iloc[:, 20:31]], axis=1)

data = pd.melt(data, id_vars="diagnosis", value_name="value", var_name="features")

plt.figure(figsize=(12, 5))

sns.violinplot(

x="features", y="value", hue="diagnosis", data=data, split=True, inner="quart"

)

plt.xticks(rotation=60)

plt.figure(figsize=(12, 5))

sns.boxplot(x="features", y="value", hue="diagnosis", data=data)

plt.xticks(rotation=60)

sns.jointplot(

x.loc[:, "concavity_worst"], x.loc[:, "concave points_worst"], kind="reg", color="b"

)

sns.set(style="white")

df = x.loc[:, ["radius_worst", "perimeter_worst", "area_worst"]]

g = sns.PairGrid(df, diag_sharey=False)

g.map_lower(sns.kdeplot, cmap="Blues_d")

g.map_upper(plt.scatter)

g.map_diag(sns.kdeplot, lw=3)

sns.set(style="whitegrid", palette="muted")

data_dia = y

data = x

data_n_2 = (data - data.mean()) / (data.std())

data = pd.concat([y, data_n_2.iloc[:, 0:10]], axis=1)

data = pd.melt(data, id_vars="diagnosis", var_name="features", value_name="value")

plt.figure(figsize=(12, 12))

tic = time.time()

sns.swarmplot(x="features", y="value", hue="diagnosis", data=data)

plt.xticks(rotation=60)

data = pd.concat([y, data_n_2.iloc[:, 10:20]], axis=1)

data = pd.melt(data, id_vars="diagnosis", var_name="features", value_name="value")

plt.figure(figsize=(12, 5))

sns.swarmplot(x="features", y="value", hue="diagnosis", data=data)

plt.xticks(rotation=60)

data = pd.concat([y, data_n_2.iloc[:, 20:31]], axis=1)

data = pd.melt(data, id_vars="diagnosis", var_name="features", value_name="value")

plt.figure(figsize=(12, 10))

sns.swarmplot(x="diagnosis", y="value", hue="diagnosis", data=data)

plt.xticks(rotation=60)

f, ax = plt.subplots(figsize=(12, 12))

sns.heatmap(x.corr(), annot=True, linewidths=0.5, fmt=".1f", ax=ax)

drop_list1 = [

"perimeter_mean",

"radius_mean",

"compactness_mean",

"concave points_mean",

"radius_se",

"perimeter_se",

"radius_worst",

"perimeter_worst",

"compactness_worst",

"concave points_worst",

"compactness_se",

"concave points_se",

"texture_worst",

"area_worst",

]

x_1 = x.drop(drop_list1, axis=1)

x_1.head()

f, ax = plt.subplots(figsize=(12, 10))

sns.heatmap(x_1.corr(), annot=True, linewidths=0.5, fmt=".1f", ax=ax)

from sklearn.model_selection import train_test_split

from sklearn.ensemble import RandomForestClassifier

from sklearn.metrics import f1_score, confusion_matrix

from sklearn.metrics import accuracy_score

X_train, X_test, y_train, y_test = train_test_split(

x_1, y, test_size=0.3, random_state=42

)

clf_rf = RandomForestClassifier(random_state=43)

clf_rf = clf_rf.fit(X_train, y_train)

ac = accuracy_score(y_test, clf_rf.predict(X_test))

print("Accuracy is: ", ac)

cm = confusion_matrix(y_test, clf_rf.predict(X_test))

sns.heatmap(cm, annot=True, fmt="d")

from sklearn.feature_selection import SelectKBest

from sklearn.feature_selection import chi2

select_feature = SelectKBest(chi2, k=5).fit(X_train, y_train)

print("Score list: ", select_feature.scores_)

print("Feature list: ", X_train.columns)

X_train_2 = select_feature.transform(X_train)

X_test_2 = select_feature.transform(X_test)

clf_rf_2 = RandomForestClassifier()

clf_rf_2 = clf_rf_2.fit(X_train_2, y_train)

ac_2 = accuracy_score(y_test, clf_rf_2.predict(X_test_2))

print("Accuracy is: ", ac_2)

cm_2 = confusion_matrix(y_test, clf_rf_2.predict(X_test_2))

sns.heatmap(cm_2, annot=True, fmt="d")

from sklearn.feature_selection import RFE

clf_rf_3 = RandomForestClassifier()

rfe = RFE(estimator=clf_rf_3, n_features_to_select=5, step=1)

rfe = rfe.fit(X_train, y_train)

print("Chosen best 5 features by rfe: ", X_train.columns[rfe.support_])

from sklearn.feature_selection import RFECV

clf_rf_4 = RandomForestClassifier()

rfecv = RFECV(estimator=clf_rf_4, step=1, cv=5, scoring="accuracy")

rfecv = rfecv.fit(X_train, y_train)

print("Optimal number of features: ", rfecv.n_features_)

print("Best features: ", X_train.columns[rfecv.support_])

import matplotlib.pyplot as plt

plt.figure()

plt.xlabel("Number of featuress selected")

plt.ylabel("Cross validation score of number of selected features")

plt.plot(range(1, len(rfecv.grid_scores_) + 1), rfecv.grid_scores_)

clf_rf_5 = RandomForestClassifier()

clf_rf_5 = clf_rf_5.fit(X_train, y_train)

importances = clf_rf_5.feature_importances_

std = np.std([tree.feature_importances_ for tree in clf_rf.estimators_], axis=0)

indices = np.argsort(importances)[::-1]

print("Feature ranking: ")

for f in range(X_train.shape[1]):

print("%d. feature %d (%f)" % (f + 1, indices[f], importances[indices[f]]))

plt.figure(1, figsize=(12, 8))

plt.title("Feature importances ")

plt.bar(

range(X_train.shape[1]),

importances[indices],

color="g",

yerr=std[indices],

align="center",

)

plt.xticks(range(X_train.shape[1]), X_train.columns[indices], rotation=60)

plt.xlim([-1, X_train.shape[1]])

X_train, X_test, y_train, y_test = train_test_split(

x, y, test_size=0.3, random_state=42

)

X_train_N = (X_train - X_train.mean()) / (X_train.max() - X_train.min())

X_test_N = (X_test - X_test.mean()) / (X_test.max() - X_test.min())

from sklearn.decomposition import PCA

pca = PCA()

pca.fit(X_train_N)

plt.figure(1, figsize=(12, 8))

plt.clf()

plt.axes([0.2, 0.2, 0.7, 0.7])

plt.plot(pca.explained_variance_ratio_, linewidth=2)

plt.axis("tight")

plt.xlabel("n_components")

plt.ylabel("explainbed_variance_ratio_")

|

import numpy as np # linear algebra

import pandas as pd # data processing, CSV file I/O (e.g. pd.read_csv)

# Input data files are available in the read-only "../input/" directory

# For example, running this (by clicking run or pressing Shift+Enter) will list all files under the input directory

import os

for dirname, _, filenames in os.walk("/kaggle/input"):

for filename in filenames:

print(os.path.join(dirname, filename))

# You can write up to 20GB to the current directory (/kaggle/working/) that gets preserved as output when you create a version using "Save & Run All"

# You can also write temporary files to /kaggle/temp/, but they won't be saved outside of the current session

train = pd.read_csv("/kaggle/input/titanic/train.csv")

test = pd.read_csv("/kaggle/input/titanic/test.csv")

# ## Discriptive Analysis

train.head()

train.describe()

# Observation:

# 1. Fare is skewed as mean and 50% values are not near to each other.

train.info()

# Observation:

# 1. Age, Cabin, Embarked have null value.

# ## EDA

import plotly.express as px

import matplotlib

import matplotlib.pyplot as plt

import seaborn as sns

sns.set_style("darkgrid")

matplotlib.rcParams["font.size"] = 14

matplotlib.rcParams["figure.figsize"] = (10, 6)

matplotlib.rcParams["figure.facecolor"] = "#00000000"

px.histogram(train, x="Survived")

# 1. 38% survived of the total people onboard survived.

sns.histplot(train, x="Pclass", hue="Survived")

# 1. It shows large amount of people traveled in 3rd class.

# 2. Survival rate of 1st class people is higher than 3rd class people.

px.histogram(train, x="Age", color="Survived")

# 1. Large number of people between age 20 - 35 age travelled.

# 2. Children and people aged between 20-40 survived more.

px.histogram(train, x="Sex", color="Survived")

# 1. Number of male survived is less than female.

train[train["Survived"] == 1].groupby("SibSp").count()["Name"] / train.groupby(

"SibSp"

).count()["Name"].sum()

train[train["Survived"] == 0].groupby("SibSp").count()["Name"] / train.groupby(

"SibSp"

).count()["Name"].sum()

# 1. 68% people have no Siblings or wife.

# 2. 23 % people without siblings or wife survived.

px.histogram(train, x="SibSp", color="Survived")

# 1. Lesser the SibSp value, more the chance of survival.

train[train["Survived"] == 1].groupby("Parch").count()["Name"] / train.groupby(

"SibSp"

).count()["Name"].sum()

train[train["Survived"] == 0].groupby("Parch").count()["Name"] / train.groupby(

"SibSp"

).count()["Name"].sum()

# 1. 75% have no parents or children and 26% survived in that.

px.histogram(train, x="Parch", color="Survived")

# 1. Lesser the value of Parch, more the chance of survival.

px.histogram(train, x="Fare", color="Survived")

# 1. Fare column have exp distribution.

px.histogram(train, x="Embarked", color="Survived")

# Large number of people embarked on S post.

# #### Summary of the obervation

# 1. 38% survived of the total people onboard survived.

# 2. Survival rate of 1st class people is higher than 3rd class people.

# 3. 68 % people have no Siblings or wife.

# 4. 23 % people without siblings or wife survived.

# 5. 75% have no parents or children and 26% survived in that.

# 6. Fare price is largely skewed and few people paid fare as high as $512.

# ## Addressing Missing Value

train.isna().sum()

train.describe(include=["O"])

# 1. Cabin is being shared by people. This gives the picture like, a family shares one cabin or alternatively cheap ticket traveller share cabins with other traveller.

# #### Assumptions

# 1. We can drop Ticket, Cabin, PassengerID cols as they dont directly contribute to Survival rate.Also Cabin col contain lot of null value.

# 2. Lets fill the age col with median of the col and Embarked with mode of the col.

def missing_value(df):

df = df.drop(["Ticket", "Cabin", "PassengerId", "Name"], axis=1)

df["Age"].fillna(df["Age"].median(), inplace=True)

df["Embarked"].fillna(df["Embarked"].mode()[0], inplace=True)

return df

train = missing_value(train)

train

# As large number of people are around 20-35 age group. We choose to fill null value of column Age with median.

train.isna().sum()

# #### Onehot Encode of Categorical Col

def categorical(df):

df["Sex"] = df["Sex"].map({"female": 1, "male": 0}).astype(int)

df["Embarked"] = df["Embarked"].map({"S": 0, "C": 1, "Q": 2})

return df

train = categorical(train)

# ## Model building

X_train = train.drop(["Survived"], axis=1)

y_train = train["Survived"]

from sklearn.linear_model import LogisticRegression

model1 = LogisticRegression(random_state=0, solver="liblinear")

model1.fit(X_train, y_train)

model1.score(X_train, y_train)

from sklearn.ensemble import RandomForestClassifier

random_forest = RandomForestClassifier(n_estimators=100)

random_forest.fit(X_train, y_train)

random_forest.score(X_train, y_train)

acc_random_forest = round(random_forest.score(X_train, y_train) * 100, 2)

acc_random_forest

# Thus Random Forest Classifier perform best.

# #### Test set Prediction

test.isna().sum()

test = missing_value(test)

test = categorical(test)

test["Fare"] = test["Fare"].fillna(test["Fare"].median())

test.isna().sum()

Y_pred = random_forest.predict(test)

test_df = pd.read_csv("/kaggle/input/titanic/test.csv")

submission = pd.DataFrame({"PassengerId": test_df["PassengerId"], "Survived": Y_pred})

submission.to_csv("submission.csv", index=False)

|

# #Assignment - Transliteration

# In this task you are required to solve the transliteration problem of names from English to Russian. Transliteration of a string means writing this string using the alphabet of another language with the preservation of pronunciation, although not always.

# ## Instructions

# To complete the assignment please do the following steps (both are requred to get the full credits):

# ### 1. Complete this notebook

# Upload a filled notebook with code (this file). You will be asked to implement a transformer-based approach for transliteration.

# You should implement your ``train`` and ``classify`` functions in this notebook in the cells below. Your model should be implemented as a special class/function in this notebook (be sure if you add any outer dependencies that everything is improted correctly and can be reproducable).

# ###2. Submit solution to the shared task

# After the implementation of models' architectures you are asked to participate in the [competition](https://competitions.codalab.org/competitions/30932) to solve **Transliteration** task using your implemented code.

# You should use your code from the previous part to train, validate, and generate predictions for the public (Practice) and private (Evaluation) test sets. It will produce predictions (`preds_translit.tsv`) for the dataset and score them if the true answers are present. You can use these scores to evaluate your model on dev set and choose the best one. Be sure to download the [dataset](https://github.com/skoltech-nlp/filimdb_evaluation/blob/master/TRANSLIT.tar.gz) and unzip it with `wget` command and run them from notebook cells.

# Upload obtained TSV file with your predictions (``preds_translit.tsv``) in ``.zip`` for the best results to both phases of the competition.

# **Important: You must indicate "DL4NLP-23" as your team name in Codalab. Without it your submission will be invalid!**

# ## Basic algorithm

# The basic algorithm is based on the following idea: for transliteration, alphabetic n-grams from one language can be transformed into another language into n-grams of the same size, using the most frequent transformation rule found according to statistics on the training sample.

# To test the implementation, download the data, unzip the datasets, predict transliteration and run the evaluation script. To do this, you need to run the following commands:

# ### Baseline code

from typing import List, Any

from random import random

import collections as col

def baseline_train(

train_source_strings: List[str], train_target_strings: List[str]

) -> Any:

"""

Trains transliretation model on the given train set represented as

parallel list of input strings and their transliteration via labels.

:param train_source_strings: a list of strings, one str per example

:param train_target_strings: a list of strings, one str per example

:return: learnt parameters, or any object you like (it will be passed to the classify function)

"""

ngram_lvl = 3

def obtain_train_dicts(train_source_strings, train_target_strings, ngram_lvl):

ngrams_dict = col.defaultdict(lambda: col.defaultdict(int))

for src_str, dst_str in zip(train_source_strings, train_target_strings):

try:

src_ngrams = [

src_str[i : i + ngram_lvl]

for i in range(len(src_str) - ngram_lvl + 1)

]

dst_ngrams = [

dst_str[i : i + ngram_lvl]

for i in range(len(dst_str) - ngram_lvl + 1)

]

except TypeError as e:

print(src_ngrams, dst_ngrams)

print(e)

raise StopIteration

for src_ngram in src_ngrams:

for dst_ngram in dst_ngrams:

ngrams_dict[src_ngram][dst_ngram] += 1

return ngrams_dict

ngrams_dict = col.defaultdict(lambda: col.defaultdict(int))

for nl in range(1, ngram_lvl + 1):

ngrams_dict.update(

obtain_train_dicts(train_source_strings, train_target_strings, nl)

)

return ngrams_dict

def baseline_classify(strings: List[str], params: Any) -> List[str]:

"""

Classify strings given previously learnt parameters.

:param strings: strings to classify

:param params: parameters received from train function

:return: list of lists of predicted transliterated strings

(for each source string -> [top_1 prediction, .., top_k prediction]

if it is possible to generate more than one, otherwise

-> [prediction])

corresponding to the given list of strings

"""

def predict_one_sample(sample, train_dict, ngram_lvl=1):

ngrams = [

sample[i : i + ngram_lvl]

for i in range(

0, (len(sample) // ngram_lvl * ngram_lvl) - ngram_lvl + 1, ngram_lvl

)

] + (

[]

if len(sample) % ngram_lvl == 0

else [sample[-(len(sample) % ngram_lvl) :]]

)

prediction = ""

for ngram in ngrams:

ngram_dict = train_dict[ngram]

if len(ngram_dict.keys()) == 0:

prediction += "?" * len(ngram)

else:

prediction += max(ngram_dict, key=lambda k: ngram_dict[k])

return prediction

ngram_lvl = 3

predictions = []

ngrams_dict = params

for string in strings:

top_1_pred = predict_one_sample(string, ngrams_dict, ngram_lvl)

predictions.append([top_1_pred])

return predictions

# ### Evaluation code

PREDS_FNAME = "preds_translit_baseline.tsv"

SCORED_PARTS = ("train", "dev", "train_small", "dev_small", "test")

TRANSLIT_PATH = "TRANSLIT"

import codecs

from pandas import read_csv

def load_dataset(data_dir_path=None, parts: List[str] = SCORED_PARTS):

part2ixy = {}

for part in parts:

path = os.path.join(data_dir_path, f"{part}.tsv")

with open(path, "r", encoding="utf-8") as rf:

# first line is a header of the corresponding columns

lines = rf.readlines()[1:]

col_count = len(lines[0].strip("\n").split("\t"))

if col_count == 2:

strings, transliterations = zip(

*list(map(lambda l: l.strip("\n").split("\t"), lines))

)

elif col_count == 1:

strings = list(map(lambda l: l.strip("\n"), lines))

transliterations = None

else:

raise ValueError("wrong amount of columns")

part2ixy[part] = (

[f"{part}/{i}" for i in range(len(strings))],

strings,

transliterations,

)

return part2ixy

def load_transliterations_only(data_dir_path=None, parts: List[str] = SCORED_PARTS):

part2iy = {}

for part in parts:

path = os.path.join(data_dir_path, f"{part}.tsv")

with open(path, "r", encoding="utf-8") as rf:

# first line is a header of the corresponding columns

lines = rf.readlines()[1:]

col_count = len(lines[0].strip("\n").split("\t"))

n_lines = len(lines)

if col_count == 2:

transliterations = [l.strip("\n").split("\t")[1] for l in lines]

elif col_count == 1:

transliterations = None

else:

raise ValueError("Wrong amount of columns")

part2iy[part] = (

[f"{part}/{i}" for i in range(n_lines)],

transliterations,

)

return part2iy

def save_preds(preds, preds_fname):

"""

Save classifier predictions in format appropriate for scoring.

"""

with codecs.open(preds_fname, "w") as outp:

for idx, preds in preds:

print(idx, *preds, sep="\t", file=outp)

print("Predictions saved to %s" % preds_fname)

def load_preds(preds_fname, top_k=1):

"""

Load classifier predictions in format appropriate for scoring.

"""

kwargs = {

"filepath_or_buffer": preds_fname,

"names": ["id", "pred"],

"sep": "\t",

}

pred_ids = list(read_csv(**kwargs, usecols=["id"])["id"])

pred_y = {

pred_id: [y]

for pred_id, y in zip(pred_ids, read_csv(**kwargs, usecols=["pred"])["pred"])

}

for y in pred_y.values():

assert len(y) == top_k

return pred_ids, pred_y

def compute_hit_k(preds, k=10):

raise NotImplementedError

def compute_mrr(preds):

raise NotImplementedError

def compute_acc_1(preds, true):

right_answers = 0

bonus = 0

for pred, y in zip(preds, true):

if pred[0] == y:

right_answers += 1

elif pred[0] != pred[0] and y == "нань":

print(

"Your test file contained empty string, skipping %f and %s"

% (pred[0], y)

)

bonus += 1 # bugfix: skip empty line in test

return right_answers / (len(preds) - bonus)

def score(preds, true):

assert len(preds) == len(

true

), "inconsistent amount of predictions and ground truth answers"

acc_1 = compute_acc_1(preds, true)

return {"acc@1": acc_1}

def score_preds(preds_path, data_dir, parts=SCORED_PARTS):

part2iy = load_transliterations_only(data_dir, parts=parts)

pred_ids, pred_dict = load_preds(preds_path)

# pred_dict = {i:y for i,y in zip(pred_ids, pred_y)}

scores = {}

for part, (true_ids, true_y) in part2iy.items():

if true_y is None:

print("no labels for %s set" % part)

continue

pred_y = [pred_dict[i] for i in true_ids]

score_values = score(pred_y, true_y)

acc_1 = score_values["acc@1"]

print("%s set accuracy@1: %.2f" % (part, acc_1))

scores[part] = score_values

return scores

# ### Train and predict results

from time import time

import numpy as np

import os

def train_and_predict(translit_path, scored_parts):

top_k = 1

part2ixy = load_dataset(translit_path, parts=scored_parts)

train_ids, train_strings, train_transliterations = part2ixy["train"]

print(

"\nTraining classifier on %d examples from train set ..." % len(train_strings)

)

st = time()

params = baseline_train(train_strings, train_transliterations)

print("Classifier trained in %.2fs" % (time() - st))

allpreds = []

for part, (ids, x, y) in part2ixy.items():

print("\nClassifying %s set with %d examples ..." % (part, len(x)))

st = time()

preds = baseline_classify(x, params)

print("%s set classified in %.2fs" % (part, time() - st))

count_of_values = list(map(len, preds))

assert np.all(np.array(count_of_values) == top_k)

# score(preds, y)

allpreds.extend(zip(ids, preds))

save_preds(allpreds, preds_fname=PREDS_FNAME)

print("\nChecking saved predictions ...")

return score_preds(

preds_path=PREDS_FNAME, data_dir=translit_path, parts=scored_parts

)

train_and_predict(TRANSLIT_PATH, SCORED_PARTS)

# ## Transformer-based approach

# To implement your algorithm, use the template code, which needs to be modified.

# First, you need to add some details in the code of the Transformer architecture, implement the methods of the class `LrScheduler`, which is responsible for updating the learning rate during training.

# Next, you need to select the hyperparameters for the model according to the proposed guide.

import torch

import torch.nn as nn

import torch.nn.functional as F

import pandas as pd

import numpy as np

import itertools as it

import collections as col

import random

import os

import copy

import json

from tqdm import tqdm

import datetime, time

import copy

import os

import pandas as pd

import torch

import torch.nn as nn

import torch.utils.data as torch_data

import itertools as it

import collections as col

import random

import Levenshtein as le

# ### Load dataset and embeddings

def load_datasets(data_dir_path, parts):

datasets = {}

for part in parts:

path = os.path.join(data_dir_path, f"{part}.tsv")

datasets[part] = pd.read_csv(path, sep="\t", na_filter=False)

print(f"Loaded {part} dataset, length: {len(datasets[part])}")

return datasets

class TextEncoder:

def __init__(self, load_dir_path=None):

self.lang_keys = ["en", "ru"]

self.directions = ["id2token", "token2id"]

self.service_token_names = {

"pad_token": "<pad>",

"start_token": "<start>",

"unk_token": "<unk>",

"end_token": "<end>",

}

service_id2token = dict(enumerate(self.service_token_names.values()))

service_token2id = {v: k for k, v in service_id2token.items()}

self.service_vocabs = dict(

zip(self.directions, [service_id2token, service_token2id])

)

if load_dir_path is None:

self.vocabs = {}

for lk in self.lang_keys:

self.vocabs[lk] = copy.deepcopy(self.service_vocabs)

else:

self.vocabs = self.load_vocabs(load_dir_path)

def load_vocabs(self, load_dir_path):

vocabs = {}

load_path = os.path.join(load_dir_path, "vocabs")

for lk in self.lang_keys:

vocabs[lk] = {}

for d in self.directions:

columns = d.split("2")

print(lk, d)

df = pd.read_csv(os.path.join(load_path, f"{lk}_{d}"))

vocabs[lk][d] = dict(zip(*[df[c] for c in columns]))

return vocabs

def save_vocabs(self, save_dir_path):

save_path = os.path.join(save_dir_path, "vocabs")

os.makedirs(save_path, exist_ok=True)

for lk in self.lang_keys:

for d in self.directions:

columns = d.split("2")

pd.DataFrame(data=self.vocabs[lk][d].items(), columns=columns).to_csv(

os.path.join(save_path, f"{lk}_{d}"), index=False, sep=","

)

def make_vocabs(self, data_df):

for lk in self.lang_keys:

tokens = col.Counter("".join(list(it.chain(*data_df[lk])))).keys()

part_id2t = dict(enumerate(tokens, start=len(self.service_token_names)))

part_t2id = {k: v for v, k in part_id2t.items()}

part_vocabs = [part_id2t, part_t2id]

for i in range(len(self.directions)):

self.vocabs[lk][self.directions[i]].update(part_vocabs[i])

self.src_vocab_size = len(self.vocabs["en"]["id2token"])

self.tgt_vocab_size = len(self.vocabs["ru"]["id2token"])

def frame(self, sample, start_token=None, end_token=None):

if start_token is None:

start_token = self.service_token_names["start_token"]

if end_token is None:

end_token = self.service_token_names["end_token"]

return [start_token] + sample + [end_token]

def token2id(self, samples, frame, lang_key):

if frame:

samples = list(map(self.frame, samples))

vocab = self.vocabs[lang_key]["token2id"]

return list(

map(

lambda s: [

vocab[t]

if t in vocab.keys()

else vocab[self.service_token_names["unk_token"]]

for t in s

],

samples,

)

)

def unframe(self, sample, start_token=None, end_token=None):

if start_token is None:

start_token = self.service_vocabs["token2id"][

self.service_token_names["start_token"]

]

if end_token is None:

end_token = self.service_vocabs["token2id"][

self.service_token_names["end_token"]

]

pad_token = self.service_vocabs["token2id"][

self.service_token_names["pad_token"]

]

return list(

it.takewhile(lambda e: e != end_token and e != pad_token, sample[1:])

)

def id2token(self, samples, unframe, lang_key):

if unframe:

samples = list(map(self.unframe, samples))

vocab = self.vocabs[lang_key]["id2token"]

return list(

map(

lambda s: [

vocab[idx]

if idx in vocab.keys()

else self.service_token_names["unk_token"]

for idx in s

],

samples,

)

)

class TranslitData(torch_data.Dataset):

def __init__(self, source_strings, target_strings, text_encoder):

super(TranslitData, self).__init__()

self.source_strings = source_strings

self.text_encoder = text_encoder

if target_strings is not None:

assert len(source_strings) == len(target_strings)

self.target_strings = target_strings

else:

self.target_strings = None

def __len__(self):

return len(self.source_strings)

def __getitem__(self, idx):

src_str = self.source_strings[idx]

encoder_input = self.text_encoder.token2id(

[list(src_str)], frame=True, lang_key="en"

)[0]

if self.target_strings is not None:

tgt_str = self.target_strings[idx]

tmp = self.text_encoder.token2id(

[list(tgt_str)], frame=True, lang_key="ru"

)[0]

decoder_input = tmp[:-1]

decoder_target = tmp[1:]

return (encoder_input, decoder_input, decoder_target)

else:

return (encoder_input,)

class BatchSampler(torch_data.BatchSampler):

def __init__(self, sampler, batch_size, drop_last, shuffle_each_epoch):

super(BatchSampler, self).__init__(sampler, batch_size, drop_last)

self.batches = []

for b in super(BatchSampler, self).__iter__():

self.batches.append(b)

self.shuffle_each_epoch = shuffle_each_epoch

if self.shuffle_each_epoch:

random.shuffle(self.batches)

self.index = 0

# print(f'Batches collected: {len(self.batches)}')

def __iter__(self):

self.index = 0

return self

def __next__(self):

if self.index == len(self.batches):

if self.shuffle_each_epoch:

random.shuffle(self.batches)

raise StopIteration

else:

batch = self.batches[self.index]

self.index += 1

return batch

def collate_fn(batch_list):

"""batch_list can store either 3 components:

encoder_inputs, decoder_inputs, decoder_targets

or single component: encoder_inputs"""

components = list(zip(*batch_list))

batch_tensors = []

for data in components:

max_len = max([len(sample) for sample in data])

# print(f'Maximum length in batch = {max_len}')

sample_tensors = [

torch.tensor(s, requires_grad=False, dtype=torch.int64) for s in data

]

batch_tensors.append(

nn.utils.rnn.pad_sequence(sample_tensors, batch_first=True, padding_value=0)

)

return tuple(batch_tensors)

def create_dataloader(

source_strings, target_strings, text_encoder, batch_size, shuffle_batches_each_epoch

):

"""target_strings parameter can be None"""

dataset = TranslitData(source_strings, target_strings, text_encoder=text_encoder)

seq_sampler = torch_data.SequentialSampler(dataset)

batch_sampler = BatchSampler(

seq_sampler,

batch_size=batch_size,

drop_last=False,

shuffle_each_epoch=shuffle_batches_each_epoch,

)

dataloader = torch_data.DataLoader(

dataset, batch_sampler=batch_sampler, collate_fn=collate_fn

)

return dataloader

# ### Metric function

def compute_metrics(predicted_strings, target_strings, metrics):

metric_values = {}

for m in metrics:

if m == "acc@1":

metric_values[m] = sum(predicted_strings == target_strings) / len(

target_strings

)

elif m == "mean_ld@1":

metric_values[m] = np.mean(

list(

map(

lambda e: le.distance(*e),

zip(predicted_strings, target_strings),

)

)

)

else:

raise ValueError(f"Unknown metric: {m}")

return metric_values

# ### Positional Encoding

# As you remember, Transformer treats an input sequence of elements as a time series. Since the Encoder inside the Transformer simultaneously processes the entire input sequence, the information about the position of the element needs to be encoded inside its embedding, since it is not identified in any other way inside the model. That is why the PositionalEncoding layer is used, which sums embeddings with a vector of the same dimension.

# Let the matrix of these vectors for each position of the time series be denoted as $PE$. Then the elements of the matrix are:

# $$ PE_{(pos,2i)} = \sin{(pos/10000^{2i/d_{model}})}$$

# $$ PE_{(pos,2i+1)} = \cos{(pos/10000^{2i/d_{model}})}$$

# where $pos$ - is the position, $i$ - index of the component of the corresponging vector, $d_{model}$ - dimension of each vector. Thus, even components represent sine values, and odd ones represent cosine values with different arguments.

# In this task you are required to implement these formulas inside the class constructor *PositionalEncoding* in the main file ``translit.py``, which you are to upload. To run the test use the following function:

# `test_positional_encoding()`

# Make sure that there is no any `AssertionError`!

#

import math

class Embedding(nn.Module):

def __init__(self, hidden_size, vocab_size):

super(Embedding, self).__init__()

self.emb_layer = nn.Embedding(vocab_size, hidden_size)

self.hidden_size = hidden_size

def forward(self, x):

return self.emb_layer(x)

class PositionalEncoding(nn.Module):

def __init__(self, hidden_size, max_len=512):

super(PositionalEncoding, self).__init__()

pe = torch.zeros(max_len, hidden_size, requires_grad=False)

position = torch.arange(0, max_len, dtype=torch.float).unsqueeze(1)

div_term = torch.exp(

torch.arange(0, hidden_size, 2).float() * (-math.log(10000.0) / hidden_size)

)

pe[:, 0::2] = torch.sin(position * div_term)

pe[:, 1::2] = torch.cos(position * div_term)

pe = pe.unsqueeze(0)

# pe shape: (1, max_len, hidden_size)

self.register_buffer("pe", pe)

def forward(self, x):

# x: shape (batch size, sequence length, hidden size)

x = x + self.pe[:, : x.size(1)]

return x

def test_positional_encoding():

pe = PositionalEncoding(max_len=3, hidden_size=4)

res_1 = torch.tensor(

[

[

[0.0000, 1.0000, 0.0000, 1.0000],

[0.8415, 0.5403, 0.0100, 0.9999],

[0.9093, -0.4161, 0.0200, 0.9998],

]

]

)

# print(pe.pe - res_1)

assert torch.all(torch.abs(pe.pe - res_1) < 1e-4).item()

print("Test is passed!")

test_positional_encoding()

# ### LayerNorm

class LayerNorm(nn.Module):

"Layer Normalization layer"

def __init__(self, hidden_size, eps=1e-6):

super(LayerNorm, self).__init__()

self.gain = nn.Parameter(torch.ones(hidden_size))

self.bias = nn.Parameter(torch.zeros(hidden_size))

self.eps = eps

def forward(self, x):

mean = x.mean(-1, keepdim=True)

std = x.std(-1, keepdim=True)

return self.gain * (x - mean) / (std + self.eps) + self.bias

# ### SublayerConnection

class SublayerConnection(nn.Module):

"""

A residual connection followed by a layer normalization.

"""

def __init__(self, hidden_size, dropout):

super(SublayerConnection, self).__init__()

self.layer_norm = LayerNorm(hidden_size)

self.dropout = nn.Dropout(dropout)

def forward(self, x, sublayer):

return self.layer_norm(x + self.dropout(sublayer(x)))

def padding_mask(x, pad_idx=0):

assert len(x.size()) >= 2

return (x != pad_idx).unsqueeze(-2)

def look_ahead_mask(size):

"Mask out the right context"

attn_shape = (1, size, size)

look_ahead_mask = np.triu(np.ones(attn_shape), k=1).astype("uint8")

return torch.from_numpy(look_ahead_mask) == 0

def compositional_mask(x, pad_idx=0):

pm = padding_mask(x, pad_idx=pad_idx)

seq_length = x.size(-1)

result_mask = pm & look_ahead_mask(seq_length).type_as(pm.data)

return result_mask

# ### FeedForward

class FeedForward(nn.Module):

def __init__(self, hidden_size, ff_hidden_size, dropout=0.1):

super(FeedForward, self).__init__()

self.pre_linear = nn.Linear(hidden_size, ff_hidden_size)

self.post_linear = nn.Linear(ff_hidden_size, hidden_size)

self.dropout = nn.Dropout(dropout)

def forward(self, x):

return self.post_linear(self.dropout(F.relu(self.pre_linear(x))))

def clone_layer(module, N):

"Produce N identical layers."

return nn.ModuleList([copy.deepcopy(module) for _ in range(N)])

# ### MultiHeadAttention

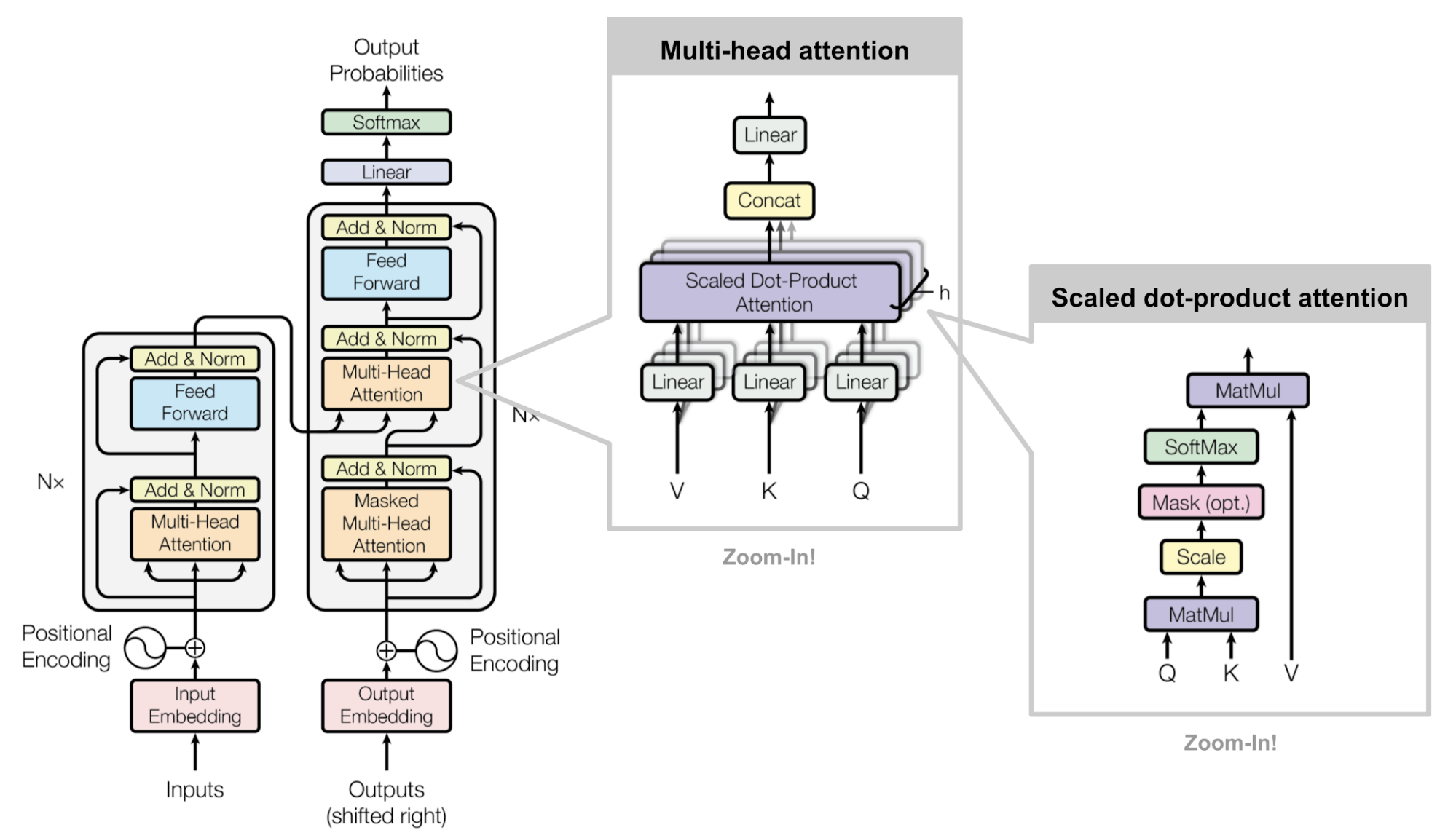

# Then you are required to implement `attention` method in the class `MultiHeadAttention`. The MultiHeadAttention layer takes as input query vectors, key and value vectors for each step of the sequence of matrices Q,K,V correspondingly. Each key vector, value vector, and query vector is obtained as a result of linear projection using one of three trained vector parameter matrices from the previous layer. This semantics can be represented in the form of formulas:

# $$

# Attention(Q, K, V)=softmax\left(\frac{Q K^{T}}{\sqrt{d_{k}}}\right) V\\

# $$

# $$

# MultiHead(Q, K, V) = Concat\left(head_1, ... , head_h\right) W^O\\

# $$

# $$

# head_i=Attention\left(Q W_i^Q, K W_i^K, V W_i^V\right)\\

# $$

# $h$ - the number of attention heads - parallel sub-layers for Scaled Dot-Product Attention on a vector of smaller dimension ($d_{k} = d_{q} = d_{v} = d_{model} / h$).

# The logic of $\texttt{MultiHeadAttention}$ is presented in the picture (from original [paper](https://arxiv.org/abs/1706.03762)):

#

# Inside a method `attention` you are required to create a dropout layer from MultiHeadAttention class constructor. Dropout layer is to be applied directly on the attention weights - the result of softmax operation. Value of drop probability can be regulated in the train in the `model_config['dropout']['attention']`.

# The correctness of implementation can be checked with

# `test_multi_head_attention()`

#

class MultiHeadAttention(nn.Module):

def __init__(self, n_heads, hidden_size, dropout=None):

super(MultiHeadAttention, self).__init__()

assert hidden_size % n_heads == 0

self.head_hidden_size = hidden_size // n_heads

self.n_heads = n_heads

self.linears = clone_layer(nn.Linear(hidden_size, hidden_size), 4)

self.attn_weights = None

self.dropout = dropout

if self.dropout is not None:

self.dropout_layer = nn.Dropout(p=self.dropout)

def attention(self, query, key, value, mask=None):

d_k = query.size(-1)

scores = torch.matmul(query, key.transpose(-2, -1)) / math.sqrt(d_k)

if mask is not None:

scores = scores.masked_fill(mask == 0, -1e9)

attn_weights = F.softmax(scores, dim=-1)

# if self.dropout is not None:

# attn_weights = self.dropout(attn_weights)

result = torch.matmul(attn_weights, value)

return result, attn_weights

def forward(self, query, key, value, mask=None):

if mask is not None:

# Same mask applied to all h heads.

mask = mask.unsqueeze(1)

batch_size = query.size(0)

# Split vectors for different attention heads (from hidden_size => n_heads x head_hidden_size)

# and do separate linear projection, for separate trainable weights

query, key, value = [

l(x)

.view(batch_size, -1, self.n_heads, self.head_hidden_size)

.transpose(1, 2)

for l, x in zip(self.linears, (query, key, value))

]

x, self.attn_weights = self.attention(query, key, value, mask=mask)

# x shape: (batch size, number of heads, sequence length, head hidden size)

# self.attn_weights shape: (batch size, number of heads, sequence length, sequence length)

# Concatenate the output of each head

x = (

x.transpose(1, 2)

.contiguous()

.view(batch_size, -1, self.n_heads * self.head_hidden_size)

)

return self.linears[-1](x)

def test_multi_head_attention():

mha = MultiHeadAttention(n_heads=1, hidden_size=5, dropout=None)

# batch_size == 2, sequence length == 3, hidden_size == 5

# query = torch.arange(150).reshape(2, 3, 5)

query = torch.tensor(

[

[

[[0.64144618, -0.95817388, 0.37432297, 0.58427106, -0.94668716]],

[[-0.23199289, 0.66329209, -0.46507035, -0.54272512, -0.98640698]],

[[0.07546638, -0.09277002, 0.20107185, -0.97407381, -0.27713414]],

],

[

[[0.14727783, 0.4747886, 0.44992016, -0.2841419, -0.81820319]],

[[-0.72324994, 0.80643179, -0.47655449, 0.45627872, 0.60942404]],

[[0.61712569, -0.62947282, -0.95215713, -0.38721959, -0.73289725]],

],

]

)

key = torch.tensor(

[

[

[[-0.81759856, -0.60049991, -0.05923424, 0.51898901, -0.3366209]],

[[0.83957818, -0.96361722, 0.62285191, 0.93452467, 0.51219613]],

[[-0.72758847, 0.41256154, 0.00490795, 0.59892503, -0.07202049]],

],

[

[[0.72315339, -0.49896314, 0.94254637, -0.54356006, -0.04837949]],

[[0.51759322, -0.43927061, -0.59924184, 0.92241702, -0.86811696]],

[[-0.54322046, -0.92323003, -0.827746, 0.90842783, 0.88428119]],

],

]

)

value = torch.tensor(

[

[

[[-0.83895431, 0.805027, 0.22298283, -0.84849915, -0.34906026]],

[[-0.02899652, -0.17456128, -0.17535998, -0.73160314, -0.13468061]],

[[0.75234265, 0.02675947, 0.84766286, -0.5475651, -0.83319316]],

],

[

[[-0.47834413, 0.34464645, -0.41921457, 0.33867964, 0.43470836]],

[[-0.99000979, 0.10220893, -0.4932273, 0.95938905, 0.01927012]],

[[0.91607137, 0.57395644, -0.90914179, 0.97212912, 0.33078759]],

],

]

)

query = query.float().transpose(1, 2)

key = key.float().transpose(1, 2)

value = value.float().transpose(1, 2)

x, _ = torch.max(query[:, 0, :, :], axis=-1)

mask = compositional_mask(x)

mask.unsqueeze_(1)

for n, t in [("query", query), ("key", key), ("value", value), ("mask", mask)]:

print(f"Name: {n}, shape: {t.size()}")

with torch.no_grad():

output, attn_weights = mha.attention(query, key, value, mask=mask)

assert output.size() == torch.Size([2, 1, 3, 5])

assert attn_weights.size() == torch.Size([2, 1, 3, 3])

truth_output = torch.tensor(

[

[

[

[-0.8390, 0.8050, 0.2230, -0.8485, -0.3491],

[-0.6043, 0.5212, 0.1076, -0.8146, -0.2870],

[-0.0665, 0.2461, 0.3038, -0.7137, -0.4410],

]

],

[

[

[-0.4783, 0.3446, -0.4192, 0.3387, 0.4347],

[-0.7959, 0.1942, -0.4652, 0.7239, 0.1769],

[-0.3678, 0.2868, -0.5799, 0.7987, 0.2086],

]

],

]

)

truth_attn_weights = torch.tensor(

[

[

[

[1.0000, 0.0000, 0.0000],

[0.7103, 0.2897, 0.0000],

[0.3621, 0.3105, 0.3274],

]

],

[

[

[1.0000, 0.0000, 0.0000],

[0.3793, 0.6207, 0.0000],

[0.2642, 0.4803, 0.2555],

]

],

]

)

# print(torch.abs(output - truth_output))

# print(torch.abs(attn_weights - truth_attn_weights))

assert torch.all(torch.abs(output - truth_output) < 1e-4).item()

assert torch.all(torch.abs(attn_weights - truth_attn_weights) < 1e-4).item()

print("Test is passed!")

test_multi_head_attention()

# ### Encoder

class EncoderLayer(nn.Module):

"Encoder is made up of self-attn and feed forward (defined below)"

def __init__(self, hidden_size, ff_hidden_size, n_heads, dropout):

super(EncoderLayer, self).__init__()

self.self_attn = MultiHeadAttention(

n_heads, hidden_size, dropout=dropout["attention"]

)

self.feed_forward = FeedForward(

hidden_size, ff_hidden_size, dropout=dropout["relu"]

)

self.sublayers = clone_layer(

SublayerConnection(hidden_size, dropout["residual"]), 2

)

def forward(self, x, mask):

x = self.sublayers[0](x, lambda x: self.self_attn(x, x, x, mask))

return self.sublayers[1](x, self.feed_forward)

class Encoder(nn.Module):

def __init__(self, config):

super(Encoder, self).__init__()

self.embedder = Embedding(config["hidden_size"], config["src_vocab_size"])

self.positional_encoder = PositionalEncoding(

config["hidden_size"], max_len=config["max_src_seq_length"]

)

self.embedding_dropout = nn.Dropout(p=config["dropout"]["embedding"])

self.encoder_layer = EncoderLayer(

config["hidden_size"],

config["ff_hidden_size"],

config["n_heads"],

config["dropout"],

)

self.layers = clone_layer(self.encoder_layer, config["n_layers"])

self.layer_norm = LayerNorm(config["hidden_size"])

def forward(self, x, mask):

"Pass the input (and mask) through each layer in turn."

x = self.embedding_dropout(self.positional_encoder(self.embedder(x)))

for layer in self.layers:

x = layer(x, mask)

return self.layer_norm(x)

# ### Decoder

class DecoderLayer(nn.Module):

"""

Decoder is made of 3 sublayers: self attention, encoder-decoder attention

and feed forward"

"""

def __init__(self, hidden_size, ff_hidden_size, n_heads, dropout):

super(DecoderLayer, self).__init__()

self.self_attn = MultiHeadAttention(

n_heads, hidden_size, dropout=dropout["attention"]

)

self.encdec_attn = MultiHeadAttention(

n_heads, hidden_size, dropout=dropout["attention"]

)

self.feed_forward = FeedForward(

hidden_size, ff_hidden_size, dropout=dropout["relu"]

)

self.sublayers = clone_layer(

SublayerConnection(hidden_size, dropout["residual"]), 3

)

def forward(self, x, encoder_output, encoder_mask, decoder_mask):

x = self.sublayers[0](x, lambda x: self.self_attn(x, x, x, decoder_mask))

x = self.sublayers[1](

x,

lambda x: self.encdec_attn(x, encoder_output, encoder_output, encoder_mask),

)

return self.sublayers[2](x, self.feed_forward)

class Decoder(nn.Module):

def __init__(self, config):

super(Decoder, self).__init__()

self.embedder = Embedding(config["hidden_size"], config["tgt_vocab_size"])

self.positional_encoder = PositionalEncoding(

config["hidden_size"], max_len=config["max_tgt_seq_length"]

)

self.embedding_dropout = nn.Dropout(p=config["dropout"]["embedding"])

self.decoder_layer = DecoderLayer(

config["hidden_size"],

config["ff_hidden_size"],

config["n_heads"],

config["dropout"],

)

self.layers = clone_layer(self.decoder_layer, config["n_layers"])

self.layer_norm = LayerNorm(config["hidden_size"])

def forward(self, x, encoder_output, encoder_mask, decoder_mask):

x = self.embedding_dropout(self.positional_encoder(self.embedder(x)))

for layer in self.layers:

x = layer(x, encoder_output, encoder_mask, decoder_mask)

return self.layer_norm(x)

# ### Transformer

class Transformer(nn.Module):

def __init__(self, config):

super(Transformer, self).__init__()

self.config = config

self.encoder = Encoder(config)

self.decoder = Decoder(config)

self.proj = nn.Linear(config["hidden_size"], config["tgt_vocab_size"])

self.pad_idx = config["pad_idx"]

self.tgt_vocab_size = config["tgt_vocab_size"]

def encode(self, encoder_input, encoder_input_mask):

return self.encoder(encoder_input, encoder_input_mask)

def decode(

self, encoder_output, encoder_input_mask, decoder_input, decoder_input_mask

):

return self.decoder(

decoder_input, encoder_output, encoder_input_mask, decoder_input_mask

)

def linear_project(self, x):

return self.proj(x)

def forward(self, encoder_input, decoder_input):

encoder_input_mask = padding_mask(encoder_input, pad_idx=self.config["pad_idx"])

decoder_input_mask = compositional_mask(

decoder_input, pad_idx=self.config["pad_idx"]

)

encoder_output = self.encode(encoder_input, encoder_input_mask)

decoder_output = self.decode(

encoder_output, encoder_input_mask, decoder_input, decoder_input_mask

)

output_logits = self.linear_project(decoder_output)

return output_logits

def prepare_model(config):

model = Transformer(config)

for p in model.parameters():

if p.dim() > 1:

nn.init.xavier_uniform_(p)

return model

# #### LrScheduler

# The last thing you have to prepare is the class `LrScheduler`, which is in charge of learning rate updating after every step of the optimizer. You are required to fill the class constructor and the method `learning_rate`. The preferable stratagy of updating the learning rate (lr), is the following two stages:

# * "warmup" stage - lr linearly increases until the defined value during the fixed number of steps (the proportion of all training steps - the parameter `train_config['warmup\_steps\_part']` in the train function).

# * "decrease" stage - lr linearly decreases until 0 during the left training steps.

# `learning_rate()` call should return the value of lr at this step, which number is stored at self.step. The class constructor takes not only `warmup_steps_part` but the peak learning rate value `lr_peak` at the end of "warmup" stage and a string name of the strategy of learning rate scheduling. You can test other strategies if you want to with `self.type attribute`.

# Correctness check: `test_lr_scheduler()`

#

class LrScheduler:

def __init__(self, n_steps, **kwargs):

self.type = kwargs["type"]

if self.type == "warmup,decay_linear":

self.warmup_steps_part = kwargs["warmup_steps_part"]

self.lr_peak = kwargs["lr_peak"]

self.n_steps = n_steps # total number of training steps

self.warmup_steps = int(self.warmup_steps_part * n_steps)

self._lr = 0 # initialize the _lr attribute to zero

# raise NotImplementedError

else:

raise ValueError(f"Unknown type argument: {self.type}")

self._step = 0

self._lr = 0

def step(self, optimizer):

self._step += 1

lr = self.learning_rate()

for p in optimizer.param_groups:

p["lr"] = lr

def learning_rate(self, step=None):

if step is None:

step = self._step

if self.type == "warmup,decay_linear":

if step < self.warmup_steps:

self._lr = self.lr_peak * (step / self.warmup_steps)

else:

self._lr = self.lr_peak * (

(self.n_steps - step) / (self.n_steps - self.warmup_steps)

)

return self._lr

raise NotImplementedError("Unknown type of learning rate scheduling.")

def state_dict(self):

sd = copy.deepcopy(self.__dict__)

return sd

def load_state_dict(self, sd):

for k in sd.keys():

self.__setattr__(k, sd[k])

def test_lr_scheduler():

lrs_type = "warmup,decay_linear"

warmup_steps_part = 0.1

lr_peak = 3e-4

sch = LrScheduler(

100, type=lrs_type, warmup_steps_part=warmup_steps_part, lr_peak=lr_peak

)

assert sch.learning_rate(step=5) - 15e-5 < 1e-6

assert sch.learning_rate(step=10) - 3e-4 < 1e-6

assert sch.learning_rate(step=50) - 166e-6 < 1e-6

assert sch.learning_rate(step=100) - 0.0 < 1e-6

print("Test is passed!")

test_lr_scheduler()

# ### Run and translate

def format_time(elapsed):

"""

Takes a time in seconds and returns a string hh:mm:ss

"""

elapsed_rounded = int(round((elapsed)))

return str(datetime.timedelta(seconds=elapsed_rounded))

def run_epoch(data_iter, model, lr_scheduler, optimizer, device, verbose=False):

start = time.time()

local_start = start

total_tokens = 0

total_loss = 0

tokens = 0

loss_fn = nn.CrossEntropyLoss(reduction="sum", label_smoothing=0.1)

for i, batch in tqdm(enumerate(data_iter)):

encoder_input = batch[0].to(device)

decoder_input = batch[1].to(device)

decoder_target = batch[2].to(device)

logits = model(encoder_input, decoder_input)

loss = loss_fn(logits.view(-1, model.tgt_vocab_size), decoder_target.view(-1))

total_loss += loss.item()

batch_n_tokens = (decoder_target != model.pad_idx).sum().item()

total_tokens += batch_n_tokens

if optimizer is not None:

optimizer.zero_grad()

lr_scheduler.step(optimizer)

loss.backward()

optimizer.step()

tokens += batch_n_tokens

if verbose and i % 1000 == 1:

elapsed = time.time() - local_start

print(

"batch number: %d, accumulated average loss: %f, tokens per second: %f"

% (i, total_loss / total_tokens, tokens / elapsed)

)

local_start = time.time()

tokens = 0

average_loss = total_loss / total_tokens

print("** End of epoch, accumulated average loss = %f **" % average_loss)

epoch_elapsed_time = format_time(time.time() - start)

print(f"** Elapsed time: {epoch_elapsed_time}**")

return average_loss

def save_checkpoint(epoch, model, lr_scheduler, optimizer, model_dir_path):

save_path = os.path.join(model_dir_path, f"cpkt_{epoch}_epoch")

torch.save(

{

"epoch": epoch,

"model_state_dict": model.state_dict(),

"optimizer_state_dict": optimizer.state_dict(),

"lr_scheduler_state_dict": lr_scheduler.state_dict(),

},

save_path,

)

print(f"Saved checkpoint to {save_path}")

def load_model(epoch, model_dir_path):

save_path = os.path.join(model_dir_path, f"cpkt_{epoch}_epoch")

checkpoint = torch.load(save_path)

with open(

os.path.join(model_dir_path, "model_config.json"), "r", encoding="utf-8"

) as rf:

model_config = json.load(rf)

model = prepare_model(model_config)

model.load_state_dict(checkpoint["model_state_dict"])

return model

def greedy_decode(model, device, encoder_input, max_len, start_symbol):

batch_size = encoder_input.size()[0]

decoder_input = (

torch.ones(batch_size, 1)

.fill_(start_symbol)

.type_as(encoder_input.data)

.to(device)

)

for i in range(max_len):

logits = model(encoder_input, decoder_input)

_, predicted_ids = torch.max(logits, dim=-1)

next_word = predicted_ids[:, i]

# print(next_word)

rest = torch.ones(batch_size, 1).type_as(decoder_input.data)

# print(rest[:,0].size(), next_word.size())

rest[:, 0] = next_word

decoder_input = torch.cat([decoder_input, rest], dim=1).to(device)

# print(decoder_input)

return decoder_input

def generate_predictions(dataloader, max_decoding_len, text_encoder, model, device):

# print(f'Max decoding length = {max_decoding_len}')

model.eval()

predictions = []

start_token_id = text_encoder.service_vocabs["token2id"][

text_encoder.service_token_names["start_token"]

]

with torch.no_grad():

for batch in tqdm(dataloader):

encoder_input = batch[0].to(device)

prediction_tensor = greedy_decode(

model, device, encoder_input, max_decoding_len, start_token_id

)

predictions.extend(

[

"".join(e)

for e in text_encoder.id2token(

prediction_tensor.cpu().numpy(), unframe=True, lang_key="ru"

)

]

)

return np.array(predictions)

def train(source_strings, target_strings):

"""Common training cycle for final run (fixed hyperparameters,

no evaluation during training)"""

if torch.cuda.is_available():

device = torch.device("cuda")

print(f"Using GPU device: {device}")

else:

device = torch.device("cpu")

print(f"GPU is not available, using CPU device {device}")

train_df = pd.DataFrame({"en": source_strings, "ru": target_strings})

text_encoder = TextEncoder()

text_encoder.make_vocabs(train_df)

model_config = {

"src_vocab_size": text_encoder.src_vocab_size,

"tgt_vocab_size": text_encoder.tgt_vocab_size,

"max_src_seq_length": max(train_df["en"].aggregate(len))

+ 2, # including start_token and end_token

"max_tgt_seq_length": max(train_df["ru"].aggregate(len)) + 2,

"n_layers": 2,

"n_heads": 2,

"hidden_size": 128,

"ff_hidden_size": 256,

"dropout": {

"embedding": 0.15,

"attention": 0.15,

"residual": 0.15,

"relu": 0.15,

},

"pad_idx": 0,

}

model = prepare_model(model_config)

model.to(device)

train_config = {

"batch_size": 200,

"n_epochs": 200,

"lr_scheduler": {

"type": "warmup,decay_linear",

"warmup_steps_part": 0.1,

"lr_peak": 1e-3,

},

}

# Model training procedure

optimizer = torch.optim.Adam(model.parameters(), lr=0.0)

n_steps = (len(train_df) // train_config["batch_size"] + 1) * train_config[

"n_epochs"

]

lr_scheduler = LrScheduler(n_steps, **train_config["lr_scheduler"])

# prepare train data

source_strings, target_strings = zip(

*sorted(zip(source_strings, target_strings), key=lambda e: len(e[0]))

)

train_dataloader = create_dataloader(

source_strings,

target_strings,

text_encoder,

train_config["batch_size"],

shuffle_batches_each_epoch=True,

)

# training cycle

for epoch in range(1, train_config["n_epochs"] + 1):

print("\n" + "-" * 40)

print(f"Epoch: {epoch}")

print(f"Run training...")

model.train()

run_epoch(

train_dataloader,

model,

lr_scheduler,

optimizer,

device=device,

verbose=False,

)

learnable_params = {

"model": model,

"text_encoder": text_encoder,

}

return learnable_params

def classify(source_strings, learnable_params):

if torch.cuda.is_available():

device = torch.device("cuda")

print(f"Using GPU device: {device}")

else:

device = torch.device("cpu")

print(f"GPU is not available, using CPU device {device}")

model = learnable_params["model"]

text_encoder = learnable_params["text_encoder"]

batch_size = 200

dataloader = create_dataloader(

source_strings, None, text_encoder, batch_size, shuffle_batches_each_epoch=False

)

max_decoding_len = model.config["max_tgt_seq_length"]

predictions = generate_predictions(

dataloader, max_decoding_len, text_encoder, model, device

)

# return single top1 prediction for each sample

return np.expand_dims(predictions, 1)

# ### Training

PREDS_FNAME = "preds_translit.tsv"

SCORED_PARTS = ("train", "dev", "train_small", "dev_small", "test")

TRANSLIT_PATH = "TRANSLIT"

top_k = 1

part2ixy = load_dataset(TRANSLIT_PATH, parts=SCORED_PARTS)

train_ids, train_strings, train_transliterations = part2ixy["train"]

print("\nTraining classifier on %d examples from train set ..." % len(train_strings))

st = time.time()

params = train(train_strings, train_transliterations)

print("Classifier trained in %.2fs" % (time.time() - st))

allpreds = []

for part, (ids, x, y) in part2ixy.items():

print("\nClassifying %s set with %d examples ..." % (part, len(x)))

st = time.time()

preds = classify(x, params)

print("%s set classified in %.2fs" % (part, time.time() - st))

count_of_values = list(map(len, preds))

assert np.all(np.array(count_of_values) == top_k)

# score(preds, y)

allpreds.extend(zip(ids, preds))

save_preds(allpreds, preds_fname=PREDS_FNAME)

print("\nChecking saved predictions ...")

score_preds(preds_path=PREDS_FNAME, data_dir=TRANSLIT_PATH, parts=SCORED_PARTS)

# ### Hyper-parameters choice

# The model is ready. Now we need to find the optimal hyper-parameters.

# The quality of models with different hyperparameters should be monitored on dev or on dev_small samples (in order to save time, since generating transliterations is a rather time-consuming process, comparable to one training epoch).

# To generate predictions, you can use the `generate_predictions` function, to calculate the accuracy@1 metric, and then you can use the `compute_metrics` function.

# Hyper-parameters are stored in the dictionary `model_config` and `train_config` in train function. The following hyperparameters in `model_config` and `train_config` are suggested to leave unmodified:

# * n_layers $=$ 2

# * n_heads $=$ 2

# * hidden_size $=$ 128

# * fc_hidden_size $=$ 256

# * warmup_steps_part $=$ 0.1

# * batch_size $=$ 200

# You can vary the dropout value. The model has 4 types of : ***embedding dropout*** applied on embdeddings before sending to the first layer of Encoder or Decoder, ***attention*** dropout applied on the attention weights in the MultiHeadAttention layer, ***residual dropout*** applied on the output of each sublayer (MultiHeadAttention or FeedForward) in layers Encoder and Decoder and, finaly, ***relu dropout*** in used in FeedForward layer. For all 4 types it is suggested to test the same value of dropout from the list: 0.1, 0.15, 0.2.

# Also it is suggested to test several peak levels of learning rate - **lr_peak** : 5e-4, 1e-3, 2e-3.

# Note that if you are using a GPU, then training one epoch takes about 1 minute, and up to 1 GB of video memory is required. When using the CPU, the learning speed slows down by about 2 times. If there are problems with insufficient RAM / video memory, reduce the batch size, but in this case the optimal range of learning rate values will change, and it must be determined again. To train a model with batch_size $=$ 200 , it will take at least 300 epochs to achieve accuracy 0.66 on dev_small dataset.

# *Question: What are the optimal hyperpameters according to your experiments? Add plots or other descriptions here.*

# ```

# ENTER HERE YOUR ANSWER

# ```

#

import optuna

import datetime

import torch

import numpy as np

study = optuna.create_study(direction="maximize", sampler=optuna.samplers.TPESampler())

def objective(trial):

params = {

"learning_rate": trial.suggest_loguniform("learning_rate", 1e-5, 1e-1),

"optimizer": trial.suggest_categorical("optimizer", ["Adam", "RMSprop", "SGD"]),

"n_unit": trial.suggest_int("n_unit", 4, 18),

}

model = build_model(params)

accuracy = train_and_evaluate(params, model)

return accuracy

def objective(trial):

dropout_embeddings = trial.suggest_categorical(

"dropout_embeddings", [0.1, 0.15, 0.2]

)

dropout_attention = trial.suggest_categorical("dropout_attention", [0.1, 0.15, 0.2])

dropout_residual = trial.suggest_categorical("dropout_residual", [0.1, 0.15, 0.2])

dropout_relu = trial.suggest_categorical("dropout_relu", [0.1, 0.15, 0.2])

lr_peak = trial.suggest_categorical("lr_peak", [5e-4, 1e-3, 2e-3])

# train the model and return the evaluation metric for tuning

model = Model(

dropout_embeddings, dropout_attention, dropout_residual, dropout_relu, lr_peak

)

train(model)

metric = evaluate(model)

return metric

# Initialize an Optuna study

study = optuna.create_study(

direction="maximize", sampler=optuna.samplers.RandomSampler(seed=123)

)

study.optimize(objective, n_trials=100)

# Get the best hyperparameters and the best evaluation metric

best_params = study.best_params

best_metric = study.best_value

# Print the best hyperparameters and the best evaluation metric

print(f"Best hyperparameters: {best_params}")

print(f"Best evaluation metric: {best_metric}")

# ## Label smoothing

# We suggest to implement an additional regularization method - **label smoothing**. Now imagine that we have a prediction vector from probabilities at position t in the sequence of tokens for each token id from the vocabulary. CrossEntropy compares it with ground truth one-hot representation

# $$[0, ... 0, 1, 0, ..., 0].$$

# And now imagine that we are slightly "smoothed" the values in the ground truth vector and obtained

# $$[\frac{\alpha}{|V|}, ..., \frac{\alpha}{|V|}, 1(1-\alpha)+\frac{\alpha}{|V|}, \frac{\alpha}{|V|}, ... \frac{\alpha}{|V|}],$$

# where $\alpha$ - parameter from 0 to 1, $|V|$ - vocabulary size - number of components in the ground truth vector. The values of this new vector are still summed to 1. Calculate the cross-entropy of our prediction vector and the new ground truth. Now, firstly, cross-entropy will never reach 0, and secondly, the result of the error function will require the model, as usual, to return the highest probability vector compared to other components of the probability vector for the correct token in the dictionary, but at the same time not too large, because as the value of this probability approaches 1, the value of the error function increases. For research on the use of label smoothing, see the [paper](https://arxiv.org/abs/1906.02629).

#

# Accordingly, in order to embed label smoothing into the model, it is necessary to carry out the transformation described above on the ground truth vectors, as well as to implement the cross-entropy calculation, since the used `torch.nn.CrossEntropy` class is not quite suitable, since for the ground truth representation of `__call__` method takes the id of the correct token and builds a one-hot vector already inside. However, it is possible to implement what is required based on the internal implementation of this class [CrossEntropyLoss](https://pytorch.org/docs/stable/_modules/torch/nn/modules/loss.html#CrossEntropyLoss).

#

# Test different values of $\alpha$ (e.x, 0.05, 0.1, 0.2). Describe your experiments and results.

# ```

# ENTER HERE YOUR ANSWER

# ```

# ENTER HERE YOUR CODE

|

import numpy as np

import pandas as pd

import os

import cv2

labels = os.listdir("/kaggle/input/drowsiness-dataset/train")

labels

import matplotlib.pyplot as plt

plt.imshow(plt.imread("/kaggle/input/drowsiness-dataset/train/Closed/_107.jpg"))

plt.imshow(plt.imread("/kaggle/input/drowsiness-dataset/train/yawn/10.jpg"))

# # for yawn

def face_for_yawn(

direc="/kaggle/input/drowsiness-dataset/train",

face_cas_path="/kaggle/input/haarcasacades/haarcascade_frontalface_alt.xml",

):

yaw_no = []

IMG_SIZE = 224

categories = ["yawn", "no_yawn"]

for category in categories:

path_link = os.path.join(direc, category)

class_num1 = categories.index(category)

print(class_num1)

for image in os.listdir(path_link):

image_array = cv2.imread(os.path.join(path_link, image), cv2.IMREAD_COLOR)

face_cascade = cv2.CascadeClassifier(face_cas_path)

faces = face_cascade.detectMultiScale(image_array, 1.3, 5)

for x, y, w, h in faces:

img = cv2.rectangle(image_array, (x, y), (x + w, y + h), (0, 255, 0), 2)

roi_color = img[y : y + h, x : x + w]

resized_array = cv2.resize(roi_color, (IMG_SIZE, IMG_SIZE))

yaw_no.append([resized_array, class_num1])

return yaw_no

yawn_no_yawn = face_for_yawn()

# # for eyes

def get_data(dir_path="/kaggle/input/drowsiness-dataset/train"):

labels = ["Closed", "Open"]

IMG_SIZE = 224

data = []

for label in labels:

path = os.path.join(dir_path, label)

class_num = labels.index(label)

class_num += 2

print(class_num)

for img in os.listdir(path):

try:

img_array = cv2.imread(os.path.join(path, img), cv2.IMREAD_COLOR)

resized_array = cv2.resize(img_array, (IMG_SIZE, IMG_SIZE))

data.append([resized_array, class_num])

except Exception as e:

print(e)

return data

data_train = get_data()

def append_data():

yaw_no = face_for_yawn()

data = get_data()

yaw_no.extend(data)

return np.array(yaw_no)

all_data = append_data()

# # separate label and features

X = []

y = []

for feature, labelss in all_data:

X.append(feature)

y.append(labelss)

X = np.array(X)

X = X.reshape(-1, 224, 224, 3)

from sklearn.preprocessing import LabelBinarizer

label_bin = LabelBinarizer()

y = label_bin.fit_transform(y)

y = np.array(y)

from sklearn.model_selection import train_test_split

seed = 42

test_size = 0.30

X_train, X_test, y_train, y_test = train_test_split(

X, y, random_state=seed, test_size=test_size

)

# # data augmentation and model

from keras.layers import Input, Lambda, Dense, Flatten, Conv2D, MaxPooling2D, Dropout

from keras.models import Model

from keras.models import Sequential

from keras.preprocessing.image import ImageDataGenerator

import tensorflow as tf

train_generator = ImageDataGenerator(

rescale=1 / 255, zoom_range=0.2, horizontal_flip=True, rotation_range=30

)

test_generator = ImageDataGenerator(rescale=1 / 255)

train_generator = train_generator.flow(np.array(X_train), y_train, shuffle=False)

test_generator = test_generator.flow(np.array(X_test), y_test, shuffle=False)

model = Sequential()

model.add(Conv2D(256, (3, 3), activation="relu", input_shape=X_train.shape[1:]))

model.add(MaxPooling2D(2, 2))

model.add(Conv2D(128, (3, 3), activation="relu"))

model.add(MaxPooling2D(2, 2))

model.add(Conv2D(64, (3, 3), activation="relu"))

model.add(MaxPooling2D(2, 2))

model.add(Conv2D(32, (3, 3), activation="relu"))

model.add(MaxPooling2D(2, 2))

model.add(Flatten())

model.add(Dropout(0.5))

model.add(Dense(64, activation="relu"))

model.add(Dense(4, activation="softmax"))

model.compile(loss="categorical_crossentropy", metrics=["accuracy"], optimizer="adam")

model.summary()

r = model.fit(

train_generator,

epochs=50,

validation_data=test_generator,

shuffle=True,

validation_steps=len(test_generator),

)

# loss

plt.figure(figsize=(4, 2))

plt.plot(r.history["loss"], label="train loss")

plt.plot(r.history["val_loss"], label="val loss")

plt.legend()

plt.show()

# accuracies

plt.figure(figsize=(4, 2))

plt.plot(r.history["accuracy"], label="train acc")

plt.plot(r.history["val_accuracy"], label="val acc")

plt.legend()

plt.show()

model.save("drowiness_new6.h5")

# # test data

prediction = model.predict(X_test)

classes_predicted = np.argmax(prediction, axis=1)

classes_predicted

# # evaluation metrics

labels_new = ["yawn", "no_yawn", "Closed", "Open"]

from sklearn.metrics import classification_report

print(

classification_report(

np.argmax(y_test, axis=1), classes_predicted, target_names=labels_new

)

)

# # predictions

# 0-yawn, 1-no_yawn, 2-Closed, 3-Open

model = tf.keras.models.load_model("./drowiness_new6.h5")

IMG_SIZE = 224

def prepare_data(filepath, face_cas="haarcascade_frontalface_default.xml"):

image_array = cv2.imread(filepath)

face_cascade = cv2.CascadeClassifier(cv2.data.haarcascades + face_cas)

faces = face_cascade.detectMultiScale(image_array, 1.3, 5)

for x, y, w, h in faces:

img = cv2.rectangle(image_array, (x, y), (x + w, y + h), (0, 255, 0), 2)

roi_color = img[y : y + h, x : x + w]

roi_color = roi_color / 255

resized_array = cv2.resize(roi_color, (IMG_SIZE, IMG_SIZE))

return resized_array.reshape(-1, IMG_SIZE, IMG_SIZE, 3)

def prediction_function(img_path):

ready_data = prepare_data(img_path)

prediction = model.predict(ready_data)

plt.imshow(plt.imread(img_path))

if np.argmax(prediction) == 2 or np.argmax(prediction) == 0:

print("DROWSY ALERT!!!!")

else:

print("DRIVER IS ACTIVE")

prediction_function("/kaggle/input/predict-img/predict_1.jpg")

|

import numpy as np # linear algebra

import pandas as pd # data processing, CSV file I/O (e.g. pd.read_csv)

# Input data files are available in the read-only "../input/" directory

# For example, running this (by clicking run or pressing Shift+Enter) will list all files under the input directory

import os

for dirname, _, filenames in os.walk("/kaggle/input"):

for filename in filenames:

print(os.path.join(dirname, filename))

# You can write up to 5GB to the current directory (/kaggle/working/) that gets preserved as output when you create a version using "Save & Run All"

# You can also write temporary files to /kaggle/temp/, but they won't be saved outside of the current session

import seaborn as sns

import matplotlib.pyplot as matplot

from matplotlib import pyplot as plt

from matplotlib.pyplot import show

from sklearn import metrics

from sklearn.model_selection import train_test_split

from scipy.stats import zscore

from sklearn.neighbors import KNeighborsClassifier

from sklearn.linear_model import LogisticRegression

from sklearn.model_selection import GridSearchCV

from mlxtend.feature_selection import SequentialFeatureSelector as sfs

pd.set_option("display.max_rows", None)

pd.set_option("display.max_columns", None)

train_df = pd.read_csv("/kaggle/input/titanic/train.csv")

sub_df = pd.read_csv("/kaggle/input/titanic/gender_submission.csv")

test_df = pd.read_csv("/kaggle/input/titanic/test.csv")

train_df.head()

train_df.dtypes

test_df.head()

print(train_df.shape)

print(test_df.shape)

# The training dataset has 891 records and test_df has 418 records. The test dataset has 1 column in short since the target column is excluded

# Checking for null values

train_df.isnull().sum()

test_df.isnull().sum()

# The column Cabin has more than 70% of the records with null values in both train and test dataset

# Imputing this column will not be helpful, so deleting this column

train_df.drop("Cabin", axis=1, inplace=True)

test_df.drop("Cabin", axis=1, inplace=True)

# The age and Embarked columns has null values in training dataset which needs to be imputed.

# Similarly Age and Fare dataset has null values.

train_df[train_df["Embarked"].isnull()]

# Both the records belong to same ticket, which means they are travelling together and we can see they were in class 1.

# imputing the column with most repeated value using mode.

train_df["Embarked"].fillna(

train_df[train_df["Pclass"] == 1]["Embarked"].mode()[0], inplace=True

)

train_df.sort_values(by="Ticket")

train_df[train_df["Ticket"] == "CA. 2343"]

train_df.sort_values(by="Age")

train_df[train_df["Age"].isnull()].sort_values(by="Ticket")

train_df[(train_df["Age"].isnull()) & (train_df["Parch"] != 0)]

train_df["Age"].fillna(

train_df["Age"].median(), inplace=True

) # imputing with mdeian as there are some outlier, since only few people have travelled whose age is close to eighty. using mean will not be suitable

test_df["Age"].fillna(

test_df["Age"].median(), inplace=True

) # imputing with mdeian as there are some outlier, since only few people have travelled whose age is close to eighty. using mean will not be suitable

# We can remove the columns id and Name as it will not be helpful for our analysis

train_df.drop(["Name"], axis=1, inplace=True)

test_df.drop(["Name"], axis=1, inplace=True)

train_df.describe().transpose()

# sns.pairplot(train_df,hue='Survived')

# Univariant analysis, Bivariant and multivariant analysis

total = float(len(train_df))

ax = sns.countplot(train_df["Survived"]) # for Seaborn version 0.7 and more

for p in ax.patches:

height = p.get_height()

ax.text(

p.get_x() + p.get_width() / 2.0,

height + 3,

"{:1.2f}".format((height / total) * 100),

ha="center",

)

show()

# The graph above shows only 38.38% of people have survided

total = float(len(train_df))

ax = sns.countplot(train_df["Pclass"]) # for Seaborn version 0.7 and more

for p in ax.patches:

height = p.get_height()

ax.text(

p.get_x() + p.get_width() / 2.0,

height + 3,

"{:1.2f}".format((height / total) * 100),

ha="center",

)

show()

# The graph above shows more than 50 percentage of the people have travelled in class 3

sns.distplot(train_df["Age"])

# The distribution plot shows that the majority of the people were in the age group 20 to 40.

total = float(len(train_df))

ax = sns.countplot(train_df["Sex"]) # for Seaborn version 0.7 and more

for p in ax.patches:

height = p.get_height()

ax.text(

p.get_x() + p.get_width() / 2.0,

height + 3,

"{:1.2f}".format((height / total) * 100),

ha="center",

)

show()

# In the total passenger 64.76% were male and 35.24% people were female.

total = float(len(train_df))

ax = sns.countplot(train_df["Embarked"]) # for Seaborn version 0.7 and more

for p in ax.patches:

height = p.get_height()

ax.text(

p.get_x() + p.get_width() / 2.0,

height + 3,