script


|

|---|

import bq_helper

import matplotlib.pyplot as plt

import matplotlib.cm as cm

stackOverflow = bq_helper.BigQueryHelper(

active_project="bigquery-public-data", dataset_name="stackoverflow"

)

query = """

SELECT users.display_name as `Display Name`, COUNT(users.id) as Count

FROM bigquery-public-data.stackoverflow.users AS users

INNER JOIN bigquery-public-data.stackoverflow.comments AS comments

ON users.id = comments.user_id

WHERE users.id > 0

GROUP BY users.display_name

ORDER BY count DESC

LIMIT 25;

"""

top_users = stackOverflow.query_to_pandas_safe(query, max_gb_scanned=2)

top_users = top_users.sort_values(["Count"], ascending=True)

top_users

_, ax = plt.subplots(figsize=(22, 10))

top_users.plot(

x="Display Name",

y="Count",

kind="barh",

ax=ax,

color=cm.viridis_r(top_users.Count / float(max(top_users.Count))),

)

ax.spines["top"].set_visible(False)

ax.spines["right"].set_visible(False)

ax.spines["bottom"].set_visible(False)

ax.set_title("Top 25 Users With The Most Comments", fontsize=20)

for i, v in enumerate(top_users.Count):
    ax.text(v + 750, i - 0.15, str(v), color="black", fontsize=12)

ax.xaxis.set_visible(False)

ax.yaxis.get_label().set_visible(False)

ax.tick_params(axis="y", labelsize=14)

ax.get_legend().remove()

|

#

# - Learn about the prices of all cars and the price changes across all car brands

# - The automobile industry has seen a dramatic shift in pricing over the past decade. With the introduction of new technologies, increased competition, and changing consumer preferences, car prices have been on the rise. This dataset lets us explore the changes in car prices over time, looking at factors such as inflation, technological advances, and consumer demand.

# - You will see how I collect, organize, analyze, and interpret the data

# Table of Contents

# 1. [import library](#dataA)

# 2. [Data Exploration](#dataB)

# 3. [describe data](#dataC)

# 4. [feature engineering](#dataD)

# 4.1. [handling data types](#dataD1)

# 4.2. [Exploratory Data Analysis (EDA)](#dataD2)

# 4.3. [performing a data filtering operation](#dataD3)

#

#

# 5. [Conclusion](#dataE)

# 1. import library

#

# Import the libraries used in this notebook

import numpy as np

import pandas as pd

import pandas_profiling as pp

import matplotlib.pyplot as plt

import seaborn as sns

import skimpy

#

# 2. Data Exploration

#

# Read the data we will work with

df = pd.read_csv("/kaggle/input/car-price/car_price.csv", index_col=False)

my_data = df.copy()

my_data

#

# 3. describe data

# - Overview of the data: dataset statistics, column names and count, interactions, correlations, missing values, and a sample of the data

over_view = pp.ProfileReport(my_data)

over_view

df = pd.read_csv(r"/kaggle/input/car-price/car_price.csv") # describe data

report = skimpy.skim(df)

print(report)

my_data.isnull().sum()  # number of missing values per column (count() would return the number of rows instead)
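# A minimal sketch of how missing values can be counted per column, using a
# made-up toy frame (the column names and values are assumptions, not the
# car-price data). It contrasts `count()` and `sum()` on the boolean mask
# returned by `isnull()`.

```python
import pandas as pd

# Hypothetical toy frame with one missing value in each column
toy = pd.DataFrame({"price": [100.0, None, 300.0], "name": ["a", "b", None]})

# isnull() returns a frame of booleans with no missing entries,
# so count() simply reports the number of rows in every column.
rows_per_column = toy.isnull().count()

# sum() adds up the True cells, i.e. the cells that are actually missing.
missing_per_column = toy.isnull().sum()

print(rows_per_column.to_dict())
print(missing_per_column.to_dict())
```

# Only the `sum()` variant answers the question "how many values are missing?".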

my_data.head(3) # show some of data to know info about the name of columns

my_data.columns  # the data has 5 data columns plus the leftover "Unnamed: 0" index column

#

# 4. feature engineering

#

my_data.drop(
    "Unnamed: 0", axis=1, inplace=True
)  # drop the leftover index column named "Unnamed: 0"

new_col = [

"cars_names",

"old_price",

"price_change",

"new_price",

"change_date",

] # list of new name

my_data.columns = new_col

my_data.head(5)

my_data.dtypes  # check each column's data type before working with it

my_data[
    "change_date"
]  # this column holds dates, so its dtype should be datetime

#

# 4.1. handling data types

#

my_data["change_date"] = pd.to_datetime(my_data["change_date"])

my_data["change_date"]

my_data.dtypes

my_data[
    "old_price"
]  # this column mixes text and digits, so we convert it to a numeric dtype

# - Helper that cleans a column mixing digits and text: it takes the column name and removes every non-digit character (such as "EGP" or ","), which makes the later conversion to int straightforward

def to_int(column):
    # \D matches any non-digit, so currency labels and thousands separators are all removed here
    my_data[column] = my_data[column].astype(str).replace(r"\D", "", regex=True)

my_data.dtypes

to_int("old_price")
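# A small standard-library sketch of the same cleaning idea, applied to single
# strings instead of a whole column. The sample values are made up for
# illustration, and `digits_only` is a hypothetical helper name.

```python
import re


def digits_only(raw):
    """Drop every non-digit character and return the remaining digits as an int."""
    return int(re.sub(r"\D", "", raw))


# Hypothetical raw values in the style described above
print(digits_only("1,250,000 EGP"))           # separators and currency label removed
print(digits_only("trending_up 25,000 EGP"))  # text marker removed as well
print(digits_only("-5,000 EGP"))              # the minus sign is dropped too
```

# Note the last case: removing every non-digit also removes signs and decimal
# points, so this approach only suits columns of non-negative whole numbers.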

# my_data_copy["old_price"] = my_data_copy["old_price"].astype(int)

my_data["old_price"] = my_data["old_price"].astype(int)

my_data["old_price"]

my_data.head(3)

my_data[
    "price_change"
]  # besides digits, this column contains the strings "trending_up", "trending_down", "EGP" and ","

# my_data["price_change"] = my_data["price_change"].str.replace("EGP" , " ")

# li_str = ["trending_up" , "trending_down"]

# for i in li_str:

to_int("price_change")

# my_data.iloc[:, "price_change"] = my_data.iloc[:, "price_change"].astype(int)

my_data["price_change"] = my_data["price_change"].astype(int)

my_data["price_change"]

my_data.head(4)

to_int("new_price")

my_data["new_price"] = my_data["new_price"].astype(int)

my_data

my_data["cars_names"] = my_data["cars_names"].astype("string")

my_data["cars_names"].describe()

my_data.dtypes

#

# 4.2. Exploratory Data Analysis (EDA)

#

plt.figure(figsize=(20, 10))

sns.set_style("darkgrid")

sns.pairplot(my_data)

plt.show()

plt.figure(figsize=(10, 10))

sns.set(

rc={

"axes.facecolor": "#2b4969",

"axes.grid": False,

"xtick.labelsize": 14,

"ytick.labelsize": 14,

}

)

# sns.set_style("dark")

sns.scatterplot(

x=my_data["old_price"], y=my_data["new_price"], color="#FF0000", marker="."

)

plt.show()

my_data["cars_names"].duplicated().sum()

my_data["cars_names"].nunique()

# Use the first word of each car name as its brand
my_data["car_brand"] = my_data["cars_names"].str.split().str[0]
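# A quick sketch of what "first word as brand" does on a few made-up car names
# (the names and the `first_token` helper are assumptions for illustration).
# It also shows a limitation worth keeping in mind for two-word brands.

```python
def first_token(name):
    """Return the first whitespace-separated word of a car name."""
    return name.split()[0]


# Hypothetical car names
names = ["Mercedes C 180 2023", "Porsche Macan 2020", "Land Rover Defender 2022"]
brands = [first_token(n) for n in names]
print(brands)
```

# A brand such as "Land Rover" is cut down to "Land", so the brand counts are
# only approximate whenever such names occur in the data.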

my_data

my_data["car_brand"].nunique()

my_data["car_brand"].value_counts()

# Summary statistics of the price change for each brand:

# the number of changes, the average, the smallest value, the largest value, and the sum

info = pd.DataFrame(

my_data.groupby("car_brand").price_change.agg(

["count", "mean", "min", "max", "sum"]

)

)

info

# restore the index name, and move the index back to a column

info.reset_index(inplace=True)

# info.drop("level_0" , axis=1 , inplace=True)

info
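# A compact sketch of the same per-brand aggregation on a made-up toy frame
# (brand labels "A"/"B" and the numbers are assumptions). It also shows one
# possible way to look up the brand with the largest total change.

```python
import pandas as pd

# Hypothetical price changes for two brands
toy = pd.DataFrame(
    {"car_brand": ["A", "A", "B"], "price_change": [1000, 3000, 500]}
)

# One row per brand with several statistics of price_change
info = (
    toy.groupby("car_brand")["price_change"]
    .agg(["count", "mean", "min", "max", "sum"])
    .reset_index()
)

# Row label of the largest "sum", then the brand stored in that row
top_brand = info.loc[info["sum"].idxmax(), "car_brand"]
print(info)
print(top_brand)
```

# `idxmax()` returns a single row, so ties would need the boolean-mask form used
# in this notebook instead.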

# The brand with the largest total price change, and that total

pd.DataFrame(info[["car_brand", "sum"]][info["sum"] == info["sum"].max()])

plt.figure(figsize=(20, 20))

plt.title("Mercedes")

sns.set(

rc={

"axes.facecolor": "#414547",

"axes.grid": False,

"xtick.labelsize": 14,

"ytick.labelsize": 14,

}

)

sns.pointplot(

data=my_data,

x=my_data["old_price"][my_data["car_brand"] == "Mercedes"],

y=my_data["price_change"][my_data["car_brand"] == "Mercedes"],

)

plt.show()

# The brand with the smallest total price change, and that total

pd.DataFrame(info[["car_brand", "sum"]][info["sum"] == info["sum"].min()])

plt.figure(figsize=(20, 20))

plt.title("Porsche")

sns.set(

rc={

"axes.facecolor": "#132c47",

"axes.grid": False,

"xtick.labelsize": 14,

"ytick.labelsize": 14,

}

)

sns.pointplot(

x=my_data["old_price"][my_data["car_brand"] == "Porsche"],

y=my_data["price_change"][my_data["car_brand"] == "Porsche"],

)

plt.show()

#

# 4.3. performing a data filtering operation

#

my_data[my_data["car_brand"] == "Mercedes"]

# Mean, min and max price change for the brand with the largest total price change

info_mercedes = pd.DataFrame(

my_data["price_change"][my_data["car_brand"] == "Mercedes"]

)

pd.DataFrame(info_mercedes.agg(["mean", "min", "max"]).astype(int))

# Mean, min and max price change for the brand with the smallest total price change

info_Porsche = pd.DataFrame(my_data["price_change"][my_data["car_brand"] == "Porsche"])

pd.DataFrame(info_Porsche.agg(["mean", "min", "max"]).astype(int))

my_data[my_data["car_brand"] == "Porsche"]

# Sum and number of the price changes for Mercedes,

# the brand with the largest total price increase among the companies,

# for each change date from [2/2/2021] to [17/1/2023],

# counting both increases and decreases

df_mercedes = pd.DataFrame(

my_data.groupby(

my_data["change_date"][my_data["car_brand"] == "Mercedes"]

).price_change.agg(["sum", "count"])

)

df_mercedes.reset_index(inplace=True)

df_mercedes

plt.figure(figsize=(10, 8))

sns.scatterplot(x=df_mercedes["change_date"], y=df_mercedes["sum"], color="#FF0000")

plt.show()

# Sum and number of the price changes for Porsche,

# the brand with the smallest total price increase among the companies,

# for each change date from [14/10/2019] to [18/11/2020],

# counting both increases and decreases

df_porshe = pd.DataFrame(

my_data.groupby(

my_data["change_date"][my_data["car_brand"] == "Porsche"]

).price_change.agg(["sum", "count"])

)

df_porshe.reset_index(inplace=True)

df_porshe

plt.figure(figsize=(8, 6))

sns.scatterplot(x=df_porshe["change_date"], y=df_porshe["sum"], color="#ed0915")

plt.show()

my_data["cars_names"][my_data["cars_names"].duplicated()]

my_data["cars_names"].nunique()

info_names = pd.DataFrame(my_data.groupby("cars_names").price_change.agg("count"))

info_top30 = pd.DataFrame(
    info_names["price_change"].nlargest(30)
)  # the 30 car models with the most price changes

info_top30.reset_index(inplace=True)

info_top30

plt.figure(figsize=(20, 10))

sns.set(

rc={

"axes.facecolor": "#283747",

"axes.grid": False,

"xtick.labelsize": 14,

"ytick.labelsize": 14,

}

)

colors = [

"#8f285b",

"#4b78b8",

"#aecd6e",

"#d47f3f",

"#8e2991",

"#7a3f3e",

"#418274",

"#b23b47",

"#ffb00d",

"#4b4b4b",

"#d6adad",

"#3978b8",

"#e6f088",

"#d46b6b",

"#a8c6e5",

]

plt.bar(info_top30["cars_names"][0:15], info_top30["price_change"][0:15], color=colors)

plt.show()

plt.figure(figsize=(10, 7))

sns.set(

rc={

"axes.facecolor": "#283747",

"axes.grid": False,

"xtick.labelsize": 14,

"ytick.labelsize": 14,

}

)

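# Average old price of the 30 most frequently changed car models
# (the original call paired the 30 names with unrelated rows of my_data)
top30_rows = my_data[my_data["cars_names"].isin(info_top30["cars_names"])]

sns.barplot(data=top30_rows, x="cars_names", y="old_price")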

plt.show()

# Total price change for each of the 30 car models whose prices changed most often

# for 16 times duplicated

# Avoid naming the variable "list", which would shadow the built-in type
sum_changes = [
    my_data.loc[my_data["cars_names"] == name, "price_change"].sum()
    for name in info_top30["cars_names"]
]

changes_top30 = pd.DataFrame(sum_changes, columns=["sum_changes_top30"])

changes_top30

plt.figure(figsize=(20, 10))

sns.set(

rc={

"axes.facecolor": "#283747",

"axes.grid": False,

"xtick.labelsize": 14,

"ytick.labelsize": 14,

}

)

sns.scatterplot(x=info_top30["cars_names"], y=changes_top30["sum_changes_top30"])

plt.show()

plt.figure(figsize=(20, 15))

sns.set(

rc={

"axes.facecolor": "#283747",

"axes.grid": False,

"xtick.labelsize": 14,

"ytick.labelsize": 14,

}

)

sns.barplot(

x=info_top30["cars_names"][0:11], y=changes_top30["sum_changes_top30"][0:11]

)

plt.show()

df_old = pd.DataFrame(my_data["old_price"].describe().astype(int))

df_old.reset_index(inplace=True)

df_old

plt.bar(df_old["index"], df_old["old_price"])

plt.show()

df_new = pd.DataFrame(my_data["new_price"].describe().astype(int))

df_new.reset_index(inplace=True)

df_new

plt.scatter(df_new["index"], df_new["new_price"], color="#c22961")

plt.show()

|

# #COVID-19 vaccine doses administered per 100 people

# Total number of vaccination doses administered per 100 people in the total population. This is counted as a single dose, and may not equal the total number of people vaccinated, depending on the specific dose regime (e.g. people receive multiple doses).

# https://data.humdata.org/dataset/covid-19-vaccinations

# youtube.com

import numpy as np # linear algebra

import pandas as pd # data processing, CSV file I/O (e.g. pd.read_csv)

from sklearn.preprocessing import StandardScaler

from sklearn.cluster import KMeans, AffinityPropagation

import matplotlib.pyplot as plt

import seaborn as sns

import plotly as py

import plotly.graph_objs as go

import plotly.express as px

py.offline.init_notebook_mode(connected=True)

# Input data files are available in the read-only "../input/" directory

# For example, running this (by clicking run or pressing Shift+Enter) will list all files under the input directory

import os

for dirname, _, filenames in os.walk("/kaggle/input"):
    for filename in filenames:
        print(os.path.join(dirname, filename))

# You can write up to 20GB to the current directory (/kaggle/working/) that gets preserved as output when you create a version using "Save & Run All"

# You can also write temporary files to /kaggle/temp/, but they won't be saved outside of the current session

nRowsRead = 1000 # specify 'None' if want to read whole file

df = pd.read_csv(

"../input/cusersmarildownloadsvaccinatedcsv/vaccinated.csv",

delimiter=";",

encoding="utf8",

nrows=nRowsRead,

)

df.dataframeName = "vaccinated.csv"

nRow, nCol = df.shape

print(f"There are {nRow} rows and {nCol} columns")

df.head()

# #Dataprep

# Acknowledgements

# SFU Data Science Research Group - SIMON FRASER UNIVERSITY

# https://sfu-db.github.io/dataprep/user_guide/eda/plot.html

# https://dataprep.ai/

# https://www.cs.sfu.ca/~jnwang/

# https://www.cs.sfu.ca/~jnwang/ppt/DataPrep-Overview-Databricks.pdf

from dataprep.eda import plot, plot_correlation, create_report, plot_missing

plot_missing(df)

plot(df)

# API Correlation

plot_correlation(df)

brz = df[(df["location"] == "Brazil")].reset_index(drop=True)

brz.head()

# Code by Joshua Swords https://www.kaggle.com/joshuaswords/data-visualization-clustering-mall-data

# Preparing to cluster: What about the distribution of our data?

import matplotlib.gridspec as gridspec

# Create a 1x3 grid of subplots

gs = gridspec.GridSpec(1, 3)

fig = plt.figure(figsize=(15, 3))

# Title and sub-title

fig.text(

0.09,

1.09,

"Numeric variable distribution",

fontsize=15,

fontweight="bold",

fontfamily="serif",

)

fig.text(

0.09,

1,

"Our data appears to be relatively normal, therefore we will not transform it.",

fontsize=12,

fontweight="light",

fontfamily="serif",

)

# Grid spec layout

ax1 = fig.add_subplot(gs[0, 0]) # row 0, col 0

ax1 = sns.distplot(

df["people_vaccinated"],

color="#244747",

hist_kws=dict(edgecolor="white", linewidth=1, alpha=0.8),

)

ax2 = fig.add_subplot(gs[0, 1]) # row 0, col 1

ax2 = sns.distplot(

df["people_fully_vaccinated"],

color="#244747",

hist_kws=dict(edgecolor="white", linewidth=1, alpha=0.8),

)

ax3 = fig.add_subplot(gs[0, 2])  # row 0, col 2

ax3 = sns.distplot(

df["daily_vaccinations_per_million"],

color="#244747",

hist_kws=dict(edgecolor="white", linewidth=1, alpha=0.8),

)

sns.despine()

plt.subplots_adjust(

left=None, bottom=None, right=None, top=None, wspace=0.3, hspace=None

) # useful for adjusting space between subplots

fig = px.bar(

df,

x="date",

y="people_vaccinated",

color_discrete_sequence=["blue"],

title="Covid Vaccine Administered",

text="location",

)

fig.show()

fig = px.bar(

brz,

x="date",

y="people_vaccinated",

color_discrete_sequence=["darkolivegreen"],

title="Covid Vaccine Administered in Brazil",

text="people_fully_vaccinated",

)

fig.show()

fig = px.parallel_categories(

df, color="people_vaccinated", color_continuous_scale=px.colors.sequential.OrRd

)

fig.show()

fig = px.line(

df,

x="date",

y="people_fully_vaccinated",

color_discrete_sequence=["darksalmon"],

title="Covid-19 Doses Administered",

)

fig.show()

fig = px.scatter(

df,

x="date",

y="people_fully_vaccinated",

color_discrete_sequence=["#4257f5"],

title="Covid Doses Administered",

)

fig.show()

# Code by Olga Belitskaya https://www.kaggle.com/olgabelitskaya/sequential-data/comments

from IPython.display import display, HTML

c1, c2, f1, f2, fs1, fs2 = "#eb3434", "#eb3446", "Akronim", "Smokum", 30, 15

def dhtml(string, fontcolor=c1, font=f1, fontsize=fs1):
    display(
        HTML(
            """<style>
@import 'https://fonts.googleapis.com/css?family="""
            + font
            + """&effect=3d-float';</style>
<h1 class='font-effect-3d-float' style='font-family:"""
            + font
            + """; color:"""
            + fontcolor
            + """; font-size:"""
            + str(fontsize)
            + """px;'>%s</h1>""" % string
        )
    )

dhtml("Be patient. Marília Prata, @mpwolke was Here.")

|

import numpy as np # linear algebra

import pandas as pd # data processing, CSV file I/O (e.g. pd.read_csv)

# Input data files are available in the read-only "../input/" directory

# For example, running this (by clicking run or pressing Shift+Enter) will list all files under the input directory

import os

for dirname, _, filenames in os.walk("/kaggle/input"):
    for filename in filenames:
        print(os.path.join(dirname, filename))

# You can write up to 20GB to the current directory (/kaggle/working/) that gets preserved as output when you create a version using "Save & Run All"

# You can also write temporary files to /kaggle/temp/, but they won't be saved outside of the current session

# The thing should take around 10gb of gpu memory when being trained

device = "cuda"

model_checkpoint = "bert-large-uncased"

# ## Exploring the data

import pandas as pd

raw_train_df = pd.read_csv("/kaggle/input/nlp-getting-started/train.csv")

raw_test_df = pd.read_csv("/kaggle/input/nlp-getting-started/test.csv")

len(raw_train_df)

raw_train_df.head()

raw_test_df.head()

duplicates_df = raw_train_df[raw_train_df.text.duplicated(keep=False)].sort_values(

"text"

)

duplicates_df.head()

# ## Clean the data

# As we've seen, there are dozens of duplicated tweets. Moreover, their labels are sometimes contradictory.

duplicates_df[:20]

duplicated_tweets = list(duplicates_df.text)

len(duplicated_tweets)

# Now search for contradictions

contradictory_tweets = set()

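for tweet in duplicated_tweets:
    if len(set(duplicates_df[duplicates_df["text"] == tweet].target)) > 1:
        contradictory_tweets.add(tweet)

# Sort so that the indices used for manual labelling are the same on every run
# (iterating a set of strings can give a different order in each Python session)
contradictory_tweets = sorted(contradictory_tweets)

print(len(contradictory_tweets), type(contradictory_tweets))

# We're going to label those manually. The labels below are tied to the printed order,
# so check the printed list before reusing them.

for i, tweet in enumerate(contradictory_tweets):
    print(i, tweet, "\n")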
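# A standard-library sketch of the contradiction search on a handful of made-up
# (text, label) pairs. `find_contradictions` is a hypothetical helper; it groups
# labels per text in one pass instead of filtering the frame once per tweet.

```python
from collections import defaultdict


def find_contradictions(rows):
    """Return the sorted texts that appear with more than one distinct label."""
    labels_by_text = defaultdict(set)
    for text, target in rows:
        labels_by_text[text].add(target)
    return sorted(t for t, labels in labels_by_text.items() if len(labels) > 1)


# Hypothetical labelled tweets
rows = [
    ("fire downtown", 1),
    ("fire downtown", 0),
    ("nice day", 0),
    ("nice day", 0),
    ("flood", 1),
]
conflicts = find_contradictions(rows)
print(conflicts)
```

# Only "fire downtown" carries both labels, so it is the only text returned.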

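# .copy() makes this an independent frame, so the label fixes below do not trigger SettingWithCopyWarning
no_duplicates_train_df = raw_train_df.drop_duplicates("text").copy()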

print(len(no_duplicates_train_df))

no_duplicates_train_df.loc[

no_duplicates_train_df["text"] == contradictory_tweets[0], "target"

] = 0

no_duplicates_train_df.loc[

no_duplicates_train_df["text"] == contradictory_tweets[1], "target"

] = 0

no_duplicates_train_df.loc[

no_duplicates_train_df["text"] == contradictory_tweets[2], "target"

] = 0

no_duplicates_train_df.loc[

no_duplicates_train_df["text"] == contradictory_tweets[3], "target"

] = 0

no_duplicates_train_df.loc[

no_duplicates_train_df["text"] == contradictory_tweets[4], "target"

] = 0

no_duplicates_train_df.loc[

no_duplicates_train_df["text"] == contradictory_tweets[5], "target"

] = 0

no_duplicates_train_df.loc[

no_duplicates_train_df["text"] == contradictory_tweets[6], "target"

] = 0

no_duplicates_train_df.loc[

no_duplicates_train_df["text"] == contradictory_tweets[7], "target"

] = 0

no_duplicates_train_df.loc[

no_duplicates_train_df["text"] == contradictory_tweets[8], "target"

] = 0

no_duplicates_train_df.loc[

no_duplicates_train_df["text"] == contradictory_tweets[9], "target"

] = 1

no_duplicates_train_df.loc[

no_duplicates_train_df["text"] == contradictory_tweets[10], "target"

] = 0

no_duplicates_train_df.loc[

no_duplicates_train_df["text"] == contradictory_tweets[11], "target"

] = 1

no_duplicates_train_df.loc[

no_duplicates_train_df["text"] == contradictory_tweets[12], "target"

] = 0

no_duplicates_train_df.loc[

no_duplicates_train_df["text"] == contradictory_tweets[13], "target"

] = 0

no_duplicates_train_df.loc[

no_duplicates_train_df["text"] == contradictory_tweets[14], "target"

] = 0

no_duplicates_train_df.loc[

no_duplicates_train_df["text"] == contradictory_tweets[15], "target"

] = 0

no_duplicates_train_df.loc[

no_duplicates_train_df["text"] == contradictory_tweets[16], "target"

] = 0

no_duplicates_train_df.loc[

no_duplicates_train_df["text"] == contradictory_tweets[17], "target"

] = 1

no_duplicates_train_df.loc[

no_duplicates_train_df["text"] == contradictory_tweets[12], "target"

]

# ## Create the Datasets

import torch

from torch.utils.data import Dataset

from transformers import AutoTokenizer, AutoModel

from tqdm import tqdm

from copy import deepcopy

import random

tokenizer = AutoTokenizer.from_pretrained(model_checkpoint)

class LabeledDisasterTweetsDataset(Dataset):
    def __init__(
        self,
        df,
        tokenizer,
        max_tweet_len=180,
        tweet_column="text",
        location_column="location",
        keyword_column="keyword",
        target_column="target",
        device="cpu",
        text_prep_f=None,
    ):
        self._data = []
        for index, row in tqdm(df.iterrows(), total=len(df), ncols=70):
            text = (
                row[tweet_column]
                if text_prep_f is None
                else text_prep_f(
                    row[tweet_column], row[keyword_column], row[location_column]
                )
            )
            tokenized = tokenizer(
                text,
                padding="max_length",
                truncation=True,
                max_length=256,
                return_tensors="pt",
            )
            self._data.append(
                (
                    (
                        tokenized["input_ids"][0].to(device),
                        tokenized["attention_mask"][0].to(device),
                    ),
                    torch.tensor(row[target_column]).to(device),
                )
            )
        print("NOTE: you can safely delete the dataframe")

    def __len__(self):
        return len(self._data)

    def __getitem__(self, i):
        """return: ((input_ids, attention_mask), target)"""
        return self._data[i]

    def train_valid_split(self, train_fraction=0.9, shuffle=True):
        num_train_examples = int(len(self) * train_fraction)
        train_dataset = deepcopy(self)
        if shuffle:
            random.shuffle(train_dataset._data)
        valid_dataset = deepcopy(train_dataset)
        train_dataset._data = train_dataset._data[:num_train_examples]
        valid_dataset._data = valid_dataset._data[num_train_examples:]
        print(
            "NOTE: you can safely delete parent dataset. The train|valid split is {}|{}.".format(
                len(train_dataset), len(valid_dataset)
            )
        )
        return train_dataset, valid_dataset
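# A minimal sketch of the shuffle-then-slice split used by train_valid_split,
# written on plain indices. The `seed` argument is an addition for
# reproducibility and is not part of the class above; `split_indices` is a
# hypothetical helper name.

```python
import random


def split_indices(n, train_fraction=0.9, seed=0):
    """Shuffle the indices 0..n-1 with a fixed seed and cut them into two parts."""
    indices = list(range(n))
    random.Random(seed).shuffle(indices)  # private generator, global state untouched
    cut = int(n * train_fraction)
    return indices[:cut], indices[cut:]


train_idx, valid_idx = split_indices(20)
print(len(train_idx), len(valid_idx))
```

# Every index lands in exactly one of the two parts, and the same seed always
# reproduces the same split.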

class UnlabeledDisasterTweetsDataset(Dataset):
    def __init__(
        self,
        df,
        tokenizer,
        max_tweet_len=180,
        tweet_column="text",
        location_column="location",
        keyword_column="keyword",
        id_column="id",
        device="cpu",
        text_prep_f=None,
    ):
        self._data = []
        for index, row in tqdm(df.iterrows(), total=len(df), ncols=70):
            text = (
                row[tweet_column]
                if text_prep_f is None
                else text_prep_f(
                    row[tweet_column], row[keyword_column], row[location_column]
                )
            )
            tokenized = tokenizer(
                text,
                padding="max_length",
                truncation=True,
                max_length=256,
                return_tensors="pt",
            )
            self._data.append(
                (
                    torch.tensor(row[id_column]).to(device),
                    (
                        tokenized["input_ids"][0].to(device),
                        tokenized["attention_mask"][0].to(device),
                    ),
                )
            )
        print("NOTE: you can safely delete the dataframe")

    def __len__(self):
        return len(self._data)

    def __getitem__(self, i):
        """return: (id, (input_ids, attention_mask))"""
        return self._data[i]

def prep_fn(text, keywords, location):
    return text + " Location: " + str(location) + ". Keywords: " + str(keywords) + "."

dataset = LabeledDisasterTweetsDataset(

no_duplicates_train_df, tokenizer, device="cuda", text_prep_f=prep_fn

)

train_dataset, valid_dataset = dataset.train_valid_split()

eval_dataset = UnlabeledDisasterTweetsDataset(

raw_test_df, tokenizer, device="cuda", text_prep_f=prep_fn

)

tokenizer.decode(train_dataset[0][0][0])

tokenizer.decode(eval_dataset[0][1][0])

# ## Defining the model

class BertClassifier(torch.nn.Module):
    def __init__(
        self, n_classes=2, head_dropout=0.2, model_checkpoint=model_checkpoint
    ):
        super().__init__()
        self.Base = AutoModel.from_pretrained(model_checkpoint)
        self.Head = torch.nn.Sequential(
            torch.nn.Dropout(head_dropout),
            torch.nn.Linear(1024, 1024),
            torch.nn.Dropout(head_dropout),
            torch.nn.Linear(1024, n_classes),  # projection
        )

    def forward(self, input_ids, attention_mask=None, *argv):
        res = self.Base.forward(
            input_ids=input_ids, attention_mask=attention_mask, return_dict=False
        )
        res = res[0]
        res = res[:, 0, :]  # encoding for the [CLS] token
        res = self.Head(res)
        return res

    def parameters_num(self):
        return sum(p.numel() for p in self.parameters())

model = BertClassifier(head_dropout=0.5)

model.forward(torch.tensor([1, 2, 33, 2])[None])

model.to(device)

model.forward(torch.tensor([[1]], device=device))

print("Our BERT has {:,d} parameters...".format(model.parameters_num()))

# ## Fine-tuning

from torch.utils.data import DataLoader

batch_size = 4

train_dataloader = DataLoader(train_dataset, batch_size=batch_size, shuffle=True)

valid_dataloader = DataLoader(valid_dataset, batch_size=batch_size, shuffle=False)

from sklearn.metrics import accuracy_score, precision_score, recall_score, f1_score

def train(
    model,
    train_dataloader,
    valid_dataloader,
    steps,
    optimizer,
    blind_steps=None,
    loss_fn=torch.nn.BCELoss(),
    main_metric=("f1", f1_score),
    additional_metrics=[],
    filepath="model_sd.pt",
    load_best=True,
    scheduler=None,
    losses_dict=None,
):
    if blind_steps is None:
        blind_steps = len(train_dataloader) // 4

    def evaluate():  # the first score returned is the main
        model.eval()
        y_trues = []
        y_hats = []
        loss = 0
        k = 0
        with torch.no_grad():
            for batch in valid_dataloader:
                (ids, mask), y_true = batch
                hots = torch.nn.functional.one_hot(y_true, 2).to(device, torch.float)
                y_hat = torch.softmax(
                    model.forward(input_ids=ids, attention_mask=mask), dim=-1
                )
                loss += float(loss_fn(y_hat, hots))
                k += 1
                for i in range(y_true.shape[0]):
                    y_trues.append(int(y_true[i]))
                    y_hats.append(1 if y_hat[i][0] < y_hat[i][1] else 0)
        scores = [(main_metric[0], main_metric[1](y_trues, y_hats))]
        for metric in additional_metrics:
            scores.append((metric[0], metric[1](y_trues, y_hats)))
        model.train()
        return scores + [("valid_loss", loss / k)]

    def render_scores(scores, step, best=None):
        print("{:05d} steps".format(step), end=" ")
        for score in scores:
            print("| {}: {:.3f}".format(*score), end=" ")
        if best is not None:
            print("| best_score: {:.3f}".format(best))

    # initial scores
    scores = evaluate()
    render_scores(scores, 0)
    best_score = scores[0][1]
    torch.save(model.state_dict(), filepath)

    # logs
    if losses_dict is not None:
        losses_dict["train_loss"] = []
        losses_dict["valid_loss"] = []
        losses_dict[main_metric[0]] = []

    epoch_loss = 0
    k = 0
    train_iter = iter(train_dataloader)
    model.train()
    for step in tqdm(range(steps)):
        # retrieving a batch; restart the dataloader once it is exhausted
        try:
            batch = next(train_iter)
        except StopIteration:
            train_iter = iter(train_dataloader)
            batch = next(train_iter)
        (ids, mask), y_true = batch

        # prediction
        y_hat = torch.softmax(model.forward(input_ids=ids, attention_mask=mask), dim=-1)
        hots = torch.nn.functional.one_hot(y_true, 2).to(device, torch.float)
        loss = loss_fn(y_hat, hots)

        # backprop
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()
        if scheduler is not None:
            scheduler.step()
        epoch_loss += float(loss)
        k += 1

        # evaluation
        if (step + 1) % blind_steps == 0:
            scores = evaluate() + [("train_loss", epoch_loss / k)]
            if losses_dict is not None:
                losses_dict["valid_loss"].append(float(scores[-2][1]))
                losses_dict["train_loss"].append(float(scores[-1][1]))
                losses_dict[main_metric[0]].append(float(scores[0][1]))
            if scores[0][1] > best_score:
                best_score = scores[0][1]
                torch.save(model.state_dict(), filepath)
            render_scores(scores, step + 1, best=best_score)
            epoch_loss = 0
            k = 0

    if load_best:
        model.load_state_dict(torch.load(filepath))
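# The training loop above counts optimizer steps rather than epochs, so it has
# to restart the dataloader whenever it runs out of batches. A minimal sketch of
# that pattern on a plain list, with `cycle_batches` as a hypothetical helper:

```python
def cycle_batches(make_iterator, steps):
    """Yield `steps` batches, restarting the iterator whenever it is exhausted."""
    it = make_iterator()
    for _ in range(steps):
        try:
            batch = next(it)
        except StopIteration:
            # One full pass is done: start a fresh pass over the data
            it = make_iterator()
            batch = next(it)
        yield batch


# Three made-up "batches", iterated for seven steps
batches = [[0, 1], [2, 3], [4]]
seen = list(cycle_batches(lambda: iter(batches), 7))
print(seen)
```

# Building a fresh iterator per pass (instead of caching the first pass) matters
# with a shuffling dataloader, because each pass then gets a new order. The
# sketch assumes the data source is not empty.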

optimizer = torch.optim.Adam(model.parameters(), lr=5e-6, weight_decay=2e-3)

scheduler = torch.optim.lr_scheduler.LinearLR(optimizer, total_iters=500)
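# A plain-Python sketch of the warm-up this scheduler produces, assuming
# PyTorch's documented LinearLR defaults (start_factor=1/3, end_factor=1.0).
# `linear_lr` is a hypothetical helper that mirrors the closed form; it is not
# a PyTorch function.

```python
def linear_lr(base_lr, step, total_iters, start_factor=1.0 / 3, end_factor=1.0):
    """Learning rate after `step` scheduler steps of a linear warm-up."""
    progress = min(step, total_iters) / total_iters  # clamps at 1 after warm-up
    return base_lr * (start_factor + (end_factor - start_factor) * progress)


for step in (0, 250, 500, 7000):
    print(step, linear_lr(5e-6, step, 500))
```

# Under these assumptions the rate starts at one third of 5e-6, reaches the full
# 5e-6 after 500 steps, and stays there for the rest of the 7000 steps.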

logs_dict = {}

train(

model,

train_dataloader,

valid_dataloader,

7000,

optimizer,

blind_steps=125,

additional_metrics=[

("precision", precision_score),

("recall", recall_score),

("accuracy", accuracy_score),

],

losses_dict=logs_dict,

scheduler=scheduler,

)

import numpy as np

import seaborn as sns

sns.set_theme(style="whitegrid")

sns.lineplot(

data=pd.DataFrame(

logs_dict, [(i + 1) * 125 for i in range(len(logs_dict["train_loss"]))]

),

palette="Set2",

linewidth=2.5,

)

# ## Evaluate

model.load_state_dict(torch.load("model_sd.pt"))

def evaluate(
    model,
    valid_dataloader,
    metrics=[
        ("f1", f1_score),
        ("precision", precision_score),
        ("recall", recall_score),
        ("accuracy", accuracy_score),
    ],
):
    model.eval()
    y_trues = []
    y_hats = []
    with torch.no_grad():
        for batch in valid_dataloader:
            (ids, mask), y_true = batch
            y_hat = torch.softmax(
                model.forward(input_ids=ids, attention_mask=mask), dim=-1
            )
            for i in range(y_true.shape[0]):
                y_trues.append(int(y_true[i]))
                y_hats.append(1 if y_hat[i][0] < y_hat[i][1] else 0)
    scores = []
    for metric in metrics:
        scores.append((metric[0], metric[1](y_trues, y_hats)))
    return scores

scores = evaluate(model, valid_dataloader)

print(scores)
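# To make the reported scores concrete, here is a standard-library sketch that
# turns two-class probabilities into predictions with the same rule as above
# (class 1 when its probability is larger) and computes the four metrics by
# hand. The probabilities and labels are made up, and `binary_scores` is a
# hypothetical helper rather than a scikit-learn function.

```python
def binary_scores(y_true, y_pred):
    """Precision, recall, F1 and accuracy for labels in {0, 1}."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    correct = sum(1 for t, p in pairs if t == p)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {
        "precision": precision,
        "recall": recall,
        "f1": f1,
        "accuracy": correct / len(pairs),
    }


# Hypothetical (p_class0, p_class1) outputs and true labels
probs = [(0.9, 0.1), (0.2, 0.8), (0.4, 0.6), (0.7, 0.3)]
y_pred = [1 if p0 < p1 else 0 for p0, p1 in probs]
y_true = [0, 1, 0, 1]
result = binary_scores(y_true, y_pred)
print(y_pred, result)
```

# With one true positive, one false positive and one false negative, all four
# values come out at 0.5 in this toy case.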

def classify(tweet):
    ids = torch.tensor(tokenizer(tweet)["input_ids"])[None].to(device)
    return model.forward(input_ids=ids)

classify("Over 2 hundred families have become victims of my cookie-shop iniciative")

classify(

"These events are terrible. The God will make those terorists suffer. #karma #teror"

)

# ## Make predictions

# Collect the (id, prediction) rows first and build the DataFrame once,
# which avoids calling pd.concat on every iteration
rows = []
model.eval()
with torch.no_grad():  # no gradients are needed for inference
    for i, (ids, mask) in tqdm(eval_dataset):
        pred = model(input_ids=ids[None], attention_mask=mask[None])[0]
        y_hat = 1 if pred[0] < pred[1] else 0
        rows.append([int(i), y_hat])

predictions_df = pd.DataFrame(rows, columns=["id", "target"])

predictions_df.target = predictions_df.target.astype(int)

predictions_df.id = predictions_df.id.astype(int)

predictions_df.head(20)

predictions_df.to_csv("submission.csv", index=False)

predictions_df.to_csv(str(scores[0][1]) + ".csv", index=False)

print("Done!")

|

# In this notebook, I'll build a **Support Vector Machine** for classification using **scikit-learn** and the **Radial Basis Function (RBF) Kernel**. My training dataset contains continuous and categorical data from the UCI Machine Learning Repository to predict *whether or not a person will default on their credit card*.

# SVMs are one of the best machine learning methods when getting the correct answer is a higher priority than understanding why you get the correct answer. They work really well with relatively small datasets and they tend to work well "out of the box". In other words, they do not require much optimization.

# ## 1. Load Libraries

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

import matplotlib.colors as colors

from sklearn.utils import resample # downsample the data

from sklearn.model_selection import train_test_split

from sklearn.preprocessing import scale # scale and center data

from sklearn.svm import SVC # SVM for classification

from sklearn.model_selection import GridSearchCV # cross validation

from sklearn.metrics import confusion_matrix

from sklearn.metrics import plot_confusion_matrix

from sklearn.decomposition import PCA # to perform PCA to plot the data

# ## 2. Importing Data

df = pd.read_csv(

"/kaggle/input/default-on-their-credit-card/default of credit card clients.csv",

header=1,

)

df.head()

# We see a bunch of columns for the variables collected for each customer. The columns are...

# * **ID**: The ID number assigned to each customer

# * **LIMIT_BAL**: Credit limit

# * **SEX**: Gender (1 = male; 2 = female).

# * **EDUCATION**: Education (1 = graduate school; 2 = university; 3 = high school; 4 = others).

# * **MARRIAGE**: Marital status (1 = married; 2 = single; 3 = others).

# * **AGE**: Age (year).

# * **PAY_**: When the last 6 bills were paid

# * **BILL_AMT**: What the last 6 bills were

# * **PAY_AMT**: How much the last payments were

# * **default payment next month**

# I'll change the last column to **DEFAULT**.

df.rename({"default payment next month": "DEFAULT"}, axis="columns", inplace=True)

df.head()

# Drop ID, because it was randomly assigned

df.drop("ID", axis=1, inplace=True)

df.head()

# ## 3. Missing Data

# ### 3.1 Identifying Missing Data

df.dtypes

# All of the columns are int64, which is good, since it tells us that they did not mix letters and numbers. In other words, there are no **NA** values. But **SEX**, **EDUCATION**, **MARRIAGE**, **PAY_** and **DEFAULT** should be **category** type.

# Make sure every categorical column contains only the categories it is supposed to have.

df["SEX"].unique() # good

df["EDUCATION"].unique()

# EDUCATION is only allowed to contain the numbers 1, 2, 3 and 4. But it also contains 0, 5 and 6. It is possible that 0 means missing data, and 5 and 6 represent categories not mentioned in the specification, but that is just a guess.

df["MARRIAGE"].unique() # 0 may represent missing data

# ### 3.2 Dealing with Missing Data

# Because scikit-learn's support vector machines do not support missing values, I need to figure out what to do with the 0s in the dataset. I can either delete these or impute them.

len(df.loc[(df["EDUCATION"] == 0) | (df["MARRIAGE"] == 0)])

# 68 rows have missing values.

len(df)

# So 68 of the 30000 rows, less than 1%, contain missing values. That still leaves plenty of data for an SVM, so I drop these rows rather than impute them. I do this by selecting all of the rows that do not contain 0 in these two columns.

df_no_missing = df.loc[(df["EDUCATION"] != 0) & (df["MARRIAGE"] != 0)]

len(df_no_missing)

# Verify

df_no_missing["EDUCATION"].unique()

# Verify

df_no_missing["MARRIAGE"].unique()

# ## 4. Downsample the Data

# SVMs are great with small datasets, but this dataset would take a long time to optimize with cross validation. So I'll downsample both categories, customers who did and did not default, to 1000 each.

df_no_default = df_no_missing[df_no_missing["DEFAULT"] == 0]

df_default = df_no_missing[df_no_missing["DEFAULT"] == 1]

# Downsample the dataset that did not default

df_no_default_downsampled = resample(df_no_default, replace=False, n_samples=1000)

len(df_no_default_downsampled)

# Downsample the dataset that defaulted

df_default_downsampled = resample(df_default, replace=False, n_samples=1000)

len(df_default_downsampled)

# Merge them back into a single dataframe

df_downsample = pd.concat([df_no_default_downsampled, df_default_downsampled])

len(df_downsample)
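# The two `resample` calls above draw a different random subset on every run. A minimal sketch of a reproducible variant follows, assuming a fixed `random_state` is acceptable; the helper name `downsample_per_class`, the seed value and the toy frame are hypothetical and only stand in for `df_no_missing`.

```python
import pandas as pd
from sklearn.utils import resample

# Toy frame standing in for df_no_missing (hypothetical data)
toy = pd.DataFrame({"LIMIT_BAL": range(10), "DEFAULT": [0] * 7 + [1] * 3})


def downsample_per_class(frame, label, n_per_class, seed=42):
    """Draw n_per_class rows without replacement from each class of `label`."""
    parts = [
        resample(group, replace=False, n_samples=n_per_class, random_state=seed)
        for _, group in frame.groupby(label)
    ]
    return pd.concat(parts)


balanced = downsample_per_class(toy, "DEFAULT", n_per_class=3)
print(balanced["DEFAULT"].value_counts().to_dict())
```

# With a fixed seed, rerunning the cell selects the same rows, which makes the confusion matrices further down comparable between runs.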

# ## 5. Format the Data

# ### 5.1 Split the data into Dependent and Independent Variables

X = df_downsample.drop("DEFAULT", axis=1).copy()

# Or: X = df_downsample.iloc[:, :-1].copy()

X.head()

y = df_downsample["DEFAULT"].copy()

y.head()

# ### 5.2 One-Hot Encoding

X_encoded = pd.get_dummies(

X,

columns=[

"SEX",

"EDUCATION",

"MARRIAGE",

"PAY_0",

"PAY_2",

"PAY_3",

"PAY_4",

"PAY_5",

"PAY_6",

],

)

X_encoded

# ### 5.3 Centering and Scaling

# I split the data into training and testing datasets and then scale them separately to avoid Data Leakage. Data Leakage occurs when information about the training dataset corrupts or influences the testing dataset.

X_train, X_test, y_train, y_test = train_test_split(X_encoded, y)

X_train_scaled = scale(X_train)

X_test_scaled = scale(X_test)
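# A commonly used alternative to scaling the two sets independently is to learn the mean and standard deviation from the training set only and reuse them on the test set. The sketch below illustrates that idea with scikit-learn's `StandardScaler`; it is not what this notebook does, and the toy arrays and variable names are assumptions made for the example.

```python
import numpy as np
from sklearn.preprocessing import StandardScaler

# Toy data (hypothetical): one feature, three training rows, two test rows
X_train_toy = np.array([[0.0], [2.0], [4.0]])
X_test_toy = np.array([[2.0], [6.0]])

# Learn the centering and scaling statistics from the training rows only
scaler = StandardScaler().fit(X_train_toy)

# Apply the same statistics to both sets
X_train_toy_scaled = scaler.transform(X_train_toy)
X_test_toy_scaled = scaler.transform(X_test_toy)

print(X_train_toy_scaled.ravel())
print(X_test_toy_scaled.ravel())
```

# Here the test value 2.0 maps to 0 because it equals the training mean, so a test point is expressed on the same scale the model was trained on.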

# ## 6. Build a Preliminary Support Vector Machine

clf_svm = SVC()

clf_svm.fit(X_train_scaled, y_train)

plot_confusion_matrix(

clf_svm,

X_test_scaled,

y_test,

values_format="d",

display_labels=["Did not default", "Defaulted"],

)

# In this confusion matrix, we see that of the 250 people that did not default, 198 (79.2%) were correctly classified. And of the 250 people that defaulted, 152 (60.8%) were correctly classified. So the SVM was not awesome. I'll try to improve the predictions by using cross validation to optimize the parameters.
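# The percentages quoted above can be recomputed from the matrix itself. The sketch below rebuilds the matrix from the counts in the text (the off-diagonal cells are 250 minus each correct count) and assumes scikit-learn's layout, where rows are true classes and columns are predicted classes.

```python
import numpy as np

# Rows: true class, columns: predicted class
# Row 0 = did not default, row 1 = defaulted
cm = np.array([[198, 52], [98, 152]])

# Fraction of each true class that was classified correctly
per_class_recall = cm.diagonal() / cm.sum(axis=1)

# Fraction of all test rows that were classified correctly
accuracy = cm.diagonal().sum() / cm.sum()

print(per_class_recall, accuracy)
```

# Looking at the per-class fractions rather than only the overall accuracy shows that the model misses defaulters more often than non-defaulters.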

# ## 7. Optimize Parameters with CV

param_grid = [

{

"C": [0.5, 1, 10, 100],

"gamma": ["scale", 1, 0.1, 0.01, 0.001, 0.0001],

"kernel": ["rbf"],

},

]

optimal_params = GridSearchCV(SVC(), param_grid, cv=5, scoring="accuracy", verbose=0)

optimal_params.fit(X_train_scaled, y_train)

print(optimal_params.best_params_)

# So the ideal value for C is 100, and the ideal value for gamma is 0.001.
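# Because the train/test split and the downsampling are random, the printed best parameters can change between runs. A minimal sketch of building the final model directly from `best_params_`, instead of copying the values by hand, is shown below; the synthetic data from `make_classification` and the reduced grid are assumptions for the example.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import GridSearchCV
from sklearn.svm import SVC

# Synthetic stand-in for the scaled training data (hypothetical)
X_toy, y_toy = make_classification(n_samples=120, n_features=5, random_state=0)

# Small grid so the example runs quickly
toy_grid = {"C": [1, 10], "gamma": ["scale", 0.01], "kernel": ["rbf"]}
search = GridSearchCV(SVC(), toy_grid, cv=3, scoring="accuracy")
search.fit(X_toy, y_toy)

# Unpack the winning combination straight into a new classifier
final_svm = SVC(**search.best_params_).fit(X_toy, y_toy)
print(search.best_params_)
```

# With the default `refit=True`, `search.best_estimator_` holds an equivalent model that has already been refit on the full training data.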

# ## 8. Building, Evaluating, Drawing and Interpreting the Final Support Vector Machine

clf_svm = SVC(C=100, gamma=0.001)

clf_svm.fit(X_train_scaled, y_train)

plot_confusion_matrix(

clf_svm,

X_test_scaled,

y_test,

values_format="d",

display_labels=["Did not default", "Defaulted"],

)

# The results from the optimized SVM are no better than before. In other words, the SVM was pretty good straight out of the box without much optimization. This makes SVMs a great, quick and dirty method for relatively small datasets.

len(df_downsample.columns)

pca = PCA()

X_train_pca = pca.fit_transform(X_train_scaled)

per_var = np.round(pca.explained_variance_ratio_ * 100, decimals=1)

labels = [str(x) for x in range(1, len(per_var) + 1)]

plt.bar(x=range(1, len(per_var) + 1), height=per_var)

plt.tick_params(axis="x", which="both", bottom=False, top=False, labelbottom=False)

plt.ylabel("Percentage of Explained Variance")

plt.xlabel("Principal Components")

plt.title("Scree Plot")

plt.show()

# The scree plot shows that PC1 accounts for a relatively large amount of variation in the raw data, which means that it will be a good candidate for the x-axis in the 2-dimensional graph. However, PC2 is not much different from PC3 or PC4, which doesn't bode well for dimension reduction.
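# One way to put a number on what the scree plot shows is the cumulative explained variance. The sketch below computes it and counts how many components are needed to reach a threshold; the random toy matrix and the 90% threshold are assumptions chosen only for illustration.

```python
import numpy as np
from sklearn.decomposition import PCA

# Toy data (hypothetical): 100 rows, 5 features, two of them strongly correlated
rng = np.random.default_rng(0)
X_toy = rng.normal(size=(100, 5))
X_toy[:, 1] = X_toy[:, 0] * 2 + rng.normal(scale=0.1, size=100)

pca_toy = PCA().fit(X_toy)

# Running total of the per-component variance ratios
cumulative = np.cumsum(pca_toy.explained_variance_ratio_)

# Smallest number of components whose cumulative ratio reaches 90%
n_for_90 = int(np.searchsorted(cumulative, 0.90) + 1)

print(np.round(cumulative, 3), n_for_90)
```

# If the first two components cover only a small share of the total, a 2-dimensional decision surface is a rough picture of the full model rather than a faithful one.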

train_pc1_coords = X_train_pca[:, 0]

train_pc2_coords = X_train_pca[:, 1]

pca_train_scaled = scale(np.column_stack((train_pc1_coords, train_pc2_coords)))

param_grid = [

{

"C": [1, 10, 100, 1000],

"gamma": ["scale", 1, 0.1, 0.01, 0.001, 0.0001],

"kernel": ["rbf"],

},

]

optimal_params = GridSearchCV(SVC(), param_grid, cv=5, scoring="accuracy", verbose=0)

optimal_params.fit(pca_train_scaled, y_train)

print(optimal_params.best_params_)

clf_svm = SVC(C=10, gamma=0.1)

clf_svm.fit(pca_train_scaled, y_train)

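# Note: despite the variable name, this projects the training data, which is what the plot below draws (colored by y_train).

X_test_pca = pca.transform(X_train_scaled)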

test_pc1_coords = X_test_pca[:, 0]

test_pc2_coords = X_test_pca[:, 1]

x_min = test_pc1_coords.min() - 1

x_max = test_pc1_coords.max() + 1

y_min = test_pc2_coords.min() - 1

y_max = test_pc2_coords.max() + 1

xx, yy = np.meshgrid(

np.arange(start=x_min, stop=x_max, step=0.1),

np.arange(start=y_min, stop=y_max, step=0.1),

)

Z = clf_svm.predict(np.column_stack((xx.ravel(), yy.ravel())))

Z = Z.reshape(xx.shape)

fig, ax = plt.subplots(figsize=(10, 10))

ax.contourf(xx, yy, Z, alpha=0.1)

cmap = colors.ListedColormap(["#e41a1c", "#4daf4a"])

scatter = ax.scatter(

test_pc1_coords,

test_pc2_coords,

c=y_train,

cmap=cmap,

s=100,

edgecolors="k",

alpha=0.7,

)

legend = ax.legend(

scatter.legend_elements()[0], scatter.legend_elements()[1], loc="upper right"

)

legend.get_texts()[0].set_text("No Default")

legend.get_texts()[1].set_text("Yes Default")

ax.set_ylabel("PC2")

ax.set_xlabel("PC1")

ax.set_title("Decision surface using the PCA transformed features")

plt.show()

|

# # 📋 Variable description

# ---

# **Customer_id** = unique customer id

# **Age** = customer's age

# **Gender** = 0: Male, 1: Female

# **Revenue_Total** = total sales by customer

# **N_Purchases** = number of purchases to date

# **Purchase_DATE** = date latest purchase, dd.mm.yy

# **Purchase_VALUE** = latest purchase in €

# **Pay_Method** = 0: Digital Wallets, 1: Card, 2: PayPal, 3: Other

# **Time_Spent** = time spent (in sec) on website

# **Browser** = 0: Chrome, 1: Safari, 2: Edge, 3: Other

# **Newsletter** = 0: not subscribed, 1: subscribed

# **Voucher** = 0: not used, 1: used

# # 📚 Imports

# ---

import pandas as pd

import numpy as np

import seaborn as sns

import matplotlib.pyplot as plt

import warnings

warnings.filterwarnings("ignore")

# # 📖 Data

# ---

df = pd.read_csv(

"/kaggle/input/online-shop-customer-sales-data/Online Shop Customer Sales Data.csv"

)

df.head()

df.info()

df.describe().T

# Checking null values

df.isna().sum()

# Random sampling

proportion_sample = 0.1

# Calculate the sample size based on the proportion

sample_size = int(len(df) * proportion_sample)

# Perform random sampling based on the sample size

sample = df.sample(n=sample_size, random_state=0)

# Display the sample

print(sample)

# Drop Customer_id

df.drop("Customer_id", axis=1, inplace=True)

# Splitting the date into month and year

df["Month"] = df["Purchase_DATE"].str.split(".").str[1]

df["Year"] = df["Purchase_DATE"].str.split(".").str[2]

df["Year"] = df["Year"].astype(int)

df["Month"] = df["Month"].astype(int)
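# The string splitting above works because the dates follow the dd.mm.yy pattern given in the variable description. An alternative sketch, assuming that same pattern, parses the strings into real datetimes with `pd.to_datetime` and reads the parts from the `.dt` accessor; the two sample dates are hypothetical.

```python
import pandas as pd

# Hypothetical sample values in the dd.mm.yy layout
dates = pd.Series(["01.02.21", "15.11.22"])

# Parse with an explicit format so day and month are never swapped
parsed = pd.to_datetime(dates, format="%d.%m.%y")

months = parsed.dt.month
years = parsed.dt.year

print(months.tolist(), years.tolist())
```

# Note that this yields four-digit years such as 2021, whereas the split-based code keeps the two-digit value 21.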

# Drop Purchase_DATE

df.drop("Purchase_DATE", axis=1, inplace=True)

df.head()

# # 🔎 Identifying and excluding outliers 🗑

# ---

# Create the boxplot

sns.boxplot(data=df[["Revenue_Total", "Purchase_VALUE"]], palette="cool")

# Set the title/labels

plt.title("Boxplot of Revenue Total and Purchase Value")

plt.xlabel("Features")

plt.ylabel("Values")

plt.show()

# I will use **z-score** to exclude outliers.

# The z-score is calculated by the difference between the specific value and the mean of the data set, divided by the standard deviation of the data set. The formula for calculating the z-score is as follows:

# z = (x - μ) / σ

# **where:**

# **x** is the specific value

# **μ** is the mean of the data set

# **σ** is the standard deviation of the data set
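# As a small worked example of the formula above, the sketch below computes z-scores for a toy series and keeps only the values within 3 standard deviations of the mean. The numbers are hypothetical, and the population standard deviation (`ddof=0`) is used.

```python
import pandas as pd

# Toy data (hypothetical): nineteen ordinary values and one extreme value
values = pd.Series([10.0] * 19 + [200.0])

# z = (x - mean) / standard deviation
z = (values - values.mean()) / values.std(ddof=0)

# Keep rows whose absolute z-score does not exceed the threshold
threshold = 3
kept = values[z.abs() <= threshold]

print(round(z.iloc[-1], 2), len(kept))
```

# A boolean mask like this filters by label, so it stays correct even if the index is not a plain 0..n-1 range.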

# Calculate the mean and std

mean = np.mean(df["Purchase_VALUE"])

std = np.std(df["Purchase_VALUE"])

# Calculate the Z-score

z_scores = (df["Purchase_VALUE"] - mean) / std

# Set a Z-score threshold

threshold = 3

# Identify the indices of the outliers based on the Z-score threshold

outlier_indices = np.where(np.abs(z_scores) > threshold)

# Drop the outliers

df.drop(df.index[outlier_indices], inplace=True)

# # 📊 Visualization

# ---

# # Distribution of variables

# ---

# Set the background color and figsize

plt.style.use("dark_background")

plt.figure(figsize=(15, 28))

# Adjust subplot vertical spacing

plt.subplots_adjust(hspace=0.2)

# 6x2 subplot figure

fig, axs = plt.subplots(6, 2, figsize=(15, 28))

i = 1

# Loop through dataframe columns

for feature in df.columns:

    # Create a subplot

    plt.subplot(6, 2, i)

    # Create histogram

    sns.histplot(data=df, x=feature, kde=True, palette="cool")

    i += 1

# Show the plot

plt.show()

# ## ♂️ Gender Distribution ♀️

# ---

# Set the background color and figsize

plt.style.use("dark_background")

plt.figure(figsize=(10, 6))

# Gender value count

gender_counts = df["Gender"].value_counts()

# Create the pie chart

fig, ax = plt.subplots()

ax.pie(

gender_counts,

labels=gender_counts.index,

autopct="%1.1f%%",

startangle=90,

colors=["#007fff", "#FF40B4"],

)

# Add title and show

ax.set_title("Gender Distribution")

plt.show()

# ## 👶 Age by Gender 👴

# ---

# Set the background color and figsize

plt.style.use("dark_background")

plt.figure(figsize=(10, 6))

# Create histogram

sns.histplot(x="Age", hue="Gender", multiple="stack", bins=50, data=df, palette="cool")

# Customize the title and labels

plt.title("Distribution of Age by Gender")

plt.xlabel("Age")

plt.ylabel("Count")

# Display the plot

plt.show()

# ## Revenue Total by Gender

# ---

# Set the background color and figsize

plt.style.use("dark_background")

plt.figure(figsize=(10, 6))

# Bar plot

sns.barplot(x="Gender", y="Revenue_Total", data=df, palette="cool")

# Labels/Title

plt.xlabel("Gender")

plt.ylabel("Average of Revenue Total")

plt.title("Revenue Total by Gender")

plt.show()

# ## 💵 Pay Method Distribution

# ---

# Set the background color and figsize

plt.style.use("dark_background")

plt.figure(figsize=(10, 6))

# Pay method value count

pay_method_counts = df["Pay_Method"].value_counts()

# Set colors

colors = ["#07d5f5", "#04a4bd", "#d102d1", "#ff21ff"]

# Create the pie chart

fig, ax = plt.subplots()

ax.pie(

pay_method_counts,

labels=pay_method_counts.index,

autopct="%1.1f%%",

startangle=90,

colors=colors,

)

# Add title and show

ax.set_title("Pay Method Distribution")

plt.show()

# ## 💻 Browser Distribution

# ---

# Browser value count

browser_count = df["Browser"].value_counts()

# Set colors

colors = ["#07d5f5", "#04a4bd", "#d102d1", "#ff21ff"]

# Create the pie chart

fig, ax = plt.subplots()

ax.pie(

browser_count,

labels=browser_count.index,

autopct="%1.1f%%",

startangle=90,

colors=colors,

)

# Add title and show

ax.set_title("Browser Distribution")

plt.show()

# ## 📰 Newsletter Distribution

# ---

# Newsletter value count

newsletter_count = df["Newsletter"].value_counts()

# Set colors

colors = ["#07d5f5", "#d102d1"]

# Create the pie chart

fig, ax = plt.subplots()

ax.pie(

newsletter_count,

labels=newsletter_count.index,

autopct="%1.1f%%",

startangle=90,

colors=colors,

)

# Add title and show

ax.set_title("Newsletter Distribution")

plt.show()

# ## ✔️ Voucher Distribution

# ---

# Voucher value count

voucher_count = df["Voucher"].value_counts()

# Set colors

colors = ["#07d5f5", "#d102d1"]

# Create the pie chart

fig, ax = plt.subplots()

ax.pie(

voucher_count,

labels=voucher_count.index,

autopct="%1.1f%%",

startangle=90,

colors=colors,

)

# Add title and show

ax.set_title("Voucher Distribution")

plt.show()

# ## Purchase Value by Payment Method

# ---

# Set the background color and figsize

plt.style.use("dark_background")

plt.figure(figsize=(10, 6))

# Barplot

sns.barplot(x="Pay_Method", y="Purchase_VALUE", data=df, palette="cool")

# Labels/Title

plt.xlabel("Payment Method")

plt.ylabel("Purchase Value")

plt.title("Purchase Value by Payment Method")

plt.show()

# ## Revenue Total by Number of Purchases

# ---

# Set the background color and figsize

plt.style.use("dark_background")

plt.figure(figsize=(10, 6))

# Bar plot

sns.barplot(x="N_Purchases", y="Revenue_Total", data=df, palette="cool")

# Labels/Title

plt.xlabel("N_Purchases")

plt.ylabel("Revenue Total")

plt.title("Revenue Total by Number of Purchases")

plt.show()

# ## Revenue Total by Browser

# ---

# Set the background color and figsize

plt.style.use("dark_background")

plt.figure(figsize=(10, 6))

# Bar plot

sns.barplot(x="Browser", y="Revenue_Total", data=df, palette="cool")

# Labels/Title

plt.xlabel("Browser")

plt.ylabel("Revenue Total")

plt.title("Revenue Total by Browser")

plt.show()

# ## Revenue Total by Newsletter

# ---

# Set the background color and figsize

plt.style.use("dark_background")

plt.figure(figsize=(10, 6))

# Bar plot

sns.barplot(x="Newsletter", y="Revenue_Total", data=df, palette="cool")

# Labels/Title

plt.xlabel("Newsletter")

plt.ylabel("Revenue Total")

plt.title("Revenue Total by Newsletter")

plt.show()

# ## Revenue Total by Voucher

# ---

# Set the background color and figsize

plt.style.use("dark_background")

plt.figure(figsize=(10, 6))

# Bar plot

sns.barplot(x="Voucher", y="Revenue_Total", data=df, palette="cool")

# Labels/Title

plt.xlabel("Voucher")

plt.ylabel("Revenue Total")

plt.title("Revenue Total by Voucher")

plt.show()

# ## Time Spent by Browser

# ---

# Set the background color and figsize

plt.style.use("dark_background")

plt.figure(figsize=(10, 6))

# Bar plot

sns.barplot(x="Browser", y="Time_Spent", data=df, palette="cool")

# Labels/Title

plt.xlabel("Browser")

plt.ylabel("Time Spent")

plt.title("Time Spent by Browser")

plt.show()

# ## Time Spent by Voucher

# ---

# Set the background color and figsize

plt.style.use("dark_background")

plt.figure(figsize=(10, 6))

# Bar plot

sns.barplot(x="Voucher", y="Time_Spent", data=df, palette="cool")

# Labels/Title

plt.xlabel("Voucher")

plt.ylabel("Time Spent Total")

plt.title("Time Spent by Voucher")

plt.show()

# ## Time Spent by Newsletter

# ---

# Set the background color and figsize

plt.style.use("dark_background")

plt.figure(figsize=(10, 6))

# Bar plot

sns.barplot(x="Newsletter", y="Time_Spent", data=df, palette="cool")

# Labels/Title

plt.xlabel("Newsletter")

plt.ylabel("Time Spent")

plt.title("Time Spent by Newsletter")

plt.show()

# ## Distribution of Purchase Value by Gender

# ---

# Set the background color and figsize

plt.style.use("dark_background")

plt.figure(figsize=(10, 6))

# Create the Violin plot

sns.violinplot(x="Gender", y="Purchase_VALUE", data=df, palette="cool")

# Set the axis and title

plt.title("Distribution of Purchase Value by Gender")

plt.xlabel("Gender")

plt.ylabel("Purchase Value")

plt.show()

# ## Distribution of Revenue Total by Gender

# ---

# Set the background color and figsize

plt.style.use("dark_background")

plt.figure(figsize=(10, 6))

# Create the Violin plot

sns.violinplot(x="Gender", y="Revenue_Total", data=df, palette="cool")

# Set the axis and title

plt.title("Distribution of Revenue Total by Gender")

plt.xlabel("Gender")

plt.ylabel("Revenue Total")

plt.show()

# ## Purchase Value by Age

# ---

# Set the background color and figsize

plt.style.use("dark_background")

plt.figure(figsize=(10, 6))

# Bar plot

sns.barplot(x="Age", y="Purchase_VALUE", data=df, palette="cool")

# Labels/Title

plt.xlabel("Age")

plt.ylabel("Purchase Value")

plt.title("Purchase Value by Age")

plt.show()

# ## Revenue Total by Age

# ---

# Set the background color and figsize

plt.style.use("dark_background")

plt.figure(figsize=(10, 6))

# Bar plot

sns.barplot(x="Age", y="Revenue_Total", data=df, palette="cool")

# Labels/Title

plt.xlabel("Age")

plt.ylabel("Revenue Total")

plt.title("Revenue Total by Age")

plt.show()

# ## Monthly Revenue Analysis

# ---

# Set the background color and figsize

plt.style.use("dark_background")

plt.figure(figsize=(10, 6))

# Grouping

grouped_df = df.groupby("Month").sum()[["Revenue_Total", "N_Purchases"]]

# Sorting the dataframe by 'Revenue_Total'

sorted_df = grouped_df.sort_values(by="Revenue_Total", ascending=False)

# Creating a bar chart

sns.barplot(x=sorted_df.index, y="Revenue_Total", data=sorted_df, palette="cool")

# Set labels/title

plt.xlabel("Month")

plt.ylabel("Revenue Total")

plt.title("Monthly Revenue Analysis")

plt.show()

# ## Revenue Total vs Time Spent

# ---

# Set the background color and figsize

plt.style.use("dark_background")

plt.figure(figsize=(10, 6))

# Set line color

color = "#FF40B4"

# Create the scatter plot

plt.scatter(df["Revenue_Total"], df["Time_Spent"], color=color, alpha=0.1)

# Set the axis labels

plt.xlabel("Revenue_Total")

plt.ylabel("Time_Spent")

# Set the title

plt.title("Revenue Total vs Time Spent")

plt.show()

# ## Purchase Value vs Time Spent

# ---

# Set the background color and figsize

plt.style.use("dark_background")

plt.figure(figsize=(10, 6))

# Set line color

color = "#FF40B4"

# Create the scatter plot

plt.scatter(df["Purchase_VALUE"], df["Time_Spent"], color=color, alpha=0.1)

# Set the axis labels

plt.xlabel("Purchase_VALUE")

plt.ylabel("Time_Spent")

# Set the title

plt.title("Purchase Value vs Time Spent")

plt.show()

# ## Purchase Value by Month

# ---

# Set the background color and figsize

plt.style.use("dark_background")

plt.figure(figsize=(10, 6))

# Set line color

line_color = "#FF00B4"

# Group the data by 'Month' and the sum of 'Purchase_VALUE'

monthly_purchase_value = df.groupby("Month")["Purchase_VALUE"].sum()

# Create a line plot

plt.plot(monthly_purchase_value.index, monthly_purchase_value.values, color=line_color)

# Set the axis labels

plt.xlabel("Month")

plt.ylabel("Purchase_VALUE")

# Set the grid color

plt.grid(color="lightgray", alpha=0.3)

# Set the title

plt.title("Purchase Value by Month")

plt.show()

# ## Revenue Total by Month

# ---

# Set the background color and figsize

plt.style.use("dark_background")

plt.figure(figsize=(10, 6))

# Set line color

line_color = "#FF00B4"

# Group the data by 'Month' and the sum of 'Revenue_Total'

monthly_revenue_total = df.groupby("Month")["Revenue_Total"].sum()

# Create a line plot

plt.plot(monthly_revenue_total.index, monthly_revenue_total.values, color=line_color)

# Set the axis labels

plt.xlabel("Month")

plt.ylabel("Revenue_Total")

# Set the grid color

plt.grid(color="lightgray", alpha=0.3)

# Set the title

plt.title("Revenue Total by Month")

plt.show()

# ## Time Spent by Month

# ---

# Set the background color and figsize

plt.style.use("dark_background")

plt.figure(figsize=(10, 6))

# Set line color

line_color = "#FF00B4"

# Group the data by 'Month' and the sum of 'Time_Spent'

monthly_purchase_value = df.groupby("Month")["Time_Spent"].sum()

# Create a line plot

plt.plot(monthly_purchase_value.index, monthly_purchase_value.values, color=line_color)

# Set the axis labels

plt.xlabel("Month")

plt.ylabel("Time_Spent")

# Set the grid color

plt.grid(color="lightgray", alpha=0.3)

# Set the title

plt.title("Time Spent by Month")

plt.show()

|

# **Load the important required libraries**

import pandas as pd

import numpy as np

import seaborn as sns

import matplotlib.pyplot as plt

import warnings

warnings.filterwarnings("ignore")

# **Let's Load the dataset**

fifa18 = pd.read_csv("../input/fifa-worldcup-2018/2018_worldcup_v3.csv")

# ### **Data Analysis On Fifa World Cup 2018 Data Set**

# **Checking first 5 and last 5 records from the datasets**

fifa18.head(5)

fifa18.tail(5)

# **Let's check the duplicate data in data set**

fifa18.duplicated().sum()

fifa18.shape

fifa18.info()

# **So, there are 64 records in 14 columns. Also, there are no null records and no duplicate values.**

# **Let's extract hour from datetime and add it to the new column.**

fifa18["Hour"] = fifa18.Datetime.apply(lambda x: x.split(" - ")[1])

fifa18.Datetime = fifa18.Datetime.apply(lambda x: x.split(" - ")[0])

fifa18.head()

# **Let's add total goals from home and away goals.**

fifa18["Total_Goals"] = fifa18["Home Team Goals"] + fifa18["Away Team Goals"]

fifa18.head()

fifa18.rename(

columns={

"Home Team Name": "Home_Team",

"Away Team Name": "Away_Team",

"Home Team Goals": "Home_Team_Goals",

"Away Team Goals": "Away_Team_Goals",

},

inplace=True,

)

fifa18.head()

# ### **Exploratory Data Analysis - EDA**

fifa18["City"].value_counts().sort_index()

plt.figure(figsize=(10, 5))

plt.title("Number of Matches held in each Russian City", fontsize=14)

plt.xlabel("City", fontsize=12)

plt.ylabel("Count", fontsize=12)

plt.xticks(rotation=90, fontsize=12)

plt.yticks(fontsize=12)

sns.countplot(x="City", data=fifa18, palette="rocket")

# **From the table and plot above, we can observe that most of the matches were held in Moscow.**

fifa18["Hour"].value_counts().sort_index()

plt.figure(figsize=(10, 5))

plt.title("Number of Matches held in each Hour", fontsize=14)

plt.xlabel("Hour", fontsize=12)

plt.ylabel("Count", fontsize=12)

plt.xticks(rotation=90, fontsize=12)

plt.yticks(fontsize=12)

sns.countplot(x="Hour", data=fifa18, palette="mako")

# **From the table and plot above, we can observe that most of the matches kicked off at 21:00.**

fifa18["Stadium"].value_counts().sort_index()

plt.figure(figsize=(10, 5))

plt.title("Number of Matches held in each Stadium", fontsize=14)

plt.xlabel("Stadiums", fontsize=12)

plt.ylabel("Count", fontsize=12)

plt.xticks(rotation=90, fontsize=12)

plt.yticks(fontsize=12)

sns.countplot(x="Stadium", data=fifa18, palette="Greens_d")

# **From the table and plot above, we can observe that most matches were held in Luzhniki Stadium and Saint Petersburg Stadium, with 7 matches each.**

goals_by_day = fifa18.groupby("Datetime").sum().Total_Goals.to_frame().reset_index()

goals_by_day.columns = ["Datetime", "Total Goals By Day"]

goals_by_day = goals_by_day.sort_values("Datetime", ascending=False)

goals_by_day

plt.figure(figsize=(12, 8))

sns.barplot(

y=goals_by_day["Datetime"],

x=goals_by_day["Total Goals By Day"],

palette="twilight",

orient="h",

)

plt.title("No of goals scored each day", fontsize=15)

plt.xlabel("Goals")

plt.ylabel("Date")

plt.xticks(fontsize=12)

plt.yticks(fontsize=12)

# **From the table and plot above, we can observe that the most goals were scored on 24th June 2018, whereas the fewest goals were scored on 10th July 2018.**

# **Also, the number of matches per day differs between the group stage, quarter-finals and semi-finals, so we also have to look at the number of matches held on each day.**

fifa18["Datetime"].value_counts().sort_index()

plt.figure(figsize=(10, 5))

plt.title("Number of Matches held in each Day", fontsize=14)

plt.xlabel("Days", fontsize=12)

plt.ylabel("Count", fontsize=12)

plt.xticks(rotation=90, fontsize=12)

plt.yticks(fontsize=12)

sns.countplot(x="Datetime", data=fifa18, palette="cool_d")

# **From above table and plot, we can observe that there were 4 days when 4 matches were held in one single day**

# **Now, let's work on the total goals per team, both home and away.**

goals_by_home = (

fifa18.groupby("Home_Team")

.sum()[["Home_Team_Goals", "Away_Team_Goals"]]

.reset_index()

)

goals_by_away = (

fifa18.groupby("Away_Team")

.sum()[["Home_Team_Goals", "Away_Team_Goals"]]

.reset_index()

)

goals_total = pd.concat([goals_by_home, goals_by_away], axis=1)

goals_total.columns = [

"Home_Team",

"Home_Scored",

"Home_Conceded",

"Away_Team",

"Away_Conceded",

"Away_Scored",

]

goals_total["Scored"] = goals_total.Home_Scored + goals_total.Away_Scored

goals_total["Conceded"] = goals_total.Home_Conceded + goals_total.Away_Conceded

goals_total = goals_total.drop(

["Home_Scored", "Home_Conceded", "Away_Team", "Away_Scored", "Away_Conceded"],

axis=1,

)

goals_total

goals_total["Goal_Diff"] = goals_total.Scored - goals_total.Conceded

goals_total = goals_total.rename(columns={"Home_Team": "Team_Name"})

goals_total
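# The side-by-side `pd.concat(..., axis=1)` above lines up home and away totals by row position, which is only correct if both groupings list the same teams in the same order. The sketch below shows an alternative that aligns on the team name instead; the two-match toy table is hypothetical and only reuses the column names from this notebook.

```python
import pandas as pd

# Toy fixtures (hypothetical): A beats B 2-1 at home, then wins 3-0 away
matches = pd.DataFrame(
    {
        "Home_Team": ["A", "B"],
        "Away_Team": ["B", "A"],
        "Home_Team_Goals": [2, 0],
        "Away_Team_Goals": [1, 3],
    }
)

# Goals scored and conceded while playing at home
home = matches.groupby("Home_Team")[["Home_Team_Goals", "Away_Team_Goals"]].sum()
home.columns = ["Scored", "Conceded"]

# Goals scored and conceded while playing away
away = matches.groupby("Away_Team")[["Away_Team_Goals", "Home_Team_Goals"]].sum()
away.columns = ["Scored", "Conceded"]

# Add the two tables, matching rows by team name; missing teams count as 0
totals = home.add(away, fill_value=0).astype(int)
totals["Goal_Diff"] = totals["Scored"] - totals["Conceded"]

print(totals)
```

# Aligning on the index also covers the case where a team appears only as a home side or only as an away side.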

goals_total = goals_total.sort_values("Scored", ascending=False)

plt.figure(figsize=(12, 8))

sns.barplot(x=goals_total["Team_Name"], y=goals_total["Scored"], palette="coolwarm")

plt.title("No of goals scored by each teams", fontsize=15)

plt.xlabel("Teams")

plt.ylabel("No of Goals")

plt.xticks(rotation=90, fontsize=12)

plt.yticks(fontsize=12)

goals_total = goals_total.sort_values("Conceded", ascending=False)

plt.figure(figsize=(12, 8))

sns.barplot(x=goals_total["Team_Name"], y=goals_total["Conceded"], palette="coolwarm")

plt.title("No of goals conceded by each teams", fontsize=15)

plt.xlabel("Teams")

plt.ylabel("No of Goals")

plt.xticks(rotation=90, fontsize=12)

plt.yticks(fontsize=12)

plt.figure(figsize=(12, 8))

sns.barplot(x=fifa18["City"], y=fifa18["Home_Team_Goals"], palette="inferno")

plt.title("No of goals scored by Home Team in each city", fontsize=15)

plt.xlabel("City")

plt.ylabel("No of Goals")

plt.xticks(rotation=90, fontsize=12)

plt.yticks(fontsize=12)

plt.figure(figsize=(12, 8))

sns.barplot(x=fifa18["City"], y=fifa18["Away_Team_Goals"], palette="inferno")

plt.title("No of goals scored by Away Team in each city", fontsize=15)

plt.xlabel("City")

plt.ylabel("No of Goals")

plt.xticks(rotation=90, fontsize=12)

plt.yticks(fontsize=12)

plt.figure(figsize=(12, 8))

sns.barplot(x=fifa18["Stadium"], y=fifa18["Home_Team_Goals"], palette="flare")

plt.title("No of goals scored by Home Team in each stadium", fontsize=15)

plt.xlabel("Stadium")

plt.ylabel("No of Goals")

plt.xticks(rotation=90, fontsize=12)

plt.yticks(fontsize=12)

plt.figure(figsize=(12, 8))

sns.barplot(x=fifa18["Stadium"], y=fifa18["Away_Team_Goals"], palette="flare")

plt.title("No of goals scored by Away Team in each stadium", fontsize=15)

plt.xlabel("Stadium")

plt.ylabel("No of Goals")

plt.xticks(rotation=90, fontsize=12)

plt.yticks(fontsize=12)

|

import tensorflow as tf

from tensorflow.keras import models, layers

import matplotlib.pyplot as plt

import numpy as np

import pathlib

import os

os.environ["TF_CPP_MIN_LOG_LEVEL"] = "2" # hide TensorFlow INFO and WARNING messages

# Global initialization of some important variables

IMAGE_SIZE = 256

BATCH_SIZE = 32

CHANNELS = 3

EPOCHS = 50

train = tf.keras.preprocessing.image_dataset_from_directory(

"/kaggle/input/tomato/Tomato_images/train",

shuffle=True,

image_size=(IMAGE_SIZE, IMAGE_SIZE),

batch_size=BATCH_SIZE,

)

val = tf.keras.preprocessing.image_dataset_from_directory(

"/kaggle/input/tomato/Tomato_images/val",

shuffle=True,

image_size=(IMAGE_SIZE, IMAGE_SIZE),

batch_size=BATCH_SIZE,

)

test = tf.keras.preprocessing.image_dataset_from_directory(

"/kaggle/input/tomato/Tomato_images/test",

shuffle=True,

image_size=(IMAGE_SIZE, IMAGE_SIZE),

batch_size=BATCH_SIZE,

)

class_names = train.class_names

class_names

# Number of batches = (total number of files belonging to all classes / BATCH_SIZE), rounded up

len(train)
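# As a quick check of the batch-count arithmetic, the sketch below rounds the division up, on the assumption that the last, partially filled batch is kept. The helper name `n_batches` and the file count of 1300 are hypothetical.

```python
import math


def n_batches(n_files, batch_size):
    """Number of batches needed to cover n_files, counting a final partial batch."""
    return math.ceil(n_files / batch_size)


# 1300 files in batches of 32: 40 full batches plus one batch of 20
print(n_batches(1300, 32))
```

# Comparing this value with `len(train)` is a simple sanity check that every image was picked up from the directory.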

# Printing the dataset shows its element spec: the first element is a batch of images and the second is the batch of integer class labels (indices into class_names).

print(train)

for image_batch, labels_batch in train.take(1):

    print(image_batch.shape)

    print(labels_batch.numpy())

plt.figure(figsize=(20, 10))

for image_batch, labels_batch in train.take(1):

    for i in range(15):

        ax = plt.subplot(3, 5, i + 1)

        plt.imshow(image_batch[i].numpy().astype("uint8"))

        plt.title(class_names[labels_batch[i]])

        plt.axis("off")

train.take(41)

train = train.cache().shuffle(1000).prefetch(buffer_size=tf.data.AUTOTUNE)

val = val.cache().shuffle(1000).prefetch(buffer_size=tf.data.AUTOTUNE)

test = test.cache().shuffle(1000).prefetch(buffer_size=tf.data.AUTOTUNE)

resize_and_rescale = tf.keras.Sequential(

[

layers.experimental.preprocessing.Resizing(IMAGE_SIZE, IMAGE_SIZE),

layers.experimental.preprocessing.Rescaling(1.0 / 255),

]

)

data_augmentation = tf.keras.Sequential(

[

layers.experimental.preprocessing.RandomFlip("horizontal_and_vertical"),

layers.experimental.preprocessing.RandomRotation(0.2),

]

)

input_shape = (BATCH_SIZE, IMAGE_SIZE, IMAGE_SIZE, CHANNELS)

n_classes = 3

model = models.Sequential(

[

resize_and_rescale,

data_augmentation,

layers.Conv2D(

32, kernel_size=(3, 3), activation="relu", input_shape=input_shape

),

layers.MaxPooling2D((2, 2)),

layers.Conv2D(64, kernel_size=(3, 3), activation="relu"),

layers.MaxPooling2D((2, 2)),

layers.Conv2D(64, kernel_size=(3, 3), activation="relu"),

layers.MaxPooling2D((2, 2)),

layers.Conv2D(64, (3, 3), activation="relu"),

layers.MaxPooling2D((2, 2)),

layers.Conv2D(64, (3, 3), activation="relu"),

layers.MaxPooling2D((2, 2)),

layers.Conv2D(32, (3, 3), activation="relu"),

layers.MaxPooling2D((2, 2)),

layers.Flatten(),

layers.Dense(32, activation="relu"),

layers.Dense(n_classes, activation="softmax"),

]

)

model.build(input_shape=input_shape)

model.summary()

model.compile(

optimizer="adam",

loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=False),

metrics=["accuracy"],

)

history = model.fit(

train, batch_size=BATCH_SIZE, validation_data=val, verbose=1, epochs=50

)

scores = model.evaluate(test)

scores

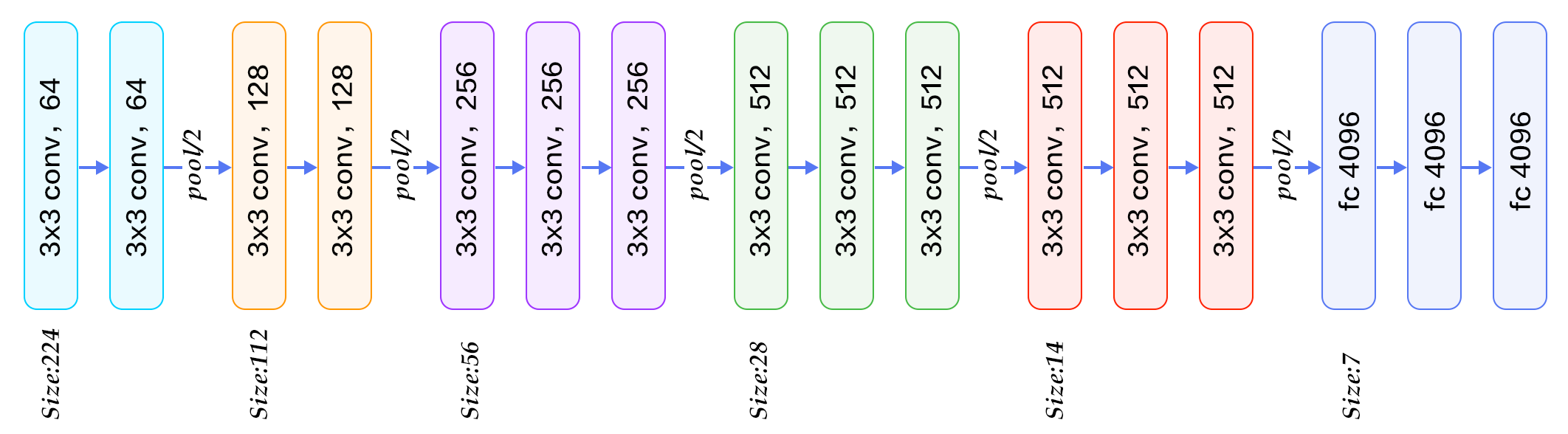

# # VGG16

from tensorflow.keras.applications.vgg16 import VGG16

input_shape = (IMAGE_SIZE, IMAGE_SIZE, CHANNELS)

vgg16 = VGG16(input_shape=input_shape, weights="imagenet", include_top=False)

VGG_16 = models.Sequential(

[

resize_and_rescale,

data_augmentation,

vgg16,

layers.Flatten(),

# layers.Dense(64, activation='relu'),

layers.Dense(n_classes, activation="softmax"),

]

)

VGG_16.compile(

optimizer="adam",

loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=False),

metrics=["accuracy"],

)

history = VGG_16.fit(

train, batch_size=BATCH_SIZE, validation_data=val, verbose=1, epochs=10

)

scores = VGG_16.evaluate(test)

scores

# # VGG19

from tensorflow.keras.applications.vgg19 import VGG19

input_shape = (IMAGE_SIZE, IMAGE_SIZE, CHANNELS)

vgg19 = VGG19(input_shape=input_shape, weights="imagenet", include_top=False)

VGG_19 = models.Sequential(

[

resize_and_rescale,

data_augmentation,

vgg19,

layers.Flatten(),

# layers.Dense(64, activation='relu'),

layers.Dense(n_classes, activation="softmax"),

]

)

VGG_19.compile(

optimizer="adam",

loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=False),

metrics=["accuracy"],

)

history = VGG_19.fit(

train, batch_size=BATCH_SIZE, validation_data=val, verbose=1, epochs=10

)

scores = VGG_19.evaluate(test)

scores

# # VGG21

VGG_21 = models.Sequential(

[

resize_and_rescale,

data_augmentation,

vgg19,

layers.Conv2D(512, kernel_size=(3, 3), activation="relu"),

layers.MaxPooling2D(pool_size=(2, 2), strides=(2, 2)),

layers.Flatten(),

layers.Dense(64, activation="relu"),

layers.Dense(n_classes, activation="softmax"),

]

)

VGG_21.compile(

optimizer="adam",

loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=False),

metrics=["accuracy"],

)

history = VGG_21.fit(

train, batch_size=BATCH_SIZE, validation_data=val, verbose=1, epochs=10

)

VGG_21.summary()

scores = VGG_21.evaluate(test)

scores

train_loss = history.history["loss"]

train_acc = history.history["accuracy"]

val_loss = history.history["val_loss"]

val_acc = history.history["val_accuracy"]

# Accuracy and loss curves for training and validation data (history holds the most recent fit, i.e. VGG_21)

plt.figure(figsize=(15, 15))

plt.subplot(2, 3, 1)

plt.plot(range(10), train_acc, label="Training Accuracy")

plt.plot(range(10), val_acc, label="Validation Accuracy")

plt.legend(loc="lower right")

plt.title("Training and Validation Accuracy")

plt.subplot(2, 3, 2)

plt.plot(range(10), train_loss, label="Training Loss")

plt.plot(range(10), val_loss, label="Validation Loss")

plt.legend(loc="upper right")

plt.title("Training and Validation Loss")
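# The curves above are drawn against the epoch index. A small sketch, in plain Python, of deriving that epoch axis from the history itself and locating the epoch with the lowest validation loss. The helper name summarize_history and the numbers in fake_history are made up for illustration; in the notebook the dictionary would be history.history.

```python
def summarize_history(history_dict):
    """Return the 1-based epoch axis and the epoch with the lowest validation loss."""
    epochs = range(1, len(history_dict["loss"]) + 1)
    val_loss = history_dict["val_loss"]
    best_epoch = min(epochs, key=lambda e: val_loss[e - 1])
    return epochs, best_epoch


# Stand-in for history.history (values are invented).
fake_history = {
    "loss": [1.0, 0.6, 0.4, 0.3],
    "val_loss": [0.9, 0.7, 0.5, 0.6],
}
epochs, best_epoch = summarize_history(fake_history)
print(list(epochs), best_epoch)
```

# Taking the axis length from the recorded losses keeps the plot valid if the number of epochs passed to fit changes.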

# # VGG14

input_shape = (BATCH_SIZE, IMAGE_SIZE, IMAGE_SIZE, CHANNELS)

n_classes = 3

VGG_14 = models.Sequential(

[

resize_and_rescale,

data_augmentation,

# Block1

layers.Conv2D(

64,

kernel_size=(3, 3),

activation="relu",

padding="same",

name="block1_conv1",

),

layers.Conv2D(

64,

kernel_size=(3, 3),

activation="relu",

padding="same",

name="block1_conv2",

),

layers.MaxPooling2D(pool_size=(2, 2), strides=(2, 2), name="block1_pool"),

# Block2

layers.Conv2D(

128,

kernel_size=(3, 3),

activation="relu",

padding="same",

name="block2_conv1",

),

layers.Conv2D(

128,

kernel_size=(3, 3),

activation="relu",

padding="same",

name="block2_conv2",

),

layers.MaxPooling2D(pool_size=(2, 2), strides=(2, 2), name="block2_pool"),

# Block3

layers.Conv2D(

256,

kernel_size=(3, 3),

activation="relu",

padding="same",

name="block3_conv1",

),

layers.Conv2D(

256,

kernel_size=(3, 3),

activation="relu",

padding="same",

name="block3_conv2",

),

layers.Conv2D(

256,

kernel_size=(3, 3),

activation="relu",

padding="same",

name="block3_conv3",

),

layers.MaxPooling2D(pool_size=(2, 2), strides=(2, 2), name="block3_pool"),

# Block4

layers.Conv2D(

512,

kernel_size=(3, 3),

activation="relu",

padding="same",

name="block4_conv1",

),

layers.Conv2D(

512,

kernel_size=(3, 3),

activation="relu",

padding="same",

name="block4_conv2",

),

layers.Conv2D(

512,

kernel_size=(3, 3),

activation="relu",

padding="same",

name="block4_conv3",

),

layers.MaxPooling2D(pool_size=(2, 2), strides=(2, 2), name="block4_pool"),

layers.Flatten(),

layers.Dense(64, activation="relu"),

layers.Dense(n_classes, activation="softmax"),

]

)

VGG_14.build(input_shape=input_shape)

VGG_14.summary()
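# As a cross-check on the summary above, the feature-map sizes and parameter count of VGG_14 can be worked out by hand: each 3x3 "same" convolution keeps height and width, each 2x2 pooling with stride 2 halves them, and a convolution has 3*3*in_channels*filters weights plus one bias per filter. The sketch below assumes IMAGE_SIZE = 256 and CHANNELS = 3 (both are defined outside this section, so these values are guesses) and that the resize/rescale and augmentation layers add no trainable parameters.

```python
IMAGE_SIZE = 256  # assumed; the real value is set earlier in the notebook
CHANNELS = 3  # assumed RGB input
N_CLASSES = 3  # matches n_classes above

# (number of 3x3 "same" conv layers, filters) for each block of VGG_14
BLOCKS = [(2, 64), (2, 128), (3, 256), (3, 512)]


def vgg14_shapes_and_params(size, channels, n_classes, dense_units=64):
    """Return (final feature-map side, flattened units, total parameters)."""
    params = 0
    in_ch = channels
    for n_convs, filters in BLOCKS:
        for _ in range(n_convs):
            params += 3 * 3 * in_ch * filters + filters  # kernel weights + biases
            in_ch = filters
        size //= 2  # 2x2 max pooling with stride 2 halves height and width
    flat = size * size * in_ch
    params += flat * dense_units + dense_units  # Dense(64)
    params += dense_units * n_classes + n_classes  # Dense(n_classes)
    return size, flat, params


final_size, flat_units, total_params = vgg14_shapes_and_params(
    IMAGE_SIZE, CHANNELS, N_CLASSES
)
print(final_size, flat_units, total_params)
```

# Under those assumed values the flattened vector has 16 * 16 * 512 = 131072 units, so the first Dense layer alone holds more than half of the parameters.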

VGG_14.compile(

optimizer="adam",

loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=False),

metrics=["accuracy"],

)

history = VGG_14.fit(

train, batch_size=BATCH_SIZE, validation_data=val, verbose=1, epochs=10

)

scores = VGG_14.evaluate(test)

scores

|

import numpy as np # linear algebra

import pandas as pd # data processing, CSV file I/O (e.g. pd.read_csv)

# Input data files are available in the read-only "../input/" directory

# For example, running this (by clicking run or pressing Shift+Enter) will list all files under the input directory

import os

for dirname, _, filenames in os.walk("/kaggle/input"):

    for filename in filenames:

        print(os.path.join(dirname, filename))

# You can write up to 20GB to the current directory (/kaggle/working/) that gets preserved as output when you create a version using "Save & Run All"

# You can also write temporary files to /kaggle/temp/, but they won't be saved outside of the current session

data = pd.read_csv("../input/resume-dataset/UpdatedResumeDataSet.csv")

data.head()

# List the unique categories and count the rows in each

print(data["Category"].unique())

print("")

print("Number of data points in each category")

print(data["Category"].value_counts())

# Bar chart of the 25 most frequent categories

data["Category"].value_counts(sort=True).nlargest(25).plot.bar()

# # remove empty documents from the dataframe

# empty_doc = []

# for i in range(len(data)):

#     if(len(data['Resume'][i]) <=10):

#         empty_doc.append(i)

# print(empty_doc)

# data = data.drop(empty_doc)

# Next, clean the text in the "Resume" column using standard preprocessing steps.

import re

import string
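# A minimal sketch of what such cleaning might look like, using only re and string. The function name clean_resume_text, the specific patterns, and the sample sentence are hypothetical choices for illustration; the steps actually applied to the "Resume" column may differ.

```python
import re
import string


def clean_resume_text(text):
    """Hypothetical cleaner: drop URLs, mentions/hashtags, non-ASCII, punctuation, extra spaces."""
    text = re.sub(r"http\S+", " ", text)  # URLs
    text = re.sub(r"[@#]\S+", " ", text)  # mentions and hashtags
    text = re.sub(r"[^\x00-\x7f]", " ", text)  # non-ASCII characters
    # Replace every punctuation character with a space.
    table = str.maketrans(string.punctuation, " " * len(string.punctuation))
    text = text.translate(table)
    text = re.sub(r"\s+", " ", text)  # collapse runs of whitespace
    return text.strip().lower()


sample = "Skills: Python, SQL!  See http://example.com #hiring"
cleaned = clean_resume_text(sample)
print(cleaned)
```

# On a DataFrame this could be applied with data["Resume"].apply(clean_resume_text), assuming the column holds plain strings.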