script

stringlengths 113

767k

|

|---|

# for basic mathematics operation

import numpy as np

import pandas as pd

from pandas import plotting

# for visualizations

import matplotlib.pyplot as plt

import seaborn as sns

plt.style.use("fivethirtyeight")

# for interactive visualizations

import plotly.offline as py

from plotly.offline import init_notebook_mode, iplot

import plotly.graph_objs as go

from plotly import tools

init_notebook_mode(connected=True)

import plotly.figure_factory as ff

# for path

import os

for dirname, _, filenames in os.walk("\kaggle\input"):

for filename in filenames:

print(os.path.join(dirname, filename))

# print(os.listdir('kaggle\input'))

# Connecting to the house sales data present in the kaggle input directory.

os.chdir(r"/kaggle/input/telco-customer-churn")

data = pd.read_csv("WA_Fn-UseC_-Telco-Customer-Churn.csv")

data_1 = ff.create_table(data.head())

data.head(10)

desc = ff.create_table(data.describe())

py.iplot(desc)

data.isnull().any().any()

plt.rcParams["figure.figsize"] = (15, 10)

plotting.andrews_curves(data.drop("tenure", axis=1), "gender")

plt.title("Andrew Curves for Gender", fontsize=20)

plt.show()

import warnings

warnings.filterwarnings("ignore")

plt.rcParams["figure.figsize"] = (18, 8)

plt.subplot(1, 2, 1)

sns.set(style="whitegrid")

sns.distplot(data["Annual Income (k$)"])

plt.title("Distribution of Annual Income", fontsize=20)

plt.xlabel("Range of Annual Income")

plt.ylabel("Count")

plt.subplot(1, 2, 2)

sns.set(style="whitegrid")

sns.distplot(data["Age"], color="red")

plt.title("Distribution of Age", fontsize=20)

plt.xlabel("Range of Age")

plt.ylabel("Count")

plt.show()

labels = ["Female", "Male"]

size = data["gender"].value_counts()

colors = ["lightgreen", "orange"]

explode = [0, 0.1]

plt.rcParams["figure.figsize"] = (9, 9)

plt.pie(

size, colors=colors, explode=explode, labels=labels, shadow=True, autopct="%.2f%%"

)

plt.title("gender", fontsize=20)

plt.axis("off")

plt.legend()

plt.show()

plt.rcParams["figure.figsize"] = (15, 8)

sns.countplot(data["tenure"], palette="hsv")

plt.title("Distribution of Age", fontsize=20)

plt.show()

plt.rcParams["figure.figsize"] = (20, 8)

sns.countplot(data["MonthlyCharges"], palette="rainbow")

plt.title("Distribution of Annual Income", fontsize=20)

plt.show()

plt.rcParams["figure.figsize"] = (20, 8)

sns.countplot(data["TotalCharges"], palette="copper")

plt.title("Distribution of Spending Score", fontsize=20)

plt.show()

plt.rcParams["figure.figsize"] = (15, 8)

sns.heatmap(data.corr(), annot=True)

plt.title("Heatmap for the Data", fontsize=20)

plt.show()

# Gender vs Spendscore

plt.rcParams["figure.figsize"] = (18, 7)

sns.boxenplot(data["gender"], data["TotalCharges"], palette="Blues")

plt.title("Gender vs TotalCharges", fontsize=20)

plt.show()

plt.rcParams["figure.figsize"] = (18, 7)

sns.violinplot(data["gender"], data["MonthlyCharges"], palette="rainbow")

plt.title("Gender vs Spending Score", fontsize=20)

plt.show()

plt.rcParams["figure.figsize"] = (18, 7)

sns.stripplot(data["gender"], data["tenure"], palette="Purples", size=10)

plt.title("Gender vs Spending Score", fontsize=20)

plt.show()

x = data["MonthlyCharges"]

y = data["tenure"]

z = data["TotalCharges"]

sns.lineplot(x, y, color="blue")

sns.lineplot(x, z, color="pink")

plt.title("Annual Income vs Age and Spending Score", fontsize=20)

plt.show()

x = data.iloc[:, [3, 4]].values

# let's check the shape of x

print(x.shape)

from sklearn.cluster import KMeans

wcss = []

for i in range(1, 11):

km = KMeans(n_clusters=i, init="k-means++", max_iter=300, n_init=10, random_state=0)

km.fit(x)

wcss.append(km.inertia_)

plt.plot(range(1, 11), wcss)

plt.title("The Elbow Method", fontsize=20)

plt.xlabel("No. of Clusters")

plt.ylabel("wcss")

plt.show()

km = KMeans(n_clusters=5, init="k-means++", max_iter=300, n_init=10, random_state=0)

y_means = km.fit_predict(x)

plt.scatter(x[y_means == 0, 0], x[y_means == 0, 1], s=100, c="pink", label="miser")

plt.scatter(x[y_means == 1, 0], x[y_means == 1, 1], s=100, c="yellow", label="general")

plt.scatter(x[y_means == 2, 0], x[y_means == 2, 1], s=100, c="cyan", label="target")

plt.scatter(

x[y_means == 3, 0], x[y_means == 3, 1], s=100, c="magenta", label="spendthrift"

)

plt.scatter(x[y_means == 4, 0], x[y_means == 4, 1], s=100, c="orange", label="careful")

plt.scatter(

km.cluster_centers_[:, 0],

km.cluster_centers_[:, 1],

s=200,

c="blue",

label="centeroid",

)

plt.style.use("fivethirtyeight")

plt.title("K Means Clustering", fontsize=20)

plt.xlabel("TotalCharges")

plt.ylabel("tenure")

plt.legend()

plt.grid()

plt.show()

import scipy.cluster.hierarchy as sch

dendrogram = sch.dendrogram(sch.linkage(x, method="ward"))

plt.title("Dendrogam", fontsize=20)

plt.xlabel("Customers")

plt.ylabel("Ecuclidean Distance")

plt.show()

from sklearn.cluster import AgglomerativeClustering

hc = AgglomerativeClustering(n_clusters=5, affinity="euclidean", linkage="ward")

y_hc = hc.fit_predict(x)

plt.scatter(x[y_hc == 0, 0], x[y_hc == 0, 1], s=100, c="pink", label="miser")

plt.scatter(x[y_hc == 1, 0], x[y_hc == 1, 1], s=100, c="yellow", label="general")

plt.scatter(x[y_hc == 2, 0], x[y_hc == 2, 1], s=100, c="cyan", label="target")

plt.scatter(x[y_hc == 3, 0], x[y_hc == 3, 1], s=100, c="magenta", label="spendthrift")

plt.scatter(x[y_hc == 4, 0], x[y_hc == 4, 1], s=100, c="orange", label="careful")

plt.scatter(

km.cluster_centers_[:, 0],

km.cluster_centers_[:, 1],

s=50,

c="blue",

label="centeroid",

)

plt.style.use("fivethirtyeight")

plt.title("Hierarchial Clustering", fontsize=20)

plt.xlabel("TotalCharges")

plt.ylabel("tenure")

plt.legend()

plt.grid()

plt.show()

x = data.iloc[:, [2, 4]].values

x.shape

from sklearn.cluster import KMeans

wcss = []

for i in range(1, 11):

kmeans = KMeans(

n_clusters=i, init="k-means++", max_iter=300, n_init=10, random_state=0

)

kmeans.fit(x)

wcss.append(kmeans.inertia_)

plt.rcParams["figure.figsize"] = (15, 5)

plt.plot(range(1, 11), wcss)

plt.title("K-Means Clustering(The Elbow Method)", fontsize=20)

plt.xlabel("TotalCharges")

plt.ylabel("tenure")

plt.grid()

plt.show()

kmeans = KMeans(n_clusters=4, init="k-means++", max_iter=300, n_init=10, random_state=0)

ymeans = kmeans.fit_predict(x)

plt.rcParams["figure.figsize"] = (10, 10)

plt.title("Cluster of Ages", fontsize=30)

plt.scatter(

x[ymeans == 0, 0], x[ymeans == 0, 1], s=100, c="pink", label="Usual Customers"

)

plt.scatter(

x[ymeans == 1, 0], x[ymeans == 1, 1], s=100, c="orange", label="Priority Customers"

)

plt.scatter(

x[ymeans == 2, 0],

x[ymeans == 2, 1],

s=100,

c="lightgreen",

label="Target Customers(Young)",

)

plt.scatter(

x[ymeans == 3, 0], x[ymeans == 3, 1], s=100, c="red", label="Target Customers(Old)"

)

plt.scatter(

kmeans.cluster_centers_[:, 0], kmeans.cluster_centers_[:, 1], s=50, c="black"

)

plt.style.use("fivethirtyeight")

plt.xlabel("tenure")

plt.ylabel("TotalCharges")

plt.legend()

plt.grid()

plt.show()

x = data[["tenure", "TotalCharges", "MonthlyCharges"]].values

km = KMeans(n_clusters=5, init="k-means++", max_iter=300, n_init=10, random_state=0)

km.fit(x)

labels = km.labels_

centroids = km.cluster_centers_

data["labels"] = labels

trace1 = go.Scatter3d(

x=data["Age"],

y=data["Spending Score (1-100)"],

z=data["Annual Income (k$)"],

mode="markers",

marker=dict(

color=data["labels"],

size=10,

line=dict(color=data["labels"], width=12),

opacity=0.8,

),

)

df = [trace1]

layout = go.Layout(

title="Character vs Gender vs Alive or not",

margin=dict(l=0, r=0, b=0, t=0),

scene=dict(

xaxis=dict(title="tenure"),

yaxis=dict(title="MonthlyCharges"),

zaxis=dict(title="TotalCharges"),

),

)

fig = go.Figure(data=df, layout=layout)

py.iplot(fig)

|

import numpy as np # linear algebra

import pandas as pd

import matplotlib.pyplot as plt

import seaborn as sns

from ast import literal_eval

import json

# Input data files are available in the read-only "../input/" directory

# For example, running this (by clicking run or pressing Shift+Enter) will list all files under the input directory

import os

for dirname, _, filenames in os.walk("/kaggle/input"):

for filename in filenames:

print(os.path.join(dirname, filename))

# You can write up to 20GB to the current directory (/kaggle/working/) that gets preserved as output when you create a version using "Save & Run All"

# You can also write temporary files to /kaggle/temp/, but they won't be saved outside of the current session

# # Cleaning

# Loading csv file into a Pandas DataFrame

movies = pd.read_csv("../input/the-movies-dataset/movies_metadata.csv")

creadits = pd.read_csv("../input/the-movies-dataset/credits.csv")

keywords = pd.read_csv("../input/the-movies-dataset/keywords.csv")

movies.head()

movies.info()

# Through using the info() function, Dataframe Movies has 45466 rows and 24 columns corresponding to 24 features

# ### Check the number of null values present in each feature

movies.isnull().sum()

# Because in columns like belongs_to_collection,homepage,tagline there are too many null values, so we will proceed to delete them

# ## Handling missing value

movies = movies.drop(

[

"belongs_to_collection",

"homepage",

"tagline",

],

axis=1,

)

movies[movies["title"].isnull()]

# So there are 6 lines in the title column containing null values,We will remove them.

movies.dropna(subset=["title"], inplace=True)

# Similar to column 'production_companies' and 'spoken_language'

movies.dropna(subset=["production_companies"], axis="rows", inplace=True)

movies.dropna(subset=["spoken_languages"], axis="rows", inplace=True)

# ### converts json list to list of inputs (from the label specified with 'wanted' parameter)

# We can define this pattern with a regex, compile it and use it to find all words with that pattern in each column by applying a lambda function. If this was confusing, you can jump to the final result to see the cleaned data and compare it to the output above to see what we are trying to accomplish.

import re

regex = re.compile(r": '(.*?)'")

movies["genres"] = movies["genres"].apply(lambda x: str(x))

movies["genres"] = movies["genres"].apply(lambda x: ", ".join(regex.findall(x)))

# *Extracting relevant substring from production_companies, production_countries and spoken_languages as done with genres above.*

movies["production_companies"] = movies["production_companies"].apply(lambda x: str(x))

movies["production_countries"] = movies["production_countries"].apply(lambda x: str(x))

movies["spoken_languages"] = movies["spoken_languages"].apply(lambda x: str(x))

movies["production_companies"] = movies["production_companies"].apply(

lambda x: ", ".join(regex.findall(x))

)

movies["production_countries"] = movies["production_countries"].apply(

lambda x: ", ".join(regex.findall(x))

)

movies["spoken_languages"] = movies["spoken_languages"].apply(

lambda x: ", ".join(regex.findall(x))

)

# Remove wrong value data in column id

id_errors = []

for index, row in movies.iterrows():

row["id"] = row["id"].split("-")

if len(row["id"]) > 1:

id_errors.append(index)

movies = movies.drop(id_errors)

movies = movies.reset_index(drop=True)

# ### Converting the date to datetime and using year to create a new column

movies["release_date"] = pd.to_datetime(movies["release_date"], errors="coerce")

movies["year"] = movies["release_date"].dt.year

movies.dropna(inplace=True)

|

import tensorflow as tf

import tensorflow_datasets as tfds

from keras.preprocessing.text import Tokenizer

from tensorflow.keras import layers

import pandas as pd

import matplotlib.pyplot as plt

import numpy as np

import scipy

from sklearn.compose import make_column_transformer

from sklearn.preprocessing import MinMaxScaler, OneHotEncoder, LabelEncoder

from sklearn.model_selection import train_test_split

import random

import os

import io

import zipfile

from tensorflow.keras.preprocessing.image import ImageDataGenerator

import urllib.request

import cv2

# Setup the train and test directories

train_dir = "/kaggle/input/3classdataset/train"

test_dir = "/kaggle/input/3classdataset/test"

# Use ImageDataGenerator to create Train and Test Datsets with data augmentation built in

train_gen = tf.keras.preprocessing.image.ImageDataGenerator(

rescale=1.0 / 255,

shear_range=0.2, # shear the image

zoom_range=0.2, # zoom into the image

width_shift_range=0.2, # shift the image width ways

height_shift_range=0.2, # shift the image height ways

horizontal_flip=True,

) # flip the image on the horizontal axis

test_gen = tf.keras.preprocessing.image.ImageDataGenerator(rescale=1.0 / 255)

training_data = train_gen.flow_from_directory(

train_dir,

batch_size=32, # number of images to process at a time

target_size=(224, 224), # convert all images to be 224 x 224

class_mode="categorical", # type of problem we're working

shuffle=True,

seed=42,

)

testing_data = test_gen.flow_from_directory(

test_dir,

batch_size=32, # number of images to process at a time

target_size=(224, 224), # convert all images to be 224 x 224

class_mode="categorical", # type of problem we're working

shuffle=True,

seed=42,

)

# Create callbacks

early_stopping = tf.keras.callbacks.EarlyStopping(

monitor="val_loss", patience=3, restore_best_weights=True

)

lr_callback = tf.keras.callbacks.ReduceLROnPlateau(

monitor="val_loss", factor=0.5, patience=2, verbose=0

)

# 1. Create base model with tf.keras.applications

base_model = tf.keras.applications.efficientnet_v2.EfficientNetV2M(

include_top=False, include_preprocessing=False

)

# 2. Freeze the base model (so the undelying pre-trained patterns aren't updated during training )

base_model.trainable = True

# 3. Create inputs into our model

inputs = tf.keras.layers.Input(shape=(224, 224, 3), name="input_layer", dtype="float32")

# 4. If using a model like ResNet50V2, add this to speed up convergence, remove for EfficientNet

# x = tf.keras.layers.experimental.preprocessing.Rescaling(1./255)(inputs)

# 5. Pass the inputs to the base_model

x = base_model(inputs)

x = tf.keras.layers.GlobalAveragePooling2D(name="global_average_pooling_layer")(x)

# 7. Create the output activation layer

outputs = tf.keras.layers.Dense(3, activation="softmax", name="output_layer")(x)

model = tf.keras.Model(inputs=inputs, outputs=outputs)

model.summary()

model.compile(

loss="categorical_crossentropy",

optimizer=tf.keras.optimizers.Adam(),

metrics=["accuracy"],

)

model_history = model.fit(

training_data,

validation_data=(testing_data),

epochs=10,

callbacks=[early_stopping, lr_callback],

)

model.evaluate(training_data)

model.save_weights("weights.h5")

model.evaluate(training_data)

# 1. Create base model with tf.keras.applications

base_model = tf.keras.applications.efficientnet_v2.EfficientNetV2M(

include_top=False, include_preprocessing=False

)

# 2. Freeze the base model (so the undelying pre-trained patterns aren't updated during training )

base_model.trainable = True

# 3. Create inputs into our model

inputs = tf.keras.layers.Input(shape=(224, 224, 3), name="input_layer", dtype="float32")

# 4. If using a model like ResNet50V2, add this to speed up convergence, remove for EfficientNet

# x = tf.keras.layers.experimental.preprocessing.Rescaling(1./255)(inputs)

# 5. Pass the inputs to the base_model

x = base_model(inputs)

x = tf.keras.layers.GlobalAveragePooling2D(name="global_average_pooling_layer")(x)

# 7. Create the output activation layer

outputs = tf.keras.layers.Dense(1, activation="sigmoid", name="output_layer")(x)

model2 = tf.keras.Model(inputs=inputs, outputs=outputs)

model2.compile(

loss="binary_crossentropy",

optimizer=tf.keras.optimizers.Adam(),

metrics=["accuracy"],

)

model2.load_weights("/kaggle/working/weights.h5")

model2.evaluate(testing_data)

IMAGE_SIZE = 224

BATCH_SIZE = 64

datagen = tf.keras.preprocessing.image.ImageDataGenerator(

rescale=1.0 / 255, validation_split=0.2

)

train_generator = datagen.flow_from_directory(

train_dir,

target_size=(IMAGE_SIZE, IMAGE_SIZE),

batch_size=BATCH_SIZE,

subset="training",

)

val_generator = datagen.flow_from_directory(

test_dir,

target_size=(IMAGE_SIZE, IMAGE_SIZE),

batch_size=BATCH_SIZE,

subset="validation",

)

batch_images, batch_labels = next(val_generator)

logits = model(batch_images)

truth = np.argmax(batch_labels, axis=1)

prediction = np.argmax(logits, axis=1)

keras_accuracy = tf.keras.metrics.Accuracy()

keras_accuracy(prediction, truth)

print("Raw model accuracy: {:.3%}".format(keras_accuracy.result()))

prediction

model.save("model_3_classes.h5")

|

# # Project 4

# We're going to use Polars instead of Pandas to perform the transformations we did on projects 1 & 2. We are then going to compare how long they take to perform similar tasks.

import pandas as pd

import polars as pl

import numpy as np

import time

from datetime import timedelta

pl.Config.set_fmt_str_lengths(200)

data1_path = "/kaggle/input/project-1-data/data"

sampled = False

path_suffix = "" if not sampled else "_sampled"

data2_path = "/kaggle/input/project-2-data/project_2_data"

# # Loading data

# Load the transactions data from csv using each library.

# ## Polars

start_time = time.monotonic()

polar_transactions = pl.read_csv(

f"{data1_path}/transactions_data{path_suffix}.csv"

).with_columns(

pl.col("date").str.strptime(pl.Date, fmt="%Y-%m-%d %H:%M:%S", strict=False)

)

end_time = time.monotonic()

polars_loading_time = timedelta(seconds=end_time - start_time)

print(polars_loading_time)

# ## Pandas

start_time = time.monotonic()

pandas_transactions = pd.read_csv(f"{data1_path}/transactions_data{path_suffix}.csv")

pandas_transactions["date"] = pd.to_datetime(pandas_transactions["date"])

end_time = time.monotonic()

pandas_loading_time = timedelta(seconds=end_time - start_time)

print(pandas_loading_time)

# # Processing data

# Process the transactions data such that we have sales information even when no sales were performed, for all combination of dates and ids. We then compare with a prepared sales file to check if the processing was done correct on both occasions.

# ## Polars

start_time = time.monotonic()

polar_data = (

polar_transactions.with_columns(pl.lit(1).alias("sales"))

.groupby(list(polar_transactions.columns))

.sum()

)

max_date = polar_data.with_columns(pl.col("date")).max()["date"][0]

min_date = polar_data.with_columns(pl.col("date")).min()["date"][0]

date_range = pl.date_range(min_date, max_date, "1d")

polars_MultiIndex = pl.DataFrame({"date": date_range}).join(

polar_data.select(pl.col("id").unique()), how="cross"

)

polar_data = (

polar_data.join(polars_MultiIndex, on=["date", "id"], how="outer")

.with_columns(pl.col("sales").fill_null(0))

.sort("id", "date")

)

filled_data = (

polar_data.lazy()

.groupby("id")

.agg(

pl.col("date"),

pl.col("item_id").forward_fill(),

pl.col("dept_id").forward_fill(),

pl.col("cat_id").forward_fill(),

pl.col("store_id").forward_fill(),

pl.col("state_id").forward_fill(),

)

.explode(["date", "item_id", "dept_id", "cat_id", "store_id", "state_id"])

.sort(["id", "date"])

)

polar_data = (

polar_data.lazy()

.join(filled_data, on=["date", "id"], how="outer")

.with_columns(

pl.col("item_id").fill_null(pl.col("item_id_right")),

pl.col("dept_id").fill_null(pl.col("dept_id_right")),

pl.col("cat_id").fill_null(pl.col("cat_id_right")),

pl.col("store_id").fill_null(pl.col("store_id_right")),

pl.col("state_id").fill_null(pl.col("state_id_right")),

)

.select(polar_data.columns)

# We can remove the initial data without sales we would not need the cumsum trick.

.drop_nulls()

.collect()

)

end_time = time.monotonic()

polars_processing_time = timedelta(seconds=end_time - start_time)

print(polars_processing_time)

# ## Pandas

start_time = time.monotonic()

pandas_data = (

pandas_transactions.assign(sales=1, date=lambda df: df.date.dt.floor("d"))

.groupby(list(pandas_transactions.columns))

.sum()

.reset_index()

.set_index(["date", "id"])

.sort_index()

)

min_date = pandas_data.index.get_level_values("date").min()

max_date = pandas_data.index.get_level_values("date").max()

dates_to_select = pd.date_range(min_date, max_date, freq="1D")

ids = pandas_data.index.get_level_values("id").unique()

index_to_select = pd.MultiIndex.from_product(

[dates_to_select, ids], names=["date", "id"]

)

pandas_data = pandas_data.reindex(index_to_select)

pandas_data = pandas_data.loc[pandas_data.groupby("id").sales.cumsum() > 0]

data_ids = pandas_data.index.get_level_values("id").str.split("_")

data_id = pd.DataFrame(data_ids.tolist())

data_id.index = pandas_data.index

pandas_data.sales = pandas_data.sales.astype(np.int64)

item_id = data_id[0] + "_" + data_id[1] + "_" + data_id[2]

pandas_data["item_id"].update(item_id)

dept_id = data_id[0] + "_" + data_id[1]

pandas_data["dept_id"].update(dept_id)

cat_id = data_id[0]

pandas_data["cat_id"].update(cat_id)

store_id = data_id[3] + "_" + data_id[4]

pandas_data["store_id"].update(store_id)

state_id = data_id[3]

pandas_data["state_id"].update(state_id)

end_time = time.monotonic()

pandas_processing_time = timedelta(seconds=end_time - start_time)

print(pandas_processing_time)

# ## Comparison

# We compare each dataframe with the expected results. They should all match.

#

def test_sales_eq(data):

assert (

pd.read_csv(

f"{data1_path}/sales_data{path_suffix}.csv", usecols=["date", "id", "sales"]

)

# pd.read_parquet(f"{data2_path}/sales_data.parquet")[['sales']].reset_index()

.assign(date=lambda df: pd.to_datetime(df.date))

.merge(data, on=["date", "id"], how="left", suffixes=("_actual", "_predicted"))

.fillna({"sales_actual": 0, "sales_predicted": 0})

.assign(sales_error=lambda df: (df.sales_actual - df.sales_predicted).abs())

.sales_error.sum()

< 1e-6

), "Your version of sales does not match the original sales data."

print("Comparing POLARS")

test_sales_eq(polar_data.to_pandas())

print(" - matched.")

print("Comparing PANDAS")

test_sales_eq(pandas_data)

print(" - matched.")

# # Feature Engineering

# We now have to add date features, calendar and price features to the dataframes.

# ## Polars

start_time = time.monotonic()

pl_calendar = (

pl.read_parquet(f"{data2_path}/calendar.parquet")

.with_columns(pl.col("date").cast(pl.Date))

.lazy()

)

pl_prices = (

pl.read_parquet(f"{data2_path}/prices.parquet")

.with_columns(pl.col("date").cast(pl.Date))

.lazy()

)

pl_data = (

polar_data.lazy()

.drop(["state_id"])

.join(pl_prices, on=["date", "store_id", "item_id"], how="left")

.join(pl_calendar, on=["date"], how="left")

.with_columns(pl.col("date").dt.weekday().alias("day_of_week"))

.with_columns(pl.col("date").dt.day().alias("day_of_month"))

.with_columns(pl.col("date").dt.week().alias("week"))

.with_columns(pl.col("date").dt.month().alias("month"))

.with_columns(pl.col("date").dt.quarter().alias("quarter"))

.with_columns(pl.col("date").dt.year().alias("year"))

.with_columns(

pl.col("item_id", "dept_id", "cat_id", "store_id").cast(pl.Categorical)

)

.rename({"date": "ds", "id": "unique_id", "sales": "y"})

.collect()

)

event_cols = ["event_name_1", "event_name_2", "event_type_1", "event_type_2"]

cat_feats = ["unique_id", "item_id", "dept_id", "cat_id"]

cat_feats.extend(event_cols)

enc_cat_feats = [f"{feat}_enc" for feat in cat_feats]

encoding_feats = [

pl.UInt32 if col in ["unique_id", "item_id", "dept_id", "store_id"] else pl.UInt8

for col in cat_feats

]

# encoding since OrdinalEncoder does not seem to work with polars

for col, enc_col, encoding in zip(cat_feats, enc_cat_feats, encoding_feats):

pl_data = pl_data.with_columns(

pl.col(col).cast(pl.Categorical).to_physical().cast(encoding).alias(enc_col)

)

numeric_features = ["sell_price"]

reference_cols = ["unique_id", "ds", "y"]

# add features to this list if you want to use them

features = reference_cols + enc_cat_feats + numeric_features

pl_data = pl_data[features]

end_time = time.monotonic()

polars_feature_time = timedelta(seconds=end_time - start_time)

print(polars_feature_time)

# ## Pandas

from sklearn.preprocessing import OrdinalEncoder

start_time = time.monotonic()

pd_calendar = pd.read_parquet(f"{data2_path}/calendar.parquet")

pd_prices = pd.read_parquet(f"{data2_path}/prices.parquet")

pd_data = (

pandas_data.reset_index()

.drop(["state_id"], axis=1)

.merge(pd_prices, on=["date", "store_id", "item_id"], how="left")

.merge(pd_calendar, on=["date"], how="left")

.set_index("date")

.assign(

day_of_week=lambda d: d.index.dayofweek,

day_of_month=lambda d: d.index.day,

week=lambda d: d.index.isocalendar().week,

month=lambda d: d.index.month,

year=lambda d: d.index.year,

quarter=lambda d: d.index.quarter,

item_id=lambda d: d.item_id.astype("category"),

dept_id=lambda d: d.dept_id.astype("category"),

cat_id=lambda d: d.cat_id.astype("category"),

store_id=lambda d: d.store_id.astype("category"),

event_name_1=lambda d: d.event_name_1.astype("category"),

event_name_2=lambda d: d.event_name_2.astype("category"),

event_type_1=lambda d: d.event_type_1.astype("category"),

event_type_2=lambda d: d.event_type_2.astype("category"),

)

.reset_index()

.rename(columns={"id": "unique_id", "date": "ds", "sales": "y"})

)

# label encode categorical features

event_cols = ["event_name_1", "event_name_2", "event_type_1", "event_type_2"]

cat_feats = ["unique_id", "item_id", "dept_id", "cat_id"]

cat_feats.extend(event_cols)

enc_cat_feats = [f"{feat}_enc" for feat in cat_feats]

encoder = OrdinalEncoder()

pd_data[enc_cat_feats] = encoder.fit_transform(pd_data[cat_feats])

numeric_feature = ["sell_price"]

reference_cols = ["unique_id", "ds", "y"]

# add features to this list if you want to use them

features = reference_cols + enc_cat_feats + numeric_features

pd_data = pd_data[features]

end_time = time.monotonic()

pandas_feature_time = timedelta(seconds=end_time - start_time)

print(pandas_feature_time)

# # Results

# We now check the times for each of the parts to see results.

metrics = pd.DataFrame(

{

"loading": [

polars_loading_time.total_seconds(),

pandas_loading_time.total_seconds(),

],

"processing": [

polars_processing_time.total_seconds(),

pandas_processing_time.total_seconds(),

],

"feature_eng": [

polars_feature_time.total_seconds(),

pandas_feature_time.total_seconds(),

],

},

index=["polars", "pandas"],

)

metrics["total"] = metrics["loading"] + metrics["processing"] + metrics["feature_eng"]

# metrics["total"].apply(lambda x: x.total_seconds()) / sum( metrics["total"].apply(lambda x: x.total_seconds()))

metrics.loc["ratio", "loading"] = (

metrics.loc["pandas", "loading"] / metrics.loc["polars", "loading"]

)

metrics.loc["ratio", "processing"] = (

metrics.loc["pandas", "processing"] / metrics.loc["polars", "processing"]

)

metrics.loc["ratio", "feature_eng"] = (

metrics.loc["pandas", "feature_eng"] / metrics.loc["polars", "feature_eng"]

)

metrics.loc["ratio", "total"] = (

metrics.loc["pandas", "total"] / metrics.loc["polars", "total"]

)

metrics

|

import numpy as np # linear algebra

import pandas as pd # data processing, CSV file I/O (e.g. pd.read_csv)

# Input data files are available in the read-only "../input/" directory

# For example, running this (by clicking run or pressing Shift+Enter) will list all files under the input directory

import os

for dirname, _, filenames in os.walk("/kaggle/input"):

for filename in filenames:

print(os.path.join(dirname, filename))

# You can write up to 20GB to the current directory (/kaggle/working/) that gets preserved as output when you create a version using "Save & Run All"

# You can also write temporary files to /kaggle/temp/, but they won't be saved outside of the current session

df = pd.read_csv("/kaggle/input/bitcoin-and-fear-and-greed/dataset.csv")

df

# # **Bitcoin Fear and greed days split overall**

# Define the colors for each bar

colors = ["red", "blue", "green", "purple", "orange"]

bar_chart = df["Value_Classification"].hist()

bar_chart.set_title("Bitcoin fear and greed index 1 Feb to 31 Mar 2023")

bar_chart.set_ylabel("Number of days")

print(df["Value_Classification"].value_counts())

# # **Bitcoin Fear and greed per month**

df["Date"] = pd.to_datetime(df["Date"])

# Extract the short name of the month from the date column

df["month"] = df["date"].dt.strftime("%b")

df

df.pivot_table(

index=[["month", "Value_Classification"]],

values="Value_Classification",

aggfunc="count",

)

|

# ### Build your own Neural Network from scratch using only Numpy

# Deep Learning has become a popular topic in recent times. It involves emulating the neural structure of the human brain through a network of nodes called a **Neural Network**. While our brain's neurons have physical components like nucleus, dendrites, and synapses, Neural Network neurons are interconnected and have weights and biases assigned to them.

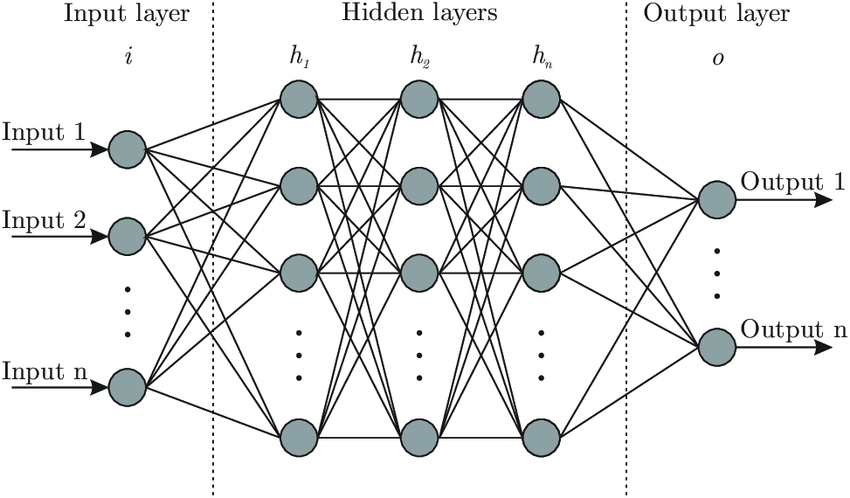

# A neural network typically consists of an input layer, an output layer, and one or more hidden layers. In conventional neural networks, all nodes in these layers are interconnected to form a dense network. However, there are cases where certain nodes in the network are not connected to others, which are referred to as **Sparse Neural Networks**. InceptionNet models for image classification use Sparse Neural Networks. The following figure illustrates the structure of a neural network.

#

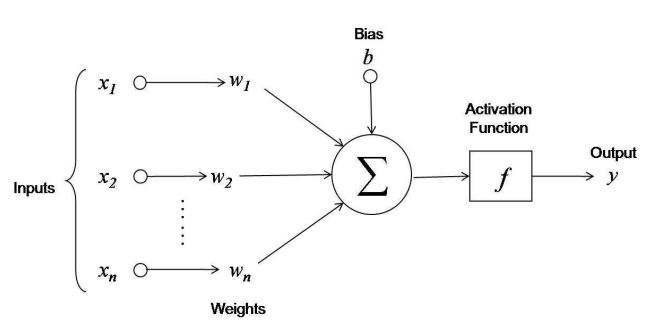

# The neurons will be activated or fired, which is the input will be passed through the neuron to the next layer. Each neuron inside the neural network will have a linear function using weights and biases like the following equation. The input will be transformed using the function below and produce a new output to the next layer.

#

# You can see at the end there will be something called as the ***Activation function***. An activation function is a function which decides wheather the neuron needs to be activated or not. Some people say, a neuron without an activation function is just a linear regression model. There are several activation functions called Sigmoid, Softmax, Tanh, ReLu and many more. We'll see about activation functions in detail later.

# let's start to build a neural network from scratch. We'll get into code without much further ado. Let's start by importing numpy library for linear algebra functions.

#

import numpy as np # linear algebra

# Numpy is a python library which can be used to implement linear algebra functions. So for creating a neural network we need the base for building a nueral network, neurons. We'll create a class neuron to implement the weights and biases.

class Neuron:

def __init__(self, weights, bias):

self.weights = weights

self.bias = bias

def feedforward(self, x):

return np.dot(weights, x) + bias

weights = np.array([0, 1])

bias = 1

n = Neuron(weights, bias)

n.feedforward([1, 1])

|

import numpy as np # linear algebra

import pandas as pd # data processing, CSV file I/O (e.g. pd.read_csv)

import matplotlib.pyplot as plt

from sklearn.model_selection import train_test_split

import tensorflow as tf

from keras.preprocessing.image import ImageDataGenerator

from keras.utils import to_categorical

from keras.models import Sequential

from keras.layers import Conv2D, MaxPooling2D, BatchNormalization, Dropout

from keras.layers import Dense, Flatten

import cv2

import numpy as np # linear algebra

import pandas as pd # data processing, CSV file I/O (e.g. pd.read_csv)

# Input data files are available in the "../input/" directory.

# For example, running this (by clicking run or pressing Shift+Enter) will list all files under the input directory

import os

for dirname, _, filenames in os.walk("/kaggle/input"):

for filename in filenames:

print(os.path.join(dirname, filename))

# Any results you write to the current directory are saved as output.

import os

print(os.listdir("../input"))

category = ["cat", "dog"]

EPOCHS = 50

IMGSIZE = 128

BATCH_SIZE = 32

STOPPING_PATIENCE = 15

VERBOSE = 1

MODEL_NAME = "cnn_50epochs_imgsize128"

OPTIMIZER = "adam"

TRAINING_DIR = "/kaggle/working/train"

TEST_DIR = "/kaggle/working/test"

for img in os.listdir(TRAINING_DIR)[7890:]:

img_path = os.path.join(TRAINING_DIR, img)

img_arr = cv2.imread(img_path, cv2.IMREAD_GRAYSCALE)

img_arr = cv2.resize(img_arr, (IMGSIZE, IMGSIZE))

plt.imshow(img_arr, cmap="gray")

plt.title(img.split(".")[0])

break

def create_train_data(path):

X = []

y = []

for img in os.listdir(path):

if img == os.listdir(path)[7889]:

continue

img_path = os.path.join(path, img)

img_arr = cv2.imread(img_path, cv2.IMREAD_GRAYSCALE)

img_arr = cv2.resize(img_arr, (IMGSIZE, IMGSIZE))

img_arr = img_arr / 255.0

cat = np.where(img.split(".")[0] == "dog", 1, 0)

X.append(img_arr)

y.append(cat)

X = np.array(X).reshape(-1, IMGSIZE, IMGSIZE, 1)

y = np.array(y)

return X, y

X, y = create_train_data(TRAINING_DIR)

print(f"features shape {X.shape}.\nlabel shape {y.shape}.")

y = to_categorical(y, 2)

print(f"features shape {X.shape}.\nlabel shape {y.shape}.")

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=1 / 3)

X_train.shape

y_train.shape

model = Sequential()

model.add(Conv2D(32, kernel_size=(3, 3), activation="relu", input_shape=(28, 28, 1)))

model.add(Conv2D(64, (3, 3), activation="relu"))

model.add(MaxPooling2D(pool_size=(2, 2)))

model.add(Dropout(0.25))

model.add(Flatten())

model.add(Dense(128, activation="relu"))

model.add(Dropout(0.5))

model.add(Dense(10, activation="softmax"))

model.compile(loss="categorical_crossentropy", optimizer="Adam", metrics=["accuracy"])

model.fit(X_train, y_train, epochs=10, validation_data=(X_test, y_test))

|

from keras.layers import Input, Conv2D, Lambda, merge, Dense, Flatten, MaxPooling2D

from keras.models import Model, Sequential

from keras.regularizers import l2

from keras import backend as K

from keras.optimizers import SGD, Adam

from keras.losses import binary_crossentropy

import sklearn.metrics as sm

import numpy.random as rng

import numpy as np

import pandas as pd

import os

import pickle

import matplotlib.pyplot as plt

import seaborn as sns

from sklearn.utils import shuffle

import os

from keras.datasets import mnist

def loop(p, q, low, high):

m = []

cat = {}

n = 0

for j in range(low, high):

r = np.where(q == j)

cat[str(j)] = [n, None]

t = np.random.choice(r[0], 20)

c = []

for i in t:

c.append(np.resize(p[i], (35, 35)))

n += 1

cat[str(j)][1] = n - 1

m.append(np.stack(c))

return m, cat

def createdataset(low, high, t):

(a, b), (f, g) = mnist.load_data()

m = []

cat = {}

if t == 0:

m, cat = loop(a, b, low, high)

else:

m, cat = loop(f, g, low, high)

m = np.stack(m)

return m, cat

train, cat_train = createdataset(0, 6, 0)

test, cat_test = createdataset(6, 10, 0)

print(train.shape)

print(test.shape)

print(cat_train.keys())

print(cat_test.keys())

def W_init(shape, name=None, dtype=None):

"""Initialize weights as in paper"""

values = rng.normal(loc=0, scale=1e-2, size=shape)

return K.variable(values, name=name)

# //TODO: figure out how to initialize layer biases in keras.

def b_init(shape, name=None, dtype=None):

"""Initialize bias as in paper"""

values = rng.normal(loc=0.5, scale=1e-2, size=shape)

return K.variable(values, name=name)

nclass, nexample, row, col = train.shape

input_shape = (row, col, 1)

left_input = Input(input_shape)

right_input = Input(input_shape)

# build convnet to use in each siamese 'leg'

convnet = Sequential()

convnet.add(

Conv2D(

64,

(10, 10),

activation="relu",

input_shape=input_shape,

kernel_initializer=W_init,

kernel_regularizer=l2(2e-4),

)

)

convnet.add(MaxPooling2D())

# convnet.add(Conv2D(128,(7,7),activation='relu',kernel_regularizer=l2(2e-4),kernel_initializer=W_init, bias_initializer=b_init))

# convnet.add(MaxPooling2D())

convnet.add(

Conv2D(

128,

(4, 4),

activation="relu",

kernel_initializer=W_init,

kernel_regularizer=l2(2e-4),

bias_initializer=b_init,

)

)

# convnet.add(MaxPooling2D())

# convnet.add(Conv2D(256,(4,4),activation='relu',kernel_initializer=W_init,kernel_regularizer=l2(2e-4),bias_initializer=b_init))

convnet.add(Flatten())

convnet.add(

Dense(

4096,

activation="sigmoid",

kernel_regularizer=l2(1e-3),

kernel_initializer=W_init,

bias_initializer=b_init,

)

)

# call the convnet Sequential model on each of the input tensors so params will be shared

encoded_l = convnet(left_input)

encoded_r = convnet(right_input)

# layer to merge two encoded inputs with the l1 distance between them

L1_layer = Lambda(lambda tensors: K.abs(tensors[0] - tensors[1]))

# call this layer on list of two input tensors.

L1_distance = L1_layer([encoded_l, encoded_r])

prediction = Dense(1, activation="sigmoid")(L1_distance)

siamese_net = Model(inputs=[left_input, right_input], outputs=prediction)

optimizer = Adam(0.00006)

# //TODO: get layerwise learning rates and momentum annealing scheme described in paperworking

siamese_net.compile(loss="binary_crossentropy", optimizer=optimizer)

siamese_net.count_params()

# nclass,nexample,row,col = train.shape

# input_shape = (row,col, 1)

# left_input = Input(input_shape)

# right_input = Input(input_shape)

# #build convnet to use in each siamese 'leg'

# convnet = Sequential()

# convnet.add(Conv2D(64,(10,10),activation='relu',input_shape=input_shape,kernel_regularizer=l2(2e-4)))

# convnet.add(MaxPooling2D())

# # convnet.add(Conv2D(128,(7,7),activation='relu',kernel_regularizer=l2(2e-4),kernel_initializer=W_init, bias_initializer=b_init))

# # convnet.add(MaxPooling2D())

# convnet.add(Conv2D(128,(4,4),activation='relu',kernel_regularizer=l2(2e-4)))

# # convnet.add(MaxPooling2D())

# # convnet.add(Conv2D(256,(4,4),activation='relu',kernel_initializer=W_init,kernel_regularizer=l2(2e-4),bias_initializer=b_init))

# convnet.add(Flatten())

# convnet.add(Dense(4096,activation="sigmoid",kernel_regularizer=l2(1e-3)))

# #call the convnet Sequential model on each of the input tensors so params will be shared

# encoded_l = convnet(left_input)

# encoded_r = convnet(right_input)

# #layer to merge two encoded inputs with the l1 distance between them

# L1_layer = Lambda(lambda tensors:K.abs(tensors[0] - tensors[1]))

# #call this layer on list of two input tensors.

# L1_distance = L1_layer([encoded_l, encoded_r])

# prediction = Dense(1,activation='sigmoid')(L1_distance)

# siamese_net = Model(inputs=[left_input,right_input],outputs=prediction)

# siamese_net.load_weights("/kaggle/input/mnistweights/weights")

# optimizer = Adam(0.00006)

# #//TODO: get layerwise learning rates and momentum annealing scheme described in paperworking

# siamese_net.compile(loss="binary_crossentropy",optimizer=optimizer)

# siamese_net.count_params()

class Siamese_Loader:

"""For loading batches and testing tasks to a siamese net"""

def __init__(self):

self.data = {"train": train, "val": test}

self.categories = {"train": cat_train, "val": cat_test}

def get_batch(self, batch_size, s="train"):

"""Create batch of n pairs, half same class, half different class"""

X = self.data[s]

n_classes, n_examples, w, h = X.shape

# randomly sample several classes to use in the batch

categories = rng.choice(n_classes, size=(batch_size,), replace=False)

# initialize 2 empty arrays for the input image batch

pairs = [np.zeros((batch_size, h, w, 1)) for i in range(2)]

# initialize vector for the targets, and make one half of it '1's, so 2nd half of batch has same class

targets = np.zeros((batch_size,))

targets[batch_size // 2 :] = 1

for i in range(batch_size):

category = categories[i]

idx_1 = rng.randint(0, n_examples)

pairs[0][i, :, :, :] = X[category, idx_1].reshape(w, h, 1)

idx_2 = rng.randint(0, n_examples)

# pick images of same class for 1st half, different for 2nd

if i >= batch_size // 2:

category_2 = category

else:

# add a random number to the category modulo n classes to ensure 2nd image has

# ..different category

category_2 = (category + rng.randint(1, n_classes)) % n_classes

pairs[1][i, :, :, :] = X[category_2, idx_2].reshape(w, h, 1)

return pairs, targets

def make_oneshot_task(self, N, s="val", language=None):

"""Create pairs of test image, support set for testing N way one-shot learning."""

X = self.data[s]

n_classes, n_examples, w, h = X.shape

indices = rng.randint(0, n_examples, size=(N,))

# if language is not None:

# low, high = self.categories[s][language]

# if N > high - low:

# raise ValueError("This language ({}) has less than {} letters".format(language, N))

# categories = rng.choice(range(low,high),size=(N,),replace=False)

# else:#if no language specified just pick a bunch of random letters

# categories = rng.choice(range(n_classes),size=(N,),replace=False)

categories = rng.choice(range(n_classes), size=(N,), replace=True)

true_category = categories[0]

ex1, ex2 = rng.choice(n_examples, replace=False, size=(2,))

test_image = np.asarray([X[true_category, ex1, :, :]] * N).reshape(N, w, h, 1)

support_set = X[categories, indices, :, :]

support_set[0, :, :] = X[true_category, ex2]

support_set = support_set.reshape(N, w, h, 1)

targets = np.zeros((N,))

targets[np.where(categories == true_category)] = 1

targets, test_image, support_set = shuffle(targets, test_image, support_set)

pairs = [test_image, support_set]

return pairs, targets

def test_oneshot(self, model, N, k, i, s="val", verbose=0):

"""Test average N way oneshot learning accuracy of a siamese neural net over k one-shot tasks"""

n_correct = 0

if verbose:

print("iteration no.{}".format(i))

print(

"Evaluating model on {} random {} way one-shot learning tasks ...".format(

k, N

)

)

sum = 0.0

sum1 = 0.0

for i in range(k):

inputs, targets = self.make_oneshot_task(N, s)

probs = model.predict(inputs)

probability = []

for i in probs:

probability.append(round(i[0]))

probability = np.array(probability)

a = sm.confusion_matrix(targets, probability)

if len(a) > 1:

sum += sm.accuracy_score(targets, probability)

sum1 += sm.f1_score(targets, probability)

else:

sum += 1.0

sum1 += 1.0

percent = (sum / k) * 100.0

F1_score = sum1 / k

if verbose:

print(

"Got an average of {}% Accuracy in {} way one-shot learning accuracy".format(

round(percent, 2), N

)

)

print(

"Got an average of {} F1-Score in {} way one-shot learning accuracy".format(

round(F1_score, 2), N

)

)

return percent, F1_score

# Instantiate the class

loader = Siamese_Loader()

def concat_images(X):

"""Concatenates a bunch of images into a big matrix for plotting purposes."""

a, b, c, d = X.shape

X = np.resize(X, (a, 28, 28, d))

nc, h, w, _ = X.shape

X = X.reshape(nc, h, w)

n = np.ceil(np.sqrt(nc)).astype("int8")

img = np.zeros((n * w, n * h))

x = 0

y = 0

for example in range(nc):

img[x * w : (x + 1) * w, y * h : (y + 1) * h] = X[example]

y += 1

if y >= n:

y = 0

x += 1

return img

def plot_oneshot_task(pairs):

"""Takes a one-shot task given to a siamese net and"""

fig, (ax1, ax2) = plt.subplots(2)

ax1.matshow(np.resize(pairs[0][0], (28, 28)), cmap="gray")

img = concat_images(pairs[1])

ax1.get_yaxis().set_visible(False)

ax1.get_xaxis().set_visible(False)

ax2.matshow(img, cmap="gray")

plt.xticks([])

plt.yticks([])

plt.show()

# example of a one-shot learning task

pairs, targets = loader.make_oneshot_task(10, "train", "0")

plot_oneshot_task(pairs)

# Training loop

os.chdir(r"/kaggle/working/")

print("!")

evaluate_every = 1 # interval for evaluating on one-shot tasks

loss_every = 50 # interval for printing loss (iterations)

batch_size = 4

n_iter = 10000

N_way = 10 # how many classes for testing one-shot tasks>

n_val = 250 # how many one-shot tasks to validate on?

best = -1

s = -1

print("training")

for i in range(1, n_iter):

(inputs, targets) = loader.get_batch(batch_size)

loss = siamese_net.train_on_batch(inputs, targets)

print("Loss is = {}".format(round(loss, 2)))

if i % evaluate_every == 0:

print("evaluating")

val_acc, score = loader.test_oneshot(siamese_net, N_way, n_val, i, verbose=True)

if val_acc >= best or s >= score:

print("saving")

siamese_net.save(r"weights") # weights_path = os.path.join(PATH, "weights")

best = val_acc

if i % loss_every == 0:

print("iteration {}, training loss: {:.2f},".format(i, loss))

ways = np.arange(1, 30, 2)

resume = False

val_accs, train_accs, valscore, trainscore = [], [], [], []

trials = 400

i = 0

for N in ways:

train, trains = loader.test_oneshot(

siamese_net, N, trials, i, "train", verbose=True

)

val, vals = loader.test_oneshot(siamese_net, N, trials, i, "val", verbose=True)

val_accs.append(val)

train_accs.append(train)

valscore.append(vals)

trainscore.append(trains)

i += 1

from statistics import mean

print("The Average testing Accuracy is {}%".format(round(mean(val_accs), 2)))

print("The Average testing F1-Score is {}".format(round(mean(valscore), 2)))

plt.figure(1)

plt.plot(ways, train_accs, "b", label="Siamese(train set)")

plt.plot(ways, val_accs, "r", label="Siamese(val set)")

plt.xlabel("Number of possible classes in one-shot tasks")

plt.ylabel("% Accuracy")

plt.title("MNIST One-Shot Learning performace of a Siamese Network")

# box = plt.get_position()

# plt.set_position([box.x0, box.y0, box.width * 0.8, box.height])

plt.legend(loc="center left", bbox_to_anchor=(1, 0.5))

plt.show()

# inputs,targets = loader.make_oneshot_task(10,"val")

plt.figure(2)

plt.plot(ways, trainscore, "g", label="Siamese(train set)")

plt.plot(ways, valscore, "r", label="Siamese(val set)")

plt.xlabel("Number of possible classes in one-shot tasks")

plt.ylabel("F1-Score")

plt.title("MNIST One-Shot Learning F1 Score of a Siamese Network")

# box = plt.get_position()

# plt.set_position([box.x0, box.y0, box.width * 0.8, box.height])

plt.legend(loc="center left", bbox_to_anchor=(1, 0.5))

plt.show()

|

import numpy as np

import pandas as pd

import seaborn as sns

from sklearn.preprocessing import StandardScaler

from sklearn.model_selection import train_test_split, GridSearchCV

from sklearn.linear_model import LogisticRegression

from sklearn.neighbors import KNeighborsClassifier

from sklearn.ensemble import RandomForestClassifier

from sklearn.model_selection import cross_val_score, cross_val_predict

from sklearn.svm import SVC

from sklearn.metrics import classification_report, confusion_matrix

df = pd.read_csv("../input/diabetes.csv")

df.head()

X = df.drop("Outcome", axis=1)

X = StandardScaler().fit_transform(X)

y = df["Outcome"]

X_train, X_test, y_train, y_test = train_test_split(

X, y, test_size=0.25, random_state=0

)

model = SVC()

parameters = [

{"kernel": ["rbf"], "gamma": [1e-3, 1e-4], "C": [1, 10, 100, 1000]},

{"kernel": ["linear"], "C": [1, 10, 100, 1000]},

]

grid = GridSearchCV(estimator=model, param_grid=parameters, cv=5)

grid.fit(X_train, y_train)

roc_auc = np.around(

np.mean(cross_val_score(grid, X_test, y_test, cv=5, scoring="roc_auc")), decimals=4

)

print("Score: {}".format(roc_auc))

model1 = RandomForestClassifier(n_estimators=1000)

model1.fit(X_train, y_train)

predictions = cross_val_predict(model1, X_test, y_test, cv=5)

print(classification_report(y_test, predictions))

print(confusion_matrix(y_test, predictions))

score1 = np.mean(cross_val_score(model, X_test, y_test, cv=5, scoring="roc_auc"))

np.around(score1, decimals=4)

model2 = KNeighborsClassifier()

model2.fit(X_train, y_train)

predictions = cross_val_predict(model2, X_test, y_test, cv=5)

print(classification_report(y_test, predictions))

print(confusion_matrix(y_test, predictions))

score2 = np.around(

np.mean(cross_val_score(model2, X_test, y_test, cv=5, scoring="roc_auc")),

decimals=4,

)

print("Score : {}".format(score2))

model3 = LogisticRegression()

parameters = {"C": [0.001, 0.01, 0.1, 1, 10, 100]}

grid = GridSearchCV(estimator=model3, param_grid=parameters, cv=5)

grid.fit(X_train, y_train)

score3 = np.around(

np.mean(cross_val_score(model3, X_test, y_test, cv=5, scoring="roc_auc")),

decimals=4,

)

print("Score : {}".format(score3))

names = []

scores = []

names.extend(["SVC", "RF", "KNN", "LR"])

scores.extend([roc_auc, score1, score2, score3])

algorithms = pd.DataFrame({"Name": names, "Score": scores})

algorithms

print("Most accurate:\n{}".format(algorithms.max()))

|

import numpy as np # linear algebra

import pandas as pd # data processing, CSV file I/O (e.g. pd.read_csv)

# Input data files are available in the "../input/" directory.

# For example, running this (by clicking run or pressing Shift+Enter) will list all files under the input directory

import os

for dirname, _, filenames in os.walk("/kaggle/input"):

for filename in filenames:

print(os.path.join(dirname, filename))

# Any results you write to the current directory are saved as output.

import pandas as pd

import numpy as np

import re

from tqdm import tqdm_notebook

from sklearn.preprocessing import StandardScaler, OneHotEncoder, LabelEncoder

from sklearn.model_selection import train_test_split, GridSearchCV, RandomizedSearchCV

import pickle

from sklearn.impute import SimpleImputer

from sklearn.metrics import roc_auc_score

from sklearn.model_selection import KFold

from xgboost import XGBClassifier

from sklearn.metrics import roc_auc_score

from sklearn.model_selection import train_test_split

import matplotlib.pyplot as plt

import seaborn as sns

import warnings

warnings.filterwarnings("ignore")

df = pd.read_csv("/kaggle/input/widsdatathon2020/training_v2.csv")

test = pd.read_csv("/kaggle/input/widsdatathon2020/unlabeled.csv")

print("Shape of the data is {}".format(df.shape))

print("Shape of the test data is {}".format(test.shape))

# * I dropped the columns which had more than 20 % missing values

# * After analysing the data I divided columns into following sections

target_column = "hospital_death"

doubtful_columns = [

"cirrhosis",

"diabetes_mellitus",

"immunosuppression",

"hepatic_failure",

"leukemia",

"lymphoma",

"solid_tumor_with_metastasis",

"gcs_unable_apache",

]

cols_with_around_70_percent_zeros = [

"intubated_apache",

"ventilated_apache",

]

cols_with_diff_dist_in_test = ["hospital_id", "icu_id"]

selected_columns = [

"d1_spo2_max",

"d1_diasbp_max",

"d1_temp_min",

"h1_sysbp_max",

"gender",

"heart_rate_apache",

"weight",

"icu_stay_type",

"d1_mbp_max",

"h1_resprate_max",

"d1_heartrate_min",

"apache_post_operative",

"apache_4a_hospital_death_prob",

"d1_mbp_min",

"apache_4a_icu_death_prob",

"d1_sysbp_max",

"icu_type",

"apache_3j_bodysystem",

"h1_sysbp_min",

"h1_resprate_min",

"d1_resprate_max",

"h1_mbp_min",

"ethnicity",

"arf_apache",

"resprate_apache",

"map_apache",

"temp_apache",

"icu_admit_source",

"h1_spo2_min",

"d1_spo2_min",

"d1_resprate_min",

"h1_mbp_max",

"height",

"age",

"h1_diasbp_max",

"d1_sysbp_min",

"pre_icu_los_days",

"d1_heartrate_max",

"d1_diasbp_min",

"apache_2_bodysystem",

"gcs_eyes_apache",

"apache_2_diagnosis",

"gcs_motor_apache",

"d1_temp_max",

"h1_spo2_max",

"h1_heartrate_max",

"bmi",

"d1_glucose_min",

"h1_heartrate_min",

"gcs_verbal_apache",

"apache_3j_diagnosis",

"d1_glucose_max",

"h1_diasbp_min",

]

print(f"Total number of diff. dist. columns are {len(cols_with_diff_dist_in_test)}")

print(

f"Total number of columns with 70% 0s are {len(cols_with_around_70_percent_zeros)}"

)

print(f"Total number of doubtful columns are {len(doubtful_columns)}")

print(f"Total number of selected columns are {len(selected_columns)}")

(

len(selected_columns)

+ len(cols_with_around_70_percent_zeros)

+ len(cols_with_diff_dist_in_test)

+ len(doubtful_columns)

)

# Dividing Columns into Categories

continuous_columns = [

"d1_spo2_max",

"d1_diasbp_max",

"d1_temp_min",

"h1_sysbp_max",

"heart_rate_apache",

"weight",

"d1_mbp_max",

"h1_resprate_max",

"d1_heartrate_min",

"apache_4a_hospital_death_prob",

"d1_mbp_min",

"apache_4a_icu_death_prob",

"d1_sysbp_max",

"h1_sysbp_min",

"h1_resprate_min",

"d1_resprate_max",

"h1_mbp_min",

"resprate_apache",

"map_apache",

"temp_apache",

"h1_spo2_min",

"d1_spo2_min",

"d1_resprate_min",

"h1_mbp_max",

"height",

"age",

"h1_diasbp_max",

"d1_sysbp_min",

"pre_icu_los_days",

"d1_heartrate_max",

"d1_diasbp_min",

"gcs_eyes_apache",

"gcs_motor_apache",

"d1_temp_max",

"h1_spo2_max",

"h1_heartrate_max",

"bmi",

"d1_glucose_min",

"h1_heartrate_min",

"gcs_verbal_apache",

"d1_glucose_max",

"h1_diasbp_min",

]

binary_columns = [

"apache_post_operative",

"arf_apache",

"cirrhosis",

"diabetes_mellitus",

"immunosuppression",

"hepatic_failure",

"leukemia",

"lymphoma",

"solid_tumor_with_metastasis",

"gcs_unable_apache",

"intubated_apache",

"ventilated_apache",

]

categorical_columns = [

"icu_stay_type",

"icu_type",

"apache_3j_bodysystem",

"ethnicity",

"gender",

"icu_admit_source",

"apache_2_bodysystem",

"apache_2_diagnosis",

"apache_3j_diagnosis",

]

high_cardinality_columns = ["hospital_id", "icu_id"]

print(

len(continuous_columns)

+ len(binary_columns)

+ len(categorical_columns)

+ len(high_cardinality_columns)

)

columns_to_be_used = list(

set(

doubtful_columns

+ cols_with_around_70_percent_zeros

+ cols_with_diff_dist_in_test

+ selected_columns

)

)

print(f"Total columns to be used initially are {len(columns_to_be_used)}")

categorical_columns = list(set(categorical_columns))

continuous_columns = list(set(continuous_columns))

binary_columns = list(set(binary_columns))

high_cardinality_columns = list(set(high_cardinality_columns))

print(f"Total categorical columns to be used initially are {len(categorical_columns)}")

print(f"Total continuous columns to be used initially are {len(continuous_columns)}")

print(f"Total binary_columns to be used initially are {len(binary_columns)}")

print(

f"Total high_cardinality_columns to be used initially are {len(high_cardinality_columns)}"

)

# Taking subset of the database with the above selected columns

df_train, Y_tr = df[columns_to_be_used], df[target_column]

df_test = test[columns_to_be_used]

print(df_train.shape, Y_tr.shape, df_test.shape)

# Using Label Encoder to encode text into integer classes

# for categorical label encoding

cat_labenc_mapping = {col: LabelEncoder() for col in categorical_columns}

for col in tqdm_notebook(categorical_columns):

df_train[col] = df_train[col].astype("str")

cat_labenc_mapping[col] = cat_labenc_mapping[col].fit(

np.unique(df_train[col].unique().tolist() + df_test[col].unique().tolist())

)

df_train[col] = cat_labenc_mapping[col].transform(df_train[col])

for col in tqdm_notebook(categorical_columns):

print()

df_test[col] = df_test[col].astype("str")

df_test[col] = cat_labenc_mapping[col].transform(df_test[col])

# ## Imputation

# 1. Imputing missing values in continuous columns by Median

# * I am considering high cardinality columns as continuous columns only

# 2. Imputing missing values in categorical columns by Mode

# imputing

# for categorical

cat_col2imputer_mapping = {

col: SimpleImputer(strategy="most_frequent") for col in categorical_columns

}

# for continuous

cont_col2imputer_mapping = {

col: SimpleImputer(strategy="median") for col in continuous_columns

}

# for binary

bin_col2imputer_mapping = {

col: SimpleImputer(strategy="most_frequent") for col in binary_columns

}

# for high cardinality

hicard_col2imputer_mapping = {

col: SimpleImputer(strategy="median") for col in high_cardinality_columns

}

all_imp_dicts = [

cat_col2imputer_mapping,

cont_col2imputer_mapping,

bin_col2imputer_mapping,

hicard_col2imputer_mapping,

]

# fitting imputers

for imp_mapping_obj in tqdm_notebook(all_imp_dicts):

for col, imp_object in imp_mapping_obj.items():

data = df_train[col].values.reshape(-1, 1)

imp_object.fit(data)

# transofrming imputed columns

# fitting imputers

for imp_mapping_obj in tqdm_notebook(all_imp_dicts):

for col, imp_object in imp_mapping_obj.items():

data = df_train[col].values.reshape(-1, 1)

data = imp_object.transform(data)

df_train[col] = list(

data.reshape(

-1,

)

)

# inputing on test

for imp_mapping_obj in tqdm_notebook(all_imp_dicts):

for col, imp_object in imp_mapping_obj.items():

data = df_test[col].values.reshape(-1, 1)

data = imp_object.transform(data)

df_test[col] = list(

data.reshape(

-1,

)

)

# Using sklearn's train test split to create validation set

# train_test split

X_train, X_eval, Y_train, Y_eval = train_test_split(

df_train, Y_tr, test_size=0.15, stratify=Y_tr

)

X_train.shape, X_eval.shape, Y_train.shape, Y_eval.shape

# ## Hyper Parameter Tuning

# ### Step 1. Finding n_estimators after fixing other parameters

# - max_depth = 5 : This should be between 3-10. I’ve started with 5 but you can choose a different number as well. 4-6 can be good starting points.

# - min_child_weight = 1 : A smaller value is chosen because it is a highly imbalanced class problem and leaf nodes can have smaller size groups.

# - gamma = 0 : A smaller value like 0.1-0.2 can also be chosen for starting. This will anyways be tuned later.

# > - subsample, colsample_bytree = 0.8 : This is a commonly used used start value. Typical values range between 0.5-0.9.

# - scale_pos_weight = 1: Because of high class imbalance.

#

# tuning tree specific features

gkf = KFold(n_splits=3, shuffle=True, random_state=42).split(X=X_train, y=Y_train)

fit_params_of_xgb = {

"early_stopping_rounds": 100,

"eval_metric": "auc",

"eval_set": [(X_eval, Y_eval)],

# 'callbacks': [lgb.reset_parameter(learning_rate=learning_rate_010_decay_power_099)],

"verbose": 100,

}

# A parameter grid for XGBoost

params = {

"booster": ["gbtree"],

"learning_rate": [0.1],

"n_estimators": range(100, 500, 100),

"min_child_weight": [1],

"gamma": [0],

"subsample": [0.8],

"colsample_bytree": [0.8],

"max_depth": [5],

"scale_pos_weight": [1],

}

xgb_estimator = XGBClassifier(

objective="binary:logistic",

# silent=True,

)

gsearch = GridSearchCV(

estimator=xgb_estimator,

param_grid=params,

scoring="roc_auc",

n_jobs=-1,

cv=gkf,

verbose=3,

)

# gsearch = RandomizedSearchCV(

# estimator=xgb_estimator,

# param_distributions=params,

# scoring='roc_auc',

# n_jobs=-1,

# cv=gkf, verbose=3

# )

xgb_model = gsearch.fit(X=X_train, y=Y_train, **fit_params_of_xgb)

gsearch.best_params_, gsearch.best_score_

# - Now we will fix this n_estimator=200 and learning_rate=0.1 value and find out others.

# ## Step 2. Finding min_child_weight and max_depth

gkf = KFold(n_splits=3, shuffle=True, random_state=42).split(X=X_train, y=Y_train)

fit_params_of_xgb = {

"early_stopping_rounds": 100,

"eval_metric": "auc",

"eval_set": [(X_eval, Y_eval)],

# 'callbacks': [lgb.reset_parameter(learning_rate=learning_rate_010_decay_power_099)],

"verbose": 100,

}

# A parameter grid for XGBoost

params = {

"booster": ["gbtree"],

"learning_rate": [0.1],

"n_estimators": [300],

"gamma": [0],

"subsample": [0.8],

"colsample_bytree": [0.8],

"scale_pos_weight": [1],

"max_depth": range(2, 7, 2),

"min_child_weight": range(2, 8, 2),

}

xgb_estimator = XGBClassifier(

objective="binary:logistic",

silent=True,

)

gsearch = GridSearchCV(

estimator=xgb_estimator,

param_grid=params,

scoring="roc_auc",

n_jobs=-1,

cv=gkf,

verbose=3,

)

xgb_model = gsearch.fit(X=X_train, y=Y_train, **fit_params_of_xgb)

gsearch.best_params_, gsearch.best_score_

# ## Tuning Gamma

gkf = KFold(n_splits=3, shuffle=True, random_state=42).split(X=X_train, y=Y_train)

fit_params_of_xgb = {

"early_stopping_rounds": 100,

"eval_metric": "auc",

"eval_set": [(X_eval, Y_eval)],

# 'callbacks': [lgb.reset_parameter(learning_rate=learning_rate_010_decay_power_099)],

"verbose": 100,

}

# A parameter grid for XGBoost

params = {

"booster": ["gbtree"],

"learning_rate": [0.1],

"n_estimators": [300],

"subsample": [0.8],

"colsample_bytree": [0.8],

"scale_pos_weight": [1],

"max_depth": [4],

"min_child_weight": [6],

"gamma": [0, 0.01, 0.01],

}

xgb_estimator = XGBClassifier(

objective="binary:logistic",

silent=True,

)

gsearch = GridSearchCV(

estimator=xgb_estimator,

param_grid=params,

scoring="roc_auc",

n_jobs=-1,

cv=gkf,

verbose=3,

)

# gsearch = RandomizedSearchCV(

# estimator=xgb_estimator,

# param_distributions=params,

# scoring='roc_auc',

# n_jobs=-1,

# cv=gkf, verbose=3

# )

xgb_model = gsearch.fit(X=X_train, y=Y_train, **fit_params_of_xgb)

gsearch.best_params_, gsearch.best_score_

# ## Tuning subsample and colsample_bytree

gkf = KFold(n_splits=3, shuffle=True, random_state=42).split(X=X_train, y=Y_train)

fit_params_of_xgb = {

"early_stopping_rounds": 100,

"eval_metric": "auc",

"eval_set": [(X_eval, Y_eval)],

# 'callbacks': [lgb.reset_parameter(learning_rate=learning_rate_010_decay_power_099)],

"verbose": 100,

}

# A parameter grid for XGBoost

params = {

"booster": ["gbtree"],

"learning_rate": [0.1],

"n_estimators": [300],

"scale_pos_weight": [1],

"max_depth": [4],

"min_child_weight": [6],

"gamma": [0],

"subsample": [i / 10.0 for i in range(2, 5)],

"colsample_bytree": [i / 10.0 for i in range(8, 10)],

}

xgb_estimator = XGBClassifier(

objective="binary:logistic",

silent=True,

)

gsearch = GridSearchCV(

estimator=xgb_estimator,

param_grid=params,

scoring="roc_auc",

n_jobs=-1,

cv=gkf,

verbose=3,

)

# gsearch = RandomizedSearchCV(

# estimator=xgb_estimator,

# param_distributions=params,

# scoring='roc_auc',

# n_jobs=-1,

# cv=gkf, verbose=3

# )

xgb_model = gsearch.fit(X=X_train, y=Y_train, **fit_params_of_xgb)

gsearch.best_params_, gsearch.best_score_

# ## Tuning reg_alpha

gkf = KFold(n_splits=3, shuffle=True, random_state=42).split(X=X_train, y=Y_train)

fit_params_of_xgb = {

"early_stopping_rounds": 100,

"eval_metric": "auc",

"eval_set": [(X_eval, Y_eval)],

# 'callbacks': [lgb.reset_parameter(learning_rate=learning_rate_010_decay_power_099)],

"verbose": 100,

}

# A parameter grid for XGBoost

params = {

"booster": ["gbtree"],

"learning_rate": [0.1],

"n_estimators": [300],

"scale_pos_weight": [1],

"max_depth": [4],

"min_child_weight": [6],

"gamma": [0],

"subsample": [0.4],

"colsample_bytree": [0.8],

"reg_alpha": [1, 0.5, 0.1, 0.08],

}

xgb_estimator = XGBClassifier(

objective="binary:logistic",

silent=True,

)

gsearch = GridSearchCV(

estimator=xgb_estimator,

param_grid=params,

scoring="roc_auc",

n_jobs=-1,

cv=gkf,

verbose=3,

)

# gsearch = RandomizedSearchCV(

# estimator=xgb_estimator,

# param_distributions=params,

# scoring='roc_auc',

# n_jobs=-1,

# cv=gkf, verbose=3

# )

xgb_model = gsearch.fit(X=X_train, y=Y_train, **fit_params_of_xgb)

gsearch.best_params_, gsearch.best_score_

# ## Reducing learning Rate and adding more Trees

gkf = KFold(n_splits=3, shuffle=True, random_state=42).split(X=X_train, y=Y_train)

fit_params_of_xgb = {

"early_stopping_rounds": 100,

"eval_metric": "auc",

"eval_set": [(X_eval, Y_eval)],

# 'callbacks': [lgb.reset_parameter(learning_rate=learning_rate_010_decay_power_099)],

"verbose": 100,

}

# A parameter grid for XGBoost

params = {

"booster": ["gbtree"],

"learning_rate": [0.01],

"n_estimators": range(1000, 6000, 1000),

"scale_pos_weight": [1],

"max_depth": [4],

"min_child_weight": [6],

"gamma": [0],

"subsample": [0.4],

"colsample_bytree": [0.8],

"reg_alpha": [0.08],

}

xgb_estimator = XGBClassifier(

objective="binary:logistic",

silent=True,

)

gsearch = GridSearchCV(

estimator=xgb_estimator,

param_grid=params,

scoring="roc_auc",

n_jobs=-1,

cv=gkf,

verbose=3,

)

xgb_model = gsearch.fit(X=X_train, y=Y_train, **fit_params_of_xgb)

gsearch.best_params_, gsearch.best_score_

|

# Data processing

import numpy as np

import pandas as pd

from keras.utils.np_utils import to_categorical

from sklearn.model_selection import train_test_split

from keras.preprocessing.image import ImageDataGenerator

# Dealing with warnings

import warnings

warnings.filterwarnings("ignore")

# Plotting the data

import matplotlib.pyplot as plt

import seaborn as sns

sns.set(style="whitegrid")

# Layers of NN

from keras.models import Sequential

from keras.layers.convolutional import Conv2D, MaxPooling2D

from keras.layers.core import Dense, Dropout, Activation, Flatten

# Optimizers

from keras.optimizers import SGD

# Metrics

from sklearn.metrics import log_loss, confusion_matrix

import itertools

import os

for dirname, _, filenames in os.walk("/kaggle/input"):

for filename in filenames:

print(os.path.join(dirname, filename))

# Load train and test data

train = pd.read_csv("../input/digit-recognizer/train.csv")

test = pd.read_csv("../input/digit-recognizer/test.csv")

train.head()

# Shape of training data

train.shape

# 42k images, each with 784 features (pixels) of each images

# Shape of training data

test.shape

# 28k images, each with 784 features (pixels) of each images

# Checking for any null values in training data

train[train.isna().any(1)]

# Checking for any null values in testing data

test[test.isna().any(1)]

# Count of each label

sns.countplot(train["label"])

# Showing image

import random

sample_index = random.choice(range(0, 42000))

sample_image = train.iloc[sample_index, 1:].values.reshape(28, 28)

plt.imshow(sample_image)

plt.grid("off")

plt.show()

# Showing with gray map

plt.imshow(sample_image, cmap=plt.get_cmap("gray"))

plt.grid("off")

plt.show()

# Preparing the data

y = train[["label"]]

X = train.drop(labels=["label"], axis=1)

# Standardizing the values

X = X / 255.0

# Making class labels as one hot encoding

y_ohe = to_categorical(y)

# Splitting the data

X_train, X_test, y_train, y_test = train_test_split(

X, y_ohe, stratify=y_ohe, test_size=0.3

)

# Verifying shape of datasets

X_train.shape, X_test.shape, y_train.shape, y_test.shape

# ### Normal ANN without using convolution

# Sequential model is linear stackk of layers.

# Since the model needs to know the shape of input it is receiving, the first layer of model will have the

# input_shape parameter.

# Dense is our normal fully connected layer.

# Dropout layer is applying dropout to a fraction of neurons in particular layer, that means making their weight as 0.

# activation is relu for hidden layer and softmax for output layer (class probabilities).

# Making a basic vanilla neural network to do classification

model = Sequential()

model.add(Dense(units=256, input_shape=(784,), activation="relu"))

model.add(Dropout(rate=0.2))

model.add(Dense(units=256, activation="relu"))

model.add(Dropout(rate=0.2))

model.add(Dense(units=128, activation="relu"))

model.add(Dropout(rate=0.2))

model.add(Dense(units=64, activation="relu"))

model.add(Dense(units=10, activation="softmax"))

# Compile the model

# Before compiling the model we can actually set some parameters of whichever optimizer we choose

model.compile(loss="categorical_crossentropy", optimizer="adam", metrics=["accuracy"])

# Summary of the model

model.summary()

history = model.fit(

x=X_train,

y=y_train,

batch_size=32,

epochs=30,

verbose=1,

validation_data=(X_test, y_test),

)

# Plotting the train and validation set accuracy and error

plt.figure(figsize=(20, 6))

plt.subplot(1, 2, 1)

plt.plot(history.history["accuracy"])

plt.plot(history.history["val_accuracy"])

plt.title("model accuracy")

plt.ylabel("accuracy")

plt.xlabel("epoch")

plt.legend(["train", "validation"], loc="center")

plt.subplot(1, 2, 2)

plt.plot(history.history["loss"])

plt.plot(history.history["val_loss"])

plt.title("model loss")

plt.ylabel("loss")

plt.xlabel("epoch")

plt.legend(["train", "validation"], loc="center")

plt.show()

# Predicting the output probabilities

y_pred = model.predict(x=X_test, batch_size=32, verbose=1)

# Calculating log loss

log_loss(y_test, y_pred)

# The log loss is very good

# Converting the probabilitiies as such that highest value will get 1 else 0

y_pred = np.round(y_pred, 2)

b = np.zeros_like(y_pred)

b[np.arange(len(y_pred)), y_pred.argmax(1)] = 1

# Now calcualting the log loss

log_loss(y_test, b)

# It has increased now

# ### Using normal CNN without using Data Augmentation

# CNN stands for convolutional NN majorly due to the convolution operator we have. It works with 2D input array

# (actually 3D as channel value is also expected).

# It has majorly two parts convolution and pooling (normally max-pooling, but we have other poolings also).

# In convolution layer, we convolve inout image with something called kernels, which help us identify features of

# images like edges, corners, round shapes etc. The output of this is called feature map.

# Convolution happens through strides, we can have values for strides and we also have padding as kernels will have

# situation where they will not have data fitting the image properly.

# We have two types of padding, VALID and SAME.

# VALID padding means no padding, so in that case there will be loss of information. Valid actually means that it will

# only take valid data for the operatioon and will not add any padding.

# SAME padding means zero padding. Here in this case, we actually add 0 padding to match our kernel size with our data

# we will not lose any information in this case.

# This applies to conv layers and pool layers both.

# In a typical CNN, we can have multiple conv layers followed by pool layers to get the feature maps. In hidden layers

# it is typical to have more kernels to get the complex feature maps of the data.

# CNN lacks one thing which Hinton tried to solve in CapsuleNet and that is relative location of elements of images.