script

stringlengths 113

767k

|

|---|

#

# Version Updates

#

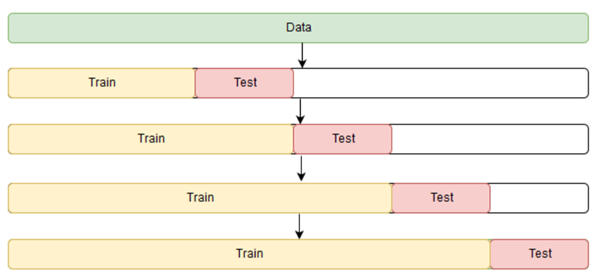

# Train Test Split for better Evaluation

# Transfer Learning with EfficinetNetB0

#

#

# ## Setup

import pandas as pd

import numpy as np

import cv2

import os

import random

import matplotlib.pyplot as plt

import seaborn as sns

from tensorflow.keras.applications import EfficientNetB0

from tensorflow.keras.layers import GlobalAveragePooling2D

from tensorflow.keras.models import Model

import tensorflow as tf

import tensorflow_hub as hub

os.environ["TF_CPP_MIN_LOG_LEVEL"] = "3"

from keras.models import Sequential

from keras.layers import Dense, Flatten, Conv2D, MaxPool2D

from keras.layers import Input, Activation, LeakyReLU, Dropout

from keras.losses import BinaryCrossentropy

try:

from keras.optimizer import Adam

except:

from tensorflow.keras.optimizers import Adam

from tensorflow.keras.callbacks import TensorBoard

from tensorflow.keras.preprocessing.image import ImageDataGenerator

from sklearn.metrics import classification_report, confusion_matrix

# ## About Brain Tumor

# A brain tumor is a collection, or mass, of abnormal cells in your brain. Your skull, which encloses your brain, is very rigid. Any growth inside such a restricted space can cause problems.

# Brain tumors can be cancerous (malignant) or noncancerous (benign). When benign or malignant tumors grow, they can cause the pressure inside your skull to increase. This can cause brain damage, and it can be life-threatening.

# ### **How is Brain Tumor diagnosed?**

# ### **Magnetic resonance imaging (MRI)**

# An MRI uses magnetic fields to produce detailed images of the body. MRI can be used to measure the tumor’s size. A special dye called a contrast medium is given before the scan to create a clearer picture. This dye can be injected into a patient’s vein or given as a pill or liquid to swallow. MRIs create more detailed pictures than CT scans (see below) and are the preferred way to diagnose a brain tumor. The MRI may be of the brain, spinal cord, or both, depending on the type of tumor suspected and the likelihood that it will spread in the CNS. There are different types of MRI. The results of a neuro-examination, done by the internist or neurologist, helps determine which type of MRI to use.

# Here we have 253 samples of Brain MRI images, 98 of which show no tumor and 155 show brain tumor. Using a Convolutional Neural Network, we try to **classify whether the sample shows tumor or not**

#

import warnings

warnings.filterwarnings("ignore")

MAIN_DIR = (

"/kaggle/input/brain-mri-images-for-brain-tumor-detection/brain_tumor_dataset/"

)

SEED = 40

# ## Preparing the Image Data

os.listdir(MAIN_DIR)

for dirpath, dirnames, filenames in os.walk(MAIN_DIR):

print(f"{len(dirnames)} directories and {len(filenames)} images in {dirpath}")

# Inspect the raw data before preprocessing

def view_random_image():

subdirs = ["yes/", "no/"]

subdir = np.random.choice(subdirs)

target_folder = MAIN_DIR + subdir

random_image = random.sample(os.listdir(target_folder), 1)

img = cv2.imread(target_folder + random_image[0])

plt.imshow(img, cmap="gray")

plt.axis(False)

plt.title(img.shape)

plt.show()

# View Random Image

view_random_image()

# ### Image Data Generator

IMG_SHAPE = (128, 128)

BATCH_SIZE = 32

datagen = ImageDataGenerator(rescale=1 / 255.0, validation_split=0.5)

train_data = datagen.flow_from_directory(

MAIN_DIR,

target_size=IMG_SHAPE,

batch_size=BATCH_SIZE,

class_mode="binary",

shuffle=True,

subset="training",

)

test_data = datagen.flow_from_directory(

MAIN_DIR,

target_size=IMG_SHAPE,

batch_size=BATCH_SIZE,

class_mode="binary",

shuffle=True,

subset="validation",

)

len(train_data), len(test_data)

# ## Convolutional Neural Network

tf.random.set_seed(SEED)

model = Sequential(

[

Conv2D(filters=64, kernel_size=3, activation="relu"),

Conv2D(32, 3, activation="relu"),

MaxPool2D(pool_size=2),

Conv2D(32, 3, activation="relu"),

Conv2D(16, 3, activation="relu"),

MaxPool2D(2, padding="same"),

Flatten(),

Dense(1, activation="sigmoid"),

]

)

# Compile the model

model.compile(loss=BinaryCrossentropy(), optimizer=Adam(), metrics=["accuracy"])

# Fit the model

history = model.fit(

train_data,

epochs=10,

steps_per_epoch=len(train_data),

validation_data=test_data,

validation_steps=len(test_data),

)

# ## Evaluation

# Function to plot loss curves

def plot_curves(history):

"""

Returns separate loss and accuracy curves

"""

import matplotlib.pyplot as plt

loss = history.history["loss"]

val_loss = history.history["val_loss"]

accuracy = history.history["accuracy"]

val_accuracy = history.history["val_accuracy"]

epochs = range(len(history.history["loss"]))

plt.plot(epochs, loss, label="training_loss")

plt.plot(epochs, val_loss, label="val_loss")

plt.title("loss")

plt.xlabel("epochs")

plt.legend()

plt.figure()

plt.plot(epochs, accuracy, label="training_accuracy")

plt.plot(epochs, val_accuracy, label="val_accuracy")

plt.title("accuracy")

plt.xlabel("epochs")

plt.legend()

plot_curves(history)

result = model.evaluate(test_data, verbose=0)

print(f"Accuracy on Evaluation: {result[1]*100:.2f}%\nLoss: {result[0]:.4f}")

# Set up data generators

train_dir = "/kaggle/input/brain-mri-images-for-brain-tumor-detection/"

valid_dir = "/kaggle/input/brain-mri-images-for-brain-tumor-detection/"

img_size = (224, 224)

batch_size = 32

train_datagen = ImageDataGenerator(

rescale=1.0 / 255,

rotation_range=20,

shear_range=0.2,

zoom_range=0.2,

horizontal_flip=True,

)

valid_datagen = ImageDataGenerator(rescale=1.0 / 255)

train_generator = train_datagen.flow_from_directory(

train_dir, target_size=img_size, batch_size=batch_size, class_mode="binary"

)

valid_generator = valid_datagen.flow_from_directory(

valid_dir, target_size=img_size, batch_size=batch_size, class_mode="binary"

)

# Load EfficientNetB0 model

base_model = EfficientNetB0(

include_top=False, input_shape=(img_size[0], img_size[1], 3)

)

# Add new classification layers

x = GlobalAveragePooling2D()(base_model.output)

x = Dense(128, activation="relu")(x)

x = Dense(1, activation="sigmoid")(x)

model = Model(inputs=base_model.input, outputs=x)

# Freeze base model layers

for layer in base_model.layers:

layer.trainable = False

# Compile model

model.compile(optimizer="adam", loss="binary_crossentropy", metrics=["accuracy"])

# Train model

epochs = 10

history = model.fit(train_generator, epochs=epochs, validation_data=valid_generator)

model.save("model.h5")

from tensorflow.keras.preprocessing import image

# Load the model

model = tf.keras.models.load_model("model.h5")

# Load an example image and preprocess it

img_path = "/kaggle/input/brain-mri-images-for-brain-tumor-detection/no/1 no.jpeg"

img = image.load_img(img_path, target_size=(224, 224))

img_array = image.img_to_array(img)

img_array = np.expand_dims(img_array, axis=0)

img_array = img_array / 255.0

# Make a prediction on the image

prediction = model.predict(img_array)

# Convert the prediction to a label (0 for no tumor, 1 for tumor)

label = int(np.round(prediction)[0][0])

if label == 0:

print("No tumor detected.")

else:

print("Tumor detected.")

# ## Transfer Learning with EfficientNetB0

# EfficientNetB0 Feature Vector

effnet_url = "https://tfhub.dev/tensorflow/efficientnet/b0/feature-vector/1"

# Feature Layer

effnet_layer = hub.KerasLayer(

effnet_url, trainable=False, name="feature_extraction_layer"

)

# Create Sequential model

effnet_model = Sequential([effnet_layer, Dense(1, activation="sigmoid")])

# Compile the model

effnet_model.compile(loss=BinaryCrossentropy(), optimizer=Adam(), metrics=["accuracy"])

# Fit the model

effnet_history = effnet_model.fit(

train_data,

epochs=10,

steps_per_epoch=len(train_data),

validation_data=test_data,

validation_steps=len(test_data),

)

plot_curves(effnet_history)

result = effnet_model.evaluate(test_data, verbose=0)

print(f"Accuracy on Evaluation: {result[1]*100:.2f}%\nLoss: {result[0]:.4f}")

|

# # CNN (Convolutional Neural Network) on Sign Language Digits Dataset

# **Introduction**

#

# In this kernel I try to guess to pictures which are sign language correctly by using CNN. I have used Keras Library.

# **Content**

# [Import Data](#import)

# [Visualize](#visualize)

# [Train-Test Split](#train-test_split)

# [Reshaping](#reshaping)

# [Creating Model](#creating_model)

# [Building Model](#building_model)

# [Defining and Compiling of Optimizer](#optimizer)

# [Accuracy](#accuracy)

# [Conclusion](#conclusion)

#

import numpy as np # linear algebra

import pandas as pd # data processing, CSV file I/O (e.g. pd.read_csv)

import matplotlib.pyplot as plt

import seaborn as sns

# Input data files are available in the "../input/" directory.

# For example, running this (by clicking run or pressing Shift+Enter) will list all files under the input directory

import os

for dirname, _, filenames in os.walk("/kaggle/input"):

for filename in filenames:

print(os.path.join(dirname, filename))

# Any results you write to the current directory are saved as output.

#

# **Import Data**

# Import data

data_x = np.load(

"../input/sign-language-digits-dataset/Sign-language-digits-dataset/X.npy"

)

data_y = np.load(

"../input/sign-language-digits-dataset/Sign-language-digits-dataset/Y.npy"

)

#

# **Visualize**

#

# Visualize to samples

img_size = 64

plt.subplot(1, 3, 1)

plt.imshow(data_x[200].reshape(img_size, img_size))

plt.axis("off")

plt.subplot(1, 3, 2)

plt.imshow(data_x[800].reshape(img_size, img_size))

plt.axis("off")

plt.subplot(1, 3, 3)

plt.imshow(data_x[600].reshape(img_size, img_size))

plt.axis("off")

#

# **Train-Test Split**

# Train-Test Split

from sklearn.model_selection import train_test_split

x_train, x_test, y_train, y_test = train_test_split(

data_x, data_y, test_size=0.2, random_state=42

)

# Size of elements of train_test_split methods

print("x train shape: {}".format(x_train.shape))

print("y train shape: {}".format(y_train.shape))

print("x test shape: {}".format(x_test.shape))

print("y test shape: {}".format(y_test.shape))

#

# **Reshaping**

# Reshaping. We reshape x_train and x_test because Keras requires 3 dimention.

x_train = x_train.reshape(-1, 64, 64, 1)

x_test = x_test.reshape(-1, 64, 64, 1)

# New size of x_train and x_shape

print("x train shape: {}".format(x_train.shape))

print("x test shape: {}".format(x_test.shape))

#

# **Creating Model**

# Creating Model

from sklearn.metrics import confusion_matrix

import itertools

from keras.utils.np_utils import to_categorical # convert to one-hot-encoding

from keras.models import Sequential

from keras.layers import Dense, Dropout, Flatten, Conv2D, MaxPool2D

from keras.optimizers import RMSprop, Adam

from keras.preprocessing.image import ImageDataGenerator

from keras.callbacks import ReduceLROnPlateau

#

# **Building Model**

# building of our model

model = Sequential()

# we add convolutional layer, count of filter = 64, kernel_size means that dimension of filter.

model.add(

Conv2D(

filters=64,

kernel_size=(5, 5),

padding="Same",

activation="relu",

input_shape=(64, 64, 1),

)

)

# dimension of (64,64,1) is 3 because kernel requires 3 dimensions. Number "1" shows that it is used as gray scale.

model.add(MaxPool2D(pool_size=(2, 2)))

model.add(Dropout(0.25))

# we rewrite the top one. We don't have to write input shape because these are things that are connected to each other like chains.

model.add(Conv2D(filters=128, kernel_size=(3, 3), padding="Same", activation="relu"))

model.add(MaxPool2D(pool_size=(2, 2), strides=(2, 2)))

model.add(Dropout(0.25))

model.add(Conv2D(filters=128, kernel_size=(3, 3), padding="Same", activation="relu"))

model.add(MaxPool2D(pool_size=(2, 2)))

model.add(Dropout(0.25))

# fully connected

model.add(Flatten())

model.add(Dense(256, activation="relu"))

model.add(Dense(512, activation="relu"))

model.add(Dense(256, activation="relu"))

model.add(Dense(10, activation="softmax"))

# although sigma function is used for binary classification, softmax is a version of sigma function which is used for multi-output classification.

model.summary()

#

# **Defining and Compiling of Optimizer**

#

# defining optimizer

optimizer = Adam(lr=0.001, beta_1=0.9, beta_2=0.999)

# compiling optimizer

model.compile(

optimizer=optimizer, loss="categorical_crossentropy", metrics=["accuracy"]

)

# fitting

history = model.fit(x_train, y_train, epochs=100, validation_data=(x_test, y_test))

#

# **Accuracy**

scores = model.evaluate(x_test, y_test, verbose=0)

print("{}: {:.2f}%".format("accuracy", scores[1] * 100))

|

# Big Five Personality Test

import pandas as pd

import numpy as np

from sklearn.cluster import KMeans

import matplotlib.pyplot as plt

data = pd.read_csv("E:/ML projects/data-final.csv")

x = data.iloc[:, :50]

x.head()

# check null values there

x.isnull().sum()

# fill the values with 0 (1 million>> 1783)

x = x.fillna(0)

x.isnull().sum()

kmc = KMeans(n_clusters=10)

kmc.fit(x)

len(kmc.labels_)

kmc.labels_

# untagging the clusters seperately into 10

one, two, three, four, five, six, seven, eight, nine, ten = kmc.cluster_centers_

one

# observing the distribution of 5 traits in each cluster

# can't take the 1st 10 avg since there are positive and negative statements

oneScores = {}

oneScores["EXT_SCORE"] = (

one[0]

- one[1]

+ one[2]

- one[3]

+ one[4]

- one[5]

+ one[6]

- one[7]

+ one[8]

- one[9]

)

oneScores["EST_SCORE"] = (

one[10]

- one[11]

+ one[12]

- one[13]

+ one[14]

+ one[15]

+ one[16]

+ one[17]

+ one[18]

+ one[19]

)

oneScores["AGR_SCORE"] = (

-one[20]

+ one[21]

- one[22]

+ one[23]

- one[24]

+ one[25]

- one[26]

+ one[27]

+ one[28]

+ one[29]

)

oneScores["CSN_SCORE"] = (

one[30]

- one[31]

+ one[32]

- one[33]

+ one[34]

- one[35]

+ one[36]

- one[37]

+ one[38]

+ one[39]

)

oneScores["OPN_SCORE"] = (

one[40]

- one[41]

+ one[42]

- one[43]

+ one[44]

- one[45]

+ one[46]

- one[47]

+ one[48]

+ one[49]

)

oneScores

types = {

"one": one,

"two": two,

"three": three,

"four": four,

"five": five,

"six": six,

"seven": seven,

"eight": eight,

"nine": nine,

"ten": ten,

}

types.items()

typeScores = {}

for cluster, traitType in types.items():

peronsalScore = {}

peronsalScore["EXT_SCORE"] = (

traitType[0]

- traitType[1]

+ traitType[2]

- traitType[3]

+ traitType[4]

- traitType[5]

+ traitType[6]

- traitType[7]

+ traitType[8]

- traitType[9]

)

peronsalScore["EST_SCORE"] = (

traitType[10]

- traitType[11]

+ traitType[12]

- traitType[13]

+ traitType[14]

+ traitType[15]

+ traitType[16]

+ traitType[17]

+ traitType[18]

+ traitType[19]

)

peronsalScore["AGR_SCORE"] = (

-traitType[20]

+ traitType[21]

- traitType[22]

+ traitType[23]

- traitType[24]

+ traitType[25]

- traitType[26]

+ traitType[27]

+ traitType[28]

+ traitType[29]

)

peronsalScore["CSN_SCORE"] = (

traitType[30]

- traitType[31]

+ traitType[32]

- traitType[33]

+ traitType[34]

- traitType[35]

+ traitType[36]

- traitType[37]

+ traitType[38]

+ traitType[39]

)

peronsalScore["OPN_SCORE"] = (

traitType[40]

- traitType[41]

+ traitType[42]

- traitType[43]

+ traitType[44]

- traitType[45]

+ traitType[46]

- traitType[47]

+ traitType[48]

+ traitType[49]

)

typeScores[cluster] = peronsalScore

typeScores

# since there are negative values, we use min max array to get all positive

# first we have to take the sum of differnt traits of each cluster

totalEXT = []

totalEST = []

totalAGR = []

totalCSN = []

totalOPN = []

for cluster, traitType in typeScores.items():

totalEXT.append(traitType["EXT_SCORE"])

totalEST.append(traitType["EST_SCORE"])

totalAGR.append(traitType["AGR_SCORE"])

totalCSN.append(traitType["CSN_SCORE"])

totalOPN.append(traitType["OPN_SCORE"])

totalEXT

minMaxArray = lambda L: (np.array(L) - np.array(L).min()) / (

np.array(L).max() - np.array(L).min()

)

normExtro = list(minMaxArray(totalEXT))

normEST = list(minMaxArray(totalEST))

normARG = list(minMaxArray(totalAGR))

normCSN = list(minMaxArray(totalCSN))

normOPN = list(minMaxArray(totalOPN))

normCSN

normEST

idx = 0

normTypeScores = {}

for personalityType, personalityScore in typeScores.items():

normPersonScore = {}

normPersonScore["EXT_SCORE"] = normExtro[idx]

normPersonScore["EST_SCORE"] = normEST[idx]

normPersonScore["ARG_SCORE"] = normARG[idx]

normPersonScore["CSN_SCORE"] = normCSN[idx]

normPersonScore["OPN_SCORE"] = normOPN[idx]

normTypeScores[personalityType] = normPersonScore

idx = idx + 1

normTypeScores

# let's see the diffrent traits in cluster 1

plt.bar(list(normTypeScores["one"].keys()), normTypeScores["one"].values())

# let's see the diffrent traits in cluster 7

plt.bar(list(normTypeScores["seven"].keys()), normTypeScores["seven"].values())

|

# Personal Info: [blog](mostafatouny.github.io/), [twitter](@Mostafa_Touny), [email]([email protected])

# ### Preface

# If you wish to see only results without any technical details, hit the above _Run All_ button, skip coding and look at only graphs and tables. At the end there's a summary of the whole notebook's results if you do not like scrolling. Even-though data science methods used here are extremely primitive, I believe there're insights which could be extracted. So, share us your thoughts, as a gamer in interpreting these data. Certainly, they are going to be helpful as these results are just the outset of a long journey I am about to tackle in artifical emotional intellligence in games (still a beginner, anyway).

# ### Goal

# - Top games got high ratings from professional critics but not from community of users

# - Top games got high ratings from community of users but not from progessional critics

# - Graph of percentage of games whose disparity between critics and users are low, moderate, or high

# - Do above steps on four platforms, namely, PS4, Xbox One, Switch, and PC. Then we compare them

# 3rd-party libraries

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

# read input from kaggle into a dataframe

# a map from each platform to its corresponding dataframe

platform_df = {}

# platform names and their corresonding data file names

platformsNames = ["ps4", "xbox", "switch", "pc"]

filesNames = ["ps4.csv", "xbox.csv", "switch.csv", "pc.csv"]

# for each platform, then

for name in platformsNames:

# parse it as pandas dataframe, then map platform name to it

platform_df[name] = pd.read_csv(

"/kaggle/input/metacritic-best-2019-video-games/" + name + ".csv"

)

# take a look at a dataframe

platform_df["ps4"]

platform_df["ps4"].dtypes

# drop unneeded columns and re-organize them

for name in platformsNames:

platform_df[name] = platform_df[name][["title", "user_rating", "critic_rating"]]

# take a look at a dataframe, again

platform_df["xbox"]

# ### compute disparity (difference) between users and critics

# for each platform

for name in platform_df:

# get dataframe of the platform

df = platform_df[name]

# for each record, compute distance between user and critic ratings, then set result to a new column

df["userCritic_difference"] = df.apply(

lambda x: abs(x["user_rating"] - x["critic_rating"]), axis=1

)

# assign updates back to our dataframe

platform_df[name] = df

platform_df["pc"]

# ### discretize disparity computed earlier into categories

# define categories and their intervals

def numToCat(row):

# equal or greater than 30

if row["userCritic_difference"] >= 30:

return "high"

# equal or greater than 20 and less than 30

elif row["userCritic_difference"] >= 20:

return "moderate"

# less than 20

else:

return "low"

# compute categories as defined earlier

# loop on platforms

for platformName in platform_df:

# get dataframe of the platform

df = platform_df[platformName]

# add category based on difference just defined

df["difference_category"] = df.apply(lambda x: numToCat(x), axis=1)

# let categories be recognized by pandas

df["difference_category"] = df["difference_category"].astype("category")

# re-order categories

df["difference_category"] = df["difference_category"].cat.set_categories(

["low", "moderate", "high"]

)

# assign back to our dataframe

platform_df[platformName] = df

# take a look after our new columns added

platform_df["switch"]

# ### sort according to disparity between users and critics

# for each platform

for platformName in platform_df:

# get platform dataframe

df = platform_df[platformName]

# sort it by userCritic_difference

df = df.sort_values(axis=0, by="userCritic_difference", ascending=False)

# assign sorted dataframe back to our dataframe

platform_df[platformName] = df

# ### maximum disparity between users and critics ratings

platform_df["ps4"].head(20)

platform_df["xbox"].head(20)

platform_df["pc"].head(20)

platform_df["switch"].head(20)

# NBA, Fifa, Madden, COD: modern warefare games are on top of nearly all platforms lists

# ### Minimum disparity between users and critics

platform_df["ps4"].tail(20)

# _Star Wars Jedi: Fallen Order_ got zero disparity

# ### Games which got ratings from users higher than from professional critics

# filter only records whose user ratings is greater than critics ratings

def higherUserRatings(platform_in):

return platform_df[platform_in][

platform_df[platform_in]["user_rating"]

> platform_df[platform_in]["critic_rating"]

].head(10)

higherUserRatings("pc")

higherUserRatings("ps4")

higherUserRatings("xbox")

higherUserRatings("switch")

# _Left Alive_ is the most praised game by the community not appreciated by professional critics

# ### Pie graph on disparity between users and critics

plt.close("all")

# for each platform dataframe

for platformName in platform_df:

print("\non platform ", platformName)

# count categories among all records

categories_count = platform_df[platformName].groupby("difference_category").size()

# construct a series based on it

pie_series = pd.Series(categories_count, name="categories percentages")

# plot a pie chart

pie_series.plot.pie(figsize=(6, 6))

plt.show()

# Switch games got much lower percentage of high and moderate disparity

# ### Basic stats on disparity between users and critics

# for each platform

for platformName in platform_df:

# print platform name

print("\n", "on ", platformName)

# show basic stat

print(platform_df[platformName]["userCritic_difference"].describe())

|

import pandas as pd # 导入csv文件的库

import numpy as np # 进行矩阵运算的库

import matplotlib.pyplot as plt # 作图的库

import torch # 一个深度学习的库Pytorch

import torch.nn as nn # neural network,神经网络

from torch.autograd import Variable # 从自动求导中引入变量

import torch.optim as optim # 一个实现了各种优化算法的库

import torch.nn.functional as F # 神经网络函数库

import os # 与操作系统交互,处理文件和目录、管理进程、获取环境变量

from PIL import Image, ImageOps, ImageFilter, ImageEnhance # PIL是图像处理库

import torchvision.transforms as transforms # 图像、视频、文本增强和预处理的库

import zipfile # 对ZIP格式的归档文件进行读取、写入和操作

import warnings # 避免一些可以忽略的报错

warnings.filterwarnings("ignore") # filterwarnings()方法是用于设置警告过滤器的方法,它可以控制警告信息的输出方式和级别。

os.listdir("../input/dogs-vs-cats-redux-kernels-edition") # 返回指定路径下的文件和目录名的列表

zip_files = ["test", "train"]

for zip_file in zip_files:

with zipfile.ZipFile(

"../input/dogs-vs-cats-redux-kernels-edition/{}.zip".format(zip_file), "r"

) as z:

z.extractall(".") # 解压

print("{} unzipped".format(zip_file))

Train_Folder_Path = "../working/train"

Test_Folder_Path = "../working/test"

train_file_names = os.listdir(Train_Folder_Path)

test_file_names = os.listdir(Test_Folder_Path)

targets = []

full_paths = []

for file_name in train_file_names:

target = file_name.split(".")[0]

full_path = os.path.join(Train_Folder_Path, file_name)

full_paths.append(full_path)

targets.append(target)

train_df = pd.DataFrame()

train_df["image_path"] = full_paths

train_df["target"] = targets

full_paths = []

for file_name in test_file_names:

full_path = os.path.join(Test_Folder_Path, file_name)

full_paths.append(full_path)

test_df = pd.DataFrame()

test_df["image_path"] = full_paths

# 定义正则化的标准,3个颜色通道的均值和方差,这是从ImageNet数据集上得出的数值

normalize = transforms.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225])

transform = transforms.Compose(

[

# transforms.RandomRotation(30), #在[-30,30]的范围内随机旋转一个角度

transforms.RandomResizedCrop(

size=128,

scale=(0.8, 1.2),

interpolation=transforms.functional.InterpolationMode.BILINEAR,

), # 先将图像随机进行缩放操作,然后再将图像变成128*128的图像

# transforms.RandomHorizontalFlip(), # 随机水平翻转

# transforms.ColorJitter(brightness=0.5, contrast=0.5, saturation=0.5), # 随机颜色变换

transforms.ToTensor(), # 转换成张量形式

normalize, # 标准化操作

]

)

imgcnt = 25000

data = []

label = []

for i in range(imgcnt):

img = Image.open(train_df["image_path"][i]) # 读取图片

new_img = transform(img)

data.append(new_img.detach().cpu().numpy())

if train_df["target"][i] == "cat":

label.append(0) # 猫是0,狗是1

else:

label.append(1)

if i % 500 == 0:

print(i)

# 划分训练集和测试集

data = np.array(data)

label = np.array(label)

num = [i for i in range(imgcnt)]

np.random.shuffle(num)

data = data[num]

label = label[num]

train_X = data[: 8 * imgcnt // 10]

train_y = label[: 8 * imgcnt // 10]

test_X = data[8 * imgcnt // 10 :]

test_y = label[8 * imgcnt // 10 :]

class CNN(nn.Module):

def __init__(self):

super(CNN, self).__init__()

self.model = nn.Sequential()

# 3*128*128

self.model.add_module("conv1_1", nn.Conv2d(3, 32, 3, 1, 0, bias=False))

# 32*126*126

self.model.add_module("relu1_1", nn.ReLU())

self.model.add_module("maxpool1", nn.MaxPool2d(2, 2, 0))

# 32*63*63

self.model.add_module("batch1", nn.BatchNorm2d(32))

self.model.add_module("conv2_1", nn.Conv2d(32, 64, 3, 1, 0, bias=False))

# 64*61*61

self.model.add_module("SiLU2_1", nn.SiLU())

self.model.add_module("maxpool2", nn.MaxPool2d(2, 2, 0))

# 64*30*30

# 全连接网络层,输入64*30*30,输出10个类别的概率

self.model.add_module("linear1", nn.Linear(64 * 30 * 30, 256))

self.model.add_module("dropout1", nn.Dropout(0.5))

self.model.add_module("Leakyrelu3_1", nn.LeakyReLU())

self.model.add_module("linear2", nn.Linear(256, 2))

self.model.add_module("Tanh3_2", nn.Tanh())

def forward(self, input):

output = input

for name, module in self.model.named_children():

if name == "linear1":

output = output.view(-1, 64 * 30 * 30)

output = module(output)

return F.softmax(output, dim=1)

def weight_init(m):

# 获取对象所属的类的名称

class_name = m.__class__.__name__

# 当对象的name中出现"conv",也就是卷积操作

if class_name.find("conv") != -1:

# 对卷积核按照正态分布的均值和标准差随机初始化

m.weight.data.normal_(0, 0.02)

# 初始化神经网络

netC = CNN()

netC.apply(weight_init)

print(netC)

# 优化器

optimizer = optim.Adam(netC.parameters(), lr=0.0002, betas=(0.5, 0.999))

# 损失函数

criterion = nn.NLLLoss() # 负对数似然损失函数,也是交叉熵损失函数的一种

# 训练周期为20次

num_epochs = 20

for epoch in range(num_epochs):

num = [i for i in range(len(train_X))]

np.random.shuffle(num)

train_X = train_X[num]

train_y = train_y[num]

for i in range(16):

image = []

label = []

for j in range(500):

image.append(train_X[1000 * i + j].reshape((3, 128, 128)))

label.append(train_y[1000 * i + j])

# 将数据转换成可以处理的张量格式

image = torch.Tensor(image) # .to(device)

label = torch.Tensor(label).long() # .to(device)

# 训练

netC.train()

# 将数据放进去训练

output = netC(image) # .to(device)

# 计算每次的损失函数

error = criterion(output, label) # .to(device)

# 反向传播

error.backward()

print(i)

# 优化器进行优化(梯度下降,降低误差)

optimizer.step()

# 将梯度清空

optimizer.zero_grad()

print(epoch, error)

pred_y = []

for i in range(len(train_X)):

pred = netC(torch.Tensor(train_X[i].reshape((1, 3, 128, 128))))[0] # .to(device)

pred = np.argmax(pred.detach().cpu().numpy())

pred_y.append(pred)

if i % 1000 == 0:

print(i)

print("训练集的准确率:", np.sum(pred_y == train_y) / len(train_y), "训练集总数为:", len(train_y))

pred_y = []

for i in range(len(test_X)):

pred = netC(torch.Tensor(test_X[i].reshape((1, 3, 128, 128))))[0]

pred = np.argmax(pred.detach().cpu().numpy())

pred_y.append(pred)

if i % 1000 == 0:

print(i)

print("测试集的准确率:", np.sum(pred_y == test_y) / len(test_y), "测试集总数为:", len(test_y))

torch.save(netC, "catsdogs.pth")

print(

test_df["image_path"][i],

)

imgcnt = 12500

data = []

for i in range(imgcnt):

img = Image.open(test_df["image_path"][i])

new_img = transform(img)

data.append(new_img.detach().cpu().numpy())

if i % 500 == 0:

print(i)

data = np.array(data)

pred_y = [0 for i in range(len(data))]

for i in range(len(data)):

pred = netC(torch.Tensor(data[i].reshape((1, 3, 128, 128))))[0] # .to(device))[0]

pred = np.argmax(pred.detach().cpu().numpy())

t = test_df["image_path"][i].split("/")

t = t[len(t) - 1]

t = int(t[: len(t) - 4])

pred_y[t - 1] = pred

if i % 1000 == 0:

print(i)

# 写入文件

import csv

head = ["id", "label"]

data = []

for i in range(len(pred_y)):

data.append([(i + 1), pred_y[i]])

with open("answer.csv", "w", newline="") as f:

writer = csv.writer(f)

writer.writerow(head)

writer.writerows(data)

|

import numpy as np # linear algebra

import pandas as pd # data processing, CSV file I/O (e.g. pd.read_csv)

from sklearn.model_selection import train_test_split

from sklearn.svm import LinearSVC

from sklearn.feature_extraction import DictVectorizer

from sklearn.metrics import classification_report

# Input data files are available in the "../input/" directory.

# For example, running this (by clicking run or pressing Shift+Enter) will list all files under the input directory

import os

for dirname, _, filenames in os.walk("/kaggle/input"):

for filename in filenames:

print(os.path.join(dirname, filename))

df = pd.read_csv("/kaggle/input/titanic/train.csv")

# print(df.head())

useful_df = df[["Survived", "Pclass", "Sex", "Age", "SibSp", "Parch", "Embarked"]]

useful_df.dropna(inplace=True)

train_data, val_data = train_test_split(useful_df, test_size=0.3, random_state=1)

print(train_data.head())

def extract_train_features_and_labels(train_data):

train_labels = list(train_data["Survived"])

train_features = []

pclass = list(train_data["Pclass"])

sex = list(train_data["Sex"])

age = list(train_data["Age"])

sibsp = list(train_data["SibSp"])

parch = list(train_data["Parch"])

embarked = list(train_data["Embarked"])

for i in range(len(train_data)):

feature_dict = {

"pclass": pclass[i],

"sex": sex[i],

"age": age[i],

"sibsp": sibsp[i],

"parch": parch[i],

"embarked": embarked[i],

}

train_features.append(feature_dict)

return train_features, train_labels

# extract_train_features_and_labels(train_data)

def extract_val_features_and_labels(val_data):

val_labels = list(val_data["Survived"])

val_features = []

pclass = list(val_data["Pclass"])

sex = list(val_data["Sex"])

age = list(val_data["Age"])

sibsp = list(val_data["SibSp"])

parch = list(val_data["Parch"])

embarked = list(val_data["Embarked"])

for i in range(len(val_data)):

feature_dict = {

"pclass": pclass[i],

"sex": sex[i],

"age": age[i],

"sibsp": sibsp[i],

"parch": parch[i],

"embarked": embarked[i],

}

val_features.append(feature_dict)

return val_features, val_labels

# extract_val_features_and_labels(val_data)

def create_classifier(train_features, train_labels):

vec = DictVectorizer()

vec_train_features = vec.fit_transform(train_features)

model = LinearSVC()

fitted_model = model.fit(vec_train_features, train_labels)

return vec, fitted_model

def classify_data(vec, fitted_model, val_features):

vec_val_features = vec.transform(val_features)

prediction = fitted_model.predict(vec_val_features)

return prediction

def evaluation(val_labels, prediction):

report = classification_report(val_labels, prediction)

print(report)

return report

train_features, train_labels = extract_train_features_and_labels(train_data)

val_features, val_labels = extract_val_features_and_labels(val_data)

vec, fitted_model = create_classifier(train_features, train_labels)

prediction = classify_data(vec, fitted_model, val_features)

evaluation(val_labels, prediction)

|

import numpy as np # linear algebra

import pandas as pd # data processing, CSV file I/O (e.g. pd.read_csv)

# Input data files are available in the read-only "../input/" directory

# For example, running this (by clicking run or pressing Shift+Enter) will list all files under the input directory

import os

for dirname, _, filenames in os.walk("/kaggle/input"):

for filename in filenames:

print(os.path.join(dirname, filename))

# You can write up to 20GB to the current directory (/kaggle/working/) that gets preserved as output when you create a version using "Save & Run All"

# You can also write temporary files to /kaggle/temp/, but they won't be saved outside of the current session

# # Sentimental Analysis

# Will use 2 main approaches to sentimental analysis

# - VADER (Valence Aware Dictionary sEntiment Reasoner) - Bag of words approach

# - ROBERTA (Transformer) by 🤗

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

import seaborn as sns

plt.style.use("ggplot")

import nltk

import warnings

warnings.filterwarnings("ignore")

df = pd.read_csv("../input/amazon-fine-food-reviews/Reviews.csv", index_col="Id")

df

df.Text.iloc[0]

df.shape

df_subset = df.head(500)

# # EDA

df.Score.value_counts().sort_index().plot(kind="bar", title="Reviews Score Count")

plt.xlabel("Score")

plt.ylabel("Count")

plt.show()

# ProductId and Count of Score type

temp = df_subset.groupby(["ProductId", "Score"]).aggregate({"Score": "count"})

temp.columns = ["count"]

temp.reset_index(inplace=True)

# for sorting

tempmask = df_subset.groupby(["ProductId"])["ProductId"].count()

# Top 10 Products by count

top10_Products = tempmask.sort_values(ascending=False).head(10).index

# Retrieve Top 10 Products

temp["TotalCount"] = temp["ProductId"].map(tempmask)

temp.sort_values("TotalCount", ascending=False, inplace=True)

toptemp = temp[temp["ProductId"].isin(top10_Products)].drop("TotalCount", axis=1)

# Grouped Bar plot

plt.figure(figsize=(15, 5))

sns.barplot(

x="ProductId",

y="value",

hue="Score",

data=pd.melt(toptemp, id_vars=["ProductId", "Score"]),

palette="Paired",

)

plt.title("Top 10 Products Grouped by Score")

plt.show()

# **Top 10 Products**

# - Most of the products are dominated by higher score of 5

# - Product B001ELL608 has the highest frequency of 1-scored reviews

# # NLTK (Python Natural Language Toolkit)

example = df_subset.Text.iloc[50]

example

# ### Tokenize

ex_token = nltk.word_tokenize(example)

np.array(ex_token)

# **The tokenizer is not just split the words by spaces, instead it is extracts real words. Ex: "don't" >> "do", "n't"**

# **This is called Stemming**

# ## Stop-words

from nltk.corpus import stopwords

stop_words = set(nltk.corpus.stopwords.words("english"))

filtered_sentence = [word for word in ex_token if not word.lower() in stop_words]

filtered_sentence

# ### Part Of Speech Tagging

ex_tagged = nltk.pos_tag(ex_token)

ex_tagged

# ref: https://www.educba.com/nltk-pos-tag/

ex_chunked = nltk.chunk.ne_chunk(ex_tagged)

ex_chunked.pprint()

# # VADER Sentiment scoring

# We'll use NLTK's `SentimentIntensityAnalyzer` to get the positive/negative/neutral scores of a text

# - Uses Bag of words:

# - Stop words are removed

# - Each word is stored and combined for a final score

#

# Remember it will score each word individually and then combines the scoring to check wheather the statement is positive or otherwise. Becuase of independent scoring, it does not take into account the relationship between words (context)

from nltk.sentiment import SentimentIntensityAnalyzer

sia = SentimentIntensityAnalyzer()

# Get scores of sentences

print(sia.polarity_scores("I have a happy and hugging face!"))

print(sia.polarity_scores("This couldn't be more awful"))

print(sia.polarity_scores("The product is good but couldn't have been better"))

# **The above statements is more of a;**

# - positive

# - negative

# - neutral

# sentiment resp. (compound shows overall score in range -1 to +1)

print(example)

ex_score = sia.polarity_scores(example)

ex_score

# Get scores for entire dataset

from tqdm.notebook import tqdm # for a beautiful progress bar

result_score = {}

for i, row in tqdm(df_subset.iterrows(), total=len(df_subset)):

result_score[i] = sia.polarity_scores(row["Text"])

vaders = pd.DataFrame(result_score).T

vaders.head()

# Merge to original dataset

df_vader = df_subset.merge(vaders, how="left", left_index=True, right_index=True)

df_vader.head()

# # Plot Vader

# If we assume the Review Score of 5 is more like positive sentiment than negative, we want the Compound score also to be higher.

# **low Score has lower Compound and Higher Score has higher Compound**

sns.barplot(

data=df_vader, x="Score", y="compound", label="Compound score by Review Score"

)

plt.show()

# We can see it is inline with our assumption

fig, axs = plt.subplots(1, 3, figsize=(15, 5))

sns.barplot(data=df_vader, x="Score", y="neg", ax=axs[0])

sns.barplot(data=df_vader, x="Score", y="neu", ax=axs[1])

sns.barplot(data=df_vader, x="Score", y="pos", ax=axs[2])

axs[0].set_title("Score v Neg")

axs[1].set_title("Score v Neu")

axs[2].set_title("Score v Pos")

plt.show()

sia.polarity_scores("You should cut yourself some slack")

# # Roberta - Pretrained Model

# - Roberta is a Transformer

# - Trained on Large corpus of data

# - Transformer models not only account for words, but also for the context of a statement

from transformers import AutoTokenizer # for tokenizing

from transformers import AutoModelForSequenceClassification # for classification

from scipy.special import softmax # for normalizing the output

# Compile Pre-Trained Model

MODEL = "cardiffnlp/twitter-roberta-base-sentiment"

tokenizer = AutoTokenizer.from_pretrained(MODEL)

model = AutoModelForSequenceClassification.from_pretrained(MODEL)

# Encode text

encoded_text = tokenizer(example, return_tensors="pt")

encoded_text

# Run the model

ex_score_roberta = model(**encoded_text)

ex_score_roberta

# Apply softmax

ex_score_roberta = ex_score_roberta[0][0].detach().numpy()

ex_score_roberta = softmax(ex_score_roberta)

ex_score_roberta = {

"roberta_neg": ex_score_roberta[0],

"roberta_neu": ex_score_roberta[1],

"roberta_pos": ex_score_roberta[2],

}

ex_score_roberta

print(example)

print("Score by VADER:", ex_score)

print("Score by Roberta:", ex_score_roberta)

# ### ** We can see Roberta accounts for context and is much more powerful than simple models like VADER **

# # Final Model Combine

def polarity_scores_roberta(text):

encoded_text = tokenizer(text, return_tensors="pt")

score = model(**encoded_text)

score = score[0][0].detach().numpy()

score = softmax(score)

score = {

"roberta_neg": score[0],

"roberta_neu": score[1],

"roberta_pos": score[2],

}

return score

result_score = {}

for i, row in tqdm(df_subset.iterrows(), total=len(df_subset)):

try:

# VADER

vader_score = sia.polarity_scores(row["Text"])

new_vader_score = {}

for key, value in vader_score.items():

new_vader_score[f"vader_{key}"] = value

# Roberta

roberta_score = polarity_scores_roberta(row["Text"])

combine_score = {**new_vader_score, **roberta_score}

result_score[i] = combine_score

except:

print(f"Broke for Id {i} - can't be handled by Roberta")

# Join DataFrame with Scores

result_df = pd.DataFrame(result_score).T

final_df = df_subset.merge(result_df, how="left", left_index=True, right_index=True)

final_df.head()

# # Inferences

sns.pairplot(

data=final_df,

vars=[

"vader_neg",

"vader_neu",

"vader_pos",

"roberta_neg",

"roberta_neu",

"roberta_pos",

],

hue="Score",

)

plt.show()

# **Roberta model exhibited a high kurtosis when compared to the VADER model. This indicates that the Roberta model is more confident in classifying sentiments.**

false_positives = final_df[

(final_df.Score == 1) & (final_df.roberta_pos > 0.5)

].sort_values("roberta_pos", ascending=False)[

["Score", "Text", "vader_pos", "roberta_pos"]

]

false_positives

for text in false_positives.Text:

print(text, "\n")

# **Findings:**

# - All comments even though has 5 stars, is actually saracsting and negative than positive, so Roberta has managed to classified all of them correctly.

false_negatives = final_df[

(final_df.Score == 5) & (final_df.roberta_neg > 0.5)

].sort_values("roberta_neg", ascending=False)[

["Score", "Text", "vader_neg", "roberta_neg"]

]

false_negatives

for text in false_negatives.Text:

print(text, "\n")

# **Findings:**

# - Both comments even though have 1 star, is more on the positive side, but Roberta failed to classify it correctly, and that too with a huge confident error of 96%. Here VADER has performed better in both situation.

True_positives = (

final_df[(final_df.Score == 5) & (final_df.roberta_pos > 0.5)]

.sort_values("roberta_pos", ascending=False)[

[

"Score",

"Text",

"vader_neg",

"vader_neu",

"vader_pos",

"roberta_neg",

"roberta_neu",

"roberta_pos",

]

]

.head(5)

)

True_positives

for text in True_positives.Text:

print(text, "\n")

# **Findings:**

# - Even though all comments are full of positivity, the VADER's bag of words has failed to capure it.

True_negative = (

final_df[(final_df.Score == 1) & (final_df.roberta_neg < 0.5)]

.sort_values("roberta_neg", ascending=False)[

[

"Score",

"Text",

"vader_neg",

"vader_neu",

"vader_pos",

"roberta_neg",

"roberta_neu",

"roberta_pos",

]

]

.head(5)

)

True_negative

for text in True_negative.Text:

print(text, "\n")

# **Findings:**

# - All comments are Negative, Both Roberta and VADER were inclined to classify it as neutral sentiments instead, while VADER being highly confident.

# # Evaluation of Models

temp = final_df[["Score", "vader_neg", "vader_pos", "roberta_neg", "roberta_pos"]]

temp

# Assuming lower scoring as a negative sentiment.

temp["isPosScore"] = temp["Score"].apply(lambda x: 1 if x > 3 else 0)

temp["isPosVader"] = temp["vader_pos"].apply(lambda x: 1 if x > 0.5 else 0)

temp["isPosRoberta"] = temp["roberta_pos"].apply(lambda x: 1 if x > 0.5 else 0)

temp

from sklearn.metrics import confusion_matrix, classification_report

print("VADER\n")

print(confusion_matrix(temp["isPosScore"], temp["isPosVader"]))

print(classification_report(temp["isPosScore"], temp["isPosVader"]))

print("ROBERTA\n")

print(confusion_matrix(temp["isPosScore"], temp["isPosRoberta"]))

print(classification_report(temp["isPosScore"], temp["isPosRoberta"]))

# #### The Accuracy of Roberta model is 87%, which is significantly higher compared to Vader model with merely 19% of accuracy

# # Using Pipelines

from transformers import pipeline

sentiment_pipeline = pipeline("sentiment-analysis")

sentiment_pipeline("How can I be good, if you are great?")

|

# # Big dataset in .csv or .tsv processing with [Dask](http://https://docs.dask.org/en/latest/) library

# ### Motivation

# Data in the form of tables has become a standard in Data Science. It is easy to process and analyse it.

# **But what if you have really a huge data set for every day work, and you need to process tons of files?

# **

# Imagine, you have 5 million records generated every day and you need to process them for production. You may also need to work with them throughout the day - to formulate new hypotheses or analysis.

# This task was set for me to pass one of the stages of the interview - a test task.

# I need to open file in the .tsv format (analogous to .csv) and carry out the following operations:

# 1. Scale test data using train data. Process some columns.

# 2. Find the maximum index value and write the index in a new column.

# To simplify I will show results of my experiments immediately

# # 1. Huge csv file generation.

# ## Skip this stage and use files in the Kaggle input folder unless you want to repeate my experiment

# Here we are preparing a test file (random.csv) of size 9G. This is 5 million records of random numbers (from 100 to 1 million) with 257 columns. Finally we get a table of 5 million for 257 random integers = 1 billion 285 million values.

# **Creating a DataFrame took 5 seconds **, uploading it to .csv format using Pandas (to_csv) - 14 minutes!

# **

# An attempt to open this file using the system or Pandas failed. ( So use files in Kaggle input directory to run the code.

import numpy as np

import pandas as pd

from dask_ml.preprocessing import StandardScaler

import gc

import time

import dask.dataframe as dask

from dask.distributed import Client, progress

# ## You can run LOCALY Dask Dashboard to track the perfomance

# set workers

client = Client(n_workers=2, threads_per_worker=2, memory_limit="2GB")

client # work locally only

# setting the number of rows for the CSV file

start_time = time.time()

N = 5_000_000

columns = 257

# create DF

df = pd.DataFrame(

np.random.randint(999, 999999, size=(N, columns)),

columns=["level_%s" % i for i in range(0, columns)],

)

print("%s seconds" % (time.time() - start_time))

display(df.head(2))

print(f"shape of generated data is {df.shape}")

# # 2. Uncomment and run you want to wait more that 15 minutes.

# # Strongly recommend to Use files in Kaggle input directory!

# # save df to csv

# start_time = time.time()

# df.to_csv('random.csv', sep=',')

# print('%s seconds' % (time.time() - start_time)) # 877.5422155857086 seconds, 8.9 G

# ## Set our small size files path

test = "../input/test.tsv"

train = "../input/train.tsv"

# # 3. Class to load, transform data with Dask

class LoadBigCsvFile:

"""load data from tsv, transform, scale, add two columns

Input .csv, .tsv files

Output transformed file ready to save in .csv, .tsv format

"""

def __init__(self, train, test, scaler=StandardScaler(copy=False)):

self.train = train

self.test = test

self.scaler = (

scaler # here we use StandartScaler of Dask. We can use sklearn one

)

def read_data(self):

# use dask and load with smallest possible format - int16 using 'C'

try:

data_train = dask.read_csv(

self.train, dtype={n: "int16" for n in range(1, 300)}, engine="c"

).reset_index()

data_test = dask.read_csv(

self.test, dtype={n: "int16" for n in range(1, 300)}, engine="c"

).reset_index()

except:

(IOError, OSError), "can not open file"

# if any data?

assert len(data_test) != 0 and len(data_train) != 0, "No data in files"

# fit train and transform test

self.scaler.fit(data_train.iloc[:, 1:])

del data_train # del file that we do not need

test_transformed = self.scaler.transform(data_test.iloc[:, 1:])

# compute values and add columns

test_transformed["max_feature_2_abs_mean_diff"] = abs(

test_transformed.mean(axis=1) - test_transformed.max(axis=1)

)

test_transformed["max_feature_2_index"] = test_transformed.idxmin(axis=1)

test_transformed["job_id"] = data_test.iloc[

:, 0

] # add first column (it is not numerical)

del data_test # del file that we do not need

return test_transformed

# # 4. Let run class instance and track the time

start_time = time.time()

data = LoadBigCsvFile(train, test).read_data()

gc.collect()

print("class loaded in %s seconds" % (time.time() - start_time))

# # 5. Data is ready to save. But what format to choose?

# ## Here we use hdf format - why? please the final test results

# save to hdf for later use or modification

start_time = time.time()

data.to_hdf("test_proc.hdf", key="df1")

print("file saved in hdf in %s seconds" % (time.time() - start_time))

# # 6. Load created file again into the system.

start_time = time.time()

hdf_read = dask.read_hdf("test_proc.hdf", key="df1", mode="r", chunksize=10000)

print("file load into system in %s seconds" % (time.time() - start_time))

display(hdf_read.head(3))

|

# This Python 3 Analysis of NUFORC's database contains 80,0000 rows of reported sightings.

# I'm so glad this one came with Lat/Long,s for me to play with.

import numpy as np # linear algebra

import pandas as pd # data processing, CSV file I/O (e.g. pd.read_csv)

# Input data files are available in the read-only "../input/" directory

# For example, running this (by clicking run or pressing Shift+Enter) will list all files under the input directory

import os

for dirname, _, filenames in os.walk("/kaggle/input"):

for filename in filenames:

print(os.path.join(dirname, filename))

# You can write up to 20GB to the current directory (/kaggle/working/) that gets preserved as output when you create a version using "Save & Run All"

# You can also write temporary files to /kaggle/temp/, but they won't be saved outside of the current session

# Me: [LinkedIn](https://www.linkedin.com/in/dontadaya/)

# Product for [Mr. Tim Ventura](https://www.youtube.com/channel/UCl1ewEFZwBOTU2I_f0XiSUA) & UAP enthusiasts.

# Feel free to fork, cite, manipulate, scrutinize, comment, and provide insights you deem worthy.

# # Let's load NUFORC's 80K dataset as a csv.

# ## Let's all say thanks to [NUFORC](https://nuforc.org/databank/) for sharing the data!

# ### And most especially a tremendous applause to [Mr. Omariba Collins](https://www.linkedin.com/in/omariba-collins-b28b841b9/) for bringing this phat csv into Kags.

# I hope this serves you something delightful.

# ### What does the data look like?

df = pd.read_csv("/kaggle/input/ufo-sightings/ufos.csv", header=0)

df.datetime

df.head(25)

# Fix datetime column.

# df[''] = pd.to_datetime(df['datetime'], format='%m%b%Y:%H:%M:%S.%f', errors='coerce')

# df['datetime'] = pd.to_datetime(df['datetime'], format='%m/%d/%y %H:%M')

# import datetime as dt

# df['datetime'] = df['datetime'].apply(lambda x: dt.datetime.strptime(x,'%m/%d/%Y %H:%M'))

# ### Duration, Lat, Lon - numerical insight.

#

df.describe()

# #### Average duration of sighting: 9.016889e+03 seconds, or 150.28148333 mins.

# #### The Lat/Lon: 72.700000, 178.441900 - may or may not be significant. We'll see later.

# ## Map it oat.

import pandas as pd

from shapely.geometry import Point

import geopandas as gpd

from geopandas import GeoDataFrame

geometry = [Point(xy) for xy in zip(df["longitude"], df["latitude"])]

gdf = GeoDataFrame(df, geometry=geometry)

# This is a simple map that goes with geopandas

world = gpd.read_file(gpd.datasets.get_path("naturalearth_lowres"))

gdf.plot(ax=world.plot(figsize=(50, 25)), marker="o", color="darkred", markersize=15)

|

# # **Online Shop Customer Sales Data**

#

# The dataset we have is a collection of online shopping transaction records, containing information about the age, gender, time spent on the website, payment method used, browser, newsletter subscription, and the total purchase value.

# **The aim of this dataset is to identify any patterns and insights that can be gleaned from this data to better understand consumer behavior and to help companies tailor their marketing and sales strategies accordingly.**

# In this analysis, we will be performing exploratory data analysis to understand the distribution of the data, identifying any outliers and missing values, and visualizing the relationships between different variables in the dataset.

# We will also be performing statistical analysis to understand the impact of different variables on the total purchase value, which will help us identify key factors that can be optimized to improve revenue.

# Importing required librries

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

import seaborn as sns

data = pd.read_csv(

"/kaggle/input/online-shop-customer-sales-data/Online Shop Customer Sales Data.csv"

)

# # **Understanding Dataset**

data.columns

# Here is a brief description of each variable:

# - **Customer_id:** A unique identifier for each customer.

# - **Age:** The age of the customer.

# - **Gender:** A binary variable where 0 represents male and 1 represents female.

# - **Revenue_Total:** Total sales revenue by the customer.

# - **N_Purchases:** The number of purchases made by the customer to date.

# - **Purchase_DATE:** The date of the latest purchase made by the customer.

# - **Purchase_VALUE:** The value of the latest purchase made by the customer in euros.

# - **Pay_Method:** A categorical variable indicating the payment method used by the customer. The - - categories are digital wallets, card, PayPal, and other.

# - **Time_Spent:** The time spent by the customer on the website in seconds.

# - **Browser:** A categorical variable indicating the browser used by the customer. The categories are Chrome, Safari, Edge, and other.

# - **Newsletter:** A binary variable indicating whether the customer is subscribed to the newsletter or not.

# - **Voucher:** A binary variable indicating whether the customer has used a voucher or not.

data.head()

data.tail()

# # **Revenue Percentage by Age Group**

print(data["Age"].dtype)

print(data["Age"].describe())

# * The dataset has a total of **65,796** customers with ages ranging from **16 to 63**.

# * The mean age of the customers is **39.59**, and the median age (50th percentile) is **40**.

# * The minimum age of customers in the dataset is **16**, while the maximum age is **63**.

sns.histplot(data=data, x="Age", bins=20)

plt.title("Age Distribution")

plt.xlabel("Age")

plt.ylabel("Frequency")

plt.show()

# Create age bins

age_bins = [15, 25, 35, 45, 65]

age_labels = ["16-25", "26-35", "36-45", "46-63"]

data["Age_Group"] = pd.cut(data["Age"], bins=age_bins, labels=age_labels)

# Group the data by age group and calculate total revenue

age_revenue = data.groupby("Age_Group")["Revenue_Total"].sum().reset_index()

# Calculate the percentage of total revenue generated by each age group

age_revenue["Revenue_Percentage"] = (

age_revenue["Revenue_Total"] / age_revenue["Revenue_Total"].sum() * 100

)

# Print the results

print(age_revenue)

import matplotlib.pyplot as plt

# Set up the figure and axis

fig, ax = plt.subplots()

# Create the pie chart

ax.pie(

age_revenue["Revenue_Percentage"],

labels=age_revenue["Age_Group"],

autopct="%1.1f%%",

startangle=90,

)

# Add a title

ax.set_title("Revenue Percentage by Age Group")

# Show the plot

plt.show()

# * Based on the analysis of revenue by age group, we can see that the highest revenue generation is from customers between **the age group of 46-63, with a revenue percentage of 37.77%.**

# * The revenue percentage for the other age groups is relatively similar, with 20.58% for 16-25, 20.63% for 26-35, and 21.01% for 36-45.

#

# * This indicates that the online shop is catering to a wide range of age groups and has a relatively even distribution of revenue among them, with the exception of the age group 46-63, which generates the highest revenue. This information can be useful for targeted marketing and advertising efforts towards the age group that generates the highest revenue.

# # **Distribution of Customers by Gender**

gender_counts = data["Gender"].value_counts()

total_customers = gender_counts.sum()

male_percent = (gender_counts[0] / total_customers) * 100

female_percent = (gender_counts[1] / total_customers) * 100

print(f"Male percentage: {male_percent:.2f}%")

print(f"Female percentage: {female_percent:.2f}%")

import matplotlib.pyplot as plt

gender_counts = data["Gender"].value_counts()

labels = ["Male", "Female"]

plt.pie(gender_counts, labels=labels, autopct="%1.1f%%")

plt.title("Distribution of Customers by Gender")

plt.show()

# Based on the pie chart of the gender distribution, we can see that around **67.1% of the customers in this dataset are female**, while 32.9% are male.

# **This indicates that the online shop is more popular among female customers.**

# # **Total Revenue by Gender**

gender_revenue = data.groupby("Gender")["Revenue_Total"].sum().reset_index()

gender_revenue["Revenue_Percentage"] = (

gender_revenue["Revenue_Total"] / gender_revenue["Revenue_Total"].sum() * 100

)

print(gender_revenue)

plt.pie(gender_revenue["Revenue_Total"], labels=["Male", "Female"], autopct="%1.1f%%")

plt.title("Total Revenue by Gender")

plt.show()

# The insights we can gather from the gender and total revenue percentage data are:

# * The total revenue generated from female customers is higher than that generated from male customers, with a revenue percentage of 67.11% for females and 32.89% for males.

# * This could be an indication that the online shop has a larger female customer base than male.

# # **No of purchases**

print(data["N_Purchases"].describe())

# * The mean number of purchases made by customers is around 4.

# * The standard deviation is relatively low, suggesting that most customers make a similar number of purchases.

# * The minimum number of purchases is 1, which means there are customers who have made only one purchase.

# * The maximum number of purchases is 7, suggesting that there are no customers who have made more than 7 purchases.

# * The median number of purchases is 4, indicating that half of the customers have made 4 or fewer purchases, and the other half have made 4 or more purchases.

# group the data by age group and aggregate the max and min values of N_Purchases

age_purchase = data.groupby("Age_Group")["N_Purchases"].agg(["min", "max"])

# print the result

print(age_purchase)

# # **Total Revenue by Month**

print(data["Purchase_DATE"].dtype)

print(data["Purchase_DATE"].describe())

data["Purchase_DATE"] = pd.to_datetime(data["Purchase_DATE"], format="%d.%m.%y")

print(data["Purchase_DATE"].dtype)

print(data["Purchase_DATE"].describe())

import pandas as pd

import matplotlib.pyplot as plt

# Load data

data = pd.read_csv(

"/kaggle/input/online-shop-customer-sales-data/Online Shop Customer Sales Data.csv"

)

# Convert purchase date to datetime format

data["Purchase_DATE"] = pd.to_datetime(data["Purchase_DATE"], format="%d.%m.%y")

# Aggregate data by month and calculate total revenue

monthly_revenue = data.groupby(pd.Grouper(key="Purchase_DATE", freq="M"))[

"Revenue_Total"

].sum()

# Plot monthly revenue

fig, ax = plt.subplots(figsize=(10, 6))

monthly_revenue.plot(kind="line", ax=ax)

ax.set_xlabel("Month")

ax.set_ylabel("Total Revenue (€)")

ax.set_title("Total Revenue by Month")

plt.show()

monthly_revenue

# Insights from the monthly revenue:

# * The highest monthly revenue was achieved in December 2021 with a total of 157438.0 units of revenue.

# * The lowest monthly revenue was achieved in January 2022 with a total of 4890.5 units of revenue.

# * The revenue seems to fluctuate over the year but with some general stability.

monthly_revenue.median()

# **The median monthly revenue for the given period is 151661.3.**

# This means that 50% of the monthly revenues are below this value and 50% of the monthly revenues are above this value. It is a useful metric to understand the central tendency of the data, as it is less affected by extreme values than the mean.

# checking starting and end date

print(data["Purchase_DATE"].min())

print(data["Purchase_DATE"].max())

# # **Purchase_VALUE**

print(data["Purchase_VALUE"].dtype)

print(data["Purchase_VALUE"].describe())

# The minimum purchase value is 0.005 and the maximum purchase value is 59.90.

# # **Payment Method Distribution**

print(data["Pay_Method"].dtype)

data["Pay_Method"].unique()

# * 0: Digital Wallets

# * 1: Card

# * 2: PayPal

# * 3: Other

# Count the number of each payment method

payment_counts = data["Pay_Method"].value_counts()

# Convert the counts to percentages

payment_percents = payment_counts / len(data) * 100

payment_percents

import matplotlib.pyplot as plt

# count the number of occurrences of each payment method

counts = data["Pay_Method"].value_counts()

# create a pie chart with labels for each payment method

labels = ["Digital Wallets", "Card", "PayPal", "Other"]

plt.pie(counts, labels=labels, autopct="%1.1f%%")

# add a title

plt.title("Payment Method Distribution")

# display the chart

plt.show()

# * Based on the data, the most commonly used payment method is **card**, accounting for **30.02%** of all purchases.

# * The next most popular method is digital wallets, accounting for 28.99% of purchases.

# * PayPal is the third most common method, accounting for 22.12% of purchases.

# * Other payment methods, which may include cash, bank transfers, or other types of payment, account for 18.87% of purchases.

# **It's important for businesses to understand the preferred payment methods of their customers in order to provide the best possible customer experience and increase sales.**

print(data["Time_Spent"].describe())

# * Time_Spent = time spent (in sec) on website

# * The average time spent is approximately **599 seconds (or around 10 minutes)** per purchase.

# * The standard deviation of the time spent is 278 seconds (or around 4.6 minutes) per purchase.

# * The minimum time spent is **120 seconds (or 2 minutes)** per purchase.

# * The 25th percentile of time spent is 358 seconds (or around 6 minutes) per purchase.

# * The median (50th percentile) of time spent is 598 seconds (or around 10 minutes) per purchase.

# * The 75th percentile of time spent is 840 seconds (or around 14 minutes) per purchase.

# * The maximum time spent is **1080 seconds (or 18 minutes)** per purchase.

# Create age bins

age_bins = [15, 25, 35, 45, 65]

age_labels = ["16-25", "26-35", "36-45", "46-63"]

data["Age_Group"] = pd.cut(data["Age"], bins=age_bins, labels=age_labels)

sns.boxplot(x="Age_Group", y="Time_Spent", data=data)

# **Browser**

# * 0: Chrome

# * 1: Safari

# * 2: Edge

# * 3: Other

# # **most commonly used browser**

browser_count = data["Browser"].value_counts()

print(browser_count)

import matplotlib.pyplot as plt

# Count the number of occurrences of each browser

browser_counts = data["Browser"].value_counts()

# Create a pie chart

labels = ["Chrome", "Safari", "Edge", "Other"]

sizes = [

browser_counts.get(0, 0),

browser_counts.get(1, 0),

browser_counts.get(2, 0),

browser_counts.get(3, 0),

]

colors = ["orange", "lightblue", "green", "pink"]

explode = (0.05, 0.05, 0.05, 0.05)

plt.pie(

sizes,

labels=labels,

colors=colors,

explode=explode,

autopct="%1.1f%%",

startangle=90,

)

plt.axis("equal")

# Add a title

plt.title("Percentage of Browsers Used")

# Show the chart

plt.show()

browser_counts

# * **Chrome is the most commonly used browser, followed by Safari, Other and Edge.**

# * It appears that Chrome is significantly more popular than the other browsers, as it has over three times as many users as Safari, and over twelve times as many users as Edge.

# **Newsletter**

# * 0: not subscribed

# * 1: subscribed

# # **Percentage of Newsletter Subscribers**

newsletter_counts = data["Newsletter"].value_counts()

newsletter_percents = newsletter_counts / newsletter_counts.sum() * 100

labels = ["Not Subscribed", "Subscribed"]

plt.pie(newsletter_percents, labels=labels, autopct="%1.1f%%")

plt.title("Percentage of Newsletter Subscribers")

plt.show()

newsletter_percents

# **84.9% of the customers have not subscribed** to the newsletter, while only 15.1% have subscribed.

# # **Percentage of Customers who have Used Vouchers**#

# * 0: not used

# * 1: used

# Get the voucher counts

voucher_counts = data["Voucher"].value_counts()

voucher_counts

voucher_percents = voucher_counts / voucher_counts.sum() * 100

labels = ["No Voucher", "Used Voucher"]

colors = ["#ff9999", "#66b3ff"]

explode = (0.05, 0)

plt.pie(

voucher_percents,

explode=explode,

labels=labels,

colors=colors,

autopct="%1.1f%%",

startangle=90,

)

plt.axis("equal")

plt.title("Percentage of Customers who have Used Vouchers")

plt.show()

# we can see that there are 75% customers who have not used any voucher, and 25% customers who have used at least one voucher.

# **Newsletter subscription does have any significant impact on voucher usage?**

voucher_pivot = pd.pivot_table(

data, values="Voucher", index="Newsletter", aggfunc=np.mean

)

voucher_pivot

plt.bar(voucher_pivot.index, voucher_pivot["Voucher"] * 100)

plt.xticks([0, 1], ["Not Subscribed", "Subscribed"])

plt.ylabel("Percentage of Voucher Users")

plt.show()

# the percentage of customers who used vouchers is almost the same for both groups, with 0.249485 (24.94%) of customers who didn't subscribe to the newsletter using vouchers and 0.254604 (25.46%) of customers who subscribed to the newsletter using vouchers.

# **Therefore, we can conclude that newsletter subscription does not have a significant impact on voucher usage.**

# # **Analyzing the impact of the browser on newsletter subscription**

import seaborn as sns

browser_newsletter_pivot = pd.pivot_table(

data,

values="Customer_id",

index="Browser",

columns="Newsletter",

aggfunc=lambda x: len(x.unique()),

)

sns.heatmap(browser_newsletter_pivot, annot=True, fmt="g", cmap="Blues")

browser_newsletter_pivot

# The table represents the number of newsletter subscribers and non-subscribers segmented by browser type. It shows that the majority of subscribers and non-subscribers used **Chrome browser.** The second most popular browser for both groups is Safari.

# However, there are differences in the proportion of newsletter subscribers to non-subscribers across browsers.

# For example, the proportion of newsletter subscribers is relatively higher among Safari users compared to non-subscribers. On the other hand, the proportion of non-subscribers is higher among Edge and Other browser users compared to newsletter subscribers.

# This suggests that the impact of newsletters on voucher usage may vary depending on the browser type.

# # **Newsletter subscription impact on Revenue**

import seaborn as sns

import matplotlib.pyplot as plt

sns.boxplot(x="Newsletter", y="Revenue_Total", data=data)

plt.show()

newsletter_revenue = data.groupby("Newsletter")["Revenue_Total"].agg(["mean", "std"])

print(newsletter_revenue)

# The insights from this output are:

# * On average, customers who subscribed to the newsletter **(Newsletter = 1)** have a slightly higher mean Revenue_Total than those who did not **(Newsletter = 0)**, **but the difference is not significant.**

# * The standard deviation of Revenue_Total is almost the same for both groups, indicating that there is not much variation in the Revenue_Total values within each group.

# * **Overall, the Newsletter subscription status does not seem to have a strong impact on Revenue_Total.**

# # **Payment Method by Age Group**

import matplotlib.pyplot as plt

# group the data by age group and payment method

age_pay = data.groupby(["Age_Group", "Pay_Method"])["Customer_id"].count().unstack()

# plot the stacked bar chart

age_pay.plot(kind="bar", stacked=True)

# add labels and title

plt.title("Payment Method by Age Group")

plt.xlabel("Age Group")

plt.ylabel("Count")

plt.xticks(rotation=0)

# show the plot

plt.show()

age_pay

# For all age groups, the most common payment method is **method 1 (card)**, followed by method 2 (PayPal), method 0 (digital wallets), and method 3 (other). We can also see that the number of payments made using method 0 is fairly consistent across all age groups.

# However, the number of payments made using method 1 (card) and method 2 (PayPal) is **highest** in the age group **46-63**.

import matplotlib.pyplot as plt

# Convert Date column to datetime format

data["Purchase_DATE"] = pd.to_datetime(data["Purchase_DATE"])

# Group data by Date and calculate the mean of Time_Spent

time_spent = data.groupby("Purchase_DATE")["Time_Spent"].mean()

# Create lineplot

plt.figure(figsize=(10, 6))

plt.plot(time_spent.index, time_spent.values)

plt.title("Time Spent over Time")

plt.xlabel("Date")

plt.ylabel("Time Spent (seconds)")

plt.show()

|

import numpy as np # linear algebra

import pandas as pd # data processing, CSV file I/O (e.g. pd.read_csv)

ratings_df = pd.read_csv("/kaggle/input/movie-lens-small-latest-dataset/ratings.csv")[

["movieId", "userId", "rating"]

]

movies_df = pd.read_csv("/kaggle/input/movie-lens-small-latest-dataset/movies.csv")

movieids = list(ratings_df["movieId"].value_counts()[:100].keys())

newdf = ratings_df[ratings_df.movieId.isin(movieids) == True]

d_movie_id = {}

for i in range(100):

d_movie_id[movieids[i]] = i

ids = newdf["movieId"].values

newids = [d_movie_id[i] for i in ids]

newdf["Index"] = newids

newdf

oldid_newid = [

[list(d_movie_id.keys())[i], list(d_movie_id.values())[i]] for i in range(100)

]

rows = [0] * 100

Matrix = [rows.copy() for i in range(592)]

Matrix = np.array(Matrix)

Matrix.shape

# 592 : Number of users

# 100 : Number of movies

# 592 vectors, each vector of length 100, denoting 100 movies rating given by them

# if say row 0 , column 7 has value 6, then user denoted by index 0(row) has given movie denoted by index 7 ( column ) a rating of 6.

# This will form a list of vectors

# * : user 1

# * : user 2

# * ..

# * ..

# * : user 592

# Now if we train autoencoders on these 592 vectors , where it reconstructs the input , it will learn similarities in rating patterns.

# Now if a user inputs a new data then the network tries to predict what will his rating record in future be , as learnt from the 592 users. ie a a single vector with length 100.

# ....

#

userids = list(newdf["userId"].value_counts().keys())

d_user_id = {}

for i in range(592):

d_user_id[userids[i]] = i

userids = newdf["userId"].values

newids = [d_user_id[i] for i in userids]

newdf["userIndex"] = newids

newdf

x = newdf.iloc[:, 2:].values

for i in x:

rating = i[0]

movie = i[1]

user = i[2]

Matrix[int(user)][int(movie)] = rating

colsum = [0] * 100

colcount = [0] * 100

for i in range(592):

for j in range(100):

colsum[j] += Matrix[i][j]

if Matrix[i][j] != 0:

colcount[j] += 1

colavg = np.array([colsum[i] / colcount[i] for i in range(100)])

rowsum = [0] * 592

rowcount = [1] * 592

for i in range(592):

for j in range(100):

rowsum[i] += Matrix[i][j]

if Matrix[i][j] != 0:

rowcount[i] += 1

rowavg = np.array([rowsum[i] / rowcount[i] for i in range(592)])

for i in range(592):

for j in range(100):

if Matrix[i][j] == 0:

Matrix[i][j] = round((rowavg[i] + colavg[j]) / 2, 2)

np.save("MovieLens.npy", Matrix)

nameids = []

moviename = movies_df.iloc[:, :-1].values

for i in oldid_newid:

movid = i[0]

for j in moviename:

if j[0] == movid:

i.append(j[1])

nameids.append(i)

indexes = [x[1] for x in nameids]

names = [x[2] for x in nameids]

df = pd.DataFrame()

df["Index"] = indexes