script

stringlengths 113

767k

|

|---|

# # Keşifsel Veri Analizi (EDA)

# Keşifsel Veri Analizi veriyle ilgili yapılan tüm çalışmalarda her zaman olması gereken bir ön aşamadır. Doğru business kararlarını alabilmek, iyi modeller üretebilmek öncesinde yapılan detaylı bir EDA çalışmasına bağlıdır.

# Peki nedir bunun aşamaları?

# 1) **Hangi verilere ihtiyacınız olduğunu belirleyin**

# 2) **Verilerinizi toplayın ve yükleyin**

# 3) **Veri tiplerinizi kontrol edin**

# 4) **Veri setiniz hakkında genel bilgiler toplayın**

# 5) **Tekrar eden verileri kaldırın**

# 6) **Sütunlardaki değerleri gözden geçirin**

# 7) **Verilerinizin dağılımını inceleyin**

# 8) **Boş değerleri tespit edin ve gerekli düzenlemeleri yapın**

# 9) **Daha detaylı analizler için verilerinizi filtreleyin**

# 10) **Görsellerden yararlanarak analizinizi daha da derinleştirin**

# 11) **Sonuçlarınızı sunmak için kullanacağınız görselleri hazırlayın**

# ## Veri Setini Tanıyalım

# 1896 Atina Olimpiyatları'ndan 2016 Rio Olimpiyatları'na kadar uzanan ve oyunların kayıtlarına sahip olan Olimpiyat Oyunlarının kapsamlı bir veri kümesidir.

# Her örnek, bireysel bir Olimpik etkinlikte (sporcu etkinlikleri) yarışan bireysel bir sporcuya karşılık gelir. Kullanılan veri setinin metadatası için: [Metadata](https://www.kaggle.com/datasets/heesoo37/120-years-of-olympic-history-athletes-and-results)

# İlk işimiz kütüphanelerimizi çağırmak :)

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

import seaborn as sns

df1 = pd.read_csv(

"/kaggle/input/120-years-of-olympic-history-athletes-and-results/athlete_events.csv",

index_col=1,

)

df1

df1.info()

# >**SORU:** Weight sütununun float dönmesini beklemiştik ama neden object olarak gelmiş?

# İlerleyen satırlarda bu durumu inceleyelim...

df2 = pd.read_csv(

"/kaggle/input/120-years-of-olympic-history-athletes-and-results/noc_regions.csv"

)

df2

df2.info()

# Veri setlerini birleştirmek için `pandas.merge()` fonksiyonunu kullanabiliriz.

df = pd.merge(df1, df2, on="NOC")

df

# Bu projemizin odak noktası **yalnızca yaz olimpiyatları** olacağı için, veri kümemizden tüm kış olimpiyatlarını filtreleyelim ve veri kümemiz üzerinde bazı temel analizler yapalım.

df = df[df["Season"] == "Summer"]

df

# Öncelikle boş değerlerimize bakalım.

df.isnull().sum()

# Her spor dalı için yalnızca üç kazanan olabileceği gerçeğinden dolayı madalya sütunun birçok NAN değerine sahip olduğuna inanıyorum. Ancak, yüzlerce katılımcı bu üç yer için yer almakta ve yarışmaktadır.

df.Medal.value_counts(normalize=True) # Değerlerin yüzdesine bakalım

missing_percentage = 100 * (df.isna().sum().sort_values(ascending=False) / len(df))

missing_percentage

plt.figure(figsize=(15, 6))

plt.title("Percentage Of Missing Values Per Column")

sns.barplot(x=missing_percentage.index, y=missing_percentage.values, color="Red")

# Görseller oluştururken ihtiyacımız olmayan bilgileri görselimizde bulundurmanın bizim için hiçbir yararı yok. Sebep olacağı tek şey karmaşıklık!

plt.figure(figsize=(8, 5))

plt.title("Percentage Of Missing Values Per Column")

sns.barplot(

x=missing_percentage[:5].index, y=missing_percentage[:5].values, color="Red"

)

# **Notes** sütunun bu EDA projemiz için bir faydası olmayacağını düşündüğümüz için kaldıralım.

df.drop(["notes"], axis=1, inplace=True)

# **Weight** sütunumuzun veri tipinde bir sorun vardı, şimdi bu durumu daha detaylı inceleyelim...

df["Weight"].value_counts()

# Aa ne bulduk... Peki ya bu durumu göremeseydik?

df["Weight"] = df["Weight"].astype(float)

# İlk aşamada boş değer yok gibi görünmesine rağmen "?" olarak girilen değerler var, o zaman bunları da boş değer olarak değiştirmemiz lazım.

df["Weight"] = df["Weight"].replace(

"?", np.nan

) # "?" olarak girilmiş ifadeleri NaN olarak değiştirelim

df.isnull().sum() # Tekrar kontrol edelim boş değer varlığını

# **Age**, **Height** ve **Weight** sütunlarındaki tüm boş değerleri aritmetik ortalamayla dolduralım:

df["Age"].fillna(value=df["Age"].mean(), inplace=True)

df["Height"].fillna(value=df["Height"].mean(), inplace=True)

df["Weight"].fillna(value=df["Weight"].mean(), inplace=True)

# **Weight** sütunu için neden hata aldık beraber inceleyelim...

df["Weight"] = df["Weight"].astype(

float

) # İlk halinde "?" string olduğu için tüm sütunu string algılıyordu, şimdi float tipine çevirelim

df["Weight"].fillna(value=df["Weight"].mean(), inplace=True)

df.info()

# Peki **region** sütunu içindeki boş değerleri ne yapabiliriz, birlikte düşünelim...

df["region"].unique()

df.region.dropna(

inplace=True

) # Sadece 21 tane olduğu için kaldırmamız çok bir sorun yaratmaz

# Yalnızca kendi etkinliklerinin galipleri bir Altın, Gümüş veya Bronz madalyaya sahip olacağından, veri setinde **Medal** sütunundaki tüm boş değerleri **Medal Not Won** olarak doldurabiliriz.

df["Medal"].fillna(value="Medal Not Won", inplace=True)

df.isnull().sum()

# Peki tekrar eden değerler?

df[df.duplicated()]

df.duplicated().sum()

df.drop_duplicates(inplace=True) # tekrar eden değerleri kaldıralım

df.duplicated().sum()

df

# Şimdi ise elimizde veriye bazı sorular soralım ve sorulardan elde ettiğimiz cevapları görseller aracılığıyla karşı tarafla paylaşalım.

# * Yaz Olimpiyatlarına en çok sporcu gönderen ülke hangisidir?

# * Sporcu, ülke ve etkinlik sayısı zaman içinde arttı mı yoksa azaldı mı?

# * Hangi ülkeler en çok madalyayı eve götürdü?

# * Erkek ve kadın sporcuların zaman korelasyonuna katılımı

# * United States'ın Madalya Kazandığı Tüm Sporlar?

# * ......

# ## Yaz Olimpiyatlarına en çok sporcu gönderen ülke hangisi?

athlete_count = df.Team.value_counts()

athlete_count

plt.figure(figsize=[18, 8])

sns.barplot(x=athlete_count.index, y=athlete_count.values)

# Maalesef bu görseli kullanamayız!

plt.figure(figsize=[18, 8])

sns.barplot(x=athlete_count[:10].index, y=athlete_count[:10])

plt.title("Countries Send the Most Athletes to the Olympics")

plt.xlabel("Countries")

plt.ylabel("Athlete Count")

# Sporcuların çoğu Amerika Birleşik Devletleri, İngiltere, Fransa, Almanya, İtalya, Japonya, Kanada ve Hollanda gibi birinci dünya ülkelerini temsil etmiştir.

# ## Hangi ülkeler eve en çok madalyayı götürdü?

df[df.Medal != "Medal Not Won"]

df_filtered = df[df.Medal != "Medal Not Won"]

medals_by_country = (

df_filtered.groupby("Team")["Medal"].count().sort_values(ascending=False)

)

medals_by_country

plt.figure(figsize=[18, 8])

plt.xticks(rotation=45) # Ülkelerin isminin açılı yazılmasını sağlıyor

sns.barplot(

x=medals_by_country[:10].index, y=medals_by_country[:10], palette="YlOrBr_r"

)

plt.title("Countries Won the Most Medals in the Olympics")

# En çok madalya United States temsil eden takımlar tarafından kazanılmış.

# ## Ülkeler gönderdikleri sporcu sayılarına göre madalya kazanmakta ne kadar başarılı?

filtered_athlete = athlete_count[athlete_count > 1000]

country_success = (

(medals_by_country / filtered_athlete).sort_values(ascending=False).dropna()

)

country_success

plt.figure(figsize=[15, 10])

sns.barplot(

x=country_success.values * 100, y=country_success.index, palette="coolwarm_r"

)

plt.title("Countries Medal Won Percentage in the Olympics")

plt.xlabel("Percentage (%)")

# ## Sporcuların Yaşının Zamana Göre Değişimi.

plt.figure(figsize=[20, 10])

sns.boxplot(x="Year", y="Age", data=df)

plt.title("Variation Of Athletes Age Over Time")

# **Box Plot**, sporcuların medyan yaşının 120 yıllık bir süre boyunca nispeten sabit kaldığını ve **25 - 26 yıl** civarında gezindiğini gösteriyor. Bu eğilim, çoğu insanın 20 ila 30 yaşları arasında atletik zirvede olduğu ve ardından vücutlarında geri dönüşü olmayan değişiklikler yaşamaya başladığı gerçeğiyle bilimsel olarak desteklenmektedir.

# ## Yıllar içinde zamanla katılımcı sayılarımız nasıl değişmiş?

athlete_by_year = df.groupby("Year")["Name"].count()

athlete_by_year

plt.figure(figsize=[18, 8])

plt.xticks(np.linspace(1896, 2016, 13)) # eksenlerin aralıklarını ayarlar

plt.grid() # kareli defter gibi bir arkaplan oluşturuyor

sns.lineplot(x=athlete_by_year.index, y=athlete_by_year.values)

plt.title("Change in the Number of Athletes Over the Years")

plt.ylabel("Athlete Count")

# Yıllar geçtikçe, Olimpiyatlardaki yarışmacı sayısı genel olarak giderek artış göstermiş. Ancak, üç durumda (1932, 1956 ve 1980) katılımcı sayısında önemli bir düşüş var.

# > **EK BİLGİ:** Bu durumların sebebi 2 kısımda değerlendirilebilir:

# * 1932 Olimpiyatları Büyük Buhran sırasında gerçekleştiğinden, çok az ülke yarışmak için takım gönderdi.

# * 1956 ve 1980 Olimpiyatlarına katılım, jeopolitik nedenlerle çeşitli ulus gruplarının toptan boykotları nedeniyle oldukça kısıtlandı. (ALAN BİLGİSİ)

plt.figure(figsize=[18, 8])

plt.xticks(np.linspace(1896, 2016, 13))

plt.grid()

sns.lineplot(x=athlete_by_year.index, y=athlete_by_year.values)

plt.title("Change in the Number of Athletes Over the Years")

plt.ylabel("Athlete Count")

plt.text(x=1932, y=1500, s="!", weight="bold", fontsize=35, color="red")

plt.text(x=1956, y=4000, s="!", weight="bold", fontsize=35, color="red")

plt.text(x=1980, y=6000, s="!", weight="bold", fontsize=35, color="red")

|

# # Fun with Variational Autoencoders

# This is a starter kernel to use **Labelled Faces in the Wild (LFW) Dataset** in order to maintain knowledge about main Autoencoder principles. PyTorch will be used for modelling.

# This kernel will not update for a while for the purpose of training by yourself.

# ### **Fork it and give it an upvote.**

#

# Useful links:

# * [Building Autoencoders in Keras](https://blog.keras.io/building-autoencoders-in-keras.html)

# * [Conditional VAE (Russian)](https://habr.com/ru/post/331664/)

# * [Tutorial on Variational Autoencoders](https://arxiv.org/abs/1606.05908)

# * [Introducing Variational Autoencoders (in Prose and Code)](https://blog.fastforwardlabs.com/2016/08/12/introducing-variational-autoencoders-in-prose-and.html)

# * [How Autoencoders work - Understanding the math and implementation (Notebook)](https://www.kaggle.com/shivamb/how-autoencoders-work-intro-and-usecases)

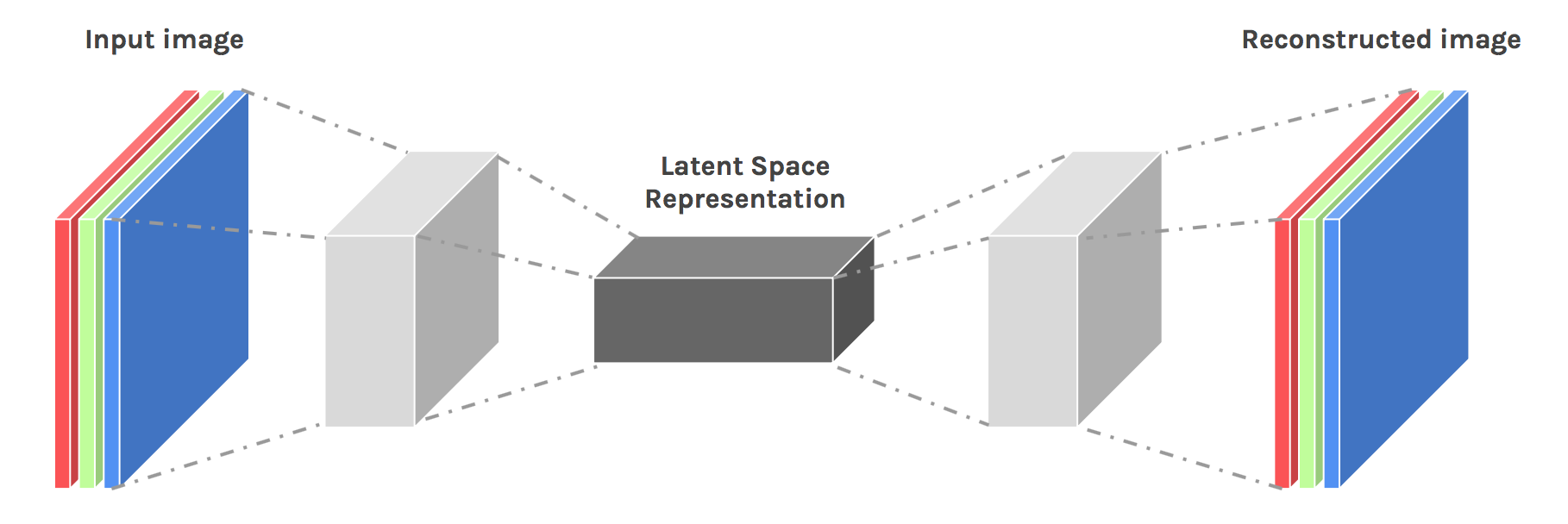

# # A bit of theory

# "Autoencoding" is a data compression algorithm where the compression and decompression functions are 1) data-specific, 2) lossy, and 3) learned automatically from examples rather than engineered by a human. Additionally, in almost all contexts where the term "autoencoder" is used, the compression and decompression functions are implemented with neural networks.

# 1) Autoencoders are data-specific, which means that they will only be able to compress data similar to what they have been trained on. This is different from, say, the MPEG-2 Audio Layer III (MP3) compression algorithm, which only holds assumptions about "sound" in general, but not about specific types of sounds. An autoencoder trained on pictures of faces would do a rather poor job of compressing pictures of trees, because the features it would learn would be face-specific.

# 2) Autoencoders are lossy, which means that the decompressed outputs will be degraded compared to the original inputs (similar to MP3 or JPEG compression). This differs from lossless arithmetic compression.

# 3) Autoencoders are learned automatically from data examples, which is a useful property: it means that it is easy to train specialized instances of the algorithm that will perform well on a specific type of input. It doesn't require any new engineering, just appropriate training data.

# source: https://blog.keras.io/building-autoencoders-in-keras.html

#

import matplotlib.pyplot as plt

import os

import glob

import pandas as pd

import random

import numpy as np

import cv2

import base64

import imageio

import torch

import torch.nn as nn

import torch.nn.functional as F

import torch.optim as optim

import torch.utils.data as data_utils

from copy import deepcopy

from torch.autograd import Variable

from tqdm import tqdm

from pprint import pprint

from PIL import Image

from sklearn.model_selection import train_test_split

import os

DATASET_PATH = "/kaggle/input/lfw-dataset/lfw-deepfunneled/lfw-deepfunneled/"

ATTRIBUTES_PATH = "/kaggle/input/lfw-attributes/lfw_attributes.txt"

DEVICE = torch.device("cuda")

# # Explore the data

dataset = []

for path in glob.iglob(os.path.join(DATASET_PATH, "**", "*.jpg")):

person = path.split("/")[-2]

dataset.append({"person": person, "path": path})

dataset = pd.DataFrame(dataset)

# too much Bush

dataset = dataset.groupby("person").filter(lambda x: len(x) < 25)

dataset.head(10)

dataset.groupby("person").count()[:200].plot(kind="bar", figsize=(20, 5))

plt.figure(figsize=(20, 10))

for i in range(20):

idx = random.randint(0, len(dataset))

img = plt.imread(dataset.path.iloc[idx])

plt.subplot(4, 5, i + 1)

plt.imshow(img)

plt.title(dataset.person.iloc[idx])

plt.xticks([])

plt.yticks([])

plt.tight_layout()

plt.show()

# # Prepare the dataset

def fetch_dataset(dx=80, dy=80, dimx=45, dimy=45):

df_attrs = pd.read_csv(

ATTRIBUTES_PATH,

sep="\t",

skiprows=1,

)

df_attrs = pd.DataFrame(df_attrs.iloc[:, :-1].values, columns=df_attrs.columns[1:])

photo_ids = []

for dirpath, dirnames, filenames in os.walk(DATASET_PATH):

for fname in filenames:

if fname.endswith(".jpg"):

fpath = os.path.join(dirpath, fname)

photo_id = fname[:-4].replace("_", " ").split()

person_id = " ".join(photo_id[:-1])

photo_number = int(photo_id[-1])

photo_ids.append(

{"person": person_id, "imagenum": photo_number, "photo_path": fpath}

)

photo_ids = pd.DataFrame(photo_ids)

df = pd.merge(df_attrs, photo_ids, on=("person", "imagenum"))

assert len(df) == len(df_attrs), "lost some data when merging dataframes"

all_photos = (

df["photo_path"]

.apply(imageio.imread)

.apply(lambda img: img[dy:-dy, dx:-dx])

.apply(lambda img: np.array(Image.fromarray(img).resize([dimx, dimy])))

)

all_photos = np.stack(all_photos.values).astype("uint8")

all_attrs = df.drop(["photo_path", "person", "imagenum"], axis=1)

return all_photos, all_attrs

data, attrs = fetch_dataset()

# 45,45

IMAGE_H = data.shape[1]

IMAGE_W = data.shape[2]

N_CHANNELS = 3

data = np.array(data / 255, dtype="float32")

X_train, X_val = train_test_split(data, test_size=0.2, random_state=42)

X_train = torch.FloatTensor(X_train)

X_val = torch.FloatTensor(X_val)

# # Building simple autoencoder

dim_z = 100

X_train.shape

class Autoencoder(nn.Module):

def __init__(self):

super().__init__()

self.encoder = nn.Sequential(

nn.Linear(45 * 45 * 3, 1500),

nn.BatchNorm1d(1500),

nn.ReLU(),

nn.Linear(1500, 1000),

nn.BatchNorm1d(1000),

nn.ReLU(),

# nn.Linear(1000,500),

# nn.ReLU(),

nn.Linear(1000, dim_z),

nn.BatchNorm1d(dim_z),

nn.ReLU(),

)

self.decoder = nn.Sequential(

nn.Linear(dim_z, 1000),

nn.BatchNorm1d(1000),

nn.ReLU(),

# nn.Linear(500,1000),

# nn.ReLU(),

nn.Linear(1000, 1500),

nn.BatchNorm1d(1500),

nn.ReLU(),

nn.Linear(1500, 45 * 45 * 3),

)

def encode(self, x):

return self.encoder(x)

def decode(self, z):

return self.decoder(z)

def forward(self, x):

# print("shape",x.shape)

# x = x.view(-1,45*45*3)

encoded = self.encode(x)

# latent_view = self.latent_view(encoder)

# decoder = self.decoder(latent_view).resize_((45,45,3))

decoded = self.decode(encoded)

return encoded, decoded

class Autoencoder_cnn(nn.Module):

def __init__(self):

super().__init__()

self.encoder = nn.Sequential(

nn.Conv2d(in_channels=3, out_channels=16, kernel_size=3),

nn.ReLU(),

nn.MaxPool2d(kernel_size=2),

nn.Conv2d(in_channels=16, out_channels=8, kernel_size=3),

nn.ReLU(),

nn.MaxPool2d(kernel_size=2),

)

self.decoder = nn.Sequential(

nn.ConvTranspose2d(in_channels=8, out_channels=16, kernel_size=5, stride=2),

nn.ReLU(),

nn.ConvTranspose2d(in_channels=16, out_channels=3, kernel_size=5, stride=2),

# nn.ReLU(),

# nn.ConvTranspose2d(in_channels=8, out_channels=8, kernel_size=3, stride=2),

# nn.ReLU(),

# nn.ConvTranspose2d(in_channels=8, out_channels=3, kernel_size=5, stride=2)

)

def decode(self, z):

return self.decoder(z)

def forward(self, x):

# print("shape",x.shape)

# torch.Size([64, 45, 45, 3])

# x = x.view(-1,45*45*3)

# change to

x = x.permute(0, 3, 1, 2)

encoded = self.encoder(x)

# torch.Size([64, 8, 9, 9])

# print(encoded.shape)

# latent_view = self.latent_view(encoder)

# decoder = self.decoder(latent_view).resize_((45,45,3))

decoded = self.decode(encoded)

return encoded, decoded

model_cnn = Autoencoder_cnn().cuda()

model_auto = Autoencoder().to(DEVICE)

print(model_auto)

print(model_cnn)

# cnn

# torch.Size([64, 45, 45, 3]) -> permute to (64, 3, 45, 45)

# decoder shape: torch.Size([64, 3, 13, 13])

# # Train autoencoder

def get_batch(data, batch_size=64):

total_len = data.shape[0]

for i in range(0, total_len, batch_size):

yield data[i : min(i + batch_size, total_len)]

def plot_gallery(images, h, w, n_row=3, n_col=6, with_title=False, titles=[]):

plt.figure(figsize=(1.5 * n_col, 1.7 * n_row))

plt.subplots_adjust(bottom=0, left=0.01, right=0.99, top=0.90, hspace=0.35)

for i in range(n_row * n_col):

plt.subplot(n_row, n_col, i + 1)

try:

plt.imshow(

images[i].reshape((h, w, 3)),

cmap=plt.cm.gray,

vmin=-1,

vmax=1,

interpolation="nearest",

)

if with_title:

plt.title(titles[i])

plt.xticks(())

plt.yticks(())

except:

pass

def fit_epoch(model, train_x, criterion, optimizer, batch_size, is_cnn=False):

running_loss = 0.0

processed_data = 0

for inputs in get_batch(train_x, batch_size):

if not is_cnn:

inputs = inputs.view(-1, 45 * 45 * 3)

inputs = inputs.to(DEVICE)

optimizer.zero_grad()

encoder, decoder = model(inputs)

# print('decoder shape: ', decoder.shape)

if not is_cnn:

outputs = decoder.view(-1, 45 * 45 * 3)

else:

outputs = decoder.permute(0, 2, 3, 1)

loss = criterion(outputs, inputs)

loss.backward()

optimizer.step()

running_loss += loss.item() * inputs.shape[0]

processed_data += inputs.shape[0]

train_loss = running_loss / processed_data

return train_loss

def eval_epoch(model, x_val, criterion, is_cnn=False):

running_loss = 0.0

processed_data = 0

model.eval()

for inputs in get_batch(x_val):

if not is_cnn:

inputs = inputs.view(-1, 45 * 45 * 3)

inputs = inputs.to(DEVICE)

with torch.set_grad_enabled(False):

encoder, decoder = model(inputs)

if not is_cnn:

outputs = decoder.view(-1, 45 * 45 * 3)

else:

outputs = decoder.permute(0, 2, 3, 1)

loss = criterion(outputs, inputs)

running_loss += loss.item() * inputs.shape[0]

processed_data += inputs.shape[0]

val_loss = running_loss / processed_data

# draw

with torch.set_grad_enabled(False):

pic = x_val[3]

if not is_cnn:

pic_input = pic.view(-1, 45 * 45 * 3)

else:

# print(pic.shape)

pic_input = torch.FloatTensor(pic.unsqueeze(0))

# print(pic.shape)

pic_input = pic_input.to(DEVICE)

encoder, decoder = model(pic_input)

if not is_cnn:

pic_output = decoder.view(-1, 45 * 45 * 3).squeeze()

else:

# print('decoder shape eval: ', decoder.shape)

pic_output = decoder.permute(0, 2, 3, 1)

pic_output = pic_output.to("cpu")

# pic_output = pic_output.view(-1, 45,45,3)

# print(pic)

# print(pic_output)

pic_input = pic_input.to("cpu")

# pics = pic_input + pic_output

# plot_gallery(pics,45,45,10,2)

plot_gallery([pic_input, pic_output], 45, 45, 1, 2)

return val_loss

def train(train_x, val_x, model, epochs=10, batch_size=32, is_cnn=False):

criterion = nn.MSELoss()

optimizer = torch.optim.Adam(model.parameters(), lr=0.001)

history = []

log_template = (

"\nEpoch {ep:03d} train_loss: {t_loss:0.4f} val_loss: {val_loss:0.4f}"

)

with tqdm(desc="epoch", total=epochs) as pbar_outer:

for epoch in range(epochs):

train_loss = fit_epoch(

model, train_x, criterion, optimizer, batch_size, is_cnn

)

val_loss = eval_epoch(model, val_x, criterion, is_cnn)

print("loss: ", train_loss)

history.append((train_loss, val_loss))

pbar_outer.update(1)

tqdm.write(

log_template.format(ep=epoch + 1, t_loss=train_loss, val_loss=val_loss)

)

return history

# history_cnn = train(X_train, X_val, model_cnn, epochs=30, batch_size=64, is_cnn=True)

history = train(X_train, X_val, model_auto, epochs=50, batch_size=64)

train_loss, val_loss = zip(*history)

plt.figure(figsize=(15, 10))

plt.plot(train_loss, label="Train loss")

plt.plot(val_loss, label="Val loss")

plt.legend(loc="best")

plt.xlabel("epochs")

plt.ylabel("loss")

plt.plot()

# # Sampling

# Let's generate some samples from random vectors

z = np.random.randn(25, dim_z)

print(z.shape)

with torch.no_grad():

inputs = torch.FloatTensor(z)

inputs = inputs.to(DEVICE)

model_auto.eval()

output = model_auto.decode(inputs)

# print(output)

plot_gallery(output.data.cpu().numpy(), IMAGE_H, IMAGE_W, n_row=5, n_col=5)

# Convolution autoencoder sampling

# z = torch.rand((25,8,9,9))

#

# with torch.no_grad():

# inputs = torch.FloatTensor(z)

#

# inputs = inputs.to(DEVICE)

# model_cnn.eval()

# output = model_cnn.decode(inputs)

# print(output.shape)

# output = output.permute(0,2,3,1)

# print(output.shape)

#

# #print(output)

#

# plot_gallery(output.data.cpu().numpy(), IMAGE_H, IMAGE_W, n_row=5, n_col=5)

# Attributes

# # Adding smile and glasses

# Let's find some attributes like smiles or glasses on the photo and try to add it to the photos which don't have it. We will use the second dataset for it. It contains a bunch of such attributes.

attrs.head()

attrs.columns

smile_ids = attrs["Smiling"].sort_values(ascending=False).iloc[100:125].index.values

smile_data = data[smile_ids]

no_smile_ids = attrs["Smiling"].sort_values(ascending=True).head(25).index.values

no_smile_data = data[no_smile_ids]

eyeglasses_ids = attrs["Eyeglasses"].sort_values(ascending=False).head(25).index.values

eyeglasses_data = data[eyeglasses_ids]

sunglasses_ids = attrs["Sunglasses"].sort_values(ascending=False).head(25).index.values

sunglasses_data = data[sunglasses_ids]

plot_gallery(

smile_data, IMAGE_H, IMAGE_W, n_row=5, n_col=5, with_title=True, titles=smile_ids

)

plot_gallery(

no_smile_data,

IMAGE_H,

IMAGE_W,

n_row=5,

n_col=5,

with_title=True,

titles=no_smile_ids,

)

plot_gallery(

eyeglasses_data,

IMAGE_H,

IMAGE_W,

n_row=5,

n_col=5,

with_title=True,

titles=eyeglasses_ids,

)

plot_gallery(

sunglasses_data,

IMAGE_H,

IMAGE_W,

n_row=5,

n_col=5,

with_title=True,

titles=sunglasses_ids,

)

# Calculating latent space vector for the selected images.

def to_latent(pic):

with torch.no_grad():

inputs = torch.FloatTensor(pic.reshape(-1, 45 * 45 * 3))

inputs = inputs.to(DEVICE)

model_auto.eval()

output = model_auto.encode(inputs)

return output

def from_latent(vec):

with torch.no_grad():

inputs = vec.to(DEVICE)

model_auto.eval()

output = model_auto.decode(inputs)

return output

smile_data[0].reshape(-1, 45 * 45 * 3).shape

smile_latent = to_latent(smile_data).mean(axis=0)

no_smile_latent = to_latent(no_smile_data).mean(axis=0)

sunglasses_latent = to_latent(sunglasses_data).mean(axis=0)

smile_vec = smile_latent - no_smile_latent

sunglasses_vec = sunglasses_latent - smile_latent

def make_me_smile(ids):

for id in ids:

pic = data[id : id + 1]

latent_vec = to_latent(pic)

latent_vec[0] += smile_vec

pic_output = from_latent(latent_vec)

pic_output = pic_output.view(-1, 45, 45, 3).cpu()

plot_gallery([pic, pic_output], IMAGE_H, IMAGE_W, n_row=1, n_col=2)

def give_me_sunglasses(ids):

for id in ids:

pic = data[id : id + 1]

latent_vec = to_latent(pic)

latent_vec[0] += sunglasses_vec

pic_output = from_latent(latent_vec)

pic_output = pic_output.view(-1, 45, 45, 3).cpu()

plot_gallery([pic, pic_output], IMAGE_H, IMAGE_W, n_row=1, n_col=2)

make_me_smile(no_smile_ids)

give_me_sunglasses(smile_ids)

# While the concept is pretty straightforward the simple autoencoder have some disadvantages. Let's explore them and try to do better.

# # Variational autoencoder

# So far we have trained our encoder to reconstruct the very same image that we've transfered to latent space. That means that when we're trying to **generate** new image from the point decoder never met we're getting _the best image it can produce_, but the quelity is not good enough.

# > **In other words the encoded vectors may not be continuous in the latent space.**

# In other hand Variational Autoencoders makes not only one encoded vector but **two**:

# - vector of means, μ;

# - vector of standard deviations, σ.

#

# > picture from https://towardsdatascience.com/intuitively-understanding-variational-autoencoders-1bfe67eb5daf

#

dim_z = 256

class VAE(nn.Module):

def __init__(self):

super().__init__()

self.enc_fc1 = nn.Linear(1000, dim_z)

self.enc_fc2 = nn.Linear(1000, dim_z)

self.encoder = nn.Sequential(

nn.Linear(45 * 45 * 3, 1500),

# nn.BatchNorm1d(1500),

nn.ReLU(),

nn.Linear(1500, 1000),

# nn.BatchNorm1d(1000),

nn.ReLU(),

)

self.decoder = nn.Sequential(

nn.Linear(dim_z, 1000),

# nn.BatchNorm1d(1000),

nn.ReLU(),

nn.Linear(1000, 1500),

# nn.BatchNorm1d(1500),

nn.ReLU(),

nn.Linear(1500, 45 * 45 * 3),

)

def encode(self, x):

x = self.encoder(x)

return self.enc_fc1(x), self.enc_fc2(x)

def reparametrize(self, mu, logvar):

##if self.training:

# std = logsigma.exp_()

# eps = Variable(std.data.new(std.size()).normal_())

# return eps.mul(std).add_(mu)

##else:

##return mu

std = logvar.mul(0.5).exp_()

eps = torch.cuda.FloatTensor(std.size()).normal_()

eps = Variable(eps)

return eps.mul(std).add_(mu)

def decode(self, z):

return self.decoder(z)

def forward(self, x):

mu, logvar = self.encode(x)

encoded = self.reparametrize(mu, logvar)

decoded = self.decode(encoded)

return mu, logvar, decoded

# -----------------------------------------------------------------

class VAE2(nn.Module):

def __init__(self):

super(VAE2, self).__init__()

# self.training = True

self.fc1 = nn.Linear(45 * 45 * 3, 1500)

self.fc21 = nn.Linear(1500, 200)

self.fc22 = nn.Linear(1500, 200)

self.fc3 = nn.Linear(200, 1500)

self.fc4 = nn.Linear(1500, 45 * 45 * 3)

self.relu = nn.LeakyReLU()

def encode(self, x):

x = self.relu(self.fc1(x))

return self.fc21(x), self.fc22(x)

def reparametrize(self, mu, logvar):

std = logvar.mul(0.5).exp_()

if torch.cuda.is_available():

eps = torch.cuda.FloatTensor(std.size()).normal_()

else:

eps = torch.FloatTensor(std.size()).normal_()

eps = Variable(eps)

zz = eps.mul(std).add_(mu)

# print(zz.shape)

return zz

def reparametrize2(self, mu, logvar):

if self.training:

std = logvar.exp_()

eps = Variable(std.data.new(std.size()).normal_())

return eps.mul(std).add_(mu)

else:

print("training is off")

return mu

def reparametrize3(self, mu, logvar):

std = logvar.exp_()

eps = Variable(std.data.new(std.size()).normal_())

return eps.mul(std).add_(mu)

# --new---------------------------------------

def reparameterize4(self, mu, logvar):

std = torch.exp(0.5 * logvar)

eps = torch.randn_like(std)

return eps.mul(std).add_(mu)

# ---------------------------------------

def decode(self, z):

z = self.relu(self.fc3(z)) # 1500

return torch.sigmoid(self.fc4(z))

def forward(self, x):

mu, logvar = self.encode(x)

z = self.reparameterize4(mu, logvar)

z = self.decode(z)

return z, mu, logvar

def KL_divergence(mu, logsigma):

return -(1 / 2) * (1 + 2 * logsigma - mu**2 - torch.exp(logsigma) ** 2).sum(

dim=-1

)

def log_likelihood(x, reconstruction):

loss = nn.BCELoss()

loss_val = loss(reconstruction, x)

a = loss_val

return a

def loss_vae(x, mu, logsigma, reconstruction):

# kl = -KL_divergence(mu, logsigma)

# like = log_likelihood(x, reconstruction)

# print('kl ',kl)

# print('like', like)

# return -(kl + like).mean()

# return -(-KL_divergence(mu, logsigma) + log_likelihood(x, reconstruction)).mean()

# print(nn.BCELoss(reduction = 'sum')(reconstruction, x))

BCE = nn.BCELoss(reduction="sum")(reconstruction, x)

KLD = -0.5 * (1 + logsigma - mu.pow(2) - logsigma.exp().pow(2)).sum()

print("BCE ", BCE)

print("KLD ", KLD)

return BCE + KLD

def loss_vae2(x, mu, logvar, reconstruction):

loss = nn.BCELoss()

BCE = loss(reconstruction, x)

KLD_element = mu.pow(2).add_(logvar.exp()).mul_(-1).add_(1).add_(logvar)

KLD = torch.sum(KLD_element).mul_(-0.5)

# KL divergence

return BCE + KLD

# return -(-KL_divergence(mu, logsigma) + log_likelihood(x, reconstruction)).mean()

def loss_vae_fn(x, recon_x, mu, logvar):

# print('recon_x ',recon_x.shape)

# print('x ',x.shape)

BCE = F.binary_cross_entropy(recon_x, x, reduction="sum")

# see Appendix B from VAE paper:

# Kingma and Welling. Auto-Encoding Variational Bayes. ICLR, 2014

# https://arxiv.org/abs/1312.6114

# 0.5 * sum(1 + log(sigma^2) - mu^2 - sigma^2)

KLD = -0.5 * torch.sum(1 + logvar - mu.pow(2) - logvar.exp())

return BCE + KLD

# model_vae = VAE().cuda()

model_vae = VAE2().to(DEVICE)

def fit_epoch_vae(model, train_x, optimizer, batch_size, is_cnn=False):

running_loss = 0.0

processed_data = 0

for inputs in get_batch(train_x, batch_size):

inputs = inputs.view(-1, 45 * 45 * 3)

inputs = inputs.to(DEVICE)

optimizer.zero_grad()

(

decoded,

mu,

logvar,

) = model(inputs)

# print('decoded shape: ', decoded.shape)

outputs = decoded.view(-1, 45 * 45 * 3)

outputs = outputs.to(DEVICE)

loss = loss_vae_fn(inputs, outputs, mu, logvar)

loss.backward()

optimizer.step()

running_loss += loss.item() * inputs.shape[0]

processed_data += inputs.shape[0]

train_loss = running_loss / processed_data

return train_loss

def eval_epoch_vae(model, x_val, batch_size):

running_loss = 0.0

processed_data = 0

model.eval()

for inputs in get_batch(x_val, batch_size=batch_size):

inputs = inputs.view(-1, 45 * 45 * 3)

inputs = inputs.to(DEVICE)

with torch.set_grad_enabled(False):

decoded, mu, logvar = model(inputs)

# print('decoder shape: ', decoder.shape)

outputs = decoded.view(-1, 45 * 45 * 3)

loss = loss_vae_fn(inputs, outputs, mu, logvar)

running_loss += loss.item() * inputs.shape[0]

processed_data += inputs.shape[0]

val_loss = running_loss / processed_data

# draw

with torch.set_grad_enabled(False):

pic = x_val[3]

pic_input = pic.view(-1, 45 * 45 * 3)

pic_input = pic_input.to(DEVICE)

decoded, mu, logvar = model(inputs)

# model.training = False

# print('decoder shape: ', decoded.shape)

pic_output = decoded[0].view(-1, 45 * 45 * 3).squeeze()

# pic_output2 = decoder[1].view(-1, 45*45*3).squeeze()

# pic_output3 = decoder[2].view(-1, 45*45*3).squeeze()

# print(pic_input)

# print('input shape ', pic_input.shape)

# print('outout shape ', pic_output.shape)

pic_output = pic_output.to("cpu")

# pic_output2 = pic_output2.to("cpu")

# pic_output3 = pic_output3.to("cpu")

pic_input = pic_input.to("cpu")

# print(pic_input)

plot_gallery([pic_input, pic_output], 45, 45, 1, 2)

return val_loss

def train_vae(train_x, val_x, model, epochs=10, batch_size=32, lr=0.001):

optimizer = torch.optim.Adam(model.parameters(), lr=lr)

history = []

log_template = (

"\nEpoch {ep:03d} train_loss: {t_loss:0.4f} val_loss: {val_loss:0.4f}"

)

with tqdm(desc="epoch", total=epochs) as pbar_outer:

for epoch in range(epochs):

train_loss = fit_epoch_vae(model, train_x, optimizer, batch_size)

val_loss = eval_epoch_vae(model, val_x, batch_size)

print("loss: ", train_loss)

history.append((train_loss, val_loss))

pbar_outer.update(1)

tqdm.write(

log_template.format(ep=epoch + 1, t_loss=train_loss, val_loss=val_loss)

)

return history

history_vae = train_vae(X_train, X_val, model_vae, epochs=20, batch_size=64, lr=0.0005)

train_loss, val_loss = zip(*history_vae)

plt.figure(figsize=(15, 10))

plt.plot(train_loss, label="Train loss")

plt.plot(val_loss, label="Val loss")

plt.legend(loc="best")

plt.xlabel("epochs")

plt.ylabel("loss")

plt.plot()

|

# # Covid-19 CT ECKP 2023 - Evan

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

from sklearn.metrics import classification_report

import tensorflow as tf

from tensorflow.keras.preprocessing import image_dataset_from_directory

from tensorflow.keras.applications import ResNet50

from tensorflow.keras.models import Sequential, load_model

from tensorflow.keras.layers import (

Dense,

Conv2D,

MaxPooling2D,

InputLayer,

Flatten,

Dropout,

)

def plot_scores(train):

accuracy = train.history["accuracy"]

val_accuracy = train.history["val_accuracy"]

epochs = range(len(accuracy))

plt.plot(epochs, accuracy, "b", label="Score apprentissage")

plt.plot(epochs, val_accuracy, "r", label="Score validation")

plt.title("Scores")

plt.legend()

plt.show()

data_root = "/kaggle/input/large-covid19-ct-slice-dataset/curated_data/curated_data"

random_seed = 1075

batch_size = 32

validation_split = 0.2

image_size = (256, 256)

train_dataset = image_dataset_from_directory(

data_root,

validation_split=validation_split,

subset="training",

seed=random_seed,

image_size=image_size,

batch_size=batch_size,

label_mode="categorical",

)

val_dataset = image_dataset_from_directory(

data_root,

validation_split=validation_split,

subset="validation",

seed=random_seed,

image_size=image_size,

batch_size=batch_size,

label_mode="categorical",

)

resnet50 = ResNet50(include_top=False, input_shape=(256, 256, 3), classes=3)

model = Sequential()

model.add(resnet50)

model.add(Flatten())

model.add(Dense(64, activation="relu"))

model.add(Dense(3, activation="softmax"))

model.summary()

model.compile(

loss="categorical_crossentropy",

optimizer=tf.keras.optimizers.SGD(1e-2),

metrics=["accuracy"],

)

history = model.fit(train_dataset, validation_data=val_dataset, epochs=10, verbose=1)

plot_scores(history)

y_val = np.array([])

y_hat = np.array([])

for x, y in val_dataset:

y_val = np.concatenate([y_val, np.argmax(y.numpy(), axis=-1)])

y_hat = np.concatenate([y_hat, np.argmax(model.predict(x), axis=1)])

print(classification_report(y_val, y_hat, target_names=val_dataset.class_names))

meta_covid = pd.read_csv(

"/kaggle/input/large-covid19-ct-slice-dataset/meta_data_covid.csv",

encoding="windows-1252",

)

meta_cap = pd.read_csv(

"/kaggle/input/large-covid19-ct-slice-dataset/meta_data_cap.csv",

encoding="windows-1252",

)

meta_normal = pd.read_csv(

"/kaggle/input/large-covid19-ct-slice-dataset/meta_data_normal.csv",

encoding="windows-1252",

)

print(f"meta_covid: {meta_covid.shape}")

print(f"meta_cap: {meta_cap.shape}")

print(f"meta_normal: {meta_normal.shape}")

print(f"meta_covid: {meta_covid.columns}")

print(f"meta_normal: {meta_normal.columns}")

print(f"meta_cap: {meta_cap.columns}")

meta_covid.head()

meta_covid.count()

|

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

import xgboost as xgb

import seaborn as sns

import time

from math import sqrt

from numpy import loadtxt

from itertools import product

from tqdm import tqdm

from numpy import loadtxt

import gc

from sklearn import preprocessing

from sklearn.model_selection import cross_val_score

from sklearn.metrics import mean_squared_error, f1_score

from sklearn.metrics import accuracy_score

from sklearn.model_selection import KFold

from sklearn.feature_extraction.text import TfidfVectorizer

from sklearn.model_selection import train_test_split

from sklearn.preprocessing import LabelEncoder

from catboost import CatBoostClassifier

import os

for dirname, _, filenames in os.walk("/kaggle/input"):

for filename in filenames:

print(os.path.join(dirname, filename))

# Any results you write to the current directory are saved as output.

def reduce_mem_usage(df):

"""

iterate through all the columns of a dataframe and

modify the data type to reduce memory usage.

"""

start_mem = df.memory_usage().sum() / 1024**2

print(("Memory usage of dataframe is {:.2f}" "MB").format(start_mem))

for col in df.columns:

col_type = df[col].dtype

print(str(col_type))

if str(col_type) == "datetime64[ns]":

continue

if col_type != object:

c_min = df[col].min()

c_max = df[col].max()

if str(col_type)[:3] == "int":

if c_min > np.iinfo(np.int8).min and c_max < np.iinfo(np.int8).max:

df[col] = df[col].astype(np.int8)

elif c_min > np.iinfo(np.int16).min and c_max < np.iinfo(np.int16).max:

df[col] = df[col].astype(np.int16)

elif c_min > np.iinfo(np.int32).min and c_max < np.iinfo(np.int32).max:

df[col] = df[col].astype(np.int32)

elif c_min > np.iinfo(np.int64).min and c_max < np.iinfo(np.int64).max:

df[col] = df[col].astype(np.int64)

elif str(col_type) != "Timestamp":

if (

c_min > np.finfo(np.float16).min

and c_max < np.finfo(np.float16).max

):

df[col] = df[col].astype(np.float16)

elif (

c_min > np.finfo(np.float32).min

and c_max < np.finfo(np.float32).max

):

df[col] = df[col].astype(np.float32)

else:

df[col] = df[col].astype(np.float64)

else:

df[col] = df[col].astype("category")

end_mem = df.memory_usage().sum() / 1024**2

print(("Memory usage after optimization is: {:.2f}" "MB").format(end_mem))

print("Decreased by {:.1f}%".format(100 * (start_mem - end_mem) / start_mem))

return df

def df_info(df):

print("----------Top-5- Record----------")

print(df.head(5))

print("-----------Information-----------")

print(df.info())

print("-----------Data Types-----------")

print(df.dtypes)

print("----------Missing value-----------")

print(df.isnull().sum())

print("----------Null value-----------")

print(df.isna().sum())

print("----------Shape of Data----------")

print(df.shape)

print("----------description of Data----------")

print(df.describe())

print("----------Uniques of Data----------")

print(df.nunique())

print("------------Columns in data---------")

print(df.columns)

def downcast_dtypes(df):

float_cols = [c for c in df if df[c].dtype == "float64"]

int_cols = [c for c in df if df[c].dtype in ["int64", "int32"]]

df[float_cols] = df[float_cols].astype(np.float32)

df[int_cols] = df[int_cols].astype(np.int16)

return df

def model_performance_sc_plot(predictions, labels, title):

min_val = max(max(predictions), max(labels))

max_val = min(min(predictions), min(labels))

performance_df = pd.DataFrame({"Labels": labels})

performance_df["Predictions"] = predictions

sns.jointplot(y="Labels", x="Predictions", data=performance_df, kind="reg")

plt.plot([min_val, max_val], [min_val, max_val], "m--")

plt.title(title)

plt.show()

def calculate_counts(column_name, ref_sets, extension_set):

ref_sets_ids = [set(ref[column_name]) for ref in ref_sets]

ext_ids = set(extension_set[column_name])

refs_union = reduce(lambda s1, s2: s1 | s2, ref_sets_ids)

ref_counts = [len(ref) for ref in ref_sets_ids]

ext_count = len(ext_ids)

union_count = len(refs_union)

intersection_count = len(ext_ids & refs_union)

all_counts = ref_counts + [union_count, ext_count, intersection_count]

res_index = ["Ref {}".format(i) for i in range(1, len(ref_sets) + 1)] + [

"Refs Union",

"Extension",

"Union x Extension",

]

return pd.DataFrame({"Count": all_counts}, index=res_index)

train_path = "/kaggle/input/airplane-accidents-severity-dataset/train.csv"

test_path = "/kaggle/input/airplane-accidents-severity-dataset/test.csv"

submission_path = (

"/kaggle/input/airplane-accidents-severity-dataset/sample_submission.csv"

)

train = pd.read_csv(train_path)

df_info(train)

object_cols = ["Severity"]

lb = LabelEncoder()

lb.fit(train[object_cols])

train[object_cols] = lb.transform(train[object_cols])

train["Control_Metric"] = train["Control_Metric"].clip(25, 100)

train.head()

# Accident type code mean wrt control metric, Severity, Turbulence, Maxelevation, Adverse Weatehr

means_accident_code = train.groupby(["Accident_Type_Code"]).agg(

{

"Severity": "mean",

"Safety_Score": "mean",

"Days_Since_Inspection": "mean",

"Total_Safety_Complaints": "mean",

"Control_Metric": "mean",

"Turbulence_In_gforces": "mean",

"Cabin_Temperature": "mean",

"Max_Elevation": "mean",

"Violations": "mean",

"Adverse_Weather_Metric": "mean",

}

)

means_accident_code = means_accident_code.reset_index()

cols = list(means_accident_code.columns)

for i in range(1, len(cols)):

cols[i] = cols[i] + "_mean"

print(cols)

means_accident_code.columns = cols

train = train.merge(means_accident_code, on=["Accident_Type_Code"], how="left")

df = train.groupby(["Accident_Type_Code", "Severity"])["Severity"].agg({"no": "count"})

mask = df.groupby(level=0).agg("idxmax")

df_count = df.loc[mask["no"]]

df_count.drop(["no"], axis=1, inplace=True)

df_count = df_count.reset_index()

df_count.columns = ["Accident_Type_Code", "Severity_max"]

train = train.merge(df_count, on=["Accident_Type_Code"], how="left")

train.drop(["Accident_Type_Code"], axis=1, inplace=True)

train.head()

X = train.drop(["Severity", "Accident_ID"], axis=1)

Y = train["Severity"].values

x_train, x_val, y_train, y_val = train_test_split(X, Y, test_size=0.25)

x_val1, x_val2, y_val1, y_val2 = train_test_split(x_val, y_val, test_size=0.25)

test = pd.read_csv(test_path)

test.head().T

test = test.merge(means_accident_code, on=["Accident_Type_Code"], how="left")

test = test.merge(df_count, on=["Accident_Type_Code"], how="left")

test.drop(["Accident_Type_Code"], axis=1, inplace=True)

x_test = test.drop(["Accident_ID"], axis=1)

cb = CatBoostClassifier(

iterations=1000,

max_ctr_complexity=10,

random_seed=0,

od_type="Iter",

od_wait=50,

verbose=100,

depth=12,

)

from xgboost import XGBClassifier

xgbRegressor = XGBClassifier(

max_depth=10, eta=0.2, n_estimators=500, colsample_bytree=0.7, subsample=0.7, seed=0

)

from sklearn.model_selection import KFold

kf = KFold(n_splits=5, shuffle=True)

y_pred = np.zeros((Y.shape[0], 1))

for train_index, test_index in kf.split(X):

x_train, y_train = X.loc[train_index, :], Y[train_index]

x_val, y_val = X.loc[test_index, :], Y[test_index]

cb.fit(x_train, y_train)

y_pred_cb = cb.predict(x_val)

y_pred[test_index] = y_pred_cb

print("F1_Score val1 : ", f1_score(y_val, y_pred_cb, average="weighted"))

from sklearn.model_selection import KFold

kf = KFold(n_splits=5, shuffle=True)

y_pred_xg = np.zeros((Y.shape[0], 1))

for train_index, test_index in kf.split(X):

x_train, y_train = X.loc[train_index, :], Y[train_index]

x_val, y_val = X.loc[test_index, :], Y[test_index]

xgbRegressor.fit(x_train, y_train, verbose=100)

y_pred_x = xgbRegressor.predict(x_val)

y_pred_xg[test_index] = y_pred_x.reshape(-1, 1)

print("F1_Score val1 : ", f1_score(y_val, y_pred_x, average="weighted"))

cb.fit(X, Y)

test_pred_cb = cb.predict(x_test)

xgbRegressor.fit(X, Y)

test_pred_xg = xgbRegressor.predict(x_test)

first_level = pd.DataFrame(y_pred, columns=["catboost"])

first_level["XGB"] = y_pred_xg

first_level_test = pd.DataFrame(test_pred_cb, columns=["catboost"])

first_level_test["XGB"] = test_pred_xg

first_level_train, first_level_val, y_train, y_val = train_test_split(

first_level, Y, test_size=0.3

)

metamodel = XGBClassifier(

max_depth=8, eta=0.2, n_estimators=500, colsample_bytree=0.7, subsample=0.7, seed=0

)

metamodel.fit(

first_level_train,

y_train,

eval_set=[(first_level_train, y_train), (first_level_val, y_val)],

verbose=20,

early_stopping_rounds=120,

)

ensemble_pred = metamodel.predict(first_level_val)

test_pred = metamodel.predict(first_level_test)

print("Val F1-Score :", f1_score(y_val, ensemble_pred, average="weighted"))

model_performance_sc_plot(ensemble_pred, y_val, "Validation")

# xgbRegressor.fit(X, Y, eval_set = [(X,Y),(x_val1, y_val1)], verbose = 20, early_stopping_rounds = 120)

# test_pred = xgbRegressor.predict(x_test)

y_pred = test_pred

y_pred = y_pred.astype(np.int32)

y_pred = lb.inverse_transform(y_pred)

ids = []

for i in range(test.shape[0]):

ids.append(test.loc[i, "Accident_ID"])

sub = pd.DataFrame(ids, columns=["Accident_ID"])

sub["Severity"] = y_pred

sub.head()

sub.to_csv("Submission.csv", index=False)

|

#

# # Developer's Survey Data Analysis

# ####We have imported the Developers Survey Dataset which was already available on Kaggle Datasets. This data was collected from the users of Stack Overflow in 2020. We will analyze the survey data and try to find some important insites from the dataset.

# * We will check our directory for the required .csv files from the list of files.

# checking the directory path

import os

os.listdir("/kaggle/input/stack-overflow-developer-survey-2020/developer_survey_2020")

# ####There are four files in our folder, but we need only need files with Survey Questions and Survey Submissions

# importing required libraries

import pandas as pd

import numpy as np

# ####We will import the required files with the help of the pandas therefore we will install the current required libraries for the analysis. The files will be imported as a dataframe with help of *read_csv()* of pandas.

# importing Data

raw_df = pd.read_csv(

"/kaggle/input/stack-overflow-developer-survey-2020/developer_survey_2020/survey_results_public.csv"

)

raw_question_df = pd.read_csv(

"/kaggle/input/stack-overflow-developer-survey-2020/developer_survey_2020/survey_results_schema.csv"

)

# ####Observing the basic structure of the dataset

# viewing data frame

raw_df.head(2)

# observing the columns of the dataset

raw_df.columns

# ####As we can observe that there are many unnecessary columns as per our requirement for the analysis, so we will create a list of columns that we need for the analysis.

# create a list of column that we need for the analysis

selected_columns = [

"Age",

"Age1stCode",

"Employment",

"Gender",

"DevType",

"YearsCode",

"YearsCodePro",

"LanguageDesireNextYear",

"LanguageWorkedWith",

"EdLevel",

"UndergradMajor",

"WorkWeekHrs",

"Hobbyist",

"Country",

]

# ####We will create a new Dataframe from the *raw_df* with only the selected columns, this will considerably reduce the size of dataset used in analysis and it will help us with keeping our focus on important field.

survey_df = raw_df[selected_columns].copy()

# observing new dataframe

survey_df

# # Cleaning the Dataset

# ####We check each column's data for any kind of inconsistency and if found, we will remove that data. Firstly we will check all the columns with the numeric values for any kind of inconsistency.

# checking the numerical columns for inconsistency

survey_df.Age1stCode.unique()

survey_df.Age.unique()

survey_df.YearsCode.unique()

survey_df.YearsCodePro.unique()

# ####We found some string values in those numeric columns therefore we will use *to_numeric* function of pandas to convert all non numeric data to **NAN**.

survey_df["Age1stCode"] = pd.to_numeric(survey_df.Age1stCode, errors="coerce")

survey_df["Age"] = pd.to_numeric(survey_df.Age, errors="coerce")

survey_df["YearsCode"] = pd.to_numeric(survey_df.YearsCode, errors="coerce")

survey_df["YearsCodePro"] = pd.to_numeric(survey_df.YearsCodePro, errors="coerce")

# ####Cheking the numeric columns after the correction.

survey_df.YearsCodePro.unique()

# ####Performing same cleaning procedures for the second table of Questions.

# check the scema table

survey_question_df = raw_question_df.copy()

# setting index to column name for selected number of column

survey_question_df.set_index("Column", inplace=False)

# ####We will change the index to the Columns for filtering the required questions only.

# using transpose and defining the locations

survey_question = survey_question_df.transpose()

survey_question.columns = survey_question.iloc[0]

survey_question = survey_question.iloc[1:]

# Checking the new dataset with selected questions only

survey_question = survey_question[selected_columns]

survey_question

# ####Describing the survey dataset for basic view of data.

survey_df.describe()

# ####We can clearly observe that there are multiple outliers, we will remove these outlier and keep the data authentic.

# * An **outlier** is an observation that lies an abnormal distance from other values in a random sample from a population.

# * In age column we can see that minimum age is **1** year for a programmer and maximum age is **279** years.

# * In Age1stCode column we can see that minimum age is **5** year for a programmer and maximum age is **85** years

# * In WorkWeekHrs column we can see that minimum Work hour is **1** hour and maximum work hour is **475** hours

# deleting the outliers

# creating a new dataframe from old data frame after droping outlier data

survey_df1 = survey_df.drop(survey_df[survey_df["Age"] < 10].index, inplace=False)

# now using newly created dataframe for further process

survey_df1 = survey_df1.drop(survey_df1[survey_df1["Age"] > 80].index, inplace=False)

survey_df1 = survey_df1.drop(

survey_df1[survey_df1["Age1stCode"] > 80].index, inplace=False

)

survey_df1 = survey_df1.drop(

survey_df1[survey_df1["Age1stCode"] < 10].index, inplace=False

)

survey_df1 = survey_df1.drop(

survey_df1[survey_df1["WorkWeekHrs"] > 140].index, inplace=False

)

survey_df1 = survey_df1.drop(

survey_df1[survey_df1["WorkWeekHrs"] < 10].index, inplace=False

)

# observing the dataset

survey_df1.describe()

# ####We will now clean non-numeric data in dataset.

# checking the other columns for any uncertainity

survey_df1.Gender.value_counts()

# ####We can clearly observe that there are multiple gender values which seems to be typing mistakes.

# * We will keep only 3 types of all values and others will be excluded.

# we need only three gender types

survey_cleaned_df = survey_df1.drop(

survey_df1[survey_df1.Gender.str.contains(";", na=False)].index, inplace=False

)

# observing the new gender column

survey_cleaned_df.Gender.value_counts()

# ####We will check other colums.

survey_cleaned_df.Employment.value_counts()

survey_cleaned_df.Hobbyist.value_counts()

# # Analyzing the Dataset and Creating some user friendly Visualizations.

# ####Importing all the required libraries for the Visualization.

# the numerical data is clean, Now analysing and visualizing the filtered data

import seaborn as sns

import matplotlib

import matplotlib.pyplot as plt

# for keeping the background of the chart darker

sns.set_style("darkgrid")

# ####We will find the highest number of users as per countries.

# first analysing data as per geographical location

survey_df1.Country.value_counts()

countries_programmer = survey_cleaned_df.Country.value_counts().head(20)

# ####Getting the list of top **20** countries with most Programers.

countries_programmer

# plotting the graph for top 20 countries

plt.figure(figsize=(12, 12))

plt.title("Countries with Most Users")

plt.xlabel("Number of users as per survey")

plt.ylabel("Countries")

ax = sns.barplot(x=countries_programmer, y=countries_programmer.index)

# annotating the numerical value of data set on graph

n = 0

for p in ax.patches:

x = p.get_x() + p.get_width() + 0.2

y = p.get_y() + p.get_height() / 2 + 0.1

ax.annotate(countries_programmer[n], (x, y))

n = n + 1

plt.show()

# ####We can observe that **United States** and **India** have Largest user base of programmers.

# let us find some trends based on age

plt.figure(figsize=(12, 12))

plt.title("First Code Age Vs Age")

sns.scatterplot(

x=survey_cleaned_df.Age,

y=survey_cleaned_df.Age1stCode,

hue="Gender",

data=survey_cleaned_df,

)

# ####From above scatter plot we can conclude that

# * Multiple people had exposure to programming after **40** years of age, Even through most popular age for first code is between **10** to **30** years.

# ####We will find the distribution of age of programmers in current senario.

plt.figure(figsize=(9, 9))

plt.title("Programmers Age Distribution")

plt.xlabel("Age")

plt.ylabel("Number of users as per survey")

plt.hist(x=survey_cleaned_df.Age, bins=np.arange(10, 80, 5), color="orange")

# ####From above histogram we can conclude that

# * Maximum number of programmers are between age **20 - 45** years.

survey_cleaned_df.head(2)

# ####We will find the Employment status of programmers as per this survey.

# finding some trends related to the employment type

Employment_df = survey_cleaned_df.Employment.value_counts()

Employment_df

plt.figure(figsize=(9, 6))

plt.title("Employment distribution of People")

plt.xlabel("Number of users as per survey")

plt.ylabel("Employment status")

ax = sns.barplot(x=Employment_df, y=Employment_df.index)

total = len(survey_cleaned_df)

for p in ax.patches:

percentage = "{:.1f}%".format(100 * p.get_width() / total)

x = p.get_x() + p.get_width() + 0.2

y = p.get_y() + p.get_height() / 2

ax.annotate(percentage, (x, y))

plt.show()

# ####From above data we can conclude that

# * Most number of Respondents are **Employed full-time**.

# * A significant amount of people are **Students** and **Freelancers**.

# ####We will identify the Educational status of the Respondent Programmers

# Educational level trends

educational_df = survey_df.EdLevel.value_counts()

educational_df

plt.figure(figsize=(9, 6))

plt.title("Programmers Education Status")

plt.xlabel("Number of users as per survey")

plt.ylabel("Education level")

ax = sns.barplot(x=educational_df, y=educational_df.index)

total = len(survey_cleaned_df)

for p in ax.patches:

percentage = "{:.1f}%".format(100 * p.get_width() / total)

x = p.get_x() + p.get_width() + 0.02

y = p.get_y() + p.get_height() / 2

ax.annotate(percentage, (x, y))

plt.show()

# ####From above data we can conclue that

# * Most of programmers have **Educational** background.

# * Most common Educational level is **Bachelors** and **Masters**.

# ####We will find the most common field of study of programers

# Finding the majors of the programmers

majors_list = survey_cleaned_df.UndergradMajor.value_counts()

majors_list

plt.figure(figsize=(9, 6))

plt.title("Programmers Education Major Status")

plt.xlabel("Number of users as per survey")

plt.ylabel("Education Major")

ax = sns.barplot(x=majors_list, y=majors_list.index)

total = len(survey_cleaned_df)

for p in ax.patches:

percentage = "{:.1f}%".format(100 * p.get_width() / total)

x = p.get_x() + p.get_width() + 0.02

y = p.get_y() + p.get_height() / 2

ax.annotate(percentage, (x, y))

plt.show()

# ####We got some important insites from above data they are as follow

# * Maximum number of programmers have there education in the field of **CS**,**CE** or **SE**.

# * Almost **Half** of the programmers are not from **Computer Science** background, they had there primary education in some other Fields.

# ####We will compare the **Working Hours** of programmers of different nation.

work_week_hours_countries = survey_cleaned_df.groupby("Country")

# Top 40

work_week_hours_countries_df = (

round(work_week_hours_countries["WorkWeekHrs"].mean(), 2)

.sort_values(ascending=False)

.head(40)

)

# All countries

work_week_hours_countries_df_all = round(

work_week_hours_countries["WorkWeekHrs"].mean(), 2

).sort_values(ascending=False)

work_week_hours_countries_df

work_week_hours_countries_df_all.describe()

plt.figure(figsize=(12, 12))

plt.title("Programmers Weekly Working Hours")

plt.xlabel("Working Hours")

plt.ylabel("Countries")

ax = sns.barplot(x=work_week_hours_countries_df, y=work_week_hours_countries_df.index)

n = 0

for p in ax.patches:

x = p.get_x() + p.get_width() + 0.2

y = p.get_y() + p.get_height() / 2 + 0.25

ax.annotate(work_week_hours_countries_df[n], (x, y))

n = n + 1

plt.show()

# ####From above data we can conclude that

# * **Asian** and **African** nations have higher Working hours in Week

# * Average worldwide working hours in a week is **41.6 hours**

# ####We will find the most used language in current senario

survey_cleaned_df.head(2)

# ####As we can observe above the **LanguageWorkedWith** column have multiple language responses as a programmers works on multiple programming languages in their lifetime.

# * We will create function which will convert our language worked with column in a dataframe with languages as there columns and boolean value **True** for used and **False** for not used in there respective cells as Rows will have distinct users.

# finding most popular programming language

# As a single programmer can use multiple languages we need to take all of it in account

def split_multicolumn(series):

result = series.to_frame()

options = []

for idx, value in series[series.notnull()].iteritems():

for option in value.split(";"):

if option not in result:

options.append(option)

result[option] = False

result.at[idx, option] = True

return result[options]

# ####We will use the above created function for finding getting the required dataframe for analysis.

# Creating the required dataframe

Language_Worked_With_df = split_multicolumn(survey_cleaned_df.LanguageWorkedWith)

# Observing the dataframe

Language_Worked_With_df

# calculating the percentage of programers prefering a perticular language

language_currently_used_percentage = round(

(100 * Language_Worked_With_df.sum() / Language_Worked_With_df.count()).sort_values(

ascending=False

),

2,

)

language_currently_used_percentage

plt.figure(figsize=(12, 12))

plt.title("Most used current language")

plt.xlabel("Percentage")

plt.ylabel("Language")

ax = sns.barplot(

x=language_currently_used_percentage, y=language_currently_used_percentage.index

)

n = 0

for p in ax.patches:

x = p.get_x() + p.get_width() + 0.2

y = p.get_y() + p.get_height() / 2

ax.annotate(language_currently_used_percentage[n], (x, y))

n = n + 1

plt.show()

# ####From above data we can conclude that

# * **JavaScript, HTML/CSS ,SQL ,Python ,Java** are the top five most used languages by the respondents

#

Language_Want_to_Work_df = split_multicolumn(survey_cleaned_df.LanguageDesireNextYear)

language_most_loved_percentage = round(

(

100 * Language_Want_to_Work_df.sum() / Language_Want_to_Work_df.count()

).sort_values(ascending=False),

2,

)

language_most_loved_percentage

# ploting the language that are most preffered for future

plt.figure(figsize=(12, 12))

plt.title("Most loved programming language for future")

plt.xlabel("Percentage")

plt.ylabel("Language")

ax = sns.barplot(

x=language_most_loved_percentage, y=language_most_loved_percentage.index

)

n = 0

for p in ax.patches:

x = p.get_x() + p.get_width() + 0.2

y = p.get_y() + p.get_height() / 2

ax.annotate(language_most_loved_percentage[n], (x, y))

n = n + 1

plt.show()

# ####From above data we can observe that

# * **Python** and **JavaScript** are the most preferred language

# * Some new Programming languages like **Rust**, **Go** and **TypeScript** are getting popular

# ####Finding the Gender proportionality of the programmer community

gender_percentage = survey_cleaned_df.Gender.value_counts()

gender_percentage

plt.title("Gender proportion of Programming community")

plt.pie(x=gender_percentage, labels=gender_percentage.index, autopct="%1.1f%%")

|

import numpy as np # linear algebra

import pandas as pd # data processing, CSV file I/O (e.g. pd.read_csv)

# Input data files are available in the read-only "../input/" directory

# For example, running this (by clicking run or pressing Shift+Enter) will list all files under the input directory

import os

for dirname, _, filenames in os.walk("/kaggle/input"):

for filename in filenames:

print(os.path.join(dirname, filename))

# You can write up to 20GB to the current directory (/kaggle/working/) that gets preserved as output when you create a version using "Save & Run All"

# You can also write temporary files to /kaggle/temp/, but they won't be saved outside of the current session

from sklearn.ensemble import RandomForestRegressor

from sklearn.model_selection import GridSearchCV

from sklearn.neural_network import MLPRegressor

# Read training set and test set

train_df = pd.read_csv("/kaggle/input/us-college-completion-rate-analysis/train.csv")

test_df = pd.read_csv("/kaggle/input/us-college-completion-rate-analysis/x_test.csv")

# Select the characteristics and target factors of random forest

features = [

"Tuition_in_state",

"Tuition_out_state",

"Faculty_salary",

"Pell_grant_rate",

"SAT_average",

"ACT_50thPercentile",

"pct_White",

"pct_Black",

"pct_Hispanic",

"pct_Asian",

"Parents_middlesch",

"Parents_highsch",

"Parents_college",

]

target = "Completion_rate"

# Extracting features and target variables from training and test sets

X_train = train_df[features]

y_train = train_df[target]

X_test = test_df[features]

from sklearn.ensemble import ExtraTreesRegressor, BaggingRegressor

# Create the Bagging model and specify the base estimator as Extra Trees Regression

model = BaggingRegressor(

base_estimator=ExtraTreesRegressor(n_estimators=100, random_state=42),

n_estimators=10,

random_state=42,

)

# Fitting the model on the training set

model.fit(X_train, y_train)

# Predicting the results of the test set

y_pred = model.predict(X_test)

# Output CSV file (add ID manually and delete last blank line)

name = ["Completion_rate"]

df = pd.DataFrame(columns=name, data=y_pred_mlp)

print(df)

df.to_csv("submission.csv", index=True, index_label="id")

|

# Importo las librerias

import matplotlib.pyplot as plt

import numpy as np

import pandas as pd

import os

from glob import glob

import seaborn as sns

from PIL import Image

np.random.seed(123)

from sklearn.preprocessing import label_binarize

from sklearn.metrics import confusion_matrix

import itertools

import keras

from keras.utils.np_utils import (

to_categorical,

) # utilizada para convertir etiquetas a codificación en

from keras.models import Sequential

from keras.layers import Dense, Dropout, Flatten, Conv2D, MaxPool2D

from keras import backend as K

import itertools

from keras.layers.normalization import BatchNormalization

from keras.utils.np_utils import to_categorical

from keras.optimizers import Adam

from keras.preprocessing.image import ImageDataGenerator

from keras.callbacks import ReduceLROnPlateau

from sklearn.model_selection import train_test_split

# aqui grafico el modelo de perddias y el modelo de presision

def plot_model_history(model_history):

fig, axs = plt.subplots(1, 2, figsize=(15, 5))

# acc=accurancy val_acc=valor de precision

axs[0].plot(

range(1, len(model_history.history["acc"]) + 1), model_history.history["acc"]

)

axs[0].plot(

range(1, len(model_history.history["val_acc"]) + 1),

model_history.history["val_acc"],

)

axs[0].set_title("Modelo de precision")

axs[0].set_ylabel("Precision")

axs[0].set_xlabel("Epoch") # epocas

axs[0].set_xticks(

np.arange(1, len(model_history.history["acc"]) + 1),

len(model_history.history["acc"]) / 10,

)

axs[0].legend(["train", "val"], loc="best")

axs[1].plot(

range(1, len(model_history.history["loss"]) + 1), model_history.history["loss"]

)

axs[1].plot(

range(1, len(model_history.history["val_loss"]) + 1),

model_history.history["val_loss"],

)

axs[1].set_title("Modelo de perdidas")

axs[1].set_ylabel("Perdidas")

axs[1].set_xlabel("Epoch") # epocas

axs[1].set_xticks(

np.arange(1, len(model_history.history["loss"]) + 1),

len(model_history.history["loss"]) / 10,

)

axs[1].legend(["train", "val"], loc="best")

plt.show()

# aquise hace un diccionario de ruta de imagenes para poder unir las carpetas HAM10000_images_part1 y part2 en la carpeta base_skin_dir

base_skin_dir = os.path.join("..", "input")

# Fusionar imágenes de ambas carpetas HAM10000_images_part1 y part2 en un diccionario

imageid_path_dict = {

os.path.splitext(os.path.basename(x))[0]: x

for x in glob(os.path.join(base_skin_dir, "*", "*.jpg"))

}

# aqui cambio las etiquetas que se muestran por el nombre completo

lesion_type_dict = {

"nv": "Melanocytic nevi",

"mel": "Melanoma",

"bkl": "Benign keratosis-like lesions ",

"bcc": "Basal cell carcinoma",

"akiec": "Actinic keratoses",

"vasc": "Vascular lesions",

"df": "Dermatofibroma",

}

##aqui se hace la lectura de los datos y se procesan los datos

# Leemos el dataset HAM10000_metadata.csvuniendolo a la ruta de la carpeta de imagenes base_skin_di que hice arriba

skin_df = pd.read_csv(os.path.join(base_skin_dir, "HAM10000_metadata.csv"))

# Aqui creo nuevs columnas para poder tener los datos image_id, cell_type tiene el nombre corto de la etiquta del tipo de lesión y

# cell_type_idx que asigna una categoria a cada tipo de lesión va del 0 al 6

skin_df["path"] = skin_df["image_id"].map(imageid_path_dict.get)

skin_df["cell_type"] = skin_df["dx"].map(lesion_type_dict.get)

skin_df["cell_type_idx"] = pd.Categorical(skin_df["cell_type"]).codes

# para ver como esta el datset modificado

skin_df.head()

# # Limpieza de datos

# veo los valores nulos qeu tienen las columnas del dataset

skin_df.isnull().sum()

# como en la columna edad hay datos vacios y no son muchos entonves voya llenarlos con la media

skin_df["age"].fillna((skin_df["age"].mean()), inplace=True)

# veo si se hizo el cambio

skin_df.isnull().sum()

# veo que tipo d e dato maneja cada columna

print(skin_df.dtypes)

# ## Analisis Exploratorio de los Datos

# aqui grafico los tipos de cancer para ver como estan distribuidos los datos

fig, ax1 = plt.subplots(1, 1, figsize=(10, 5))

skin_df["cell_type"].value_counts().plot(kind="bar", ax=ax1)

# nos indica la forma en la fue diagnosticado el cáncer

skin_df["dx_type"].value_counts().plot(kind="bar")

# Grafica de donde esta unicado

skin_df["localization"].value_counts().plot(kind="bar")

# veo la distribucion de la edad

skin_df["age"].hist(bins=40)

# para ver si son hombres o ujeres los paicentes

skin_df["sex"].value_counts().plot(kind="bar")

# aqui cargo y cambio el tamañod elas imagenes cambio el tamaño por que las dimensiones son de 450 x 600 x3 y tensorflow no tranaja con ese tamaño el tamaño debe ser 100*75

# las imagenes se cargan en la caolumna path(sera la ruta de la imagen) en image se guarda el codigod e la imagen en color rgb

skin_df["image"] = skin_df["path"].map(

lambda x: np.asarray(Image.open(x).resize((100, 75)))

)

# veo dataset

skin_df.head()

# aqui veo las imagenes 5 por cada tipo de lesion

n_samples = 5

fig, m_axs = plt.subplots(7, n_samples, figsize=(4 * n_samples, 3 * 7))

for n_axs, (type_name, type_rows) in zip(

m_axs, skin_df.sort_values(["cell_type"]).groupby("cell_type")

):

n_axs[0].set_title(type_name)

for c_ax, (_, c_row) in zip(

n_axs, type_rows.sample(n_samples, random_state=1234).iterrows()

):

c_ax.imshow(c_row["image"])

c_ax.axis("off")

fig.savefig("category_samples.png", dpi=300)

# Comprobando la distribución del tamaño de la imagen

skin_df["image"].map(lambda x: x.shape).value_counts()

features = skin_df.drop(columns=["cell_type_idx"], axis=1)

target = skin_df["cell_type_idx"]

# ## Datos para prueba y entrenamiento

# vamos a dividos los datos ccon 80:20

x_train_o, x_test_o, y_train_o, y_test_o = train_test_split(

features, target, test_size=0.20, random_state=1234

)

# # Normalizacion

# normalizamos los datos de x_train, x_test restando de sus valores medios y luego dividiendo por su desviación estándar.

x_train = np.asarray(x_train_o["image"].tolist())

x_test = np.asarray(x_test_o["image"].tolist())

x_train_mean = np.mean(x_train)

x_train_std = np.std(x_train)

x_test_mean = np.mean(x_test)

x_test_std = np.std(x_test)

x_train = (x_train - x_train_mean) / x_train_std

x_test = (x_test - x_test_mean) / x_test_std

# La capa de salida también tendrá dos nodos, por lo que necesitamos alimentar nuestra serie de etiquetas en un

# marcador de posición para un escalar y luego convertir esos valores en un vector caliente.

y_train = to_categorical(y_train_o, num_classes=7)

y_test = to_categorical(y_test_o, num_classes=7)

# aqui se hace la divicion de datos para el entrenamiento y para la valizacion

# se usara 90:10 90 para entrenar y 10 para validadicones

x_train, x_validate, y_train, y_validate = train_test_split(

x_train, y_train, test_size=0.1, random_state=2

)

# reshape reforma la imagen debe tener 3 dimenciones (alto=75 px,ancho=100 px,canal=3)

x_train = x_train.reshape(x_train.shape[0], *(75, 100, 3))

x_test = x_test.reshape(x_test.shape[0], *(75, 100, 3))

x_validate = x_validate.reshape(x_validate.shape[0], *(75, 100, 3))

# ## Construcción del modelo CNN

# para construir el modelo uso API secuencial de Keras por que aqui solo se tien que agregar una capa a la vez, comenzando desde la entrada.

# convolucional (Conv2D) es como un conjunto de filtros que se pueden aprender. 32 filtros para las dos primeras capas conv2D, 64 filtros para las dos últimas cada filtro que se usa transforma una parte de la imagen (definida por el tamaño del núcleo) utilizando el filtro del núcleo. La matriz de filtro del núcleo se aplica a toda la imagen.

# agrupación (MaxPool2D) esta capa simplemente actúa como un filtro de disminución de resolución. Mira los 2 píxeles vecinos y selecciona el valor máximo. Estos se utilizan para reducir el costo computacional y, en cierta medida, también reducen el sobreajuste.

# La deserción (Dropout) es un método de regularización, donde una proporción de nodos en la capa se ignora aleatoriamente para cada muestra de entrenamiento. Esto deja caer al azar una promoción de la red y obliga a la red a aprender características de forma distribuida. Esta técnica también mejora la generalización y reduce el sobreajuste.

# 'relu' es el rectificador (función de activación max (0, x). La función de activación del rectificador se utiliza para agregar no linealidad a la red.

# La capa Flatten se usa para convertir los mapas de entidades finales en un solo vector 1D. Este paso de aplanamiento es necesario para que pueda utilizar capas completamente conectadas después de algunas capas convolucionales / maxpool. Combina todas las características locales encontradas de las capas convolucionales anteriores.

# 2 Capas Completamente conectadas (densas) que es simplemente un clasificador artificial de redes neuronales (ANN). En la última capa (Denso (10, activación = "softmax")) la distribución de probabilidad de salidas netas de cada clase.

# modelo

input_shape = (75, 100, 3)

num_classes = 7

model = Sequential()

model.add(

Conv2D(

32,

kernel_size=(3, 3),

activation="relu",

padding="Same",

input_shape=input_shape,

)

)

model.add(

Conv2D(

32,

kernel_size=(3, 3),

activation="relu",

padding="Same",

)

)

model.add(MaxPool2D(pool_size=(2, 2)))

model.add(Dropout(0.25))

model.add(Conv2D(64, (3, 3), activation="relu", padding="Same"))

model.add(Conv2D(64, (3, 3), activation="relu", padding="Same"))

model.add(MaxPool2D(pool_size=(2, 2)))

model.add(Dropout(0.40))

model.add(Flatten())

model.add(Dense(128, activation="relu"))

model.add(Dropout(0.5))

model.add(Dense(num_classes, activation="softmax"))

model.summary()

# defino el optimizador

# Adam Optimizer trata de solventar el problema con la fijación de el ratio de aprendizaje para ello adapta el ratio de aprendizaje en función de cómo estén distribuidos los parámetros.

# Si los parámetros están muy dispersos el ratio de aprendizaje aumentará.

optimizer = Adam(