script


|

|---|

# # Imports

from boruta import BorutaPy

import warnings

warnings.filterwarnings("ignore")

import pandas as pd

import numpy as np

from rdkit import Chem

from rdkit.Chem import AllChem

from rdkit.Chem import Descriptors

from rdkit.ML.Descriptors import MoleculeDescriptors

from rdkit.Chem import MACCSkeys

from sklearn.model_selection import train_test_split

from sklearn.metrics import accuracy_score

from sklearn.feature_selection import VarianceThreshold

from sklearn.metrics import f1_score, recall_score

from sklearn.base import BaseEstimator, TransformerMixin

from sklearn.pipeline import Pipeline

from xgboost import XGBClassifier

import time

import os

# # Preprocessing and pipeline

train_data_url = "https://raw.githubusercontent.com/RohithOfRivia/SMILES-Toxicity-Prediction/main/Data/train_II.csv"

test_data_url = "https://raw.githubusercontent.com/RohithOfRivia/SMILES-Toxicity-Prediction/main/Data/test_II.csv"

df = pd.read_csv(train_data_url)

# Convert each compound to its canonical SMILES form (optional).

def canonicalSmiles(smile):
    try:
        return Chem.MolToSmiles(Chem.MolFromSmiles(smile))
    except Exception:
        # Fall back to a fixed valid compound when the SMILES string cannot be parsed
        return Chem.MolToSmiles(Chem.MolFromSmiles("[Na+].[Na+].F[Si--](F)(F)(F)(F)F"))

# Reads the data and splits the combined identifier into SMILES and assay columns

class FileReadTransform(BaseEstimator, TransformerMixin):

def fit(self, X, y=None):

return self

# Training and test data name the identifier column differently ("Id" vs "x"); the KeyError fallback below handles the test file, so the optional test param is not used

def transform(self, X, test=False):

try:

# if test == False:

X["SMILES"] = X["Id"].apply(lambda x: x.split(";")[0])

X["assay"] = X["Id"].apply(lambda x: x.split(";")[1])

except KeyError:

X["SMILES"] = X["x"].apply(lambda x: x.split(";")[0])

X["assay"] = X["x"].apply(lambda x: x.split(";")[1])

print("FileReadTransform done")

# correct smiles for this compound found through https://www.molport.com/shop/index

# X["SMILES"] = X["SMILES"].replace({"F[Si-2](F)(F)(F)(F)F.[Na+].[Na+]":"[Na+].[Na+].F[Si--](F)(F)(F)(F)F"})

# Deleting invalid compound from the data

X = X.loc[X.SMILES != "F[Si-2](F)(F)(F)(F)F.[Na+].[Na+]"]

return X

# Converts each SMILES value to its respective canonical SMILES

class CanonicalGenerator(BaseEstimator, TransformerMixin):

def fit(self, X, y=None):

return self

def transform(self, X):

X["SMILES"] = X["SMILES"].apply(canonicalSmiles)

print("CanonicalGenerator done")

return X

# Generate fingerprints for all compounds

class FingerprintGenerator(BaseEstimator, TransformerMixin):

def fit(self, X, y=None):

return self

def transform(self, X):

# tracks each unique compound and its fingerprints

tracker = []

fps = []

assays = []

unique = len(X["SMILES"].unique())

counter = 0

for index, columns in X[["SMILES", "assay"]].iterrows():

# skip if already in tracker

if columns[0] in tracker:

continue

# append each unique compound and its respective fingerprint

else:

tracker.append(columns[0])

assays.append(columns[1])

mol = Chem.MolFromSmiles(columns[0])

fp = AllChem.GetMorganFingerprintAsBitVect(mol, 2, nBits=256)

fps.append(fp.ToList())

counter += 1

# print(f"compound {counter}/{unique}...

# Combining all compounds, assays and fingerprints into one dataframe

cols = ["x" + str(i) for i in range(1, 257)]

smiles_df = pd.DataFrame(columns=["SMILES"], data=tracker)

fingerprints = pd.DataFrame(columns=cols, data=fps)

df = pd.concat([smiles_df, fingerprints], axis=1)

print("FingerprintGenerator done")

return pd.merge(X, df, on="SMILES")

# Fingerprint generation for MACCS Keys

class FingerprintGeneratorM(BaseEstimator, TransformerMixin):

def fit(self, X, y=None):

return self

def transform(self, X):

# tracks each unique compound and its fingerprints

tracker = []

fps = []

assays = []

unique = len(X["SMILES"].unique())

counter = 0

for index, columns in X[["SMILES", "assay"]].iterrows():

# skip if already in tracker

if columns[0] in tracker:

continue

# append each unique compound and its respective fingerprint

else:

tracker.append(columns[0])

assays.append(columns[1])

mol = Chem.MolFromSmiles(columns[0])

fp = MACCSkeys.GenMACCSKeys(mol)

fps.append(fp.ToList())

counter += 1

# print(f"compound {counter}/{unique}...

# Combining all compounds, assays and fingerprints into one dataframe

cols = ["x" + str(i) for i in range(1, 168)]

smiles_df = pd.DataFrame(columns=["SMILES"], data=tracker)

fingerprints = pd.DataFrame(columns=cols, data=fps)

df = pd.concat([smiles_df, fingerprints], axis=1)

print("FingerprintGeneratorM done")

return pd.merge(X, df, on="SMILES")

# Feature reduction with variance threshold

class VarianceThresh(BaseEstimator, TransformerMixin):

def fit(self, X, y=None):

return self

def transform(self, X, thresh=0.8):

# Inspects the columns to determine whether X is training or testing data

cols = X.columns

if "x" in cols:

temp_df = X.drop(columns=["x", "assay", "SMILES"])

cols = ["x", "assay", "SMILES"]

else:

temp_df = X.drop(columns=["Id", "Expected", "assay", "SMILES"])

cols = ["Id", "Expected", "assay", "SMILES"]

# Selecting features based on the variance threshold

selector = VarianceThreshold(threshold=(thresh * (1 - thresh)))

selector.fit(temp_df)

# This line transforms the data while keeping the column names

temp_df = temp_df.loc[:, selector.get_support()]

# Attaching the ids, assays, smiles etc. that is still required for model

return pd.concat([X[cols], temp_df], axis=1), selector
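# The threshold used above, thresh * (1 - thresh), is the variance of a binary feature that takes one value with probability thresh. A minimal sketch of the same filter in plain NumPy follows; the array `bits` and the helper `high_variance_mask` are made up for illustration and are not part of the notebook.

```python
import numpy as np


def high_variance_mask(features, thresh=0.8):
    """Return a boolean mask of columns whose variance exceeds thresh * (1 - thresh)."""
    cutoff = thresh * (1 - thresh)
    variances = features.var(axis=0)  # population variance of each column
    return variances > cutoff


# Hypothetical fingerprint bits: column 0 is constant, column 1 is rarely set,
# column 2 is set in half of the rows
bits = np.array(
    [
        [1, 0, 1],
        [1, 0, 0],
        [1, 1, 1],
        [1, 0, 0],
        [1, 0, 1],
        [1, 0, 0],
        [1, 0, 1],
        [1, 0, 0],
    ]
)

mask = high_variance_mask(bits)
print(mask)
```

# Only the third column survives here: its variance is 0.25, above the cutoff of roughly 0.16.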

# Scale descriptors (not used in this notebook)

from sklearn.preprocessing import StandardScaler

class Scaler(BaseEstimator, TransformerMixin):

def fit(self, X, y=None):

return self

def transform(self, X):

scaler = StandardScaler()

if "Id" in X.columns:

temp_df = X.drop(columns=["Id", "Expected", "assay", "SMILES"])

cols = ["Id", "Expected", "assay", "SMILES"]

X_scaled = pd.DataFrame(

scaler.fit_transform(temp_df), columns=temp_df.columns

)

X = pd.concat([X[cols].reset_index(drop=True), X_scaled], axis=1)

return X

else:

temp_df = X.drop(columns=["x", "assay", "SMILES"])

cols = ["x", "assay", "SMILES"]

X_scaled = pd.DataFrame(

scaler.fit_transform(temp_df), columns=temp_df.columns

)

X = pd.concat([X[cols].reset_index(drop=True), X_scaled], axis=1)

return X

# # Generating descriptors

class DescriptorGenerator(BaseEstimator, TransformerMixin):

def fit(self, X, y=None):

return self

def transform(self, X):

# Initializing descriptor calculator

calc = MoleculeDescriptors.MolecularDescriptorCalculator(

[x[0] for x in Descriptors._descList]

)

desc_names = calc.GetDescriptorNames()

# Tracking each unique compound and generating descriptors

tracker = []

descriptors = []

for compound in X["SMILES"]:

if compound in tracker:

continue

else:

tracker.append(compound)

mol = Chem.MolFromSmiles(compound)

current_descriptors = calc.CalcDescriptors(mol)

descriptors.append(current_descriptors)

# Combining X, SMILES, and generated descriptors

df = pd.DataFrame(descriptors, columns=desc_names)

temp_df = pd.DataFrame(tracker, columns=["SMILES"])

df = pd.concat([df, temp_df], axis=1)

print("DescriptorGenerator done")

return pd.merge(X, df, on="SMILES")

# # Create Pipeline

feature_generation_pipeline = Pipeline(

steps=[

("read", FileReadTransform()),

("canon", CanonicalGenerator()),

("fpr", FingerprintGenerator()),

("desc", DescriptorGenerator()),

]

)

df_processed = feature_generation_pipeline.fit_transform(df)

test_processed = feature_generation_pipeline.fit_transform(pd.read_csv(test_data_url))

# # Feature Selection

# Isolating the chemical descriptors for feature selection

descriptors = pd.concat(

[

df_processed[["Id", "SMILES", "assay", "Expected"]],

df_processed[df_processed.columns[-208:]],

],

axis=1,

)

len(descriptors.columns[4:])

# Checking for NANs

descriptors.isna().sum().sum()

# Dropping the BCUT2D descriptor columns, which contain NaN values, then dropping any remaining rows with NaNs

descriptors2 = descriptors.drop(

columns=[

"BCUT2D_MWHI",

"BCUT2D_MWLOW",

"BCUT2D_CHGHI",

"BCUT2D_CHGLO",

"BCUT2D_LOGPHI",

"BCUT2D_LOGPLOW",

"BCUT2D_MRHI",

"BCUT2D_MRLOW",

]

)

descriptors2 = descriptors2.dropna()

X = descriptors2.drop(["Id", "SMILES", "Expected"], axis=1)

y = descriptors2[["Expected"]]

# Casting the assay ID to int

X["assay"] = X["assay"].astype("int64")

# Features selected from BorutaPy

boruta_features2 = [

"HeavyAtomMolWt",

"MaxPartialCharge",

"MinAbsPartialCharge",

"BertzCT",

"Chi1",

"Chi1n",

"Chi2v",

"Chi3n",

"Chi3v",

"Chi4v",

"LabuteASA",

"PEOE_VSA3",

"SMR_VSA6",

"SMR_VSA7",

"EState_VSA8",

"VSA_EState2",

"VSA_EState4",

"HeavyAtomCount",

"NumAromaticCarbocycles",

"MolLogP",

"MolMR",

"fr_Ar_OH",

"fr_COO",

"fr_COO2",

"fr_C_O",

"fr_C_O_noCOO",

"fr_amide",

"fr_benzene",

"fr_phenol",

"fr_phenol_noOrthoHbond",

"fr_sulfonamd",

"fr_thiazole",

"fr_thiophene",

"fr_urea",

"assay",

]

# Splitting data for training and validation. Mapping the label 2 to 0 (and keeping 1 as 1) because XGBoost expects binary class labels of 0 and 1

X_train, X_test, y_train, y_test = train_test_split(

X[boruta_features2],

y["Expected"].map({2: 0, 1: 1}),

test_size=0.2,

random_state=0,

stratify=y["Expected"].map({2: 0, 1: 1}),

)

""" Optional to run this. Gives a list of features that pass the test as the output.

The variable boruta_features2 declared above is a saved copy of the output from the run used for the best submission.

Output may vary slightly if executed again"""

# model = XGBClassifier(max_depth=6, learning_rate=0.01, n_estimators=600, colsample_bytree=0.3)

# boruta_features = []

# # let's initialize Boruta

# feat_selector = BorutaPy(

# verbose=2,

# estimator=model,

# n_estimators='auto',

# max_iter=10 # number of iterations to perform

# )

# # train Boruta

# # N.B.: X and y must be numpy arrays

# feat_selector.fit(np.array(X_train), np.array(y_train))

# # print support and ranking for each feature

# print("\n------Support and Ranking for each feature------")

# for i in range(len(feat_selector.support_)):

# if feat_selector.support_[i]:

# boruta_features.append(X_train.columns[i])

# print("Passes the test: ", X_train.columns[i],

# " - Ranking: ", feat_selector.ranking_[i])

# else:

# print("Doesn't pass the test: ",

# X_train.columns[i], " - Ranking: ", feat_selector.ranking_[i])

# # Training and Validation

# This function trains the model on the training data and then prints the F1 score of its predictions on the holdout data

def train_model(xtrain, xtest, ytrain, ytest):
    model = XGBClassifier(seed=20, max_depth=10, n_estimators=700)
    model.fit(xtrain, ytrain)
    predictions = model.predict(xtest)
    # f1_score expects the true labels first, then the predictions
    print(f"F1 Score of model: {f1_score(ytest, predictions)}")

train_model(X_train, X_test, y_train, y_test)
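# The F1 score printed above is the harmonic mean of precision and recall. A small pure-Python sketch of that computation for binary labels follows; the helper `f1_binary` and the toy labels are illustrative only, and the notebook itself relies on `sklearn.metrics.f1_score`.

```python
def f1_binary(y_true, y_pred, positive=1):
    """Compute the F1 score for one positive class from two label sequences."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    if tp == 0:
        return 0.0  # no true positives: precision and recall are both zero
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


# Made-up labels: 2 true positives, 1 false positive, 1 false negative
toy_true = [1, 0, 1, 1, 0, 0]
toy_pred = [1, 0, 0, 1, 1, 0]
score = f1_binary(toy_true, toy_pred)
print(score)
```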

# Now training with the entire dataset to make predictions on the test set

descriptors2["assay"] = descriptors2["assay"].astype("int64")

model = XGBClassifier(seed=20, max_depth=10, n_estimators=700)

model.fit(descriptors2[boruta_features2], y["Expected"].map({2: 0, 1: 1}))

# Final predictions

# Changing assayID to int

test_processed["assay"] = test_processed["assay"].astype("int64")

# Making predictions with the model

test_preds = model.predict(test_processed[boruta_features2])

test_preds

# Checking the counts of positive and negative predictions

np.unique(test_preds, return_counts=True)

# # Saving predictions for Kaggle submission

# Converting the predictions into a dataframe

res = pd.DataFrame({})

res["Id"] = test_processed["x"]

res["Predicted"] = test_preds

# Mapping expected values back to 2 and 1

res["Predicted"] = res["Predicted"].map({0: 2, 1: 1})

res

# For saving predictions as csv in JUPYTER

res.to_csv("submissions.csv", index=False)

# ONLY FOR GOOGLE COLAB: downloading the csv for submission to Kaggle

# from google.colab import files

# res.to_csv('28-03-23-2.csv', encoding = 'utf-8-sig', index=False)

# files.download('28-03-23-2.csv')

res

|

# # **Most Important Python Packages**

# Python is a popular, powerful general-purpose programming language that has become the preferred language among data scientists. You can write machine-learning algorithms in plain Python, but many modules and libraries already implemented in Python make the work much easier. The sections below give hands-on examples of these packages.

# **NumPy (Numerical Python):** The first package is **NumPy**, a math library for working with N-dimensional arrays in Python. It lets you do computations efficiently, and its vectorized array operations are usually much faster than equivalent pure-Python loops. For example, NumPy is useful when working with **arrays**, **dictionaries**, **functions**, **datatypes**, and **images**.

# One practical example of NumPy:

import numpy as np

arr = np.array([1, 2, 3, 4, 5])

print(arr)

print(type(arr))
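# A short sketch of why arrays are convenient: arithmetic is applied to every element at once, without an explicit loop. The temperatures below are made-up values.

```python
import numpy as np

# Convert several Celsius readings to Fahrenheit in a single expression
celsius = np.array([0.0, 25.0, 100.0])
fahrenheit = celsius * 9 / 5 + 32

print(fahrenheit)
```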

# **SciPy (Scientific Python):** SciPy is a collection of numerical algorithms and domain-specific toolboxes, including **signal processing**, **optimization**, statistics, and much more. It is a good library for scientific and high-performance computation.

#

from scipy import constants

print(constants.liter)

|

# # Introduction

# - There is a lot of competition among the brands in the smartwatch industry. Smartwatches are preferred by people who like to take care of their fitness. Analyzing the data collected on your fitness is one of the use cases of Data Science in healthcare. So if you want to learn how to analyze smartwatch fitness data, this notebook is for you. In this notebook, I will take you through the task of Smartwatch Data Analysis using Python.

# ## Dataset

# - This dataset was generated by respondents to a survey distributed via Amazon Mechanical Turk between 03.12.2016-05.12.2016. Thirty eligible Fitbit users consented to the submission of personal tracker data, including minute-level output for physical activity, heart rate, and sleep monitoring. Individual reports can be parsed by export session ID (column A) or timestamp (column B). Variation between outputs reflects the use of different types of Fitbit trackers and individual tracking behaviors and preferences.

# # Import libraries

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

import plotly.express as px

import plotly.graph_objects as go

# # Read Data

data = pd.read_csv(

"/kaggle/input/fitbit/Fitabase Data 4.12.16-5.12.16/dailyActivity_merged.csv"

)

print(data.head())

# # null Values

# - Does the dataset contain null values or not?

print(data.isnull().sum())

# - So the dataset does not have any null values.

# - Let’s have a look at the information about columns in the dataset:

print(data.info())

# - The column containing the date of the record is an object. We may need to use dates in our analysis, so let’s convert this column into a datetime column:

# Changing datatype of ActivityDate

data["ActivityDate"] = pd.to_datetime(data["ActivityDate"], format="%m/%d/%Y")

print(data.info())
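# A minimal sketch of what the conversion above enables, using a small made-up frame in place of the Fitbit file: once the column is a datetime, the `.dt` accessor exposes calendar fields such as the weekday name. The frame `sample` and the column `Day` are illustrative names.

```python
import pandas as pd

# Hypothetical dates written in the same month/day/year style
sample = pd.DataFrame({"ActivityDate": ["4/12/2016", "4/13/2016", "5/1/2016"]})

# Parse the strings with an explicit format, as in the notebook
sample["ActivityDate"] = pd.to_datetime(sample["ActivityDate"], format="%m/%d/%Y")

# Derive the weekday name from the parsed dates
sample["Day"] = sample["ActivityDate"].dt.day_name()

print(sample)
```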

|

# #

# NumPy (Numerical Python) is a widely-used open-source Python library for numerical computing. It provides support for large, multi-dimensional arrays and matrices, along with a collection of mathematical functions to operate on these arrays efficiently. NumPy is used in scientific computing, data analysis, machine learning, and other domains that require numerical computations. It offers optimized array operations for high performance, making it a powerful tool for working with large datasets. NumPy also provides functionality for working with mathematical data types and performing operations on arrays of arbitrary shapes and sizes. It is a fundamental library in the scientific Python ecosystem.

# # Basic_of_numpy

## importing the numpy library as np

import numpy as np

import warnings

warnings.filterwarnings("ignore") ## suppress warning messages

# ## I. 1D array in numpy

# One dimensional array contains elements only in one dimension.

## Create a numpy array

arr = np.array([1, 2, 3, 4, 5])

arr

# checking the array shape, size, ndim, and dtype

print(arr.shape) ## shape of the array (length along each dimension)

print(arr.size) ## total number of elements

print(arr.ndim) ## number of dimensions

print(arr.dtype) ## data type of the elements

# ## II. 2D and 3D arrays in numpy

# 2d array

arr2d = np.array([[1, 2, 3, 4], [5, 6, 7, 8]])

print(arr2d)

print("--" * 5)

print(arr2d.shape) ## shape of the array as (rows, columns)

print(arr2d.size) ## total number of elements

print(arr2d.ndim) ## number of dimensions

print(arr2d.dtype) ## data type of the elements

# "3d" array: despite the name arr3d, this nested list builds a 2D array of shape (3, 3)

arr3d = np.array([[1, 2, 3], [4, 5, 6], [7, 8, 9]])

print(arr3d)

print("--" * 5)

print(arr3d.shape) ## shape of the array as (rows, columns)

print(arr3d.size) ## total number of elements

print(arr3d.ndim) ## number of dimensions

print(arr3d.dtype) ## data type of the elements
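# The array printed above reports ndim == 2, because a list of lists has only two levels of nesting. A sketch of a genuinely three-dimensional array, built here with reshape (the name `cube` is illustrative):

```python
import numpy as np

# 24 values arranged as 2 blocks, each with 3 rows and 4 columns
cube = np.arange(24).reshape(2, 3, 4)

print(cube.ndim)
print(cube.shape)

# One index per dimension: block 1, row 2, column 3
print(cube[1, 2, 3])
```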

# ## III. Indexing in numpy array

## indexing a 1D numpy array

arr

print(arr[0]) ## index 0 returns the first element

print(arr[-1]) ## index -1 returns the last element

print(arr[:3]) ## slicing returns the first three elements

# ##### Indexing multidimensional arrays in numpy

arr2d

arr2d[0] ## index 0 of a 2D array returns the first row

arr2d[1] ## index 1 of a 2D array returns the second row

# ##### Slicing numpy arrays

# 1. The same slicing syntax applies to arrays of any dimension (2D, 3D, 4D, and so on)

arr2d

arr2d[:1] ## first row, kept as a 2D array

arr2d[1:] ## second row, kept as a 2D array

arr2d[:, :1] ## [:, :1] selects the first column

arr2d[:, -1:] ## [:, -1:] selects the last column

arr2d[:, 1:2] ## [:, 1:2] selects the second column

arr2d[:, 2:-1] ## [:, 2:-1] selects the third column
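# Selecting a column with a slice keeps the result two-dimensional, while an integer index drops that dimension. A small sketch of the difference (the names `grid`, `as_vector`, and `as_column` are illustrative):

```python
import numpy as np

grid = np.array([[1, 2, 3, 4], [5, 6, 7, 8]])

as_vector = grid[:, 1]    # integer index: 1D result
as_column = grid[:, 1:2]  # slice: 2D result with a single column

print(as_vector.shape)
print(as_column.shape)
```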

# ## IV. Replace the array values in numpy

arr3d

arr3d[:1, :] = 1 ## set every value in the first row to 1

arr3d

arr3d[:, 1:-1] = 2 ## set every value in the middle column to 2

arr3d

arr3d[:, 2:] = 3 ## set every value in the last column to 3

arr3d

arr3d[0:, :-2] = 4 ## set every value in the first column to 4

arr3d

arr3d[1:2] = 5 ## set every value in the middle row to 5

arr3d

arr3d[2:3] = 6 ## set every value in the last row to 6

arr3d

# #### Replacing particular values in numpy

arr2d

arr2d[:1, :1] = 22 ## set the value in the first row, first column to 22

arr2d

arr2d[:1, -1:] = 23 ## set the last value of the first row to 23

arr2d

arr2d[1:2, -1:] = 25 ## set the last value of the second row to 25

arr2d

# #### Multiple values can also be replaced at once

arr2d[:2, 1:-2] = [28], [29] ## set the second column to 28 and 29

arr2d

arr2d[:2, 2:] = [33, 34], [56, 66] ## set the last two columns of the first and second rows

arr2d

arr2d[0] = 23, 34, 5, 65 ## replace the entire first row

arr2d

# #### Create arrays of floats, bools, and complex numbers

ar = np.array([1, 2, 3, 4], float) ## create a float array

ar2 = np.array([4, 5, 6], bool) ## create a bool array (non-zero values become True)

ar3 = np.array([7, 8, 9], complex) ## create a complex array

print(ar)

print(ar2)

print(ar3)

# ## V. Change the array dtype

## convert the array dtype from float to int

changedtype1 = ar.astype(int)

print(changedtype1)

## convert the array dtype from bool to int

changedtype2 = ar2.astype(int)

print(changedtype2)

## convert the array dtype from complex to int (the imaginary part is discarded)

changedtype3 = ar3.astype(int)

print(changedtype3)
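# One detail worth knowing when converting floats to integers: astype(int) truncates toward zero rather than rounding. A short sketch with made-up values:

```python
import numpy as np

values = np.array([1.7, -1.7, 2.5])

# astype(int) drops the fractional part
truncated = values.astype(int)

# np.rint rounds to the nearest integer first (halves go to the even neighbor)
rounded = np.rint(values).astype(int)

print(truncated)
print(rounded)
```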

# ## VI. Most useful NumPy functions

# #### arange

# NumPy arange() is one of the array creation routines based on numerical ranges

f_arr = np.arange(24)

f_arr

print(f_arr.size)

print(f_arr.dtype)

# #### reshape

# Reshaping means changing the shape of an array. The shape of an array is the number of elements in each dimension

f_arr.reshape(2, 12) ## reshape changes the shape without changing the data: 2 rows, 12 columns

## 12 rows, 2 columns

f_arr.reshape(12, 2)

## try other shapes

f_arr.reshape(6, 4)

f_arr.reshape(4, 6)

arangefunction = f_arr.reshape(6, 4) ## store the reshaped array in a variable

arangefunction

# ### ravel

# numpy.ravel() returns a contiguous flattened array (a 1D array containing all the input elements, with the same dtype).

#

arangefunction.ravel() ## ravel undoes the reshape here: it flattens the array back to 1D
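# ravel() returns a view of the original data when it can, whereas flatten() always returns a copy. A sketch of the practical consequence (the names `base`, `flat_view`, and `flat_copy` are illustrative):

```python
import numpy as np

base = np.arange(6).reshape(2, 3)

flat_view = base.ravel()    # a view for a contiguous array such as this one
flat_copy = base.flatten()  # always an independent copy

# Writing through the view changes the original array; the copy is unaffected
flat_view[0] = 99

print(base)
print(flat_copy)
```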

# #### append

# np.append() returns a new array and copies all existing elements, so each call costs O(n + m) for an array of n elements and m appended values.

append_array = np.append(f_arr, [3, 5, 6, 7]) ## append returns a new array with the values added at the end

append_array

len(append_array) ## for a 1D array, len matches size

append_array_re = append_array.reshape(7, 4) ## store the reshaped array

append_array_re
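# Because np.append copies the whole array on every call, calling it repeatedly in a loop gets slow as the array grows. One common alternative, sketched here with made-up chunks, is to collect the pieces in a Python list and concatenate once at the end.

```python
import numpy as np

# Collect the pieces first; appending to a list does not copy the arrays
chunks = []
for start in range(0, 12, 4):
    chunks.append(np.arange(start, start + 4))

# A single concatenate call copies each element only once
combined = np.concatenate(chunks)

print(combined)
```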

# #### delete array values

# np.delete() returns a new array with the elements at the given index positions removed

deletelastrow = np.delete(append_array_re, -1, axis=0) ## index -1 along axis 0 removes the last row

deletelastrow

len(deletelastrow)
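# np.delete takes index positions, not values, and its axis argument decides whether rows, columns, or flattened elements are removed. A sketch on a small made-up table:

```python
import numpy as np

table = np.arange(12).reshape(3, 4)

no_last_row = np.delete(table, -1, axis=0)   # drop the last row
no_first_col = np.delete(table, 0, axis=1)   # drop the first column
flat_removed = np.delete(table, [0, 1])      # no axis: flatten, then drop positions 0 and 1

print(no_last_row.shape)
print(no_first_col.shape)
print(flat_removed.shape)
```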

# ### zeros

np.zeros((5, 5)) ## create an array of the given shape filled with zeros

# ### ones

np.ones((5, 5)) ## create an array of the given shape filled with ones

# ### Identity

# The identity array is a square array with ones on the main diagonal and zeros elsewhere.

np.identity(5)

# ### linspace

np.linspace(10, 5) # evenly spaced values over the given interval (50 points by default)

np.linspace(1, 20, 10)

# ### empty

# empty() returns a new array of the given shape and dtype without initializing its entries, so the contents are arbitrary.

np.empty((10, 5))

# # VII. random in numpy

# numpy.random is a module of the NumPy library for generating random numbers. It provides simple random data generation functions, permutation functions, and samplers for many probability distributions.

np.random.randint(100, size=(5, 5)) # random integers from 0 (inclusive) to 100 (exclusive)

np.random.rand(10) # uniform random values in [0, 1) with the given shape

np.random.normal(100, size=(10, 2)) # normal samples with mean 100 and the default standard deviation of 1

np.random.power(100, size=(10)) # samples in [0, 1] from a power distribution with exponent a - 1 = 99
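# The calls above give different numbers on every run. For reproducible results, NumPy also offers seeded generators through np.random.default_rng. A minimal sketch; the seed value 42 is arbitrary.

```python
import numpy as np

# Two generators created with the same seed produce the same sequence
rng_a = np.random.default_rng(seed=42)
rng_b = np.random.default_rng(seed=42)

draws_a = rng_a.integers(0, 100, size=(5, 5))  # integers in [0, 100)
draws_b = rng_b.integers(0, 100, size=(5, 5))

print(np.array_equal(draws_a, draws_b))
```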

# ### argmax,argmin,argsort

a = np.array([[1, 2, 3, 4], [55, 7, 5, 4], [8, 9, 10, 11]])

a

a.argmax() # index of the maximum element in the flattened array (pass axis= to search along one axis)

a.argmin() # index of the minimum element in the flattened array (pass axis= to search along one axis)

a.argsort() # indices that would sort the array along the last axis
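# Without an axis, argmax returns a position in the flattened array. np.unravel_index converts that position back to a (row, column) pair. A sketch using the same values as the array above (the name `scores` is illustrative):

```python
import numpy as np

scores = np.array([[1, 2, 3, 4], [55, 7, 5, 4], [8, 9, 10, 11]])

flat_index = scores.argmax()                            # position in the flattened array
row, col = np.unravel_index(flat_index, scores.shape)   # matching (row, column)
per_row = scores.argmax(axis=1)                         # column of the maximum in each row

print(flat_index, row, col)
print(per_row)
```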

# # VIII. basic maths in numpy

#

a

a.min() # smallest value in the array

a.max() # largest value in the array

a.sum() ## total of all array values

a.mean() # Returns the average of the array elements.

np.sqrt(a) # element-wise square root

# # IX. Numpy Operations..

# NumPy performs operations element-by-element, so multiplying 2D arrays with * is not a matrix multiplication – it's an element-by-element multiplication.

a = np.arange(4, 8)

b = np.array([6, 7, 8, 4])

a, b

a + b

a * b

a / b

a // b

a - b

a**b
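# To make the difference between element-wise and matrix multiplication concrete, here is a sketch on two small made-up 2D arrays; the `@` operator performs the matrix product.

```python
import numpy as np

left = np.array([[1, 2], [3, 4]])
right = np.array([[5, 6], [7, 8]])

elementwise = left * right     # multiplies matching positions
matrix_product = left @ right  # rows of left times columns of right

print(elementwise)
print(matrix_product)
```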

# ## X. Joining numpy arrays

# Joining means putting contents of two or more arrays in a single array.

a

b

np.concatenate([a, b]) ## join multiple arrays into one with concatenate

c = np.arange(10).reshape(2, 5)

d = np.array([[4, 5, 6, 3, 5], [7, 6, 4, 2, 3]])

c, d

marge = np.concatenate([c, d], axis=0)

marge

marge.T ## transpose: rows become columns

marge.sort() ## sorts in place along the last axis, so each row is sorted

marge

# ### array_split

array_split = np.array_split(marge, [3]) # split at row index 3 into two sub-arrays

array_split

array_split[0]

array_split[1]

# # XI. Axis in numpy

# Axes are defined for arrays with more than one dimension.

marge.sum(axis=0)

marge.sum(axis=1)

marge.max(axis=1)

marge.min(axis=0)

# # XII. where

# NumPy provides numpy.where() for selecting elements based on a condition

np.where(marge > 6)

np.where(marge > 6, -1, marge) ## replace values greater than 6 with -1 and keep the rest

# ### count_nonzero,nonzero

np.count_nonzero(marge) ## number of non-zero values

np.nonzero(marge) ## indices of the non-zero values

# ### setdiff1d

# Find the set difference of two arrays: returns the sorted, unique values in the first array that are not in the second.

c, d

np.setdiff1d(c, d)

# ### intersect1d

# Find the intersection of two arrays: returns the sorted, unique values that are in both input arrays.

np.intersect1d(c, d)

for i in a:
    print(i) ## iterating over a 1D array yields its elements one by one

|

#

# ---

# # | Python: Final Project - Credit Analysis

# **Class** Notebook

# Instructor [André Perez](https://www.linkedin.com/in/andremarcosperez/)

# Student [Thomaz Pires](https://www.linkedin.com/in/thomazbp/)

# ---

# ## 1\. Problem

# Let's explore the credit data available at this [link](https://raw.githubusercontent.com/andre-marcos-perez/ebac-course-utils/develop/dataset/credito.csv). The data is in CSV format and contains information about customers of a financial institution. In particular, we are interested in explaining the second column, called **default**, which indicates whether a customer is in good standing (`default = 0`) or in default (`default = 1`). In other words, we want to understand why a customer stops honoring their debts based on the behavior of other attributes, such as salary, education, and financial activity. A full description of the attributes is given below.

# ### **1.1. LGPD**

# A fair credit analysis is essential, especially when calculating credit ratings and assessing a payer's reliability. By requiring organizations to obtain consent from data subjects and restricting transfers of information to authorized third parties, the LGPD (Brazil's General Data Protection Law) raised the bar. As a result, people have more rights and companies must be more careful when performing credit analyses.

# Companies can no longer refuse credit based solely on a person's place of residence or age. These practices came to be seen as unfair and discriminatory because the LGPD calls for equal treatment of everyone.

# The analyses here do not take into account criteria such as race, sex, gender, housing, belief, religion, political ideology, or other factors that could be considered discriminatory.

# The data is structured as follows:

# | Column | Description |
# | ------- | --------- |
# | id | Account number |
# | default | Indicates whether the customer is in good standing (0) or in default (1) |
# | idade | --- |
# | sexo | --- |
# | depedentes | --- |
# | escolaridade | --- |
# | estado_civil | --- |
# | salario_anual | Monthly salary range multiplied by 12 |
# | tipo_cartao | Card category: blue, silver, gold, and platinum |
# | meses_de_relacionamento | Number of months since the account was opened |
# | qtd_produtos | Number of products contracted |
# | iteracoes_12m | Number of interactions with the customer in the last year |
# | meses_inatico_12m | Number of months the customer was inactive in the last year |
# | limite_credito | Credit card limit amount |
# | valor_transacoes_12m | Total value of credit card transactions in the last year |
# | qtd_transacoes_12m | Total number of credit card transactions in the last year |

# ## 2\. Setup

# Import libraries

import pandas as pd

import seaborn as sns

import matplotlib.pyplot as plt

# Data Import

df = pd.read_csv("../input/creditocsv/credito.csv", na_values="na")

df.head(n=10) # returns the first 10 rows

# ### **2.1. Schema**

# There are 10127 records in 16 columns.

df.shape # returns a tuple (number of rows, number of columns)

# The columns `limite_credito` and `valor_transacoes_12m` will need to be converted from `object` to `float`

df.dtypes # returns the data types of the columns

# - **Categorical** attributes.

df.select_dtypes("object").describe().transpose()

# - **Numeric** attributes.

df.drop("id", axis=1).select_dtypes("number").describe().transpose()

# Missing data can be:

# * Empty ("");

# * Null (None);

# * Not available or not applicable (na, NA, etc.);

# * Not a number (nan, NaN, NAN, etc.).

df.isna().any() # returns a boolean for the presence of missing data in each column

# The function below gathers some statistics on the columns with missing data. Although the percentages of missing data are similar for customers in good standing and customers in default, and deleting these rows would change the pattern of the data by only 0.5%, we chose to keep these rows and fill them in so that the analyses can go deeper.

def stats_dados_faltantes(df: pd.DataFrame) -> None:  # counts the missing data in each column
    stats = []  # renamed so the list does not shadow the function name
    for col in df.columns:
        if df[col].isna().any():
            qtd, _ = df[df[col].isna()].shape
            total, _ = df.shape
            dict_dados_faltantes = {
                col: {"quantidade": qtd, "porcentagem": round(100 * qtd / total, 2)}
            }
            stats.append(dict_dados_faltantes)
    for stat in stats:
        print(stat)

print("Missing data statistics for the whole df\n")

stats_dados_faltantes(df=df) # missing data statistics for the whole df

print("\nMissing data statistics for customers in good standing\n")

stats_dados_faltantes(df=df[df["default"] == 0]) # missing data statistics for customers in good standing

print("\nMissing data statistics for customers in default\n")

stats_dados_faltantes(df=df[df["default"] == 1]) # missing data statistics for customers in default
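# The same per-column counts and percentages can also be obtained with vectorized pandas calls. A sketch on a small made-up frame; `toy` and `summary` are illustrative names and the values are not taken from the credit data.

```python
import pandas as pd

# Hypothetical rows with two missing values in one column
toy = pd.DataFrame(
    {
        "escolaridade": ["mestrado", None, "doutorado", None],
        "idade": [45, 49, 51, 40],
    }
)

missing = toy.isna()
summary = pd.DataFrame(
    {
        "quantidade": missing.sum(),                     # count of missing values per column
        "porcentagem": (100 * missing.mean()).round(2),  # share of rows that are missing
    }
)

# Keep only the columns that actually have missing data
summary = summary[summary["quantidade"] > 0]

print(summary)
```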

# ## 3\. Data transformation and cleaning

# The columns limite_credito and valor_transacoes_12m were being interpreted as categorical columns (dtype = object).

df[["limite_credito", "valor_transacoes_12m"]].dtypes

df[["limite_credito", "valor_transacoes_12m"]].head(n=5)

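# Lambda function to clean the data: it removes the "." thousands separators and turns the "," decimal separator into "." before converting to float.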

fn = lambda valor: float(valor.replace(".", "").replace(",", "."))

df["valor_transacoes_12m"] = df["valor_transacoes_12m"].apply(fn)

df["limite_credito"] = df["limite_credito"].apply(fn)

df[["limite_credito", "valor_transacoes_12m"]].head(n=5)

df[["limite_credito", "valor_transacoes_12m"]].dtypes
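# The conversion above assumes the Brazilian number format, with "." as the thousands separator and "," as the decimal separator. A standalone sketch of the same idea; the helper name `parse_brl_number` and the sample strings are made up for illustration.

```python
def parse_brl_number(text):
    """Convert a string such as '12.691,51' to the float 12691.51."""
    without_thousands = text.replace(".", "")
    return float(without_thousands.replace(",", "."))


# Hypothetical values written in the Brazilian style
parsed = [parse_brl_number(value) for value in ["12.691,51", "8.256,96", "777,00"]]

print(parsed)
```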

# ### **3.1. Filling in missing data**

# To fill in the missing data, the ffill and bfill methods will be tested. Since the data is of type object, `countplot` will be used for visualization.

# * `'escolaridade'`

# * `'estado_civil'`

# * `'salario_anual'`

#

sns.countplot(x="escolaridade", data=df)

df["escolaridade"] = df["escolaridade"].ffill()

sns.countplot(x="escolaridade", data=df)

sns.countplot(x="estado_civil", data=df)

df["estado_civil"] = df["estado_civil"].ffill()

sns.countplot(x="estado_civil", data=df)

sns.countplot(x="salario_anual", data=df)

df["salario_anual"] = df["salario_anual"].ffill()

sns.countplot(x="salario_anual", data=df)

# The 'ffill' method preserved the overall shape of the columns, but it may not have been the best option. This approach is kept for the study purposes of this project.

df.isna().any() # returns a boolean for the presence of missing data in each column

# If the 'estado_civil' column still has null values (ffill cannot fill a missing first row), the bfill method is applied.

df["estado_civil"] = df["estado_civil"].bfill()

df.isna().any() # returns a boolean for the presence of missing data in each column

# Como a coluna ainda possui valores nulos. Será utilizado um metodo de adicionar um valor 'random' dentre as opções desta coluna.

import random

possible_values = (
    df["estado_civil"].dropna().tolist()
) # list of observed values; sampling from it follows their empirical frequencies

print(possible_values)

df["estado_civil"].fillna(random.choice(possible_values), inplace=True)

df.isna().any() # returns a boolean indicating whether each column has missing data
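# Note that `random.choice(possible_values)` is evaluated once, so every missing entry receives the same value. A sketch of an alternative that draws one value per missing row, so the observed frequencies are preserved on average; the helper name `fill_random`, the seed and the sample values are assumptions made for illustration.

```python
import numpy as np
import pandas as pd


def fill_random(series: pd.Series, seed: int = 0) -> pd.Series:
    """Fill each NaN with a value sampled from the observed (non-null) values."""
    rng = np.random.default_rng(seed)
    observed = series.dropna().to_numpy()
    missing = series.isna()
    filled = series.copy()
    # One independent draw per missing row
    filled[missing] = rng.choice(observed, size=int(missing.sum()))
    return filled


# Hypothetical sample, for illustration only
sample = pd.Series(["casado", None, "solteiro", "casado", None])
result = fill_random(sample)
print(result.isna().sum())
```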

# ## 4\. Data visualization

# We will always compare the full customer base with the non-defaulting and defaulting subsets.

sns.set_style("whitegrid")

df_adimplente = df[df["default"] == 0]

df_inadimplente = df[df["default"] == 1]

df.select_dtypes("object").head(n=5)

# ### **4.1. Categorical visualizations**

df.select_dtypes("object").describe().transpose()

# - Education (escolaridade): no clear pattern relating this variable to default is observed

coluna = "escolaridade"
titulos = [
    "Customer Education",
    "Education of Non-Defaulting Customers",
    "Education of Defaulting Customers",
]

eixo = 0
max_y = 0
figura, eixos = plt.subplots(1, 3, figsize=(20, 5), sharex=True)

for dataframe in [df, df_adimplente, df_inadimplente]:
    df_to_plot = dataframe[coluna].value_counts().to_frame()
    df_to_plot.rename(columns={coluna: "frequencia_absoluta"}, inplace=True)
    df_to_plot[coluna] = df_to_plot.index
    df_to_plot.reset_index(inplace=True, drop=True)
    df_to_plot.sort_values(by=[coluna], inplace=True)
    f = sns.barplot(
        x=df_to_plot[coluna], y=df_to_plot["frequencia_absoluta"], ax=eixos[eixo]
    )
    f.set(title=titulos[eixo], xlabel=coluna.capitalize(), ylabel="Absolute Frequency")
    f.set_xticklabels(labels=f.get_xticklabels(), rotation=90)
    # keep the same y-axis limit across the three panels
    _, max_y_f = f.get_ylim()
    max_y = max_y_f if max_y_f > max_y else max_y
    f.set(ylim=(0, max_y))
    eixo += 1

figura.show()

# - Annual salary (salario_anual): no clear pattern relating this variable to default is observed

coluna = "salario_anual"
titulos = [
    "Annual Salary of Customers",
    "Annual Salary of Non-Defaulting Customers",
    "Annual Salary of Defaulting Customers",
]

eixo = 0
max_y = 0
figura, eixos = plt.subplots(1, 3, figsize=(20, 5), sharex=True)

for dataframe in [df, df_adimplente, df_inadimplente]:
    df_to_plot = dataframe[coluna].value_counts().to_frame()
    df_to_plot.rename(columns={coluna: "frequencia_absoluta"}, inplace=True)
    df_to_plot[coluna] = df_to_plot.index
    df_to_plot.reset_index(inplace=True, drop=True)
    df_to_plot.sort_values(by=[coluna], inplace=True)
    f = sns.barplot(
        x=df_to_plot[coluna], y=df_to_plot["frequencia_absoluta"], ax=eixos[eixo]
    )
    f.set(title=titulos[eixo], xlabel=coluna.capitalize(), ylabel="Absolute Frequency")
    f.set_xticklabels(labels=f.get_xticklabels(), rotation=90)
    _, max_y_f = f.get_ylim()
    max_y = max_y_f if max_y_f > max_y else max_y
    f.set(ylim=(0, max_y))
    eixo += 1

figura.show()

# - Card type (tipo_cartao): patterns that tend to relate this variable to default are observed

coluna = "tipo_cartao"
titulos = [
    "Card Type",
    "Card Type of Non-Defaulting Customers",
    "Card Type of Defaulting Customers",
]

eixo = 0
max_y = 0
figura, eixos = plt.subplots(1, 3, figsize=(20, 5), sharex=True)

for dataframe in [df, df_adimplente, df_inadimplente]:
    df_to_plot = dataframe[coluna].value_counts().to_frame()
    df_to_plot.rename(columns={coluna: "frequencia_absoluta"}, inplace=True)
    df_to_plot[coluna] = df_to_plot.index
    df_to_plot.reset_index(inplace=True, drop=True)
    df_to_plot.sort_values(by=[coluna], inplace=True)
    f = sns.barplot(
        x=df_to_plot[coluna], y=df_to_plot["frequencia_absoluta"], ax=eixos[eixo]
    )
    f.set(title=titulos[eixo], xlabel=coluna.capitalize(), ylabel="Absolute Frequency")
    f.set_xticklabels(labels=f.get_xticklabels(), rotation=90)
    _, max_y_f = f.get_ylim()
    max_y = max_y_f if max_y_f > max_y else max_y
    f.set(ylim=(0, max_y))
    eixo += 1

figura.show()

# ### **4.2. Numerical visualizations**

# In this section, we visualize the relationship between the response variable **default** and the numerical attributes.

df.drop(["id", "default"], axis=1).select_dtypes("number").head(n=5)

# - Number of transactions in the last 12 months: patterns that tend to relate this variable to default are observed

coluna = "qtd_transacoes_12m"
titulos = [
    "No. of Transactions in the Last Year",
    "No. of Transactions in the Last Year (Non-Defaulting)",
    "No. of Transactions in the Last Year (Defaulting)",
]

eixo = 0
max_y = 0
figura, eixos = plt.subplots(1, 3, figsize=(20, 5), sharex=True)

for dataframe in [df, df_adimplente, df_inadimplente]:
    f = sns.histplot(x=coluna, data=dataframe, stat="count", ax=eixos[eixo])
    f.set(title=titulos[eixo], xlabel=coluna.capitalize(), ylabel="Absolute Frequency")
    _, max_y_f = f.get_ylim()
    max_y = max_y_f if max_y_f > max_y else max_y
    f.set(ylim=(0, max_y))
    eixo += 1

figura.show()

# - Transaction value in the last 12 months: patterns that tend to relate this variable to default are observed

coluna = "valor_transacoes_12m"
titulos = [
    "Transaction Value in the Last Year",
    "Transaction Value in the Last Year (Non-Defaulting)",
    "Transaction Value in the Last Year (Defaulting)",
]

eixo = 0
max_y = 0
figura, eixos = plt.subplots(1, 3, figsize=(20, 5), sharex=True)

for dataframe in [df, df_adimplente, df_inadimplente]:
    f = sns.histplot(x=coluna, data=dataframe, stat="count", ax=eixos[eixo])
    f.set(title=titulos[eixo], xlabel=coluna.capitalize(), ylabel="Absolute Frequency")
    _, max_y_f = f.get_ylim()
    max_y = max_y_f if max_y_f > max_y else max_y
    f.set(ylim=(0, max_y))
    eixo += 1

figura.show()

# - Transaction value vs. number of transactions in the last 12 months: patterns that tend to relate these variables to default are observed

f = sns.relplot(
    x="valor_transacoes_12m", y="qtd_transacoes_12m", data=df, hue="default"
)

_ = f.set(
    title="Transaction Value vs. Number of Transactions in the Last Year",
    xlabel="Transaction Value in the Last Year",
    ylabel="Number of Transactions in the Last Year",
)

# - Length of relationship: no clear pattern relating this variable to default is observed

coluna = "meses_de_relacionamento"
titulos = [
    "Length of Relationship",
    "Length of Relationship (Non-Defaulting)",
    "Length of Relationship (Defaulting)",
]

eixo = 0
max_y = 0
figura, eixos = plt.subplots(1, 3, figsize=(20, 5), sharex=True)

for dataframe in [df, df_adimplente, df_inadimplente]:
    f = sns.histplot(x=coluna, data=dataframe, stat="count", ax=eixos[eixo])
    f.set(title=titulos[eixo], xlabel=coluna.capitalize(), ylabel="Absolute Frequency")
    _, max_y_f = f.get_ylim()
    max_y = max_y_f if max_y_f > max_y else max_y
    f.set(ylim=(0, max_y))
    eixo += 1

figura.show()

# - Number of products: no clear pattern relating this variable to default is observed

coluna = "qtd_produtos"
titulos = [
    "Number of Products",
    "Number of Products (Non-Defaulting)",
    "Number of Products (Defaulting)",
]

eixo = 0
max_y = 0
figura, eixos = plt.subplots(1, 3, figsize=(20, 5), sharex=True)

for dataframe in [df, df_adimplente, df_inadimplente]:
    f = sns.histplot(x=coluna, data=dataframe, stat="count", ax=eixos[eixo])
    f.set(title=titulos[eixo], xlabel=coluna.capitalize(), ylabel="Absolute Frequency")
    _, max_y_f = f.get_ylim()
    max_y = max_y_f if max_y_f > max_y else max_y
    f.set(ylim=(0, max_y))
    eixo += 1

figura.show()

# - Interactions in the last 12 months: no clear pattern relating this variable to default is observed

coluna = "iteracoes_12m"
titulos = ["Interactions", "Interactions (Non-Defaulting)", "Interactions (Defaulting)"]

eixo = 0
max_y = 0
figura, eixos = plt.subplots(1, 3, figsize=(20, 5), sharex=True)

for dataframe in [df, df_adimplente, df_inadimplente]:
    f = sns.histplot(x=coluna, data=dataframe, stat="count", ax=eixos[eixo])
    f.set(title=titulos[eixo], xlabel=coluna.capitalize(), ylabel="Absolute Frequency")
    _, max_y_f = f.get_ylim()
    max_y = max_y_f if max_y_f > max_y else max_y
    f.set(ylim=(0, max_y))
    eixo += 1

figura.show()

# - Inactive months in the last 12 months: no clear pattern relating this variable to default is observed

coluna = "meses_inativo_12m"
titulos = [
    "Inactivity in the Last 12 Months",
    "Inactivity in the Last 12 Months (Non-Defaulting)",
    "Inactivity in the Last 12 Months (Defaulting)",
]

eixo = 0
max_y = 0
figura, eixos = plt.subplots(1, 3, figsize=(20, 5), sharex=True)

for dataframe in [df, df_adimplente, df_inadimplente]:
    f = sns.histplot(x=coluna, data=dataframe, stat="count", ax=eixos[eixo])
    f.set(title=titulos[eixo], xlabel=coluna.capitalize(), ylabel="Absolute Frequency")
    _, max_y_f = f.get_ylim()
    max_y = max_y_f if max_y_f > max_y else max_y
    f.set(ylim=(0, max_y))
    eixo += 1

figura.show()

# - Credit limit: no clear pattern relating this variable to default is observed

coluna = "limite_credito"
titulos = [
    "Credit Limit",
    "Credit Limit (Non-Defaulting)",
    "Credit Limit (Defaulting)",
]

eixo = 0
max_y = 0
figura, eixos = plt.subplots(1, 3, figsize=(20, 5), sharex=True)

for dataframe in [df, df_adimplente, df_inadimplente]:
    f = sns.histplot(x=coluna, data=dataframe, stat="count", ax=eixos[eixo])
    f.set(title=titulos[eixo], xlabel=coluna.capitalize(), ylabel="Absolute Frequency")
    _, max_y_f = f.get_ylim()
    max_y = max_y_f if max_y_f > max_y else max_y
    f.set(ylim=(0, max_y))
    eixo += 1

figura.show()

# ## 5\. Summary of the insights generated.

# Up to 75% of defaulting customers made fewer than 51 transactions, totalling less than 2772.615, in the last 12 months. In addition, 75% of them have a credit limit of up to 9933 reais.

filtered_df = df[df["default"] == 1]

filtered_df.drop("id", axis=1).select_dtypes("number").describe().transpose()
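# The 75th percentiles quoted in the summary can also be compared across both groups in a single table. A minimal sketch on a hypothetical miniature dataset (the values below are invented for illustration; only the column names come from this notebook).

```python
import pandas as pd

# Hypothetical miniature of the dataset, for illustration only
toy = pd.DataFrame(
    {
        "default": [0, 0, 0, 1, 1, 1],
        "qtd_transacoes_12m": [80, 70, 90, 40, 45, 50],
        "limite_credito": [12000.0, 8000.0, 15000.0, 3000.0, 9000.0, 5000.0],
    }
)

# 75th percentile of each numeric column, one row per value of `default`
q75 = toy.groupby("default")[["qtd_transacoes_12m", "limite_credito"]].quantile(0.75)
print(q75)
```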

|

import numpy as np # linear algebra

import pandas as pd # data processing, CSV file I/O (e.g. pd.read_csv)

# Input data files are available in the read-only "../input/" directory

# For example, running this (by clicking run or pressing Shift+Enter) will list all files under the input directory

import os

for dirname, _, filenames in os.walk("/kaggle/input"):
    for filename in filenames:
        print(os.path.join(dirname, filename))

# You can write up to 20GB to the current directory (/kaggle/working/) that gets preserved as output when you create a version using "Save & Run All"

# You can also write temporary files to /kaggle/temp/, but they won't be saved outside of the current session

import pandas as pd

df = pd.read_csv("/kaggle/input/chatgpt-1000-daily-tweets/chatgpt_daily_tweets.csv")

df.info()

df.describe()

print(df.isnull().sum())

# The result shows that the table contains 8000 rows and 20 columns.

# The "source" column does not contain any non-null values, so it can be removed from the dataframe.

# The columns "user_location", "user_description", "user_created", "user_followers_count", "user_following_count", "user_tweet_count", "user_verified", "retweet_count", "like_count", "reply_count" and "impression_count" have missing values, so we need to check how they might affect the results of the analysis.

# The columns "tweet_created" and "tweet_extracted" should also be converted to the datetime type to make further work with them more convenient.

df.drop(columns=["source"], inplace=True)

df["tweet_created"] = pd.to_datetime(df["tweet_created"])

df["tweet_extracted"] = pd.to_datetime(df["tweet_extracted"])

df.head()

# # EDA

# To begin with, let's print the overall totals and then plot the distribution of some features.

# For example, the distribution of the number of likes, retweets and replies to tweets can be plotted using histograms:

tweets_count = df.shape[0]

likes_count = df["like_count"].sum()

retweets_count = df["retweet_count"].sum()

replies_count = df["reply_count"].sum()

impressions_count = df["impression_count"].sum()

print(f"Number of tweets: {tweets_count}")

print(f"Number of likes: {likes_count}")

print(f"Number of retweets: {retweets_count}")

print(f"Number of responses: {replies_count}")

print(f"Number of views: {impressions_count}")

import matplotlib.pyplot as plt

# distribution of the number of likes

plt.hist(df["like_count"], bins=50)

plt.title("Like count distribution")

plt.xlabel("Like count")

plt.ylabel("Number of tweets")

plt.show()

# distribution of the number of retweets

plt.hist(df["retweet_count"], bins=50)

plt.title("Retweet count distribution")

plt.xlabel("Retweet count")

plt.ylabel("Number of tweets")

plt.show()

# distribution of the number of replies

plt.hist(df["reply_count"], bins=50)

plt.title("Reply count distribution")

plt.xlabel("Reply count")

plt.ylabel("Number of tweets")

plt.show()

# box plot of the number of likes

plt.boxplot(df["like_count"].dropna())

plt.title("Like count boxplot")

plt.ylabel("Like count")

plt.show()

# box plot of the number of retweets

plt.boxplot(df["retweet_count"].dropna())

plt.title("Retweet count boxplot")

plt.ylabel("Retweet count")

plt.show()

# box plot of the number of replies

plt.boxplot(df["reply_count"].dropna())

plt.title("Reply count boxplot")

plt.ylabel("Reply count")

plt.show()

# The graphs show the distribution of the number of likes, retweets and replies per tweet.

# All three distributions contain many outliers, i.e. values that differ significantly from most other values in the sample. They also have a heavy right tail: a small number of tweets receive a lot of likes, retweets and replies, while most tweets receive very few.
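# With such heavy right tails, a linear-scale histogram puts almost every tweet into the first bin. One common remedy is to bin `log(1 + x)` instead of `x`. A minimal sketch using invented counts (the array below is hypothetical, not taken from the dataset).

```python
import numpy as np

# Hypothetical heavy-tailed counts: mostly small values plus a few large outliers
like_counts = np.array([0, 0, 1, 1, 2, 3, 5, 8, 40, 1200, 35000])

# log1p(x) = log(1 + x) is defined at zero and compresses the right tail
log_likes = np.log1p(like_counts)

# Compare how the observations spread over 5 equal-width bins on each scale
raw_hist, _ = np.histogram(like_counts, bins=5)
log_hist, _ = np.histogram(log_likes, bins=5)
print("raw bins:", raw_hist)
print("log bins:", log_hist)
```

# The same transformed values can be passed to `plt.hist` in place of the raw column.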

# scatter plot of the number of likes versus the number of retweets

plt.scatter(df["like_count"], df["retweet_count"])

plt.title("Like count vs Retweet count")

plt.xlabel("Like count")

plt.ylabel("Retweet count")

plt.show()

# This graph is a scatter plot of the number of likes against the number of retweets for each tweet. It allows you to evaluate the relationship between these two variables.

# If the points lie close to a straight line with a positive slope, there is a strong positive correlation between likes and retweets: the more likes, the more retweets. If the points are widely scattered with no visible linear trend, the correlation between these variables is weak or absent.

# In this case, the graph shows that a number of tweets received a large number of likes and retweets, but most tweets received fewer than 100 likes and retweets. There are also tweets that received a lot of likes but few retweets and, conversely, a lot of retweets but few likes. This may be due to various factors, such as the subject of the tweet or the hashtags that were used.

corr_matrix = df[["like_count", "retweet_count", "reply_count"]].corr()

# build a styled table from the correlation matrix and add a color scale

table = corr_matrix.style.background_gradient(cmap="coolwarm")

# display the table

table

# The following conclusions can be drawn from this matrix:

# There is a moderate positive correlation between the number of likes and the number of replies (the correlation coefficient is 0.44). This may mean that tweets that attract likes also tend to attract replies, and vice versa.

# The correlation between the number of likes and the number of retweets is very weak (the correlation coefficient is close to 0). This may indicate that users who like a tweet do not necessarily retweet it, and vice versa.

# The correlation between the number of retweets and the number of replies is also very weak (the correlation coefficient is close to 0). This may mean that users who retweet a tweet do not necessarily reply to it, and vice versa.

# The general conclusion: the number of likes on a tweet is moderately related to the number of replies but is essentially unrelated to the number of retweets. The number of retweets is also essentially unrelated to the number of replies.
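# `DataFrame.corr()` computes the Pearson coefficient by default, which can be dominated by a few extreme tweets. As a cross-check, a rank-based (Spearman) coefficient can be obtained by correlating the ranks. A minimal sketch on invented numbers (the frame `toy` is hypothetical).

```python
import pandas as pd

# Hypothetical counts with one extreme tweet
toy = pd.DataFrame(
    {
        "like_count": [0, 1, 2, 3, 1000],
        "reply_count": [0, 1, 1, 2, 5],
    }
)

pearson = toy.corr()  # linear correlation on the raw values
spearman = toy.rank().corr()  # Pearson on ranks, i.e. the Spearman correlation

print(pearson.loc["like_count", "reply_count"])
print(spearman.loc["like_count", "reply_count"])
```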

# # Analyzing the content of the tweets

# To analyze the content of the tweets, you can use text analysis methods such as sentiment analysis, keyword extraction and topic modeling.

# For sentiment analysis, you can use machine learning algorithms that classify tweets as positive, negative or neutral. To extract keywords, you can use natural language processing methods such as TF-IDF or a bag of words.

# Topic modeling allows you to identify the main topics covered in the tweets. To do this, you can use LDA (Latent Dirichlet Allocation) or NMF (Non-negative Matrix Factorization).

# You can also use visual analysis to identify the most common words and highlight the most significant topics. To do this, you can use data visualization libraries such as Wordcloud or Matplotlib.

# For most text analysis methods, including topic modeling and sentiment analysis, the language of the text plays an important role, since these methods often rely on linguistic features of the language to determine the topics, sentiment and other properties of the text.

# If the tweets are written in different languages, text processing methods and models appropriate to each language should be used. Some text analysis models support several languages, but this depends on the specific model and on the languages it was trained on.

# It is also worth considering that translating a text into another language may lose information and change its meaning, which may affect the results of the analysis. Therefore, when analyzing text in several languages, it is important to take the peculiarities of each language into account and to use appropriate methods and models for each one.

# # Sentiment analysis

from textblob import TextBlob

# Add new column with sentiment polarity to the DataFrame

df["sentiment"] = df["text"].apply(lambda x: TextBlob(x).sentiment.polarity)

# Plot the distribution of sentiment polarity

import matplotlib.pyplot as plt

plt.hist(df["sentiment"], bins=25)

plt.xlabel("Sentiment polarity")

plt.ylabel("Frequency")

plt.title("Distribution of Sentiment Polarity")

plt.show()

# The histogram shows how tweet sentiment is distributed between negative, neutral and positive polarity. Most tweets are neutral (polarity close to 0), which means that the authors do not express an explicitly positive or negative opinion. Only a small number of tweets have a very low (very negative) or very high (very positive) polarity.

# Sum retweet_count, like_count and reply_count for each distinct sentiment polarity value

counts_by_sentiment = df.groupby("sentiment")[

["retweet_count", "like_count", "reply_count"]

].sum()

print(counts_by_sentiment)
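# Grouping by the raw polarity produces one group per distinct value. To compare averages per sentiment range instead, the polarity can first be binned with `pd.cut`. A minimal sketch on invented data; the bin edges, the labels and the frame `toy` are assumptions made for illustration.

```python
import pandas as pd

# Hypothetical polarity scores and like counts
toy = pd.DataFrame(
    {
        "sentiment": [-0.8, -0.1, 0.0, 0.05, 0.5, 0.9],
        "like_count": [2, 0, 1, 3, 10, 4],
    }
)

# Assumed bin edges: [-1, -0.2] negative, (-0.2, 0.2] neutral, (0.2, 1] positive
bins = [-1.0, -0.2, 0.2, 1.0]
labels = ["negative", "neutral", "positive"]
toy["sentiment_range"] = pd.cut(
    toy["sentiment"], bins=bins, labels=labels, include_lowest=True
)

# Mean number of likes per sentiment range
mean_by_range = toy.groupby("sentiment_range", observed=False)["like_count"].mean()
print(mean_by_range)
```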

# retweet_count plot

plt.plot(counts_by_sentiment.index, counts_by_sentiment["retweet_count"])

plt.title("Retweets by Sentiment Range")

plt.xlabel("Sentiment Range")

plt.ylabel("Retweets")

plt.show()

# like_count plot

plt.plot(counts_by_sentiment.index, counts_by_sentiment["like_count"])

plt.title("Likes by Sentiment Range")

plt.xlabel("Sentiment Range")

plt.ylabel("Likes")

plt.show()

# reply_count plot

plt.plot(counts_by_sentiment.index, counts_by_sentiment["reply_count"])

plt.title("Replies by Sentiment Range")

plt.xlabel("Sentiment Range")

plt.ylabel("Replies")

plt.show()

# **Several conclusions can be drawn from this data:**

# The average value of retweet_count is significantly higher for tweets with negative sentiment than for tweets with positive or neutral sentiment. This may mean that users retweet and discuss negative tweets more actively.

# The average values of like_count and reply_count are fairly low for tweets with negative and neutral sentiment and fairly high for tweets with positive sentiment. This may mean that users are more likely to like and reply to positive tweets.

# The average value of like_count is highest for tweets with a polarity of 0.4-0.6, and the average value of reply_count is highest for tweets with a polarity of 0.8-1.0. This may indicate that users are more likely to like tweets that evoke positive emotions, and that the tweets drawing the most replies are those that evoke the strongest emotions.

# # Highlighting keywords

# To extract keywords from the text column, we can use natural language processing (NLP) techniques, which are widely used for analyzing text data. They let you process the text by breaking it into individual words, determining their parts of speech, identifying nouns, verbs, and so on. We can then analyze this information and pick out the most common words in the text.

# To do this, we will need the spaCy library, an open-source Python library for natural language processing that provides tools for processing and analyzing text data.

import spacy

import pandas as pd

nlp = spacy.load("en_core_web_sm")

def get_keywords(text):
    """Return the lemmas of the alphabetic, non-stop-word tokens in `text`."""
    doc = nlp(text)
    keywords = []
    for token in doc:
        if not token.is_stop and token.is_alpha:
            keywords.append(token.lemma_)
    return keywords

# Add a new column with the keywords

df["keywords"] = df["text"].apply(get_keywords)

from collections import Counter

import pandas as pd

# Collect all keywords from the English-language tweets

en_keywords = [

kw.lower()

for index, row in df.iterrows()

if row["lang"] == "en"

for kw in row["keywords"]

]

# Count the frequency of each keyword

counter = Counter(en_keywords)

# Build a table and fill it with the data

data = {"keyword": [], "frequency": []}

for keyword, freq in counter.items():
    data["keyword"].append(keyword)
    data["frequency"].append(freq)

df_result = pd.DataFrame(data)

# Print the table

print(df_result)

df_sorted = df_result.sort_values(by="frequency", ascending=False)

top_10 = df_sorted.head(10)

import matplotlib.pyplot as plt

# Data for the pie chart

labels = top_10["keyword"].tolist()

sizes = top_10["frequency"].tolist()

# Draw the chart

plt.pie(sizes, labels=labels, autopct="%1.1f%%")

plt.axis("equal")

plt.show()

|

# # SUPPORT VECTOR MACHINE

#

# The SVM (Support Vector Machine) is a supervised machine learning algorithm typically used for binary classification problems. It’s trained by feeding a dataset with labeled examples (xᵢ, yᵢ).

# The algorithm finds a hyperplane (or decision boundary) which should ideally have the following properties:

# It creates separation between examples of two classes with a maximum margin

# Its decision function (w.x + b) yields a value ≥ 1 for examples from the +ve class and ≤ -1 for examples from the -ve class

# ### How does it find this hyperplane?

# By finding the optimal values w* (weights/normal) and b* (intercept) which define this hyperplane. The optimal values are found by minimizing a cost function. Once the algorithm identifies these optimal values, the SVM model f(x) is then defined as shown below:

#

#

#

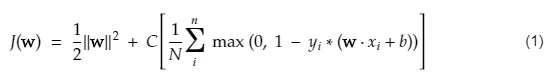

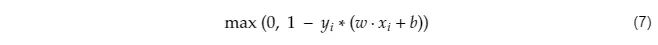

# ### Cost Function

#

# Our objective is to find a hyperplane that separates +ve and -ve examples with the largest margin while keeping the misclassification as low as possible

# We will minimize the cost/objective function shown below:

#

#

# ### The Gradient of the Cost Function

#

# Why do we minimize the cost function? Because the cost function is essentially a measure of how badly our model is doing at achieving the objective. If you look closely at J(w), to find its minimum, we have to:

# Minimize ∣∣w∣∣² which maximizes margin (2/∣∣w∣∣)

# Minimize the sum of hinge loss which minimizes misclassifications.

#

#
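# A sketch of the objective and its sub-gradient, written per training example so that it matches the update rules used by the `SVM` class in this notebook. This is an assumed reconstruction: the regularization weight $\lambda$ (`lambda_param`) and the sign convention $w \cdot x_i - b$ follow the code, whereas the text above writes the hyperplane as $w \cdot x + b = 0$; the two conventions differ only in the sign of $b$.

```latex
J_i(w, b) = \lambda \lVert w \rVert^2 + \max\bigl(0,\; 1 - y_i (w \cdot x_i - b)\bigr)

\nabla_w J_i =
\begin{cases}
2 \lambda w, & y_i (w \cdot x_i - b) \ge 1 \\
2 \lambda w - y_i x_i, & \text{otherwise}
\end{cases}
\qquad
\frac{\partial J_i}{\partial b} =
\begin{cases}
0, & y_i (w \cdot x_i - b) \ge 1 \\
y_i, & \text{otherwise}
\end{cases}
```

# Each gradient-descent step then subtracts the learning rate times these quantities from $w$ and $b$.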

# # IMPORTING THE LIBRARIES

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

import seaborn as sns

from sklearn.metrics import accuracy_score, confusion_matrix, ConfusionMatrixDisplay

# # IMPLEMENTING THE MODEL

class SVM:
    """Linear SVM trained with per-sample sub-gradient descent on the hinge loss."""

    def __init__(self, learning_rate=0.0001, lambda_param=0.001, n_iters=10000):
        self.weights = None
        self.bias = None
        self.lr = learning_rate
        self.lambda_param = lambda_param
        self.n_iters = n_iters

    def fit(self, X, y):
        self.m, self.n = X.shape
        # map the labels {0, 1} to {-1, +1}
        y1 = np.where(y <= 0, -1, 1)
        self.weights = np.zeros(self.n)
        self.bias = 0
        for i in range(self.n_iters):
            for idx, x_i in enumerate(X):
                if y1[idx] * (np.dot(x_i, self.weights) - self.bias) >= 1:
                    # margin satisfied: only the regularization term contributes
                    self.weights -= self.lr * (2 * self.lambda_param * self.weights)
                else:
                    # margin violated: add the hinge-loss sub-gradient
                    self.weights -= self.lr * (
                        2 * self.lambda_param * self.weights - np.dot(x_i, y1[idx])
                    )
                    self.bias -= self.lr * y1[idx]

    def predict(self, X):
        output = np.dot(X, self.weights) - self.bias
        y_pred = np.sign(output)
        # map -1 back to class 0; everything else becomes class 1
        y_hat = np.where(y_pred <= -1, 0, 1)
        return y_hat
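# To check that training actually reduces the objective, the regularized hinge loss can be evaluated for a given weight vector and bias. A minimal sketch, assuming the same label mapping and sign convention (`X.w - b`) as the class above; the function name `hinge_cost` and the toy data are hypothetical.

```python
import numpy as np


def hinge_cost(X, y, weights, bias, lambda_param=0.001):
    """Return lambda * ||w||^2 + mean(max(0, 1 - y * (X.w - b)))."""
    y_signed = np.where(y <= 0, -1, 1)  # labels {0, 1} -> {-1, +1}
    margins = y_signed * (X @ weights - bias)
    hinge = np.maximum(0.0, 1.0 - margins)
    return lambda_param * np.dot(weights, weights) + hinge.mean()


# Two linearly separable toy points
X_toy = np.array([[2.0, 0.0], [-2.0, 0.0]])
y_toy = np.array([1, 0])

cost_at_zero = hinge_cost(X_toy, y_toy, np.zeros(2), 0.0)
cost_separating = hinge_cost(X_toy, y_toy, np.array([1.0, 0.0]), 0.0)
print(cost_at_zero, cost_separating)
```

# With all-zero weights every margin is 0, so the cost starts at 1; a separating weight vector leaves only the regularization term.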

# # READING THE DATA

data = pd.read_csv("/kaggle/input/loan-prediction-dataset")

data.head()

data.shape

data.info()

data.describe()

# # DATA PREPROCESSING

# ### DROPPING UNNECESSARY FEATURES

data.drop(["loan_id", "gender", "education"], axis=1, inplace=True)

data.head()

# ### CHECK FOR NULL VALUES

data.isnull().sum()

# ### FILLING NULL VALUES

data.married.unique()

data["married"].fillna(data["married"].mode()[0], inplace=True)

data.dependents.unique()

data["dependents"].fillna(data["dependents"].mode()[0], inplace=True)

data.self_employed.unique()

data["self_employed"].fillna(data["self_employed"].mode()[0], inplace=True)

data["loanamount"].fillna(data["loanamount"].mean(), inplace=True)

data.loan_amount_term.unique()

data["loan_amount_term"].fillna(data["loan_amount_term"].mode()[0], inplace=True)

data.credit_history.unique()

data["credit_history"].fillna(data["credit_history"].mode()[0], inplace=True)

data.isnull().sum()

# ### CHECK FOR DUPLICATES

data.duplicated().sum()

data.drop_duplicates(keep="first", inplace=True)

data.duplicated().sum()

data.head()

# ## DATA VISUALIZATION

sns.pairplot(data)

# # FEATURE EXTRACTION

column = ["married", "self_employed", "property_area", "loan_status"]

from sklearn.preprocessing import LabelEncoder

le = LabelEncoder()

for i in column:
    data[i] = le.fit_transform(data[i])

# map "3+" to 3 and cast to int so that every feature is numeric
data.dependents = data.dependents.replace("3+", 3).astype(int)

plt.figure(figsize=(12, 8))

sns.heatmap(data.corr())

# # SPLITTING THE DATASET

x = data.drop("loan_status", axis=1)

y = data.loan_status

from sklearn.model_selection import train_test_split

x_train, x_test, y_train, y_test = train_test_split(x, y, test_size=0.20)

x_test

# # FEATURE SCALING

from sklearn.preprocessing import StandardScaler

sc = StandardScaler()

x_train = sc.fit_transform(x_train)

x_test = sc.transform(x_test)

# # MODEL

svm_classifier = SVM()

svm_classifier.fit(x_train, y_train)

y_pred = svm_classifier.predict(x_test)

accuracy_score(y_test, y_pred)

# confusion_matrix orders the classes as 0, 1, so the display labels must follow the same order
cm = ConfusionMatrixDisplay(
    confusion_matrix(y_test, y_pred), display_labels=[False, True]
)

cm.plot()

# # SKLEARN MODEL

from sklearn.svm import SVC

svm_c = SVC(kernel="linear", random_state=1)

svm_c.fit(x_train, y_train)

y2 = svm_c.predict(x_test)

y2

|

import numpy as np

from glob import glob

import tensorflow as tf

from tensorflow import keras

import matplotlib.pyplot as plt

from tensorflow.keras.optimizers import Adam

from tensorflow.keras.utils import plot_model

from tensorflow.keras.models import load_model, Model, Sequential

from tensorflow.keras.layers import Flatten, Dense, Dropout, Softmax

from tensorflow.keras.applications.resnet50 import ResNet50, preprocess_input

# A simple CNN model structure

# ```

# model = Sequential()

# # first layer

# model.add(Conv2D()) # feature selection/processing

# model.add(MaxPooling2D()) # downsampling

# model.add(BatchNormalization()) # rescaling/normalize

# model.add(Dropout(0.3)) # Drop noisy data

# # Second layer

# model.add(Conv2D()) # feature selection/processing

# model.add(MaxPooling2D()) # downsampling

# model.add(BatchNormalization()) # rescaling/normalize

# model.add(Dropout(0.3)) # Drop noisy data

# # and so on ...

# ```

img_size = [224, 224]

test_path = "../input/cars-dataset/Test"

train_path = "../input/cars-dataset/Train"

[224, 224] + [3]

resnet = ResNet50(

include_top=False,

input_shape=img_size + [3], # append the channel dimension (3 channels for RGB images)

weights="imagenet",

)

# visualize the layers

plot_model(resnet, show_shapes=True, show_layer_names=True)

# Parameter informations

resnet.summary()

# Freeze the pretrained layers so that the ImageNet weights are not updated during training.

for layer in resnet.layers:
    layer.trainable = False

folders = glob("../input/cars-dataset/Train/*")

folders

METRICS = [

tf.keras.metrics.CategoricalAccuracy(name="accuracy"), # labels are one-hot encoded, so use categorical accuracy

tf.keras.metrics.Precision(name="precision"),

tf.keras.metrics.Recall(name="recall"),

tf.keras.metrics.AUC(name="auc"),

]

# convert model output to single dimension

x = Flatten()(resnet.output)

prediction = Dense(len(folders), activation="softmax")(x)

model = Model(inputs=resnet.input, outputs=prediction)

plot_model(model)

model.compile(loss="categorical_crossentropy", optimizer="adam", metrics=METRICS)

model.summary()

from tensorflow.keras.preprocessing.image import ImageDataGenerator

# on-the-fly image augmentation for the training set

train_datagen = ImageDataGenerator(

rescale=1 / 255,

shear_range=0.2,

zoom_range=0.2,

rotation_range=5,

width_shift_range=0.2,

height_shift_range=0.2,

horizontal_flip=True,

vertical_flip=True,

fill_mode="nearest",

)

test_datagen = ImageDataGenerator(rescale=1 / 255)

training_set = train_datagen.flow_from_directory(

"../input/cars-dataset/Train",

target_size=(224, 224),

batch_size=32,

class_mode="categorical",

)

testing_set = test_datagen.flow_from_directory(

"../input/cars-dataset/Test",

target_size=(224, 224),

batch_size=32,

class_mode="categorical",

)

from tensorflow.keras.callbacks import EarlyStopping

es = EarlyStopping(verbose=1, patience=20)

r = model.fit(

training_set,

validation_data=testing_set,

epochs=50,

steps_per_epoch=len(training_set),

validation_steps=len(testing_set),

callbacks=[es],

)

r

plt.plot(r.history["loss"], label="train loss")

plt.plot(r.history["val_loss"], label="val loss")

plt.legend()

# save before calling show(); saving afterwards can write an empty figure
plt.savefig("LossVal_loss")

plt.show()

plt.plot(r.history["accuracy"], label="train acc")

plt.plot(r.history["val_accuracy"], label="val acc")

plt.legend()

# save before calling show(); saving afterwards can write an empty figure
plt.savefig("AccuVal_acc")

plt.show()

model.save("../working/model_resent50.h5")

y_pred = model.predict(testing_set)

print(y_pred)

y_pred = np.argmax(y_pred, axis=1)

y_pred

from tensorflow.keras.models import load_model

from tensorflow.keras.preprocessing import image

model = load_model("../working/model_resent50.h5")

img = image.load_img(

"../input/cars-dataset/Test/lamborghini/10.jpg", target_size=(224, 224)

)

img

# x=image.img_to_array(img)

x = image.img_to_array(img)

x

x = x / 255

x

x = np.expand_dims(x, axis=0)

x

x.shape

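# preprocess_input may overwrite its input in place, so pass a copy to keep x unchanged for model.predict
img_data = preprocess_input(x.copy())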

img_data.shape

preds = model.predict(x)

print(preds)

preds = np.argmax(preds, axis=1)

preds

labels = training_set.class_indices

print(labels)

type(labels)

labels = dict((v, k) for k, v in labels.items())

print(labels)

# predictions = [labels[k] for k in predicted_class_indices]

type(labels)

labels[preds[0]].capitalize()
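# The same index-to-name lookup works for a whole batch of predictions. A minimal sketch with invented probabilities; the `class_indices` mapping below is hypothetical (the real one comes from `training_set.class_indices`).

```python
import numpy as np

# Hypothetical mapping in the format returned by flow_from_directory(...).class_indices
class_indices = {"audi": 0, "lamborghini": 1, "mercedes": 2}

# Invert it: class index -> class name
index_to_label = {index: name for name, index in class_indices.items()}

# Invented softmax outputs for two images (each row sums to 1)
probs = np.array([[0.1, 0.7, 0.2], [0.6, 0.3, 0.1]])

# argmax over the class axis gives the predicted index for each image
predicted = [index_to_label[i] for i in np.argmax(probs, axis=1)]
print(predicted)
```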

img = image.load_img("../input/cars-dataset/Test/audi/25.jpg", target_size=(224, 224))

x = image.img_to_array(img)

x = x / 255

x = np.expand_dims(x, axis=0)

img_data = preprocess_input(x.copy()) # copy: preprocess_input may modify its input in place

preds = model.predict(x)

preds = np.argmax(preds, axis=1)

print(preds)

labels[preds[0]].capitalize()

img = image.load_img(

"../input/cars-dataset/Test/mercedes/30.jpg", target_size=(224, 224)

)

x = image.img_to_array(img)

x = x / 255

x = np.expand_dims(x, axis=0)

img_data = preprocess_input(x.copy()) # copy: preprocess_input may modify its input in place

preds = model.predict(x)

preds = np.argmax(preds, axis=1)

labels[preds[0]].capitalize()

def Train_Val_Plot(
    acc, val_acc, loss, val_loss, auc, val_auc, precision, val_precision
):
    """Plot training and validation curves for accuracy, loss, AUC and precision."""
    fig, axes = plt.subplots(1, 4, figsize=(20, 5))
    fig.suptitle(" MODEL'S METRICS VISUALIZATION ")
    curves = [
        ("Accuracy", acc, val_acc),
        ("Loss", loss, val_loss),
        ("AUC", auc, val_auc),
        ("Precision", precision, val_precision),
    ]
    # one panel per metric, with epochs numbered from 1
    for ax, (name, train_values, val_values) in zip(axes, curves):
        ax.plot(range(1, len(train_values) + 1), train_values)
        ax.plot(range(1, len(val_values) + 1), val_values)
        ax.set_title(f"History of {name}")
        ax.set_xlabel("Epochs")
        ax.set_ylabel(name)
        ax.legend(["training", "validation"])
    plt.show()

Train_Val_Plot(

r.history["accuracy"],

r.history["val_accuracy"],

r.history["loss"],

r.history["val_loss"],

r.history["auc"],

r.history["val_auc"],

r.history["precision"],

r.history["val_precision"],

)

|

import numpy as np # linear algebra

import pandas as pd # data processing, CSV file I/O (e.g. pd.read_csv)

# Input data files are available in the read-only "../input/" directory

# For example, running this (by clicking run or pressing Shift+Enter) will list all files under the input directory

import os

for dirname, _, filenames in os.walk("/kaggle/input"):
    for filename in filenames:
        print(os.path.join(dirname, filename))

# You can write up to 20GB to the current directory (/kaggle/working/) that gets preserved as output when you create a version using "Save & Run All"

# You can also write temporary files to /kaggle/temp/, but they won't be saved outside of the current session

# # Artificial intelligence

# # Bicycle prices in the Sultanate of Oman.

# # The dataset is from OpenSooq.

# # In this group assignment, we learn how to analyze data using data science.

# **Student: Maryam Khalifa Al Bulushi -- Riham Abdul Kareim Al Bulushi**

# **ID: 201916069 -- 201916071**

# # About the dataset

# # **The dataset contains id, brand, model, year, kilometers, condition, price, and category.**

# # First we need to convert it into a useful dataset.

# # **Import the required libraries, then load the CSV file as the dataset**

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

import seaborn as sns

# # Reading the file (DataSet)

ds = pd.read_csv("/kaggle/input/dataset1/Data_Set1.csv")

ds.head()

# # Rename the misspelled column "prand" to "brand"

ds.rename(columns={"prand": "brand"}, inplace=True)

ds.head()

# # checking the information of data

ds.info()

# # Data cleansing and improvement(Make the information more useful)

# **Arrange the values so that each one becomes a column rather than a row entry**

ds1 = ds.copy()

# # Checking the number of rows before changing anything

ds.info()

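def change_to_numeric(x, ds1):
    # One indicator column per distinct value of column x.
    temp = pd.get_dummies(ds1[x])
    ds1 = pd.concat([ds1, temp], axis=1)
    # Drop the original categorical column now that it is encoded.
    ds1.drop([x], axis=1, inplace=True)
    return ds1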

ds2 = change_to_numeric("model", ds1)

ds2.head()

# ***Each model value becomes its own 0/1 indicator column, which will help later in machine learning.***

# # Checking number of rows in the new dataframe

ds2.info()

# **The number of rows has not changed.**

# **Checking the values for "burgman 650 Executive"**

ds2["burgman 650 Executive"].unique()

# **as you can see the values changed to 0 and 1 and that's going to help in machine learning**
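# The one-hot encoding step above can be tried on a small frame. A minimal sketch, assuming a hypothetical toy frame and a hypothetical helper name `one_hot`; the real data comes from Data_Set1.csv. Passing `dtype=int` is an addition here: recent pandas versions return boolean indicator columns by default, so this keeps the 0/1 values described above.

```python
import pandas as pd

# Hypothetical toy data, for illustration only.
toy = pd.DataFrame({"model": ["R1", "Burgman", "R1"], "price": [1500, 900, 1700]})


def one_hot(frame, column):
    """Replace `column` with one 0/1 indicator column per distinct value."""
    dummies = pd.get_dummies(frame[column], dtype=int)  # 0/1 instead of booleans
    return pd.concat([frame.drop(columns=[column]), dummies], axis=1)


encoded = one_hot(toy, "model")
print(encoded)
```

# The row count is unchanged; only the encoded column is replaced by its indicator columns.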

ds3 = ds2.copy()

# # Understand your data

ds.describe().T.style.background_gradient(cmap="magma")

# # Data Cleansing and Improvement

# # Find if there is some duplicated data

ds.loc[ds.duplicated()]

# # Checking if there's any duplicated value

ds.duplicated().sum()

# # Process Missing data

ds.isna().sum()

x = ds.isna().sum()

# Print only the columns that contain missing values.
for column, missing in x.items():
    if missing > 0:
        print(column, missing)

# **As we can see, there is no missing data**

temp = ds[ds["price"].isna()]

temp

ds.isna().sum()

# **We use unique() to see the array of values and the dtype of the "model" column for the rows matching "BMW"**

ds1 = ds[ds["model"] == "BMW"].copy()

ds1["model"].unique()

# # The following scatter plot shows the relationship between brand and price

xd = ds["brand"]

yd = ds["price"]

plt.scatter(xd, yd)

plt.show()

# # The following plots show the price in two different ways: 1) distribution, 2) spread

plt.figure(figsize=(20, 8))

plt.subplot(1, 2, 1)

plt.title("Motorcycles Price Distribution")

sns.histplot(ds.price)

plt.subplot(1, 2, 2)

plt.title("Motorcycles Price Spread")

sns.boxplot(y=ds.price)

plt.show()

# # The following plots show the frequency of each motorcycle brand, year, and model

plt.figure(figsize=(25, 6))

plt.subplot(1, 3, 1)

plt1 = ds.brand.value_counts().plot(kind="bar")

plt.title("Motorcycles Brands")

plt1.set(xlabel="Motorcycles Brand", ylabel="Frequency")

plt.subplot(1, 3, 2)

plt1 = ds.year.value_counts().plot(kind="bar")

plt.title(" Year")

plt1.set(xlabel=" Year", ylabel="Frequency")

plt.subplot(1, 3, 3)

plt1 = ds.model.value_counts().plot(kind="bar")

plt.title("Motorcycles Model")

plt1.set(xlabel="Motorcycles Model", ylabel="Frequency")

plt.show()

# # We drop the "category" column and show the dataframe.

ds1 = ds.copy()

ds1 = ds1.drop(["category"], axis=1)

ds1.head()

feat = []

feat_val = []

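def cat_to_no(ds):
    # Names of all non-numeric columns.
    categ = list(ds.select_dtypes(exclude="number"))
    feat.append(categ)
    # "kilometers" holds range strings and is converted separately.
    categ.remove("kilometers")
    for cat in categ:
        f = list(ds[cat].unique())
        feat_val.append(f)
        # Replace each category by its position in the list of unique values.
        for x in f:
            val = f.index(x)
            ds[cat] = ds[cat].replace(x, val)
    return ds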

ds1 = cat_to_no(ds1)

ds1.head()

# ***This function converts categorical columns to numbers by assigning an integer code to each category. It first collects the non-numeric columns and excludes 'kilometers', which holds range strings and is converted separately. It fills two lists: feat (the categorical column names) and feat_val (the unique values of each column). Each unique value is then replaced by its position in that column's list of unique values.

# This is useful for machine learning models that require numerical input, but the integer codes imply an ordering that the categories do not have, so the encoding should be checked against the data and the task at hand.***
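# The same position-based encoding can also be sketched with pandas' built-in `pd.factorize`, which numbers values in order of first appearance. This is a swapped-in alternative, not the notebook's method; the toy frame and the name `encode_categories` are hypothetical.

```python
import pandas as pd

# Hypothetical toy data, for illustration only.
toy = pd.DataFrame(
    {"brand": ["BMW", "Suzuki", "BMW"], "condition": ["used", "new", "used"]}
)


def encode_categories(frame, columns):
    """Replace each listed column by integer codes; also return the code tables."""
    out = frame.copy()
    mappings = {}
    for col in columns:
        # codes[i] is the position of out[col][i] in uniques.
        codes, uniques = pd.factorize(out[col])
        out[col] = codes
        mappings[col] = list(uniques)
    return out, mappings


encoded, mappings = encode_categories(toy, ["brand", "condition"])
print(encoded)
print(mappings)
```

# Here `mappings` plays the role of `feat_val`: the code of a value is its index in the list. Note that `pd.factorize` encodes missing values as -1.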

# ***Take the first element of feat, which is the list of categorical column names.***

feat = feat[0]

feat

# ***Show the unique values recorded for ['brand', 'model', 'condition'].***

feat_val

ds["kilometers"] = ds["kilometers"].replace("200,000", "200,000-200,000")

ds["kilometers"] = ds["kilometers"].replace("0", "0-0")

f = list(ds["kilometers"].unique())

f

# **The code above replaces '200,000' with '200,000-200,000' and '0' with '0-0' in the 'kilometers' column, so that every value has the form 'low-high'. It then stores the list of unique values of the 'kilometers' column in the variable 'f'.**

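for x in f:
    # Split the "low-high" range and strip the thousands separators.
    k1 = x.split("-")[0]
    k2 = x.split("-")[1]
    k1 = k1.replace(",", "")
    k2 = k2.replace(",", "")
    k1 = int(k1)
    k2 = int(k2)
    # Representative value: midpoint of the range, plus one.
    val = k1 + 1 + (k2 - k1) // 2
    print(x, val)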
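# The range-to-number conversion in the loop above can be written as a reusable function. A minimal sketch: the name `range_to_value` and the middle example string are hypothetical, and the formula is copied from the loop.

```python
def range_to_value(text):
    """Convert a 'low-high' string (with thousands separators) to one integer."""
    low_text, high_text = text.split("-")
    low = int(low_text.replace(",", ""))
    high = int(high_text.replace(",", ""))
    # Same formula as the loop above: midpoint of the range, plus one.
    return low + 1 + (high - low) // 2


# "1,000-9,999" is a made-up range for illustration.
examples = ["0-0", "1,000-9,999", "200,000-200,000"]
converted = {text: range_to_value(text) for text in examples}
print(converted)
```

# With pandas, such a function could be applied to the whole column with `ds["kilometers"].map(range_to_value)`, assuming every value has already been normalized to the 'low-high' form.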