content

stringlengths 73

1.12M

| license

stringclasses 3

values | path

stringlengths 9

197

| repo_name

stringlengths 7

106

| chain_length

int64 1

144

|

|---|---|---|---|---|

<jupyter_start><jupyter_text># Text Classification Model for Movie Review Sentiment Analysis Using TensorFlow Take 5

### David Lowe

### December 25, 2020

Template Credit: Adapted from a template made available by Dr. Jason Brownlee of Machine Learning Mastery. [https://machinelearningmastery.com/]

SUMMARY: This project aims to construct a text classification model using a neural network and document the end-to-end steps using a template. The Movie Review Sentiment Analysis dataset is a binary classification situation where we attempt to predict one of the two possible outcomes.

Additional Notes: This script is a replication, with some small modifications, of Dr. Jason Brownlee's blog post, Deep Convolutional Neural Network for Sentiment Analysis (https://machinelearningmastery.com/develop-word-embedding-model-predicting-movie-review-sentiment/). I plan to leverage Dr. Brownlee's tutorial and build a TensorFlow-based text classification notebook template for future modeling of similar datasets.

INTRODUCTION: The Movie Review Data is a collection of movie reviews retrieved from the imdb.com website in the early 2000s by Bo Pang and Lillian Lee. The reviews were collected and made available as part of their research on natural language processing. The dataset comprises 1,000 positive and 1,000 negative movie reviews drawn from an archive of the rec.arts.movies.reviews newsgroup hosted at IMDB. The authors refer to this dataset as the 'polarity dataset.'

We will use the last 100 positive reviews and the last 100 negative reviews as a test set (200 reviews) and the remaining 1,800 reviews as the training dataset. This is a 90% train, 10% split of the data.

In iteration Take1, we constructed the necessary code modules to handle the tasks of loading text, cleaning text, and vocabulary development.

In iteration Take2, we constructed a bag-of-words model and analyzed it with a simple multi-layer perceptron network.

In iteration Take3, we fine-tuned the model using different Tokenizer modes in the Keras API.

In iteration Take4, we will fine-tune the model using word embedding to fit a deep learning model.

In this Take5 iteration, we will fine-tune the model using the Word2Vec algorithm for developing a standalone word embedding mechanism.

ANALYSIS: From iteration Take2, the baseline model's performance achieved an average accuracy score of 85.81% after 25 epochs with ten iterations of cross-validation. Furthermore, the final model processed the test dataset with an accuracy measurement of 91.50%.

From iteration Take3, the best model's performance (using the frequency Tokenizer mode) achieved an average accuracy score of 86.06% after 25 epochs with ten iterations of cross-validation. Furthermore, the final model processed the test dataset with an accuracy measurement of 86.50%.

From iteration Take4, the best model's performance (using the word embedding with Keras) achieved an average accuracy score of 84.17% after ten epochs with ten iterations of cross-validation. Furthermore, the final model processed the test dataset with an accuracy measurement of 88.50%.

In this Take5 iteration, the best model's performance (using the Word2Vec word embedding algorithm) achieved an average accuracy score of 52.61% after ten epochs with ten iterations of cross-validation. Furthermore, the final model processed the test dataset with an accuracy measurement of 49.00%.

CONCLUSION: In this iteration, the Word2Vec word embedding algorithm appeared unsuitable for modeling this dataset. We should consider experimenting with other word embedding techniques for further modeling.

Dataset Used: Movie Review Sentiment Analysis Dataset

Dataset ML Model: Binary class text classification with text-oriented features

Dataset Reference: https://www.cs.cornell.edu/home/llee/papers/cutsent.pdf and http://www.cs.cornell.edu/people/pabo/movie-review-data/review_polarity.tar.gz

One potential source of performance benchmarks: https://machinelearningmastery.com/develop-word-embedding-model-predicting-movie-review-sentiment/

A deep-learning text classification project generally can be broken down into five major tasks:

1. Prepare Environment

2. Load and Prepare Text Data

3. Define and Train Models

4. Evaluate and Optimize Models

5. Finalize Model and Make Predictions# Task 1 - Prepare Environment<jupyter_code># # Install the packages to support accessing environment variable and SQL databases

# !pip install python-dotenv PyMySQL boto3

# # Retrieve GPU configuration information from Colab

# gpu_info = !nvidia-smi

# gpu_info = '\n'.join(gpu_info)

# if gpu_info.find('failed') >= 0:

# print('Select the Runtime → "Change runtime type" menu to enable a GPU accelerator, ')

# print('and then re-execute this cell.')

# else:

# print(gpu_info)

# # Retrieve memory configuration information from Colab

# from psutil import virtual_memory

# ram_gb = virtual_memory().total / 1e9

# print('Your runtime has {:.1f} gigabytes of available RAM\n'.format(ram_gb))

# if ram_gb < 20:

# print('To enable a high-RAM runtime, select the Runtime → "Change runtime type"')

# print('menu, and then select High-RAM in the Runtime shape dropdown. Then, ')

# print('re-execute this cell.')

# else:

# print('You are using a high-RAM runtime!')

# # Direct Colab to use TensorFlow v2

# %tensorflow_version 2.x

# Retrieve CPU information from the system

ncpu = !nproc

print("The number of available CPUs is:", ncpu[0])<jupyter_output>The number of available CPUs is: 4

<jupyter_text>## 1.a) Load libraries and modules<jupyter_code># Set the random seed number for reproducible results

seedNum = 888

# Load libraries and packages

import random

random.seed(seedNum)

import numpy as np

np.random.seed(seedNum)

import pandas as pd

import matplotlib.pyplot as plt

import matplotlib.image as mpimg

import os

import sys

import boto3

import shutil

import string

import nltk

import gensim

from nltk.corpus import stopwords

from collections import Counter

from datetime import datetime

from dotenv import load_dotenv

from sklearn.model_selection import RepeatedKFold

from sklearn.metrics import accuracy_score

from sklearn.metrics import confusion_matrix

from sklearn.metrics import classification_report

import tensorflow as tf

tf.random.set_seed(seedNum)

from tensorflow import keras

from tensorflow.keras import layers

from tensorflow.keras.preprocessing.text import Tokenizer

from tensorflow.keras.preprocessing.sequence import pad_sequences

from tensorflow.keras.callbacks import ReduceLROnPlateau

nltk.download('popular')<jupyter_output>[nltk_data] Downloading collection 'popular'

[nltk_data] |

[nltk_data] | Downloading package cmudict to

[nltk_data] | /home/pythonml/nltk_data...

[nltk_data] | Package cmudict is already up-to-date!

[nltk_data] | Downloading package gazetteers to

[nltk_data] | /home/pythonml/nltk_data...

[nltk_data] | Package gazetteers is already up-to-date!

[nltk_data] | Downloading package genesis to

[nltk_data] | /home/pythonml/nltk_data...

[nltk_data] | Package genesis is already up-to-date!

[nltk_data] | Downloading package gutenberg to

[nltk_data] | /home/pythonml/nltk_data...

[nltk_data] | Package gutenberg is already up-to-date!

[nltk_data] | Downloading package inaugural to

[nltk_data] | /home/pythonml/nltk_data...

[nltk_data] | Package inaugural is already up-to-date!

[nltk_data] | Downloading package movie_reviews to

[nltk_data] | /home/pythonml/nltk_data...

[nltk_data] | Package movie_reviews is a[...]<jupyter_text>## 1.b) Set up the controlling parameters and functions<jupyter_code># Begin the timer for the script processing

startTimeScript = datetime.now()

# Set up the number of CPU cores available for multi-thread processing

n_jobs = 2

# Set up the flag to stop sending progress emails (setting to True will send status emails!)

notifyStatus = False

# Set Pandas options

pd.set_option("display.max_rows", None)

pd.set_option("display.max_columns", None)

pd.set_option("display.width", 140)

# Set the percentage sizes for splitting the dataset

test_set_size = 0.2

val_set_size = 0.25

# Set the number of folds for cross validation

n_folds = 5

n_iterations = 1

# Set various default modeling parameters

default_loss = 'binary_crossentropy'

default_metrics = ['accuracy']

default_optimizer = tf.keras.optimizers.Adam(learning_rate=0.001)

default_kernel_init = tf.keras.initializers.RandomNormal(seed=seedNum)

default_epochs = 10

default_batch_size = 16

default_vector_space = 100

default_filters = 128

default_kernel_size = 5

default_pool_size = 2

default_neighbors = 5

# Define the labels to use for graphing the data

train_metric = "accuracy"

validation_metric = "val_accuracy"

train_loss = "loss"

validation_loss = "val_loss"

# Check the number of GPUs accessible through TensorFlow

print('Num GPUs Available:', len(tf.config.list_physical_devices('GPU')))

# Print out the TensorFlow version for confirmation

print('TensorFlow version:', tf.__version__)

# Set up the parent directory location for loading the dotenv files

# useColab = True

# if useColab:

# # Mount Google Drive locally for storing files

# from google.colab import drive

# drive.mount('/content/gdrive')

# gdrivePrefix = '/content/gdrive/My Drive/Colab_Downloads/'

# env_path = '/content/gdrive/My Drive/Colab Notebooks/'

# dotenv_path = env_path + "python_script.env"

# load_dotenv(dotenv_path=dotenv_path)

# Set up the dotenv file for retrieving environment variables

# useLocalPC = True

# if useLocalPC:

# env_path = "/Users/david/PycharmProjects/"

# dotenv_path = env_path + "python_script.env"

# load_dotenv(dotenv_path=dotenv_path)

# Set up the email notification function

def status_notify(msg_text):

access_key = os.environ.get('SNS_ACCESS_KEY')

secret_key = os.environ.get('SNS_SECRET_KEY')

aws_region = os.environ.get('SNS_AWS_REGION')

topic_arn = os.environ.get('SNS_TOPIC_ARN')

if (access_key is None) or (secret_key is None) or (aws_region is None):

sys.exit("Incomplete notification setup info. Script Processing Aborted!!!")

sns = boto3.client('sns', aws_access_key_id=access_key, aws_secret_access_key=secret_key, region_name=aws_region)

response = sns.publish(TopicArn=topic_arn, Message=msg_text)

if response['ResponseMetadata']['HTTPStatusCode'] != 200 :

print('Status notification not OK with HTTP status code:', response['ResponseMetadata']['HTTPStatusCode'])

if notifyStatus: status_notify('(TensorFlow Text Classification) Task 1 - Prepare Environment has begun on ' + datetime.now().strftime('%A %B %d, %Y %I:%M:%S %p'))

# Reset the random number generators

def reset_random(x):

random.seed(x)

np.random.seed(x)

tf.random.set_seed(x)

if notifyStatus: status_notify('(TensorFlow Text Classification) Task 1 - Prepare Environment completed on ' + datetime.now().strftime('%A %B %d, %Y %I:%M:%S %p'))<jupyter_output><empty_output><jupyter_text># Task 2 - Load and Prepare Text Data<jupyter_code>if notifyStatus: status_notify('(TensorFlow Text Classification) Task 2 - Load and Prepare Text Data has begun on ' + datetime.now().strftime('%A %B %d, %Y %I:%M:%S %p'))<jupyter_output><empty_output><jupyter_text>## 2.a) Download Text Data Archive<jupyter_code># Clean up the old files and download directories before receiving new ones

!rm -rf staging/

!rm review_polarity.tar.gz

!rm vocabulary.txt

!wget https://dainesanalytics.com/datasets/cornell-movie-review-polarity/review_polarity.tar.gz<jupyter_output>--2020-12-13 00:26:39-- https://dainesanalytics.com/datasets/cornell-movie-review-polarity/review_polarity.tar.gz

Resolving dainesanalytics.com (dainesanalytics.com)... 13.225.150.103, 13.225.150.58, 13.225.150.31, ...

Connecting to dainesanalytics.com (dainesanalytics.com)|13.225.150.103|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 3127238 (3.0M) [application/x-gzip]

Saving to: ‘review_polarity.tar.gz’

review_polarity.tar 100%[===================>] 2.98M 11.8MB/s in 0.3s

2020-12-13 00:26:39 (11.8 MB/s) - ‘review_polarity.tar.gz’ saved [3127238/3127238]

<jupyter_text>## 2.b) Splitting Data for Training and Validation<jupyter_code>staging_dir = 'staging/'

!mkdir staging/

!mkdir staging/testing/

!mkdir staging/testing/pos/

!mkdir staging/testing/neg/

local_archive = 'review_polarity.tar.gz'

shutil.unpack_archive(local_archive, staging_dir)

training_dir = 'staging/txt_sentoken/'

testing_dir = 'staging/testing/'

classA_name = 'pos'

classB_name = 'neg'

# Brief listing of training text files for both classes before splitting

training_classA_dir = os.path.join(training_dir, classA_name)

training_classA_files = os.listdir(training_classA_dir)

print('Number of training images for', classA_name, ':', len(training_classA_files))

print('Training samples for', classA_name, ':', training_classA_files[:10])

training_classB_dir = os.path.join(training_dir, classB_name)

training_classB_files = os.listdir(training_classB_dir)

print('Number of training images for', classB_name, ':', len(training_classB_files))

print('Training samples for', classB_name, ':', training_classB_files[:10])

# Move the testing files from the training directories

testing_classA_dir = os.path.join(testing_dir, classA_name)

testing_classB_dir = os.path.join(testing_dir, classB_name)

file_prefix = 'cv9'

i = 0

for text_file in training_classA_files:

if text_file.startswith(file_prefix):

source_file = training_classA_dir + '/' + text_file

dest_file = testing_classA_dir + '/' + text_file

# print('Moving file from', source_file, 'to', dest_file)

shutil.move(source_file, dest_file)

i = i + 1

print('Number of', classA_name, 'files moved:', i, '\n')

i = 0

for text_file in training_classB_files:

if text_file.startswith(file_prefix):

source_file = training_classB_dir + '/' + text_file

dest_file = testing_classB_dir + '/' + text_file

# print('Moving file from', source_file, 'to', dest_file)

shutil.move(source_file, dest_file)

i = i + 1

print('Number of', classB_name, 'files moved:', i)

# Brief listing of training text files for both classes after splitting

training_classA_files = os.listdir(training_classA_dir)

print('Number of training files for', classA_name, ':', len(training_classA_files))

print('Training samples for', classA_name, ':', training_classA_files[:10])

training_classB_files = os.listdir(training_classB_dir)

print('Number of training files for', classB_name, ':', len(training_classB_files))

print('Training samples for', classB_name, ':', training_classB_files[:10])

# Brief listing of testing text files for both classes after splitting

testing_classA_files = os.listdir(testing_classA_dir)

print('Number of testing files for', classA_name, ':', len(testing_classA_files))

print('Test samples for', classA_name, ':', testing_classA_files[:10])

testing_classB_files = os.listdir(testing_classB_dir)

print('Number of testing files for', classB_name, ':', len(testing_classB_files))

print('Test samples for', classB_name, ':', testing_classB_files[:10])<jupyter_output>Number of testing files for pos : 100

Test samples for pos : ['cv900_10331.txt', 'cv901_11017.txt', 'cv902_12256.txt', 'cv903_17822.txt', 'cv904_24353.txt', 'cv905_29114.txt', 'cv906_11491.txt', 'cv907_3541.txt', 'cv908_16009.txt', 'cv909_9960.txt']

Number of testing files for neg : 100

Test samples for neg : ['cv900_10800.txt', 'cv901_11934.txt', 'cv902_13217.txt', 'cv903_18981.txt', 'cv904_25663.txt', 'cv905_28965.txt', 'cv906_12332.txt', 'cv907_3193.txt', 'cv908_17779.txt', 'cv909_9973.txt']

<jupyter_text>## 2.c) Load Document and Build Vocabulary<jupyter_code># load doc into memory

def load_doc(filename):

# open the file as read only

file = open(filename, 'r')

# read all text

text = file.read()

# close the file

file.close()

return text

# turn a doc into clean tokens

def clean_doc(doc):

# split into tokens by white space

tokens = doc.split()

# remove punctuation from each token

table = str.maketrans('', '', string.punctuation)

tokens = [w.translate(table) for w in tokens]

# remove remaining tokens that are not alphabetic

tokens = [word for word in tokens if word.isalpha()]

# filter out stop words

stop_words = set(stopwords.words('english'))

tokens = [w for w in tokens if not w in stop_words]

# filter out short tokens

tokens = [word for word in tokens if len(word) > 1]

return tokens

# load doc and add to vocab

def add_doc_to_vocab(filename, vocab):

# load doc

doc = load_doc(filename)

# clean doc

tokens = clean_doc(doc)

# update counts

vocab.update(tokens)

# load all docs in a directory

def build_vocab_from_docs(directory, vocab):

# walk through all files in the folder

i = 0

print('Processing the text files and showing the first 10...')

for filename in os.listdir(directory):

# skip files that do not have the right extension

if not filename.endswith(".txt"):

continue

# create the full path of the file to open

path = directory + '/' + filename

# add doc to vocab

add_doc_to_vocab(path, vocab)

i = i + 1

if i < 10: print('Loaded %s' % path)

print('Total number of text files loaded:', i, '\n')

# define vocab

vocab = Counter()

# add all docs to vocab

build_vocab_from_docs(training_classA_dir, vocab)

build_vocab_from_docs(training_classB_dir, vocab)

# print the size of the vocab

print('The total number of words in the vocabulary:', len(vocab))

# print the top words in the vocab

top_words = 50

print('The top', top_words, 'words in the vocabulary:\n', vocab.most_common(top_words))

# keep tokens with a min occurrence

min_occurane = 2

tokens = [k for k,c in vocab.items() if c >= min_occurane]

print('The number of words with the minimum appearance:', len(tokens))

# save list to file

def save_list(lines, filename):

# convert lines to a single blob of text

data = '\n'.join(lines)

# open file

file = open(filename, 'w')

# write text

file.write(data)

# close file

file.close()

# save tokens to a vocabulary file

save_list(tokens, 'vocabulary.txt')<jupyter_output><empty_output><jupyter_text>## 2.d) Prepare Word Embedding using Word2Vec Algorithm<jupyter_code># load the vocabulary

vocab_filename = 'vocabulary.txt'

vocab = load_doc(vocab_filename)

vocab = vocab.split()

vocab = set(vocab)

print('Number of tokens in the vocabulary:', len(vocab))

# turn a doc into clean tokens

def doc_to_clean_lines(doc, vocab):

clean_lines = list()

lines = doc.splitlines()

for line in lines:

# split into tokens by white space

tokens = line.split()

# remove punctuation from each token

table = str.maketrans('', '', string.punctuation)

tokens = [w.translate(table) for w in tokens]

# filter out tokens not in vocab

tokens = [w for w in tokens if w in vocab]

clean_lines.append(tokens)

return clean_lines

# load all docs in a directory

def load_lines_from_docs(directory, vocab):

lines = list()

# walk through all files in the folder

for filename in os.listdir(directory):

# create the full path of the file to open

path = directory + '/' + filename

# load and clean the doc

doc = load_doc(path)

doc_lines = doc_to_clean_lines(doc, vocab)

# add lines to list

lines += doc_lines

return lines

# load training data

positive_train_cases = load_lines_from_docs(training_classA_dir, vocab)

print('The number of positive sentences processed:', len(positive_train_cases))

negative_train_cases = load_lines_from_docs(training_classB_dir, vocab)

print('The number of negative sentences processed:', len(negative_train_cases))

train_sentences = negative_train_cases + positive_train_cases

print('Total training sentences: %d' % len(train_sentences))

# train word2vec model

embed_model = gensim.models.Word2Vec(train_sentences, size=default_vector_space, window=default_neighbors, workers=n_jobs, min_count=1)

# summarize vocabulary size in model

words = list(embed_model.wv.vocab)

print('Vocabulary size: %d' % len(words))

# save model in ASCII (word2vec) format

filename = 'embedding_word2vec.txt'

embed_model.wv.save_word2vec_format(filename, binary=False)<jupyter_output><empty_output><jupyter_text>## 2.e) Create Tokenizer and Encode the Input Text<jupyter_code># load doc, clean and return line of tokens

def doc_to_line(filename, vocab):

# load the doc

doc = load_doc(filename)

# clean doc

tokens = clean_doc(doc)

# filter by vocab

tokens = [w for w in tokens if w in vocab]

return ' '.join(tokens)

# load all docs in a directory

def process_docs_to_lines(directory, vocab):

lines = list()

# walk through all files in the folder

for filename in os.listdir(directory):

# create the full path of the file to open

path = directory + '/' + filename

# load and clean the doc

line = doc_to_line(path, vocab)

# add to list

lines.append(line)

return lines

# prepare encoding of docs

def encode_train_data(train_docs, maxlen):

# create the tokenizer

tokenizer = Tokenizer()

# fit the tokenizer on the documents

tokenizer.fit_on_texts(train_docs)

# encode training data set

encoded_docs = tokenizer.texts_to_sequences(train_docs)

# pad sequences

train_encoded = pad_sequences(encoded_docs, maxlen=maxlen, padding='post')

return train_encoded, tokenizer

# Load all training cases

positive_train_cases = process_docs_to_lines(training_classA_dir, vocab)

print('The number of positive reviews processed:', len(positive_train_cases))

negative_train_cases = process_docs_to_lines(training_classB_dir, vocab)

print('The number of negative reviews processed:', len(negative_train_cases))

training_docs = negative_train_cases + positive_train_cases

# Get maximum doc length for padding sequences

max_length = max([len(s.split()) for s in training_docs])

print('The maximum document length:', max_length)

# encode training and validation datasets

X_train, vocab_tokenizer = encode_train_data(training_docs, max_length)

print('The shape of the encoded training dataset:', X_train.shape)

y_train = np.array([0 for _ in range(len(negative_train_cases))] + [1 for _ in range(len(positive_train_cases))])

print('The shape of the encoded training classes:', y_train.shape)

if notifyStatus: status_notify('(TensorFlow Text Classification) Task 2 - Load and Prepare Text Data completed on ' + datetime.now().strftime('%A %B %d, %Y %I:%M:%S %p'))<jupyter_output><empty_output><jupyter_text># Task 3 - Define and Train Models<jupyter_code>if notifyStatus: status_notify('(TensorFlow Text Classification) Task 3 - Define and Train Models has begun on ' + datetime.now().strftime('%A %B %d, %Y %I:%M:%S %p'))

# Define the default numbers of input/output for modeling

num_outputs = 1

# load embedding as a dict

def load_embedding(filename):

# load embedding into memory, skip first line

file = open(filename,'r')

lines = file.readlines()[1:]

file.close()

# create a map of words to vectors

embedding = dict()

for line in lines:

parts = line.split()

# key is string word, value is numpy array for vector

embedding[parts[0]] = np.asarray(parts[1:], dtype='float32')

return embedding

# create a weight matrix for the Embedding layer from a loaded embedding

def get_weight_matrix(embedding, vocab):

# total vocabulary size plus 0 for unknown words

vocab_size = len(vocab) + 1

# define weight matrix dimensions with all 0

weight_matrix = np.zeros((vocab_size, default_vector_space))

# step vocab, store vectors using the Tokenizer's integer mapping

for word, i in vocab.items():

weight_matrix[i] = embedding.get(word)

return weight_matrix

# load embedding from file

raw_embedding = load_embedding('embedding_word2vec.txt')

# get vectors in the right order

embedding_vectors = get_weight_matrix(raw_embedding, vocab_tokenizer.word_index)

# define vocabulary size (largest integer value)

vocab_size = len(vocab_tokenizer.word_index) + 1

print('The maximum vocabulary size is:', vocab_size)

# create the embedding layer

embedding_layer = keras.layers.Embedding(vocab_size, default_vector_space, weights=[embedding_vectors], input_length=max_length, trainable=False)

# Define the baseline model for benchmarking

def create_nn_model(n_inputs=max_length, n_outputs=num_outputs, embed_layer=embedding_layer, conv1_filters=default_filters, conv1_kernels=default_kernel_size,

n_pools=default_pool_size, opt_param=default_optimizer, init_param=default_kernel_init):

nn_model = keras.Sequential([

embed_layer,

layers.Conv1D(filters=conv1_filters, kernel_size=conv1_kernels, activation='relu'),

layers.MaxPooling1D(pool_size=n_pools),

layers.Flatten(),

layers.Dense(n_outputs, activation='sigmoid', kernel_initializer=init_param)

])

nn_model.compile(loss=default_loss, optimizer=opt_param, metrics=default_metrics)

return nn_model

# Initialize the default model and get a baseline result

startTimeModule = datetime.now()

results = list()

iteration = 0

cv = RepeatedKFold(n_splits=n_folds, n_repeats=n_iterations, random_state=seedNum)

for train_ix, val_ix in cv.split(X_train):

feature_train, feature_validation = X_train[train_ix], X_train[val_ix]

target_train, target_validation = y_train[train_ix], y_train[val_ix]

reset_random(seedNum)

baseline_model = create_nn_model()

baseline_model.fit(feature_train, target_train, epochs=default_epochs, batch_size=default_batch_size, verbose=1)

model_metric = baseline_model.evaluate(feature_validation, target_validation, verbose=0)[1]

iteration = iteration + 1

print('Accuracy measurement from iteration %d >>> %.2f%%' % (iteration, model_metric*100),'\n')

results.append(model_metric)

validation_score = np.mean(results)

validation_variance = np.std(results)

print('Average model accuracy from all validation iterations: %.2f%% (%.2f%%)' % (validation_score*100, validation_variance*100))

print('Total time for model fitting and cross validating:', (datetime.now() - startTimeModule))

if notifyStatus: status_notify('(TensorFlow Text Classification) Task 3 - Define and Train Models completed on ' + datetime.now().strftime('%A %B %d, %Y %I:%M:%S %p'))<jupyter_output><empty_output><jupyter_text># Task 4 - Evaluate and Optimize Models<jupyter_code>if notifyStatus: status_notify('(TensorFlow Text Classification) Task 4 - Evaluate and Optimize Models has begun on ' + datetime.now().strftime('%A %B %d, %Y %I:%M:%S %p'))

# Not applicable for this iteration of modeling

if notifyStatus: status_notify('(TensorFlow Text Classification) Task 4 - Evaluate and Optimize Models completed on ' + datetime.now().strftime('%A %B %d, %Y %I:%M:%S %p'))<jupyter_output><empty_output><jupyter_text># Task 5 - Finalize Model and Make Predictions<jupyter_code>if notifyStatus: status_notify('(TensorFlow Text Classification) Task 5 - Finalize Model and Make Predictions has begun on ' + datetime.now().strftime('%A %B %d, %Y %I:%M:%S %p'))

# prepare encoding of docs

def encode_test_data(test_docs, maxlen, tokenizer):

# encode training data set

encoded_docs = tokenizer.texts_to_sequences(test_docs)

# pad sequences

test_encoded = pad_sequences(encoded_docs, maxlen=maxlen, padding='post')

return test_encoded

# load all validation cases

positive_test_cases = process_docs_to_lines(testing_classA_dir, vocab)

print('The number of positive reviews processed:', len(positive_test_cases))

negative_test_cases = process_docs_to_lines(testing_classB_dir, vocab)

print('The number of negative reviews processed:', len(negative_test_cases))

testing_docs = negative_test_cases + positive_test_cases

# encode training and validation datasets

X_test = encode_test_data(testing_docs, max_length, vocab_tokenizer)

print('The shape of the encoded test dataset:', X_test.shape)

y_test = np.array([0 for _ in range(len(negative_test_cases))] + [1 for _ in range(len(positive_test_cases))])

print('The shape of the encoded test classes:', y_test.shape)

# Create the final model for evaluating the test dataset

reset_random(seedNum)

final_model = create_nn_model()

final_model.fit(X_train, y_train, epochs=default_epochs, batch_size=default_batch_size, verbose=1)

# Summarize the final model

final_model.summary()

# test_predictions = final_model.predict(X_test, batch_size=default_batch, verbose=1)

test_predictions = (final_model.predict(X_test) > 0.5).astype("int32").ravel()

print('Accuracy Score:', accuracy_score(y_test, test_predictions))

print(confusion_matrix(y_test, test_predictions))

print(classification_report(y_test, test_predictions))

if notifyStatus: status_notify('(TensorFlow Text Classification) Task 5 - Finalize Model and Make Predictions completed on ' + datetime.now().strftime('%A %B %d, %Y %I:%M:%S %p'))

print ('Total time for the script:',(datetime.now() - startTimeScript))<jupyter_output>Total time for the script: 0:11:48.403900

|

non_permissive

|

/py_nlp_movie_review_sentiment/py_nlp_movie_review_sentiment_take5.ipynb

|

daines-analytics/nlp-projects

| 12 |

<jupyter_start><jupyter_text>### Nacachian Mauro -- 99619

## Simulacion Monte Carlo para ambos tipos de repetidores

Se simula el sistema analogico y digital para 9 etapas y para 5 a 25 dB. Se usan 1000 muestras <jupyter_code>

import matplotlib.pyplot as plt

import numpy as np

from scipy import stats

from copy import copy

# Funcion indicador para el sistema analogico

# devuelve 1 si el simbolo recibido es distinto a lo recibido

# usando la toma de decision en la utlima etapa

#

def etapaDecision(x,yn):

if x > 0 and yn < 0:

return 1

if x < 0 and yn >= 0:

return 1

else:

return 0

def monteCarloDig(xn, x1, k):

sum = 0

for i in range(k):

if xn[i] != x1[i]:

sum = sum + 1

return sum/k

def monteCarloAnalog(yn, x1, k):

sum = 0

for i in range(k):

sum = sum + etapaDecision(x1[i], yn[i])

return sum/k

def probErrorAnalog(snr,n):

return stats.norm.sf(np.sqrt( 1 / (pow((snr+1) / snr,n) - 1) ))

def probErrorDigital(snr,n):

return (1- pow((1 - 2 * stats.norm.sf( np.sqrt(snr) ) ), n) ) / 2

muestras = 1000 ## cantidad de realizaciones

n = 9 ## cantidad de etapas

h = 1

A = 1

snr_db = np.arange(5,25,1) # en dB

snr = 10**((snr_db)/10)

pErrorAnalog = []

pErrorDig = []

for k in range(np.size(snr_db)):

sigma = pow(h, 2) * pow(A, 2) / snr[k]

Wi = np.random.randn(muestras, n) * np.sqrt(sigma)

X1 = np.random.choice([A, -A], muestras, p = [0.5, 0.5])

#######################

## Sistema Analogico ##

#######################

G = np.sqrt(snr[k] / (snr[k] + 1)) / h

ruido = []

for j in range(muestras):

aux = 0

for i in range(n):

aux = aux + Wi[j][i] * pow(G,n-(i+1)) * pow(h,n-(i+1))

ruido.append(aux)

Yn = []

for i in range(muestras):

Yn = np.append(Yn,pow(G,n-1) * pow(h,n) * X1[i] + ruido[i])

pErrorAnalog = np.append(pErrorAnalog, monteCarloAnalog(Yn, X1, muestras))

#####################

## Sistema Digital ##

#####################

#W = np.random.randn(muestras, n) * np.sqrt(sigma)

Ynd = X1 * h + Wi[:,0] # Accedo a las realizaciones de la primer etapa

Xn = copy(X1)

for i in range(muestras):

for j in range(n):

Ynd[i] = Xn[i] * h + Wi[i][j]

# Toma de decision #

if Ynd[i] < 0:

Xn[i] = -A

else:

Xn[i] = A

pErrorDig = np.append(pErrorDig, monteCarloDig(Xn, X1, muestras))

# Probabilidades por formula

#

pErrorDigTeorica = probErrorDigital(snr, n)

pErrorAnalogTeorica = probErrorAnalog(snr, n)

# Graficos comparativos para n = 9

#

plt.figure(figsize = (15,10))

plt.plot(snr_db, pErrorAnalogTeorica, '--o', label = 'Probabilidad teorica', color = 'black', linewidth = 2)

plt.plot(snr_db, pErrorAnalog, '-^', label = 'Probabilidad simulada', color = 'red', linewidth = 2)

#plt.yscale('log')

plt.legend()

plt.xticks(snr_db)

plt.xlabel('SNR [dB]')

plt.ylabel('Probabilidad de error')

plt.grid(b = True, which = 'major', color = 'black', linestyle = '-', linewidth = 0.4)

plt.grid(b = True, which = 'minor', color = 'black', linestyle = '-', linewidth = 0.4)

plt.legend(loc = 'best')

plt.title('Probabilidad de error para el sistema analogica con n = 9')

plt.savefig("comparativa_analog_sim.png")

plt.figure(figsize = (15,10))

plt.plot(snr_db, pErrorDigTeorica, '--o', label = 'Probabilidad teorica', color = 'black', linewidth = 2)

plt.plot(snr_db, pErrorDig, '-^' ,label = 'Probabilidad simulada', color = 'red', linewidth = 2)

#plt.yscale('log')

plt.legend()

plt.xticks(snr_db)

plt.xlabel('SNR [dB]')

plt.ylabel('Probabilidad de error')

plt.grid(b = True, which = 'major', color = 'black', linestyle = '-', linewidth = 0.4)

plt.grid(b = True, which = 'minor', color = 'black', linestyle = '-', linewidth = 0.4)

plt.legend(loc = 'best')

plt.title('Probabilidad de error para el sistema digital con n = 9')

plt.savefig("comparativa_dig_sim.png")

<jupyter_output><empty_output>

|

no_license

|

/tp1 repetidores/tp1Ejercicio4A.ipynb

|

mauronaca/tps-procesos-estocasticos-fiuba

| 1 |

<jupyter_start><jupyter_text># Text core

> Basic function to preprocess text before assembling it in a `DataBunch`.<jupyter_code>#export

import spacy,html

from spacy.symbols import ORTH<jupyter_output><empty_output><jupyter_text>## Preprocessing rulesThe following are rules applied to texts before or after it's tokenized.<jupyter_code>#export

#special tokens

UNK, PAD, BOS, EOS, FLD, TK_REP, TK_WREP, TK_UP, TK_MAJ = "xxunk xxpad xxbos xxeos xxfld xxrep xxwrep xxup xxmaj".split()

#export

_all_ = ["UNK", "PAD", "BOS", "EOS", "FLD", "TK_REP", "TK_WREP", "TK_UP", "TK_MAJ"]

#export

_re_spec = re.compile(r'([/#\\])')

def spec_add_spaces(t):

"Add spaces around / and #"

return _re_spec.sub(r' \1 ', t)

test_eq(spec_add_spaces('#fastai'), ' # fastai')

test_eq(spec_add_spaces('/fastai'), ' / fastai')

test_eq(spec_add_spaces('\\fastai'), ' \\ fastai')

#export

_re_space = re.compile(' {2,}')

def rm_useless_spaces(t):

"Remove multiple spaces"

return _re_space.sub(' ', t)

test_eq(rm_useless_spaces('a b c'), 'a b c')

#export

_re_rep = re.compile(r'(\S)(\1{2,})')

def replace_rep(t):

"Replace repetitions at the character level: cccc -- TK_REP 4 c"

def _replace_rep(m):

c,cc = m.groups()

return f' {TK_REP} {len(cc)+1} {c} '

return _re_rep.sub(_replace_rep, t)<jupyter_output><empty_output><jupyter_text>It starts replacing at 3 repetitions of the same character or more.<jupyter_code>test_eq(replace_rep('aa'), 'aa')

test_eq(replace_rep('aaaa'), f' {TK_REP} 4 a ')

#export

_re_wrep = re.compile(r'(?:\s|^)(\w+)\s+((?:\1\s+)+)\1(\s|\W|$)')

#hide

"""

Matches any word repeated at least four times with spaces between them

(?:\s|^) Non-Capture either a whitespace character or the beginning of text

(\w+) Capture any alphanumeric character

\s+ One or more whitespace

((?:\1\s+)+) Capture a repetition of one or more times \1 followed by one or more whitespace

\1 Occurence of \1

(\s|\W|$) Capture last whitespace, non alphanumeric character or end of text

""";

#export

def replace_wrep(t):

"Replace word repetitions: word word word word -- TK_WREP 4 word"

def _replace_wrep(m):

c,cc,e = m.groups()

return f' {TK_WREP} {len(cc.split())+2} {c} {e}'

return _re_wrep.sub(_replace_wrep, t)<jupyter_output><empty_output><jupyter_text>It starts replacing at 3 repetitions of the same word or more.<jupyter_code>test_eq(replace_wrep('ah ah'), 'ah ah')

test_eq(replace_wrep('ah ah ah'), f' {TK_WREP} 3 ah ')

test_eq(replace_wrep('ah ah ah ah'), f' {TK_WREP} 4 ah ')

test_eq(replace_wrep('ah ah ah ah '), f' {TK_WREP} 4 ah ')

test_eq(replace_wrep('ah ah ah ah.'), f' {TK_WREP} 4 ah .')

test_eq(replace_wrep('ah ah ahi'), f'ah ah ahi')

#export

def fix_html(x):

"Various messy things we've seen in documents"

x = x.replace('#39;', "'").replace('amp;', '&').replace('#146;', "'").replace('nbsp;', ' ').replace(

'#36;', '$').replace('\\n', "\n").replace('quot;', "'").replace('<br />', "\n").replace(

'\\"', '"').replace('<unk>',UNK).replace(' @.@ ','.').replace(' @-@ ','-').replace('...',' …')

return html.unescape(x)

test_eq(fix_html('#39;bli#146;'), "'bli'")

test_eq(fix_html('Sarah amp; Duck...'), 'Sarah & Duck …')

test_eq(fix_html('a nbsp; #36;'), 'a $')

test_eq(fix_html('\\" <unk>'), f'" {UNK}')

test_eq(fix_html('quot; @.@ @-@ '), "' .-")

test_eq(fix_html('<br />text\\n'), '\ntext\n')

#export

_re_all_caps = re.compile(r'(\s|^)([A-Z]+[^a-z\s]*)(?=(\s|$))')

#hide

"""

Catches any word in all caps, even with ' or - inside

(\s|^) Capture either a whitespace or the beginning of text

([A-Z]+ Capture one capitalized letter or more...

[^a-z\s]*) ...followed by anything that's non lowercase or whitespace

(?=(\s|$)) Look ahead for a space or end of text

""";

#export

def replace_all_caps(t):

"Replace tokens in ALL CAPS by their lower version and add `TK_UP` before."

def _replace_all_caps(m):

tok = f'{TK_UP} ' if len(m.groups()[1]) > 1 else ''

return f"{m.groups()[0]}{tok}{m.groups()[1].lower()}"

return _re_all_caps.sub(_replace_all_caps, t)

test_eq(replace_all_caps("I'M SHOUTING"), f"{TK_UP} i'm {TK_UP} shouting")

test_eq(replace_all_caps("I'm speaking normally"), "I'm speaking normally")

test_eq(replace_all_caps("I am speaking normally"), "i am speaking normally")

#export

_re_maj = re.compile(r'(\s|^)([A-Z][^A-Z\s]*)(?=(\s|$))')

#hide

"""

Catches any capitalized word

(\s|^) Capture either a whitespace or the beginning of text

([A-Z] Capture exactly one capitalized letter...

[^A-Z\s]*) ...followed by anything that's not uppercase or whitespace

(?=(\s|$)) Look ahead for a space of end of text

""";

#export

def replace_maj(t):

"Replace tokens in ALL CAPS by their lower version and add `TK_UP` before."

def _replace_maj(m):

tok = f'{TK_MAJ} ' if len(m.groups()[1]) > 1 else ''

return f"{m.groups()[0]}{tok}{m.groups()[1].lower()}"

return _re_maj.sub(_replace_maj, t)

test_eq(replace_maj("Jeremy Howard"), f'{TK_MAJ} jeremy {TK_MAJ} howard')

test_eq(replace_maj("I don't think there is any maj here"), ("i don't think there is any maj here"),)

#export

def lowercase(t, add_bos=True, add_eos=False):

"Converts `t` to lowercase"

return (f'{BOS} ' if add_bos else '') + t.lower().strip() + (f' {EOS}' if add_eos else '')

#export

def replace_space(t):

"Replace embedded spaces in a token with unicode line char to allow for split/join"

return t.replace(' ', '▁')

#export

defaults.text_spec_tok = [UNK, PAD, BOS, EOS, FLD, TK_REP, TK_WREP, TK_UP, TK_MAJ]

defaults.text_proc_rules = [fix_html, replace_rep, replace_wrep, spec_add_spaces, rm_useless_spaces,

replace_all_caps, replace_maj, lowercase]

defaults.text_postproc_rules = [replace_space]<jupyter_output><empty_output><jupyter_text>## TokenizingA tokenizer is a class that must implement a `pipe` method. This `pipe` method receives a generator of texts and must return a generator with their tokenized versions. Here is the most basic example:<jupyter_code>#export

class BaseTokenizer():

"Basic tokenizer that just splits on spaces"

def __init__(self, split_char=' ', **kwargs): self.split_char=split_char

def __call__(self, items): return (t.split(self.split_char) for t in items)

tok = BaseTokenizer()

for t in tok(["This is a text"]): test_eq(t, ["This", "is", "a", "text"])

tok = BaseTokenizer('x')

for t in tok(["This is a text"]): test_eq(t, ["This is a te", "t"])

#export

class SpacyTokenizer():

"Spacy tokenizer for `lang`"

def __init__(self, lang='en', special_toks=None, buf_sz=5000):

special_toks = ifnone(special_toks, defaults.text_spec_tok)

nlp = spacy.blank(lang, disable=["parser", "tagger", "ner"])

for w in special_toks: nlp.tokenizer.add_special_case(w, [{ORTH: w}])

self.pipe,self.buf_sz = nlp.pipe,buf_sz

def __call__(self, items):

return (L(doc).attrgot('text') for doc in self.pipe(items, batch_size=self.buf_sz))

tok = SpacyTokenizer()

inp,exp = "This isn't the easiest text.",["This", "is", "n't", "the", "easiest", "text", "."]

test_eq(L(tok([inp]*5)), [exp]*5)

#export

class TokenizeBatch:

"A wrapper around `tok_func` to apply `rules` and tokenize in parallel"

def __init__(self, tok_func=SpacyTokenizer, rules=None, post_rules=None, **tok_kwargs ):

self.rules = L(ifnone(rules, defaults.text_proc_rules))

self.post_f = compose(*L(ifnone(post_rules, defaults.text_postproc_rules)))

self.tok = tok_func(**tok_kwargs)

def __call__(self, batch):

return (L(o).map(self.post_f) for o in self.tok(maps(*self.rules, batch)))

f = TokenizeBatch()

test_eq(f(["This isn't a problem"]), [[BOS, TK_MAJ, 'this', 'is', "n't", 'a', 'problem']])

f = TokenizeBatch(BaseTokenizer, rules=[], split_char="'")

test_eq(f(["This isn't a problem"]), [['This▁isn', 't▁a▁problem']])<jupyter_output><empty_output><jupyter_text>The main function that will be called during one of the processes handling tokenization. It will create an instance of a tokenizer with `tok_func` and `tok_kwargs` at init, then iterate through the `batch` of texts, apply them `rules` and tokenize them.<jupyter_code>texts = ["this is a text", "this is another text"]

tok = TokenizeBatch(BaseTokenizer, texts.__getitem__)

test_eq([t for t in tok([0,1])],[['this', 'is', 'a', 'text'], ['this', 'is', 'another', 'text']])

#export

def tokenize1(text, tok_func=SpacyTokenizer, rules=None, post_rules=None, **tok_kwargs):

"Tokenize one `text` with an instance of `tok_func` and some `rules`"

return next(iter(TokenizeBatch(tok_func, rules, post_rules, **tok_kwargs)([text])))

test_eq(tokenize1("This isn't a problem"),

[BOS, TK_MAJ, 'this', 'is', "n't", 'a', 'problem'])

test_eq(tokenize1("This isn't a problem", BaseTokenizer, rules=[], split_char="'"),

['This▁isn', 't▁a▁problem'])

#export

def parallel_tokenize(items, tok_func, rules, as_gen=False, n_workers=defaults.cpus, **tok_kwargs):

"Calls a potential setup on `tok_func` before launching `TokenizeBatch` in parallel"

if hasattr(tok_func, 'setup'): tok_kwargs = tok_func(**tok_kwargs).setup(items, rules)

return parallel_gen(TokenizeBatch, items, as_gen=as_gen, tok_func=tok_func,

rules=rules, n_workers=n_workers, **tok_kwargs)<jupyter_output><empty_output><jupyter_text>### Tokenize texts in filesPreprocessing function for texts in filenames. Tokenized texts will be saved in a similar fashion in a directory suffixed with `_tok` in the parent folder of `path` (override with `output_dir`).<jupyter_code>#export

fn_counter_pkl = 'counter.pkl'

#export

def tokenize_folder(path, extensions=None, folders=None, output_dir=None, n_workers=defaults.cpus,

rules=None, tok_func=SpacyTokenizer, **tok_kwargs):

"Tokenize text files in `path` in parallel using `n_workers`"

path,extensions = Path(path),ifnone(extensions, ['.txt'])

fnames = get_files(path, extensions=extensions, recurse=True, folders=folders)

output_dir = Path(ifnone(output_dir, path.parent/f'{path.name}_tok'))

rules = Path.read + L(ifnone(rules, defaults.text_proc_rules.copy()))

counter = Counter()

for i,tok in parallel_tokenize(fnames, tok_func, rules, as_gen=True, n_workers=n_workers, **tok_kwargs):

out = output_dir/fnames[i].relative_to(path)

out.write(' '.join(tok))

out.with_suffix('.len').write(str(len(tok)))

counter.update(tok)

(output_dir/fn_counter_pkl).save(counter)<jupyter_output><empty_output><jupyter_text>The result will be in `output_dir` (defaults to a folder in the same parent directory as `path`, with `_tok` added to `path.name`) with the same structure as in `path`. Tokenized texts for a given file will be in the file having the same name in `output_dir`. Additionally, a file with a .len suffix contains the number of tokens and the count of all words is stored in `output_dir/counter.pkl`.

`extensions` will default to `['.txt']` and all text files in `path` are treated unless you specify a list of folders in `include`. `tok_func` is instantiated in each process with `tok_kwargs`, and `rules` (that defaults to `defaults.text_proc_rules`) are applied to each text before going in the tokenizer.### Tokenize texts in a dataframe<jupyter_code>#export

def _join_texts(df, mark_fields=False):

"Join texts in row `idx` of `df`, marking each field with `FLD` if `mark_fields=True`"

text_col = (f'{FLD} {1} ' if mark_fields else '' ) + df.iloc[:,0].astype(str)

for i in range(1,len(df.columns)):

text_col += (f' {FLD} {i+1} ' if mark_fields else ' ') + df.iloc[:,i].astype(str)

return text_col.values

#hide

texts = [f"This is an example of text {i}" for i in range(10)]

df = pd.DataFrame({'text': texts, 'text1': texts}, columns=['text', 'text1'])

col = _join_texts(df, mark_fields=True)

for i in range(len(df)):

test_eq(col[i], f'{FLD} 1 This is an example of text {i} {FLD} 2 This is an example of text {i}')

#export

def tokenize_df(df, text_cols, n_workers=defaults.cpus, rules=None, mark_fields=None,

tok_func=SpacyTokenizer, **tok_kwargs):

"Tokenize texts in `df[text_cols]` in parallel using `n_workers`"

text_cols = L(text_cols)

#mark_fields defaults to False if there is one column of texts, True if there are multiple

if mark_fields is None: mark_fields = len(text_cols)>1

rules = L(ifnone(rules, defaults.text_proc_rules.copy()))

texts = _join_texts(df[text_cols], mark_fields=mark_fields)

outputs = L(parallel_tokenize(texts, tok_func, rules, n_workers=n_workers, **tok_kwargs)

).sorted().itemgot(1)

other_cols = df.columns[~df.columns.isin(text_cols)]

res = df[other_cols].copy()

res['text'],res['text_lengths'] = outputs,outputs.map(len)

return res,Counter(outputs.concat())<jupyter_output><empty_output><jupyter_text>This function returns a new dataframe with the same non-text columns, a colum named text that contains the tokenized texts and a column named text_lengths that contains their respective length. It also returns a counter of all words see to quickly build a vocabulary afterward.

`tok_func` is instantiated in each process with `tok_kwargs`, and `rules` (that defaults to `defaults.text_proc_rules`) are applied to each text before going in the tokenizer. If `mark_fields` isn't specified, it defaults to `False` when there is a single text column, `True` when there are several. In that case, the texts in each of those columns are joined with `FLD` markes followed by the number of the field.<jupyter_code>#export

def tokenize_csv(fname, text_cols, outname=None, n_workers=4, rules=None, mark_fields=None,

tok_func=SpacyTokenizer, header='infer', chunksize=50000, **tok_kwargs):

"Tokenize texts in the `text_cols` of the csv `fname` in parallel using `n_workers`"

df = pd.read_csv(fname, header=header, chunksize=chunksize)

outname = Path(ifnone(outname, fname.parent/f'{fname.stem}_tok.csv'))

cnt = Counter()

for i,dfp in enumerate(df):

out,c = tokenize_df(dfp, text_cols, n_workers=n_workers, rules=rules,

mark_fields=mark_fields, tok_func=tok_func, **tok_kwargs)

out.text = out.text.str.join(' ')

out.to_csv(outname, header=(None,header)[i==0], index=False, mode=('a','w')[i==0])

cnt.update(c)

outname.with_suffix('.pkl').save(cnt)

#export

def load_tokenized_csv(fname):

"Utility function to quickly load a tokenized csv ans the corresponding counter"

fname = Path(fname)

out = pd.read_csv(fname)

for txt_col in out.columns[1:-1]:

out[txt_col] = out[txt_col].str.split(' ')

return out,fname.with_suffix('.pkl').load()<jupyter_output><empty_output><jupyter_text>The result will be written in a new csv file in `outname` (defaults to the same as `fname` with the suffix `_tok.csv`) and will have the same header as the original file, the same non-text columns, a text and a text_lengths column as described in `tokenize_df`.

`tok_func` is instantiated in each process with `tok_kwargs`, and `rules` (that defaults to `defaults.text_proc_rules`) are applied to each text before going in the tokenizer. If `mark_fields` isn't specified, it defaults to `False` when there is a single text column, `True` when there are several. In that case, the texts in each of those columns are joined with `FLD` markes followed by the number of the field.

The csv file is opened with `header` and optionally with blocks of `chunksize` at a time. If this argument is passed, each chunk is processed independtly and saved in the output file to save memory usage.<jupyter_code>def _prepare_texts(tmp_d):

"Prepare texts in a folder struct in tmp_d, a csv file and returns a dataframe"

path = Path(tmp_d)/'tmp'

path.mkdir()

for d in ['a', 'b', 'c']:

(path/d).mkdir()

for i in range(5):

with open(path/d/f'text{i}.txt', 'w') as f: f.write(f"This is an example of text {d} {i}")

texts = [f"This is an example of text {d} {i}" for i in range(5) for d in ['a', 'b', 'c']]

df = pd.DataFrame({'text': texts, 'label': list(range(15))}, columns=['text', 'label'])

csv_fname = tmp_d/'input.csv'

df.to_csv(csv_fname, index=False)

return path,df,csv_fname

with tempfile.TemporaryDirectory() as tmp_d:

path,df,csv_fname = _prepare_texts(Path(tmp_d))

#Tokenize as folders

tokenize_folder(path)

outp = Path(tmp_d)/'tmp_tok'

for d in ['a', 'b', 'c']:

p = outp/d

for i in range(5):

test_eq((p/f'text{i}.txt').read(), ' '.join([

BOS, TK_MAJ, 'this', 'is', 'an', 'example', 'of', 'text', d, str(i) ]))

test_eq((p/f'text{i}.len').read(), '10')

cnt_a = (outp/fn_counter_pkl).load()

test_eq(cnt_a['this'], 15)

test_eq(cnt_a['a'], 5)

test_eq(cnt_a['0'], 3)

#Tokenize as a dataframe

out,cnt_b = tokenize_df(df, text_cols='text')

test_eq(list(out.columns), ['label', 'text', 'text_lengths'])

test_eq(out['label'].values, df['label'].values)

test_eq(out['text'], [(outp/d/f'text{i}.txt').read().split(' ') for i in range(5) for d in ['a', 'b', 'c']])

test_eq(cnt_a, cnt_b)

#Tokenize as a csv

out_fname = Path(tmp_d)/'output.csv'

tokenize_csv(csv_fname, text_cols='text', outname=out_fname)

test_eq((out,cnt_b), load_tokenized_csv(out_fname))<jupyter_output><empty_output><jupyter_text>## Sentencepiece<jupyter_code>eu_langs = ["bg", "cs", "da", "de", "el", "en", "es", "et", "fi", "fr", "ga", "hr", "hu",

"it","lt","lv","mt","nl","pl","pt","ro","sk","sl","sv"] # all European langs

#export

class SentencePieceTokenizer():#TODO: pass the special tokens symbol to sp

"Spacy tokenizer for `lang`"

def __init__(self, lang='en', special_toks=None, sp_model=None, vocab_sz=None, max_vocab_sz=30000,

model_type='unigram', char_coverage=None, cache_dir='tmp'):

try: from sentencepiece import SentencePieceTrainer,SentencePieceProcessor

except ImportError:

raise Exception('sentencepiece module is missing: run `pip install sentencepiece`')

self.sp_model,self.cache_dir = sp_model,Path(cache_dir)

self.vocab_sz,self.max_vocab_sz,self.model_type = vocab_sz,max_vocab_sz,model_type

self.char_coverage = ifnone(char_coverage, 0.99999 if lang in eu_langs else 0.9998)

self.special_toks = ifnone(special_toks, defaults.text_spec_tok)

if sp_model is None: self.tok = None

else:

self.tok = SentencePieceProcessor()

self.tok.Load(str(sp_model))

os.makedirs(self.cache_dir, exist_ok=True)

def _get_vocab_sz(self, raw_text_path):

cnt = Counter()

with open(raw_text_path, 'r') as f:

for line in f.readlines():

cnt.update(line.split())

if len(cnt)//4 > self.max_vocab_sz: return self.max_vocab_sz

res = len(cnt)//4

while res%8 != 0: res+=1

return res

def train(self, raw_text_path):

"Train a sentencepiece tokenizer on `texts` and save it in `path/tmp_dir`"

from sentencepiece import SentencePieceTrainer

vocab_sz = self._get_vocab_sz(raw_text_path) if self.vocab_sz is None else self.vocab_sz

spec_tokens = ['\u2581'+s for s in self.special_toks]

SentencePieceTrainer.Train(" ".join([

f"--input={raw_text_path} --vocab_size={vocab_sz} --model_prefix={self.cache_dir/'spm'}",

f"--character_coverage={self.char_coverage} --model_type={self.model_type}",

f"--unk_id={len(spec_tokens)} --pad_id=-1 --bos_id=-1 --eos_id=-1",

f"--user_defined_symbols={','.join(spec_tokens)}"]))

raw_text_path.unlink()

return self.cache_dir/'spm.model'

def setup(self, items, rules):

if self.tok is not None: return {'sp_model': self.sp_model}

raw_text_path = self.cache_dir/'texts.out'

with open(raw_text_path, 'w') as f:

for t in progress_bar(maps(*rules, items), total=len(items), leave=False):

f.write(f'{t}\n')

return {'sp_model': self.train(raw_text_path)}

def __call__(self, items):

for t in items: yield self.tok.EncodeAsPieces(t)

texts = [f"This is an example of text {i}" for i in range(10)]

df = pd.DataFrame({'text': texts, 'label': list(range(10))}, columns=['text', 'label'])

out,cnt = tokenize_df(df, text_cols='text', tok_func=SentencePieceTokenizer, vocab_sz=34)<jupyter_output><empty_output><jupyter_text>## Export -<jupyter_code>#hide

from local.notebook.export import notebook2script

notebook2script(all_fs=True)<jupyter_output>Converted 00_test.ipynb.

Converted 01_core.ipynb.

Converted 01a_torch_core.ipynb.

Converted 02_script.ipynb.

Converted 03_dataloader.ipynb.

Converted 04_transform.ipynb.

Converted 05_data_core.ipynb.

Converted 06_data_transforms.ipynb.

Converted 07_vision_core.ipynb.

Converted 08_pets_tutorial.ipynb.

Converted 09_vision_augment.ipynb.

Converted 11_layers.ipynb.

Converted 11a_vision_models_xresnet.ipynb.

Converted 12_optimizer.ipynb.

Converted 13_learner.ipynb.

Converted 14_callback_schedule.ipynb.

Converted 14a_callback_data.ipynb.

Converted 15_callback_hook.ipynb.

Converted 16_callback_progress.ipynb.

Converted 17_callback_tracker.ipynb.

Converted 18_callback_fp16.ipynb.

Converted 19_callback_mixup.ipynb.

Converted 20_metrics.ipynb.

Converted 21_tutorial_imagenette.ipynb.

Converted 22_vision_learner.ipynb.

Converted 23_tutorial_transfer_learning.ipynb.

Converted 30_text_core.ipynb.

Converted 31_text_data.ipynb.

Converted 32_text_models_awdlstm.ipynb.

Converted 33_text_models_core.ipyn[...]

|

non_permissive

|

/dev/30_text_core.ipynb

|

davanstrien/fastai_dev

| 12 |

<jupyter_start><jupyter_text><jupyter_code>Ingest<jupyter_output><empty_output>

|

no_license

|

/Aparna_R.ipynb

|

aparnark-git/dsi_gittingstarted

| 1 |

<jupyter_start><jupyter_text>Capstone project on Segmenting and Clustering Neighborhoods in Toronto_Part1

## Problem 1

Use the Notebook to build the code to scrape the following Wikipedia page, https://en.wikipedia.org/wiki/List_of_postal_codes_of_Canada:_M, in order to obtain the data that is in the table of postal codes and to transform the data into a pandas dataframe.

To create the above dataframe:

- The dataframe will consist of three columns: PostalCode, Borough, and Neighborhood

- Only process the cells that have an assigned borough. Ignore cells with a borough that is Not assigned.

- More than one neighborhood can exist in one postal code area. For example, in the table on the Wikipedia page, you will notice that - M5A is listed twice and has two neighborhoods: Harbourfront and Regent Park. These two rows will be combined into one row with the - neighborhoods separated with a comma as shown in row 11 in the above table.

- If a cell has a borough but a Not assigned neighborhood, then the neighborhood will be the same as the borough. So for the 9th cell in the table on the Wikipedia page, the value of the Borough and the Neighborhood columns will be Queen's Park.

- Clean your Notebook and add Markdown cells to explain your work and any assumptions you are making.

- In the last cell of your notebook, use the .shape method to print the number of rows of your dataframe.

<jupyter_code>import numpy as np # library to handle data in a vectorized manner

import pandas as pd # library for data analsysis

pd.set_option('display.max_columns', None)

pd.set_option('display.max_rows', None)

import json # library to handle JSON files

#!conda install -c conda-forge geopy --yes # uncomment this line if you haven't completed the Foursquare API lab

from geopy.geocoders import Nominatim # convert an address into latitude and longitude values

import requests # library to handle requests

from pandas.io.json import json_normalize # tranform JSON file into a pandas dataframe

# Matplotlib and associated plotting modules

import matplotlib.cm as cm

import matplotlib.colors as colors

# import k-means from clustering stage

from sklearn.cluster import KMeans

from bs4 import BeautifulSoup

#!conda install -c conda-forge folium=0.5.0 --yes # uncomment this line if you haven't completed the Foursquare API lab

import folium # map rendering library

import requests

webUrl = requests.get('https://en.wikipedia.org/wiki/List_of_postal_codes_of_Canada:_M').text

print('Libraries imported.')<jupyter_output>Libraries imported.

<jupyter_text>###### Scrape the List of postal codes of Canada<jupyter_code>soup = BeautifulSoup(webUrl, 'xml')

table=soup.find('table')

#print(table)

#dataframe will consist of three columns: PostalCode, Borough, and Neighborhood

column_names = ['Postalcode','Borough','Neighborhood']

data = pd.DataFrame(columns = column_names)

# Search all the postcode, borough, neighborhood

for tr in table.find_all('tr'):

rowData=[]

for td in tr.find_all('td'):

rowData.append(td.text.strip())

if len(rowData)==3:

data.loc[len(data)] = rowData

data.head(20)<jupyter_output><empty_output><jupyter_text>###### Data Cleaning

remove rows where Borough is 'Not assigned'<jupyter_code>data=data[data['Borough']!='Not assigned']

data.head()

data[data['Neighborhood']=='Not assigned']=data['Borough']

data.head()

temp_data=data.groupby('Postalcode')['Neighborhood'].apply(lambda x: "%s" % ', '.join(x))

temp_data=temp_data.reset_index(drop=False)

temp_data.rename(columns={'Neighborhood':'Neighborhood_joined'},inplace=True)

data_merge = pd.merge(data, temp_data, on='Postalcode')

data_merge.drop(['Neighborhood'],axis=1,inplace=True)

data_merge.drop_duplicates(inplace=True)

data_merge.rename(columns={'Neighborhood_joined':'Neighborhood'},inplace=True)

data_merge.head()

data_merge.shape<jupyter_output><empty_output>

|

no_license

|

/Neighborhoods_in_Toronto_1st.ipynb

|

natfik/Coursera_Capstone

| 3 |

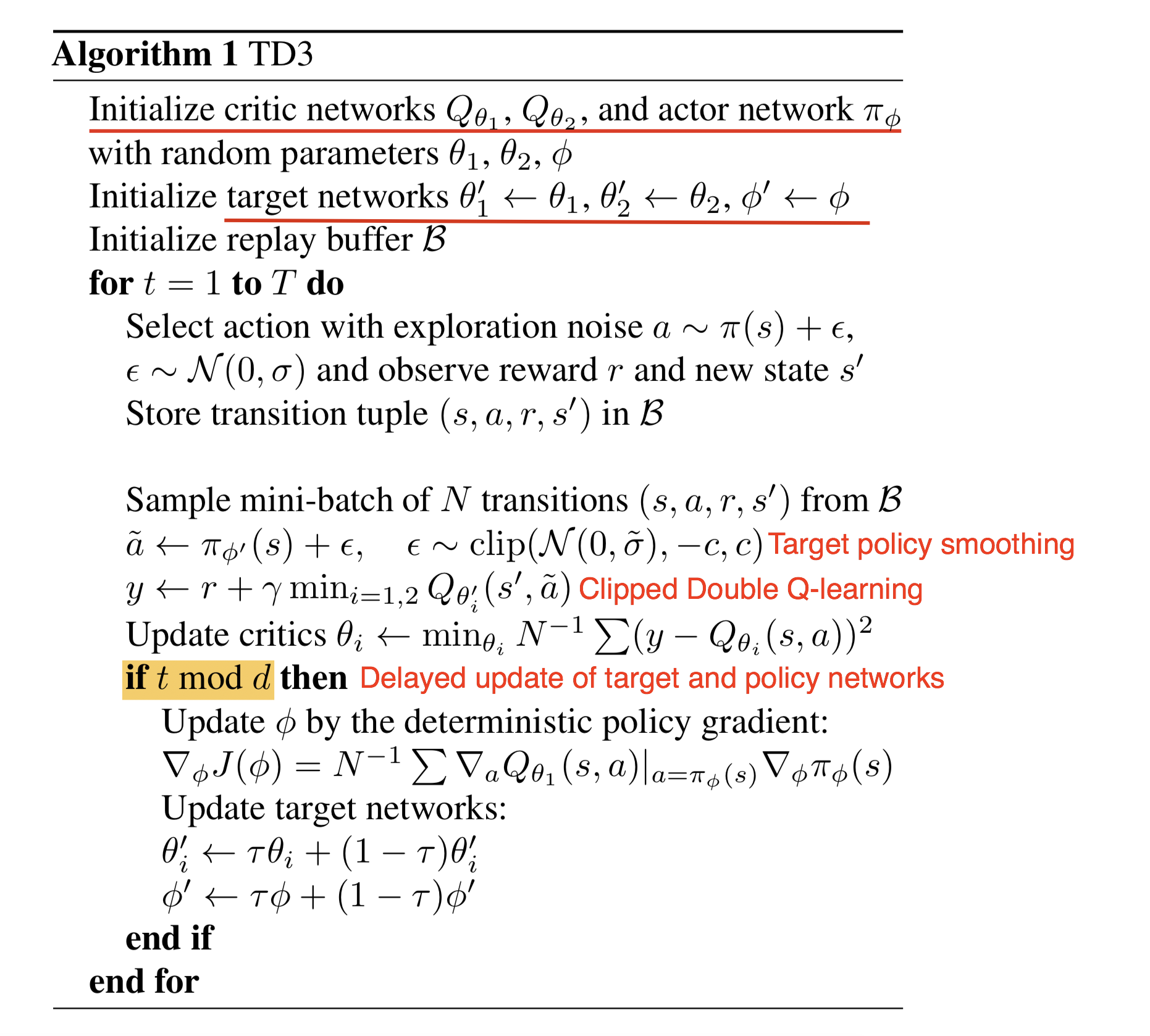

<jupyter_start><jupyter_text># TD3

**Author:** [pavelkolev](https://github.com/PavelKolev)

**Extending the work of:** [amifunny](https://github.com/amifunny)

**Date created:** 2020/09/22

**Last modified:** 2020/09/22

**Description:** Implementing TD3 algorithm on the LunarLanderContinuous-v2.<jupyter_code>import sys, os

if 'google.colab' in sys.modules:

if not os.path.exists('.setup_complete'):

!wget -q https://raw.githubusercontent.com/yandexdataschool/Practical_RL/master/setup_colab.sh -O- | bash

!touch .setup_complete

# This code creates a virtual display to draw game images on.

# It will have no effect if your machine has a monitor.

if type(os.environ.get("DISPLAY")) is not str or len(os.environ.get("DISPLAY")) == 0:

!bash ../xvfb start

os.environ['DISPLAY'] = ':1'

!pip install box2d-py

import tensorflow as tf

from tensorflow.keras import layers

import gym

import numpy as np<jupyter_output><empty_output><jupyter_text>We use [OpenAIGym](http://gym.openai.com/docs) to create the environment.

We will use the `upper_bound` parameter to scale our actions later.<jupyter_code>env = gym.make('LunarLanderContinuous-v2')

env.reset()

dim_state = env.observation_space.shape[0]

print("Size of State Space -> {}".format(dim_state))

dim_action = env.action_space.shape[0]

print("Size of Action Space -> {}".format(dim_action))

lower_bound = env.action_space.low[0]

upper_bound = env.action_space.high[0]

print("Min Value of Action -> {}".format(lower_bound))

print("Max Value of Action -> {}".format(upper_bound))

import matplotlib.pyplot as plt

%matplotlib inline

plt.imshow(env.render('rgb_array'))

plt.show()<jupyter_output><empty_output><jupyter_text>To implement better exploration by the Actor network, we use noisy perturbations, specifically

an **Ornstein-Uhlenbeck process** for generating noise, as described in the paper.

It samples noise from a correlated normal distribution.<jupyter_code>class Gaussian:

def __init__(self, mean, std_deviation, bounds = None):

self.mean = mean

self.std_dev = std_deviation

self.bounds = bounds

def __call__(self):

x = np.random.normal(self.mean, self.std_dev, self.mean.shape)

if not self.bounds == None:

x = np.clip(x, self.bounds[0], self.bounds[1])

return x<jupyter_output><empty_output><jupyter_text>---

TD3 Algorithm Explained:

https://lilianweng.github.io/lil-log/2018/04/08/policy-gradient-algorithms.html#td3

--------------------------------Here we define the Actor and Critic networks. These are basic Dense models

with `ReLU` activation. `BatchNormalization` is used to normalize dimensions across

samples in a mini-batch, as activations can vary a lot due to fluctuating values of input

state and action.

Note: We need the initialization for last layer of the Actor to be between

`-0.003` and `0.003` as this prevents us from getting `1` or `-1` output values in

the initial stages, which would squash our gradients to zero,

as we use the `tanh` activation.<jupyter_code># mu(s) = a

def get_actor():

# Initialize weights between -3e-3 and 3-e3

inputs = layers.Input(shape=(dim_state,))

out = layers.Dense(256, activation="relu")(inputs)

out = layers.Dense(128, activation="relu")(out)

outputs = layers.Dense(dim_action, activation="tanh")(out)

# Scaling: Upper bound

outputs = outputs * upper_bound

model = tf.keras.Model(inputs, outputs)

return model

# Q(s,a)

def get_critic():

# State as input

state_input = layers.Input(shape=(dim_state))

state_out = layers.Dense(32, activation="relu")(state_input)

# Action as input

action_input = layers.Input(shape=(dim_action))

action_out = layers.Dense(32, activation="relu")(action_input)

# Both are passed through seperate layer before concatenating

concat = layers.Concatenate()([state_out, action_out])

out = layers.Dense(256, activation="relu")(concat)

out = layers.Dense(128, activation="relu")(out)

outputs = layers.Dense(1)(out)

# Outputs single value for give state-action

model = tf.keras.Model([state_input, action_input], outputs)

return model<jupyter_output><empty_output><jupyter_text>## Training hyperparameters<jupyter_code># Learning rate for actor-critic models

critic_lr = 0.001

actor_lr = 0.0001

total_episodes = 4000

# Size N

N = 2 ** 16

size_B = 2 ** 6

# Discount factor for future rewards

gamma = 0.99

# Used to update target networks

tau = 0.005

noise_mu = Gaussian(mean = np.zeros(dim_action),

std_deviation = float( 0.1 ) * np.ones(dim_action))

noise_Q = Gaussian(mean = np.zeros(dim_action),

std_deviation = float(0.2) * np.ones(dim_action),

bounds = [-0.5, 0.5])

# Function Approx: mu and Q(s,a)

mu = get_actor()

mu_t = get_actor()

Q1 = get_critic()

Q1_t = get_critic()

Q2 = get_critic()

Q2_t = get_critic()

# Making the weights equal initially

mu_t.set_weights(mu.get_weights())

Q1_t.set_weights(Q1.get_weights())

Q2_t.set_weights(Q2.get_weights())

mu_opt = tf.keras.optimizers.Adam(actor_lr)

Q1_opt = tf.keras.optimizers.Adam(critic_lr)

Q2_opt = tf.keras.optimizers.Adam(critic_lr)<jupyter_output><empty_output><jupyter_text>`policy()` returns an action sampled from our Actor network plus some noise for

exploration.<jupyter_code>def policy(actor, state, noise_object):

# Call "actor" mu

sampled_actions = tf.squeeze( actor(state) )

# Sample Noise

noise = noise_object()

sampled_actions = sampled_actions.numpy() + noise

# Legal action

legal_action = np.clip(sampled_actions, lower_bound, upper_bound)

return legal_action

@tf.function

def policy_symbolic(actor, state, noise_object):

sampled_actions = tf.squeeze( actor(state) )

# Sample Noise

noise = noise_object()

sampled_actions = sampled_actions + noise

# Legal action

legal_action = tf.clip_by_value(sampled_actions, lower_bound, upper_bound)

return legal_action

# Eager execution is turned on by default in TensorFlow 2.

# Decorating with tf.function allows TensorFlow to build a static graph

# out of the logic and computations in our function.

# This provides a large speed up for blocks of code that contain

# many small TensorFlow operations such as this one.

# Input: each has dim [batch,?]

@tf.function

def update_Q1_Q2(state, action, reward, next_state, is_done):

with tf.GradientTape(persistent = True) as tape:

next_action = tf.stop_gradient( policy_symbolic(mu_t, next_state, noise_Q) )

Q1_t_nsa = tf.stop_gradient( Q1_t([next_state, next_action]) )

Q2_t_nsa = tf.stop_gradient( Q2_t([next_state, next_action]) )

y = reward + (1.0 - is_done) * gamma * tf.math.minimum(Q1_t_nsa, Q2_t_nsa)

Q1_nsa = Q1([state, action])

Q2_nsa = Q2([state, action])

diff_1_squared = tf.math.square(y - Q1_nsa)

diff_2_squared = tf.math.square(y - Q2_nsa)

Q1_loss = tf.math.reduce_mean(diff_1_squared)

Q2_loss = tf.math.reduce_mean(diff_2_squared)

Q1_vars = Q1.trainable_variables

Q1_grad = tape.gradient(Q1_loss, Q1_vars)

Q1_opt.apply_gradients(zip(Q1_grad, Q1_vars))

Q2_vars = Q2.trainable_variables

Q2_grad = tape.gradient(Q2_loss, Q2_vars)

Q2_opt.apply_gradients(zip(Q2_grad, Q2_vars))

# Input: each has dim [batch,?]

@tf.function

def update_mu(state, action):

with tf.GradientTape() as tape:

actions = mu(state)

Q1_sa = Q1([state, actions])

# Used "-value" as we want to maximize the value given

# by the critic for our actions

mu_loss = -tf.math.reduce_mean(Q1_sa)

mu_vars = mu.trainable_variables

mu_grad = tape.gradient(mu_loss, mu_vars)

mu_opt.apply_gradients(zip(mu_grad, mu_vars))

# This update target parameters slowly

# Based on rate `tau`, which is much less than one.

@tf.function

def update_target(target_weights, weights, tau):

for (t, w) in zip(target_weights, weights):

t.assign( (1 - tau) * t + tau * w )

class Buffer:

def __init__(self, capacity=2**14, batch_size=2**3):

self.batch_size = batch_size

self.capacity = capacity

self.buffer_index = 0

# capacity

self.state = np.zeros((capacity, dim_state))

self.action = np.zeros((capacity, dim_action))

self.reward = np.zeros((capacity, 1))

self.next_state = np.zeros((capacity, dim_state))

self.is_done = np.zeros((capacity, 1))

# Takes (s,a,r,s',done) obervation tuple

def record(self, obs_tuple):

# Set index to zero if capacity is exceeded,

# replacing old records

index = self.buffer_index % self.capacity

self.state[index] = obs_tuple[0]

self.action[index] = obs_tuple[1]

self.reward[index] = obs_tuple[2]

self.next_state[index] = obs_tuple[3]

self.is_done[index] = obs_tuple[4]

self.buffer_index += 1

# We compute the loss and update parameters

def learn(self, update_mu_target):

# Get sampling range

record_range = min(self.buffer_index, self.capacity)

# Randomly sample indices

batch_indices = np.random.choice(record_range, self.batch_size)

# Convert to tensors

state_batch = tf.convert_to_tensor(self.state[batch_indices])

action_batch = tf.convert_to_tensor(self.action[batch_indices])

reward_batch = tf.convert_to_tensor(self.reward[batch_indices])

reward_batch = tf.cast(reward_batch, dtype=tf.float32)

next_state_batch = tf.convert_to_tensor(self.next_state[batch_indices])

is_done_batch = tf.convert_to_tensor(self.is_done[batch_indices])

is_done_batch = tf.cast(is_done_batch, dtype=tf.float32)

# Update Current Models

deltas = update_Q1_Q2(state_batch, action_batch, reward_batch,

next_state_batch, is_done_batch)

# Train Target Models

if update_mu_target:

# Update policy mu

update_mu(state_batch, action_batch)

# Update All Target models

update_target(Q1_t.variables, Q1.variables, tau)

update_target(Q2_t.variables, Q2.variables, tau)

update_target(mu_t.variables, mu.variables, tau)

<jupyter_output><empty_output><jupyter_text>Now we implement our main training loop, and iterate over episodes.

We sample actions using `policy()` and train with `learn()` at each time step,

along with updating the Target networks at a rate `tau`.<jupyter_code>from IPython.display import clear_output

from tqdm import trange

# To store reward history of each episode

ep_reward_list = []

# To store average reward history of last few episodes

avg_reward_list = []

# Create Buffer

buffer = Buffer(N, size_B)

def save_weights():

# Save the weights

mu.save_weights("mu.h5")

mu_t.save_weights("mu_t.h5")

Q1.save_weights("Q1.h5")

Q1_t.save_weights("Q1_t.h5")

Q2.save_weights("Q2.h5")

Q2_t.save_weights("Q2_t.h5")

total_episodes = 4000

# Takes about 4 min to train

is_train_done = False

curr_iter = 0

for ep in trange(total_episodes + 1):

prev_state = env.reset()

episodic_reward = 0

while True:

# Uncomment this to see the Actor in action

# But not in a python notebook.

# env.render()

tf_prev_state = tf.expand_dims(tf.convert_to_tensor(prev_state), 0)

action = policy(mu, tf_prev_state, noise_mu)

# Recieve state and reward from environment.

state, reward, done, info = env.step(action)

reward = reward / 200.

obs_tuple = (prev_state, action, reward, state, done)

buffer.record(obs_tuple)

episodic_reward += reward

update_mu_target = (curr_iter % 2 == 0 )

buffer.learn(update_mu_target)

# End this episode when `done` is True

if done:

break

prev_state = state

curr_iter += 1

ep_reward_list.append(episodic_reward)

# Mean of last 40 episodes

avg_reward = np.mean(ep_reward_list[-40:])

avg_reward_list.append(200 * avg_reward)

if ep % 50 == 0:

# Plotting graph

# Episodes versus Avg. Rewards

clear_output(True)

plt.plot(avg_reward_list)

plt.xlabel("Episode")

plt.ylabel("Avg. Epsiodic Reward")

plt.show()

if avg_reward >= 200:

print("Pass!")

is_train_done = True

break

if is_train_done:

save_weights()

break

if ep >=1 and ep % 500 == 0:

save_weights()

<jupyter_output><empty_output><jupyter_text>Evaluate Learned Agent<jupyter_code>def det_policy(state):

# Use deterministic actor: "mu"

sampled_actions = tf.squeeze( mu(state) )

# We make sure action is within bounds

legal_action = np.clip(sampled_actions, lower_bound, upper_bound)

return legal_action

def evaluate(env, n_games=1):

"""Plays an a game from start till done, returns per-game rewards """

game_rewards = []

for _ in range(n_games):

# initial observation and memory

obs = env.reset()

total_reward = 0

while True:

action = det_policy(obs[None])

obs, reward, done, info = env.step(action)

total_reward += reward

if done:

break

game_rewards.append(total_reward)

return game_rewards

import gym.wrappers

with gym.wrappers.Monitor(env, directory="videos", force=True) as env_monitor:

final_rewards = evaluate(env_monitor, n_games=10)

print("Final mean reward", np.mean(final_rewards))<jupyter_output>Final mean reward 274.87823239927627

<jupyter_text>-------------------------------------------------<jupyter_code>gpu_info = !nvidia-smi

gpu_info = '\n'.join(gpu_info)

if gpu_info.find('failed') >= 0:

print('Select the Runtime > "Change runtime type" menu to enable a GPU accelerator, ')

print('and then re-execute this cell.')

else:

print(gpu_info)

from psutil import virtual_memory

ram_gb = virtual_memory().total / 1e9

print('Your runtime has {:.1f} gigabytes of available RAM\n'.format(ram_gb))

if ram_gb < 20:

print('To enable a high-RAM runtime, select the Runtime > "Change runtime type"')

print('menu, and then select High-RAM in the Runtime shape dropdown. Then, ')

print('re-execute this cell.')

else:

print('You are using a high-RAM runtime!')

<jupyter_output><empty_output>

|

no_license

|

/TD3_Gaussian/TD3_LLCont.ipynb

|

PavelKolev/rl_algos

| 9 |

<jupyter_start><jupyter_text># Load and Test Model for test set

This notebook loads the model and performs predictions on all the data in the Test set (the split with the least data samples). Once the predictions are completed, a classification plot and confusion matrix is displayed.## Load dependencies<jupyter_code>import matplotlib.pyplot as plt

import numpy as np

from PIL import Image

import torch

from torchvision import transforms

from tqdm import tqdm

import sys

import av

import pandas as pd

import os

from sklearn.metrics import classification_report, confusion_matrix, ConfusionMatrixDisplay<jupyter_output><empty_output><jupyter_text>## Init file paths and dataframes<jupyter_code>data_dir = "dataset"

test_dir = f'../{data_dir}/test'

# All labels

filtered_data = "data"

test_label_df = pd.read_csv(f'../{filtered_data}/test.csv', header=None)

# convert all into hashmap - key = u_vid_name , value = label

test_label = {f"{test_dir}/{k[0]}": k[1] for k in test_label_df.values.tolist()}

# Total label + turkish to english translation

total_label = pd.read_csv(f'../{filtered_data}/filtered_ClassId.csv')

u_len_label = len(total_label['ClassId'].unique())