url (string, lengths 58-61) | repository_url (string, 1 class) | labels_url (string, lengths 72-75) | comments_url (string, lengths 67-70) | events_url (string, lengths 65-68) | html_url (string, lengths 48-51) | id (int64, 600M-2.19B) | node_id (string, lengths 18-24) | number (int64, 2-6.73k) | title (string, lengths 1-290) | user (dict) | labels (list, lengths 0-4) | state (string, 2 classes) | locked (bool, 1 class) | assignee (dict) | assignees (list, lengths 0-4) | milestone (dict) | comments (list, lengths 0-30) | created_at (timestamp[s]) | updated_at (timestamp[s]) | closed_at (timestamp[s]) | author_association (string, 3 classes) | active_lock_reason (null) | draft (null) | pull_request (null) | body (string, lengths 0-228k, nullable) | reactions (dict) | timeline_url (string, lengths 67-70) | performed_via_github_app (null) | state_reason (string, 3 classes) |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

https://api.github.com/repos/huggingface/datasets/issues/3851

|

https://api.github.com/repos/huggingface/datasets

|

https://api.github.com/repos/huggingface/datasets/issues/3851/labels{/name}

|

https://api.github.com/repos/huggingface/datasets/issues/3851/comments

|

https://api.github.com/repos/huggingface/datasets/issues/3851/events

|

https://github.com/huggingface/datasets/issues/3851

| 1,162,137,998 |

I_kwDODunzps5FRNGO

| 3,851 |

Load audio dataset error

|

{

"login": "lemoner20",

"id": 31890987,

"node_id": "MDQ6VXNlcjMxODkwOTg3",

"avatar_url": "https://avatars.githubusercontent.com/u/31890987?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/lemoner20",

"html_url": "https://github.com/lemoner20",

"followers_url": "https://api.github.com/users/lemoner20/followers",

"following_url": "https://api.github.com/users/lemoner20/following{/other_user}",

"gists_url": "https://api.github.com/users/lemoner20/gists{/gist_id}",

"starred_url": "https://api.github.com/users/lemoner20/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/lemoner20/subscriptions",

"organizations_url": "https://api.github.com/users/lemoner20/orgs",

"repos_url": "https://api.github.com/users/lemoner20/repos",

"events_url": "https://api.github.com/users/lemoner20/events{/privacy}",

"received_events_url": "https://api.github.com/users/lemoner20/received_events",

"type": "User",

"site_admin": false

}

|

[

{

"id": 1935892857,

"node_id": "MDU6TGFiZWwxOTM1ODkyODU3",

"url": "https://api.github.com/repos/huggingface/datasets/labels/bug",

"name": "bug",

"color": "d73a4a",

"default": true,

"description": "Something isn't working"

}

] |

closed

| false | null |

[] | null |

[

"Hi @lemoner20, thanks for reporting.\r\n\r\nI'm sorry but I cannot reproduce your problem:\r\n```python\r\nIn [1]: from datasets import load_dataset, load_metric, Audio\r\n ...: raw_datasets = load_dataset(\"superb\", \"ks\", split=\"train\")\r\n ...: print(raw_datasets[0][\"audio\"])\r\nDownloading builder script: 30.2kB [00:00, 13.0MB/s] \r\nDownloading metadata: 38.0kB [00:00, 16.6MB/s] \r\nDownloading and preparing dataset superb/ks (download: 1.45 GiB, generated: 9.64 MiB, post-processed: Unknown size, total: 1.46 GiB) to .../.cache/huggingface/datasets/superb/ks/1.9.0/fc1f59e1fa54262dfb42de99c326a806ef7de1263ece177b59359a1a3354a9c9...\r\nDownloading data: 100%|███████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 1.49G/1.49G [00:37<00:00, 39.3MB/s]\r\nDownloading data: 100%|███████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 71.3M/71.3M [00:01<00:00, 36.1MB/s]\r\nDownloading data files: 100%|████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 2/2 [00:41<00:00, 20.67s/it]\r\nExtracting data files: 100%|█████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 2/2 [00:28<00:00, 14.24s/it]\r\nDataset superb downloaded and prepared to .../.cache/huggingface/datasets/superb/ks/1.9.0/fc1f59e1fa54262dfb42de99c326a806ef7de1263ece177b59359a1a3354a9c9. Subsequent calls will reuse this data.\r\n{'path': '.../.cache/huggingface/datasets/downloads/extracted/8571921d3088b48f58f75b2e514815033e1ffbd06aa63fd4603691ac9f1c119f/_background_noise_/doing_the_dishes.wav', 'array': array([ 0. , 0. , 0. 
, ..., -0.00592041,\r\n -0.00405884, -0.00253296], dtype=float32), 'sampling_rate': 16000}\r\n``` \r\n\r\nWhich version of `datasets` are you using? Could you please fill in the environment info requested in the bug report template? You can run the command `datasets-cli env` and copy-and-paste its output below\r\n## Environment info\r\n<!-- You can run the command `datasets-cli env` and copy-and-paste its output below. -->\r\n- `datasets` version:\r\n- Platform:\r\n- Python version:\r\n- PyArrow version:",

"@albertvillanova Thanks for your reply. The environment info below\r\n\r\n## Environment info\r\n- `datasets` version: 1.18.3\r\n- Platform: Linux-4.19.91-007.ali4000.alios7.x86_64-x86_64-with-debian-buster-sid\r\n- Python version: 3.6.12\r\n- PyArrow version: 6.0.1",

"Thanks @lemoner20,\r\n\r\nI cannot reproduce your issue in datasets version 1.18.3 either.\r\n\r\nRedownloading the data file may help if you had already cached this dataset previously. Could you please try passing \"force_redownload\"?\r\n```python\r\nraw_datasets = load_dataset(\"superb\", \"ks\", split=\"train\", download_mode=\"force_redownload\")\r\n```",

"Thanks, @albertvillanova,\r\n\r\nI installed the Python package **librosa=0.9.1** again, and it works now!",

"Cool!",

"@albertvillanova, you can actually reproduce the error if you reach the cell `common_voice_train[0][\"path\"]` of this [notebook](https://colab.research.google.com/github/patrickvonplaten/notebooks/blob/master/Fine_Tune_XLSR_Wav2Vec2_on_Turkish_ASR_with_%F0%9F%A4%97_Transformers.ipynb#scrollTo=_0kRndSvqaKk). Error gets solved after updating the versions of the libraries used in there.",

"@jvel07, thanks for reporting and finding a solution.\r\n\r\nMaybe we could tell @patrickvonplaten about the version pinning issue in his notebook.",

"Should I update the version of datasets @albertvillanova ? "

] | 2022-03-08T02:16:04 | 2022-09-27T12:13:55 | 2022-03-08T11:20:06 |

NONE

| null | null | null |

## Load audio dataset error

Hi, when I load an audio dataset following https://huggingface.co/docs/datasets/audio_process and https://github.com/huggingface/datasets/tree/master/datasets/superb,

```

from datasets import load_dataset, load_metric, Audio

raw_datasets = load_dataset("superb", "ks", split="train")

print(raw_datasets[0]["audio"])

```

the following error occurs:

```

---------------------------------------------------------------------------

TypeError Traceback (most recent call last)

<ipython-input-169-3f8253239fa0> in <module>

----> 1 raw_datasets[0]["audio"]

/usr/lib/python3.6/site-packages/datasets/arrow_dataset.py in __getitem__(self, key)

1924 """Can be used to index columns (by string names) or rows (by integer index or iterable of indices or bools)."""

1925 return self._getitem(

-> 1926 key,

1927 )

1928

/usr/lib/python3.6/site-packages/datasets/arrow_dataset.py in _getitem(self, key, decoded, **kwargs)

1909 pa_subtable = query_table(self._data, key, indices=self._indices if self._indices is not None else None)

1910 formatted_output = format_table(

-> 1911 pa_subtable, key, formatter=formatter, format_columns=format_columns, output_all_columns=output_all_columns

1912 )

1913 return formatted_output

/usr/lib/python3.6/site-packages/datasets/formatting/formatting.py in format_table(table, key, formatter, format_columns, output_all_columns)

530 python_formatter = PythonFormatter(features=None)

531 if format_columns is None:

--> 532 return formatter(pa_table, query_type=query_type)

533 elif query_type == "column":

534 if key in format_columns:

/usr/lib/python3.6/site-packages/datasets/formatting/formatting.py in __call__(self, pa_table, query_type)

279 def __call__(self, pa_table: pa.Table, query_type: str) -> Union[RowFormat, ColumnFormat, BatchFormat]:

280 if query_type == "row":

--> 281 return self.format_row(pa_table)

282 elif query_type == "column":

283 return self.format_column(pa_table)

/usr/lib/python3.6/site-packages/datasets/formatting/formatting.py in format_row(self, pa_table)

310 row = self.python_arrow_extractor().extract_row(pa_table)

311 if self.decoded:

--> 312 row = self.python_features_decoder.decode_row(row)

313 return row

314

/usr/lib/python3.6/site-packages/datasets/formatting/formatting.py in decode_row(self, row)

219

220 def decode_row(self, row: dict) -> dict:

--> 221 return self.features.decode_example(row) if self.features else row

222

223 def decode_column(self, column: list, column_name: str) -> list:

/usr/lib/python3.6/site-packages/datasets/features/features.py in decode_example(self, example)

1320 else value

1321 for column_name, (feature, value) in utils.zip_dict(

-> 1322 {key: value for key, value in self.items() if key in example}, example

1323 )

1324 }

/usr/lib/python3.6/site-packages/datasets/features/features.py in <dictcomp>(.0)

1319 if self._column_requires_decoding[column_name]

1320 else value

-> 1321 for column_name, (feature, value) in utils.zip_dict(

1322 {key: value for key, value in self.items() if key in example}, example

1323 )

/usr/lib/python3.6/site-packages/datasets/features/features.py in decode_nested_example(schema, obj)

1053 # Object with special decoding:

1054 elif isinstance(schema, (Audio, Image)):

-> 1055 return schema.decode_example(obj) if obj is not None else None

1056 return obj

1057

/usr/lib/python3.6/site-packages/datasets/features/audio.py in decode_example(self, value)

100 array, sampling_rate = self._decode_non_mp3_file_like(file)

101 else:

--> 102 array, sampling_rate = self._decode_non_mp3_path_like(path)

103 return {"path": path, "array": array, "sampling_rate": sampling_rate}

104

/usr/lib/python3.6/site-packages/datasets/features/audio.py in _decode_non_mp3_path_like(self, path)

143

144 with xopen(path, "rb") as f:

--> 145 array, sampling_rate = librosa.load(f, sr=self.sampling_rate, mono=self.mono)

146 return array, sampling_rate

147

/usr/lib/python3.6/site-packages/librosa/core/audio.py in load(path, sr, mono, offset, duration, dtype, res_type)

110

111 y = []

--> 112 with audioread.audio_open(os.path.realpath(path)) as input_file:

113 sr_native = input_file.samplerate

114 n_channels = input_file.channels

/usr/lib/python3.6/posixpath.py in realpath(filename)

392 """Return the canonical path of the specified filename, eliminating any

393 symbolic links encountered in the path."""

--> 394 filename = os.fspath(filename)

395 path, ok = _joinrealpath(filename[:0], filename, {})

396 return abspath(path)

TypeError: expected str, bytes or os.PathLike object, not _io.BufferedReader

```
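The last frames of the traceback show `os.path.realpath` receiving an already opened file (`f`) rather than a path, which `os.fspath` rejects. The snippet below is only a minimal sketch of that failure mode using the standard library; it does not call `datasets` or `librosa`, and the helper name `describe_realpath` and the file name `sample.wav` are made up for illustration.

```python
import io
import os


def describe_realpath(target):
    """Return the resolved path, or the TypeError message if `target` is not path-like."""
    try:
        return os.path.realpath(target)
    except TypeError as exc:
        return f"TypeError: {exc}"


# A binary file handle, the same kind of object that `xopen(path, "rb")` yields in the traceback.
handle = io.BufferedReader(io.BytesIO(b"not real audio"))

print(describe_realpath(handle))        # file object: rejected by os.fspath
print(describe_realpath("sample.wav"))  # plain string path: accepted (the file need not exist)
```

This suggests the installed `librosa` treated its first argument strictly as a path, while `datasets` handed it a file object.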

## Expected results

```

>>> raw_datasets[0]["audio"]

{'array': array([-0.0005188 , -0.00109863, 0.00030518, ..., 0.01730347,

0.01623535, 0.01724243]),

'path': '/root/.cache/huggingface/datasets/downloads/extracted/bb3a06b491a64aff422f307cd8116820b4f61d6f32fcadcfc554617e84383cb7/bed/026290a7_nohash_0.wav',

'sampling_rate': 16000}

```

|

{

"url": "https://api.github.com/repos/huggingface/datasets/issues/3851/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

}

|

https://api.github.com/repos/huggingface/datasets/issues/3851/timeline

| null |

completed

|

https://api.github.com/repos/huggingface/datasets/issues/3848

|

https://api.github.com/repos/huggingface/datasets

|

https://api.github.com/repos/huggingface/datasets/issues/3848/labels{/name}

|

https://api.github.com/repos/huggingface/datasets/issues/3848/comments

|

https://api.github.com/repos/huggingface/datasets/issues/3848/events

|

https://github.com/huggingface/datasets/issues/3848

| 1,162,076,902 |

I_kwDODunzps5FQ-Lm

| 3,848 |

NonMatchingChecksumError when checksum is None

|

{

"login": "jxmorris12",

"id": 13238952,

"node_id": "MDQ6VXNlcjEzMjM4OTUy",

"avatar_url": "https://avatars.githubusercontent.com/u/13238952?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/jxmorris12",

"html_url": "https://github.com/jxmorris12",

"followers_url": "https://api.github.com/users/jxmorris12/followers",

"following_url": "https://api.github.com/users/jxmorris12/following{/other_user}",

"gists_url": "https://api.github.com/users/jxmorris12/gists{/gist_id}",

"starred_url": "https://api.github.com/users/jxmorris12/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/jxmorris12/subscriptions",

"organizations_url": "https://api.github.com/users/jxmorris12/orgs",

"repos_url": "https://api.github.com/users/jxmorris12/repos",

"events_url": "https://api.github.com/users/jxmorris12/events{/privacy}",

"received_events_url": "https://api.github.com/users/jxmorris12/received_events",

"type": "User",

"site_admin": false

}

|

[

{

"id": 1935892857,

"node_id": "MDU6TGFiZWwxOTM1ODkyODU3",

"url": "https://api.github.com/repos/huggingface/datasets/labels/bug",

"name": "bug",

"color": "d73a4a",

"default": true,

"description": "Something isn't working"

}

] |

closed

| false |

{

"login": "albertvillanova",

"id": 8515462,

"node_id": "MDQ6VXNlcjg1MTU0NjI=",

"avatar_url": "https://avatars.githubusercontent.com/u/8515462?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/albertvillanova",

"html_url": "https://github.com/albertvillanova",

"followers_url": "https://api.github.com/users/albertvillanova/followers",

"following_url": "https://api.github.com/users/albertvillanova/following{/other_user}",

"gists_url": "https://api.github.com/users/albertvillanova/gists{/gist_id}",

"starred_url": "https://api.github.com/users/albertvillanova/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/albertvillanova/subscriptions",

"organizations_url": "https://api.github.com/users/albertvillanova/orgs",

"repos_url": "https://api.github.com/users/albertvillanova/repos",

"events_url": "https://api.github.com/users/albertvillanova/events{/privacy}",

"received_events_url": "https://api.github.com/users/albertvillanova/received_events",

"type": "User",

"site_admin": false

}

|

[

{

"login": "albertvillanova",

"id": 8515462,

"node_id": "MDQ6VXNlcjg1MTU0NjI=",

"avatar_url": "https://avatars.githubusercontent.com/u/8515462?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/albertvillanova",

"html_url": "https://github.com/albertvillanova",

"followers_url": "https://api.github.com/users/albertvillanova/followers",

"following_url": "https://api.github.com/users/albertvillanova/following{/other_user}",

"gists_url": "https://api.github.com/users/albertvillanova/gists{/gist_id}",

"starred_url": "https://api.github.com/users/albertvillanova/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/albertvillanova/subscriptions",

"organizations_url": "https://api.github.com/users/albertvillanova/orgs",

"repos_url": "https://api.github.com/users/albertvillanova/repos",

"events_url": "https://api.github.com/users/albertvillanova/events{/privacy}",

"received_events_url": "https://api.github.com/users/albertvillanova/received_events",

"type": "User",

"site_admin": false

}

] | null |

[

"Hi @jxmorris12, thanks for reporting.\r\n\r\nThe objective of `verify_checksums` is to check that both checksums are equal. Therefore if one is None and the other is non-None, they are not equal, and the function accordingly raises a NonMatchingChecksumError. That behavior is expected.\r\n\r\nThe question is: how did you generate the expected checksum? Normally, it should not be None. To properly generate it (it is contained in the `dataset_infos.json` file), you should have run (see https://github.com/huggingface/datasets/blob/master/ADD_NEW_DATASET.md):\r\n```shell\r\ndatasets-cli test <your-dataset-folder> --save_infos --all_configs\r\n```\r\n\r\nOn the other hand, you should take into account that the generation of this file is NOT mandatory for personal/community datasets (we only require it for \"canonical\" datasets, i.e., datasets added to our library GitHub repository: https://github.com/huggingface/datasets/tree/master/datasets). Therefore, another option would be just to delete the `dataset_infos.json` file. If that file is not present, the function `verify_checksums` is not executed.\r\n\r\nFinally, you can circumvent the `verify_checksums` function by passing `ignore_verifications=True` to `load_dataset`:\r\n```python\r\nload_dataset(..., ignore_verifications=True)\r\n``` ",

"Thanks @albertvillanova!\r\n\r\nThat's fine. I did run that command when I was adding a new dataset. Maybe because the command crashed in the middle, the checksum wasn't stored properly. I don't know where the bug is happening. But either (i) `verify_checksums` should properly handle this edge case, where the passed checksum is None or (ii) the `datasets-cli test` shouldn't generate a corrupted dataset_infos.json file.\r\n\r\nJust a more high-level thing, I was trying to follow the instructions for adding a dataset in the CONTRIBUTING.md, so if running that command isn't even necessary, that should probably be mentioned in the document, right? But that's somewhat of a moot point, since something isn't working quite right internally if I was able to get into this corrupted state in the first place, just by following those instructions.",

"Hi @jxmorris12,\r\n\r\nDefinitely, your `dataset_infos.json` was corrupted (and wrongly contains an expected checksum of None). \r\n\r\nWhile we further investigate how this can happen and fix it, feel free to delete your `dataset_infos.json` file and recreate it with:\r\n```shell\r\ndatasets-cli test <your-dataset-folder> --save_infos --all_configs\r\n```\r\n\r\nAlso note that `verify_checksums` is working as expected: if it receives a None and a non-None checksum as its input pair, it must raise an exception: they are not equal. That is not a bug.",

"At a higher level, also note that we are preparing the release of `datasets` version 2.0, and some docs are being updated...\r\n\r\nIn order to add a dataset, I think the most updated instructions are in our official documentation pages: https://huggingface.co/docs/datasets/share",

"Thanks for the info. Maybe you can update the contributing.md if it's not up-to-date.",

"Hi @jxmorris12, we have found the bug that caused `None` checksums to wrongly appear when generating the `dataset_infos.json` file:\r\n- #3892\r\n\r\nThe fix will be available once this PR is merged. And we are planning to do our 2.0 release today.\r\n\r\nWe are also working on updating all our docs for our release today.",

"Thanks @albertvillanova - congrats on the release!"

] | 2022-03-08T00:24:12 | 2022-03-15T14:37:26 | 2022-03-15T12:28:23 |

CONTRIBUTOR

| null | null | null |

I ran into the following error when adding a new dataset:

```bash

expected_checksums = {'https://adversarialglue.github.io/dataset/dev.zip': {'checksum': None, 'num_bytes': 40662}}

recorded_checksums = {'https://adversarialglue.github.io/dataset/dev.zip': {'checksum': 'efb4cbd3aa4a87bfaffc310ae951981cc0a36c6c71c6425dd74e5b55f2f325c9', 'num_bytes': 40662}}

verification_name = 'dataset source files'

def verify_checksums(expected_checksums: Optional[dict], recorded_checksums: dict, verification_name=None):

if expected_checksums is None:

logger.info("Unable to verify checksums.")

return

if len(set(expected_checksums) - set(recorded_checksums)) > 0:

raise ExpectedMoreDownloadedFiles(str(set(expected_checksums) - set(recorded_checksums)))

if len(set(recorded_checksums) - set(expected_checksums)) > 0:

raise UnexpectedDownloadedFile(str(set(recorded_checksums) - set(expected_checksums)))

bad_urls = [url for url in expected_checksums if expected_checksums[url] != recorded_checksums[url]]

for_verification_name = " for " + verification_name if verification_name is not None else ""

if len(bad_urls) > 0:

error_msg = "Checksums didn't match" + for_verification_name + ":\n"

> raise NonMatchingChecksumError(error_msg + str(bad_urls))

E datasets.utils.info_utils.NonMatchingChecksumError: Checksums didn't match for dataset source files:

E ['https://adversarialglue.github.io/dataset/dev.zip']

src/datasets/utils/info_utils.py:40: NonMatchingChecksumError

```
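The comparison that triggers the error can be reproduced in isolation. The sketch below mirrors only the `bad_urls` step of the `verify_checksums` code shown above, using the two dictionaries from the traceback; `find_bad_urls` is a hypothetical helper written for this illustration and is not part of `datasets`.

```python
from typing import Optional

URL = "https://adversarialglue.github.io/dataset/dev.zip"

# Values copied from the traceback above.
expected_checksums = {URL: {"checksum": None, "num_bytes": 40662}}
recorded_checksums = {
    URL: {
        "checksum": "efb4cbd3aa4a87bfaffc310ae951981cc0a36c6c71c6425dd74e5b55f2f325c9",
        "num_bytes": 40662,
    }
}


def find_bad_urls(expected: Optional[dict], recorded: dict) -> list:
    """Hypothetical helper: list URLs whose expected and recorded entries differ."""
    if expected is None:
        # No expected checksums at all: nothing to verify.
        return []
    # Each entry is compared as a whole dict, so a None checksum on one side
    # and a real hash on the other counts as a mismatch.
    return [url for url in expected if expected[url] != recorded.get(url)]


bad_urls = find_bad_urls(expected_checksums, recorded_checksums)
print(bad_urls)
```

Because the entries are compared as plain dictionaries, a `None` checksum stored in `dataset_infos.json` can never match a real hash, so the URL is reported even though `num_bytes` agrees.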

## Expected results

The dataset downloads correctly, and there is no error.

## Actual results

Datasets library is looking for a checksum of None, and it gets a non-None checksum, and throws an error. This is clearly a bug.

|

{

"url": "https://api.github.com/repos/huggingface/datasets/issues/3848/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

}

|

https://api.github.com/repos/huggingface/datasets/issues/3848/timeline

| null |

completed

|

https://api.github.com/repos/huggingface/datasets/issues/3847

|

https://api.github.com/repos/huggingface/datasets

|

https://api.github.com/repos/huggingface/datasets/issues/3847/labels{/name}

|

https://api.github.com/repos/huggingface/datasets/issues/3847/comments

|

https://api.github.com/repos/huggingface/datasets/issues/3847/events

|

https://github.com/huggingface/datasets/issues/3847

| 1,161,856,417 |

I_kwDODunzps5FQIWh

| 3,847 |

Datasets' cache not re-used

|

{

"login": "gejinchen",

"id": 15106980,

"node_id": "MDQ6VXNlcjE1MTA2OTgw",

"avatar_url": "https://avatars.githubusercontent.com/u/15106980?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/gejinchen",

"html_url": "https://github.com/gejinchen",

"followers_url": "https://api.github.com/users/gejinchen/followers",

"following_url": "https://api.github.com/users/gejinchen/following{/other_user}",

"gists_url": "https://api.github.com/users/gejinchen/gists{/gist_id}",

"starred_url": "https://api.github.com/users/gejinchen/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/gejinchen/subscriptions",

"organizations_url": "https://api.github.com/users/gejinchen/orgs",

"repos_url": "https://api.github.com/users/gejinchen/repos",

"events_url": "https://api.github.com/users/gejinchen/events{/privacy}",

"received_events_url": "https://api.github.com/users/gejinchen/received_events",

"type": "User",

"site_admin": false

}

|

[

{

"id": 1935892857,

"node_id": "MDU6TGFiZWwxOTM1ODkyODU3",

"url": "https://api.github.com/repos/huggingface/datasets/labels/bug",

"name": "bug",

"color": "d73a4a",

"default": true,

"description": "Something isn't working"

}

] |

open

| false | null |

[] | null |

[

"<s>I think this is because the tokenizer is stateful and because the order in which the splits are processed is not deterministic. Because of that, the hash of the tokenizer may change for certain splits, which causes issues with caching.\r\n\r\nTo fix this we can try making the order of the splits deterministic for map.</s>",

"Actually this is not because of the order of the splits, but most likely because the tokenizer used to process the second split is in a state that has been modified by the first split.\r\n\r\nTherefore after reloading the first split from the cache, then the second split can't be reloaded since the tokenizer hasn't seen the first split (and therefore is considered a different tokenizer).\r\n\r\nThis is a bit trickier to fix, we can explore fixing this next week maybe",

"Sorry didn't have the bandwidth to take care of this yet - will re-assign when I'm diving into it again !",

"I had this issue with `run_speech_recognition_ctc.py` for wav2vec2.0 fine-tuning. I made a small change and the hash for the function (which includes tokenisation) is now the same before and after preprocessing. With the hash being the same, the caching works as intended.\r\n\r\nBefore:\r\n```\r\n def prepare_dataset(batch):\r\n # load audio\r\n sample = batch[audio_column_name]\r\n\r\n inputs = feature_extractor(sample[\"array\"], sampling_rate=sample[\"sampling_rate\"])\r\n batch[\"input_values\"] = inputs.input_values[0]\r\n batch[\"input_length\"] = len(batch[\"input_values\"])\r\n\r\n # encode targets\r\n additional_kwargs = {}\r\n if phoneme_language is not None:\r\n additional_kwargs[\"phonemizer_lang\"] = phoneme_language\r\n\r\n batch[\"labels\"] = tokenizer(batch[\"target_text\"], **additional_kwargs).input_ids\r\n\r\n return batch\r\n\r\n with training_args.main_process_first(desc=\"dataset map preprocessing\"):\r\n vectorized_datasets = raw_datasets.map(\r\n prepare_dataset,\r\n remove_columns=next(iter(raw_datasets.values())).column_names,\r\n num_proc=num_workers,\r\n desc=\"preprocess datasets\",\r\n )\r\n```\r\nAfter:\r\n```\r\n def prepare_dataset(batch, feature_extractor, tokenizer):\r\n # load audio\r\n sample = batch[audio_column_name]\r\n\r\n inputs = feature_extractor(sample[\"array\"], sampling_rate=sample[\"sampling_rate\"])\r\n batch[\"input_values\"] = inputs.input_values[0]\r\n batch[\"input_length\"] = len(batch[\"input_values\"])\r\n\r\n # encode targets\r\n additional_kwargs = {}\r\n if phoneme_language is not None:\r\n additional_kwargs[\"phonemizer_lang\"] = phoneme_language\r\n\r\n batch[\"labels\"] = tokenizer(batch[\"target_text\"], **additional_kwargs).input_ids\r\n\r\n return batch\r\n\r\n pd = lambda batch: prepare_dataset(batch, feature_extractor, tokenizer)\r\n\r\n with training_args.main_process_first(desc=\"dataset map preprocessing\"):\r\n vectorized_datasets = raw_datasets.map(\r\n pd,\r\n
remove_columns=next(iter(raw_datasets.values())).column_names,\r\n num_proc=num_workers,\r\n desc=\"preprocess datasets\",\r\n )\r\n```",

"Not sure why the second one would work and not the first one - they're basically the same with respect to hashing. In both cases the function is hashed recursively, and therefore the feature_extractor and the tokenizer are hashed the same way.\r\n\r\nWith which tokenizer or feature extractor are you experiencing this behavior ?\r\n\r\nDo you also experience this ?\r\n> Tokenization for some subsets are repeated at the 2nd and 3rd run. Starting from the 4th run, everything are loaded from cache.",

"Thanks ! Hopefully this can be useful to others, and also to better understand and improve hashing/caching ",

"`tokenizer.save_pretrained(training_args.output_dir)` produces a different tokenizer hash when loaded on restart of the script. When I was debugging before I was terminating the script prior to this command, then rerunning. \r\n\r\nI compared the tokenizer items on the first and second runs, there are two different items:\r\n1st:\r\n```\r\n('_additional_special_tokens', [AddedToken(\"<s>\", rstrip=False, lstrip=False, single_word=False, normalized=True), AddedToken(\"</s>\", rstrip=False, lstrip=False, single_word=False, normalized=True), AddedToken(\"<s>\", rstrip=False, lstrip=False, single_word=False, normalized=True), AddedToken(\"</s>\", rstrip=False, lstrip=False, single_word=False, normalized=True), AddedToken(\"<s>\", rstrip=False, lstrip=False, single_word=False, normalized=True), AddedToken(\"</s>\", rstrip=False, lstrip=False, single_word=False, normalized=True), AddedToken(\"<s>\", rstrip=False, lstrip=False, single_word=False, normalized=True), AddedToken(\"</s>\", rstrip=False, lstrip=False, single_word=False, normalized=True), AddedToken(\"<s>\", rstrip=False, lstrip=False, single_word=False, normalized=True), AddedToken(\"</s>\", rstrip=False, lstrip=False, single_word=False, normalized=True), AddedToken(\"<s>\", rstrip=False, lstrip=False, single_word=False, normalized=True), AddedToken(\"</s>\", rstrip=False, lstrip=False, single_word=False, normalized=True), AddedToken(\"<s>\", rstrip=False, lstrip=False, single_word=False, normalized=True), AddedToken(\"</s>\", rstrip=False, lstrip=False, single_word=False, normalized=True), AddedToken(\"<s>\", rstrip=False, lstrip=False, single_word=False, normalized=True), AddedToken(\"</s>\", rstrip=False, lstrip=False, single_word=False, normalized=True), AddedToken(\"<s>\", rstrip=False, lstrip=False, single_word=False, normalized=True), AddedToken(\"</s>\", rstrip=False, lstrip=False, single_word=False, normalized=True)])\r\n\r\n...\r\n\r\n('tokens_trie', <transformers.tokenization_utils.Trie object at 
0x7f4d6d0ddb38>)\r\n```\r\n\r\n2nd:\r\n```\r\n('_additional_special_tokens', [AddedToken(\"<s>\", rstrip=False, lstrip=False, single_word=False, normalized=True), AddedToken(\"</s>\", rstrip=False, lstrip=False, single_word=False, normalized=True), AddedToken(\"<s>\", rstrip=False, lstrip=False, single_word=False, normalized=True), AddedToken(\"</s>\", rstrip=False, lstrip=False, single_word=False, normalized=True), AddedToken(\"<s>\", rstrip=False, lstrip=False, single_word=False, normalized=True), AddedToken(\"</s>\", rstrip=False, lstrip=False, single_word=False, normalized=True), AddedToken(\"<s>\", rstrip=False, lstrip=False, single_word=False, normalized=True), AddedToken(\"</s>\", rstrip=False, lstrip=False, single_word=False, normalized=True), AddedToken(\"<s>\", rstrip=False, lstrip=False, single_word=False, normalized=True), AddedToken(\"</s>\", rstrip=False, lstrip=False, single_word=False, normalized=True), AddedToken(\"<s>\", rstrip=False, lstrip=False, single_word=False, normalized=True), AddedToken(\"</s>\", rstrip=False, lstrip=False, single_word=False, normalized=True), AddedToken(\"<s>\", rstrip=False, lstrip=False, single_word=False, normalized=True), AddedToken(\"</s>\", rstrip=False, lstrip=False, single_word=False, normalized=True), AddedToken(\"<s>\", rstrip=False, lstrip=False, single_word=False, normalized=True), AddedToken(\"</s>\", rstrip=False, lstrip=False, single_word=False, normalized=True), AddedToken(\"<s>\", rstrip=False, lstrip=False, single_word=False, normalized=True), AddedToken(\"</s>\", rstrip=False, lstrip=False, single_word=False, normalized=True), AddedToken(\"<s>\", rstrip=False, lstrip=False, single_word=False, normalized=True), AddedToken(\"</s>\", rstrip=False, lstrip=False, single_word=False, normalized=True)])\r\n\r\n...\r\n\r\n('tokens_trie', <transformers.tokenization_utils.Trie object at 0x7efc23dcce80>)\r\n```\r\n\r\n On every run of this the special tokens are being added on, and the hash is different on the 
`tokens_trie`. The increase in the special tokens category could be cleaned, but not sure about the hash for the `tokens_trie`. What might work is that the call for the tokenizer encoding can be translated into a function that strips any unnecessary information out, but that's a guess.\r\n",

"Thanks for investigating ! Does that mean that `save_pretrained`() produces non-deterministic tokenizers on disk ? Or is it `from_pretrained()` which is not deterministic given the same files on disk ?\r\n\r\nI think one way to fix this would be to make save/from_pretrained deterministic, or make the pickling of `transformers.tokenization_utils.Trie` objects deterministic (this could be implemented in `transformers`, but maybe let's discuss in an issue in `transformers` before opening a PR)",

"Late to the party but everything should be deterministic (afaik at least).\r\n\r\nBut `Trie` is a simple class object, so afaik its hash function is linked to its `id(self)`, so basically where it's stored in memory, so super highly non-deterministic. Could that be the issue ?",

"> But Trie is a simple class object, so afaik its hash function is linked to its id(self), so basically where it's stored in memory, so super highly non-deterministic. Could that be the issue ?\r\n\r\nWe're computing the hash of the pickle dump of the class so it should be fine, as long as the pickle dump is deterministic",

"I've ported wav2vec2.0 fine-tuning into Optimum-Graphcore which is where I found the issue. The majority of the script was copied from the Transformers version to keep it similar, [here is the tokenizer loading section from the source](https://github.com/huggingface/transformers/blob/f0982682bd6fd0b438dda79ec45f3a8fac83a985/examples/pytorch/speech-recognition/run_speech_recognition_ctc.py#L531).\r\n\r\nIn the last comment I have two loaded tokenizers, one from run 'N' of the script and one from 'N+1'. I think what's happening is that when you add special tokens (e.g. PAD and UNK) another AddedToken object is appended when tokenizer is saved regardless of whether special tokens are there already. \r\n\r\nIf there is a AddedTokens cleanup at load/save this could solve the issue, but then is Trie going to cause hash to be different? I'm not sure. ",

"Which Python version are you using ?\r\n\r\nThe trie is basically a big dict of dics, so deterministic nature depends on python version:\r\nhttps://stackoverflow.com/questions/2053021/is-the-order-of-a-python-dictionary-guaranteed-over-iterations\r\n\r\nMaybe the investigation is actually not finding the right culprit though (the memory id is changed, but `datasets` is not using that to compare, so maybe we need to be looking within `datasets` so see where the comparison fails)",

"Similar issue found on `BartTokenizer`. You can bypass the bug by loading a fresh new tokenizer everytime.\r\n\r\n```\r\n dataset = dataset.map(lambda x: tokenize_func(x, BartTokenizer.from_pretrained(xxx)),\r\n num_proc=num_proc, desc='Tokenize')\r\n```",

"Linking in https://github.com/huggingface/datasets/issues/6179#issuecomment-1701244673 with an explanation.",

"I got the same problem while using Wav2Vec2CTCTokenizer in a distributed experiment (many processes), and found that the problem was localized in the serialization (pickle dump) of the field `tokenizer.tokens_trie._tokens` (just a python set). I focussed into the set serialization and found it is not deterministic:\r\n\r\n```\r\nfrom datasets.fingerprint import Hasher\r\nfrom pickle import dumps,loads\r\n\r\n# used just once to get a serialized literal\r\n#print(dumps(set(\"abc\")))\r\nserialized = b'\\x80\\x04\\x95\\x11\\x00\\x00\\x00\\x00\\x00\\x00\\x00\\x8f\\x94(\\x8c\\x01a\\x94\\x8c\\x01c\\x94\\x8c\\x01b\\x94\\x90.'\r\n\r\nmyset = loads(serialized)\r\nprint(f'{myset=} {Hasher.hash(myset)}')\r\nprint(serialized == dumps(myset))\r\n```\r\n\r\nEvery time you run the python script (different processes) you get a random result. @lhoestq does it make any sense?",

"OK, I assume python's set is just a hash table implementation that uses internally the hash() function. The problem is that python's hash() is not deterministic. I believe that setting the environment variable PYTHONHASHSEED to a fixed value, you can force it to be deterministic. I tried it (file `set_pickle_dump.py`):\r\n\r\n```\r\n#!/usr/bin/python3\r\n\r\nfrom datasets.fingerprint import Hasher\r\nfrom pickle import dumps,loads\r\n\r\n# used just once to get a serialized literal (with environment variable PYTHONHASHSEED set to 42)\r\n#print(dumps(set(\"abc\")))\r\nserialized = b'\\x80\\x04\\x95\\x11\\x00\\x00\\x00\\x00\\x00\\x00\\x00\\x8f\\x94(\\x8c\\x01b\\x94\\x8c\\x01c\\x94\\x8c\\x01a\\x94\\x90.'\r\n\r\nmyset = loads(serialized)\r\nprint(f'{myset=} {Hasher.hash(myset)}')\r\nprint(serialized == dumps(myset))\r\n```\r\n\r\nand now every run (`PYTHONHASHSEED=42 ./set_pickle_dump.py`) gets tthe same result. I tried then to test it with the tokenizer (file `test_tokenizer.py`):\r\n\r\n```\r\n#!/usr/bin/python3\r\nfrom transformers import AutoTokenizer\r\nfrom datasets.fingerprint import Hasher\r\n\r\ntokenizer = AutoTokenizer.from_pretrained('model')\r\nprint(f'{type(tokenizer)=}')\r\nprint(f'{Hasher.hash(tokenizer)=}')\r\n```\r\n\r\nexecuted as `PYTHONHASHSEED=42 ./test_tokenizer.py` and now the tokenizer fingerprint is allways the same!\r\n",

"Thanks for reporting. I opened a PR here to propose a fix: https://github.com/huggingface/datasets/pull/6318 and doesn't require setting `PYTHONHASHSEED`\r\n\r\nCan you try to install `datasets` from this branch and tell me if it fixes the issue ?",

"I patched (*) the file `datasets/utils/py_utils.py` and cache is working propperly now. Thanks!\r\n\r\n(*): I am running my experiments inside a docker container that depends on `huggingface/transformers-pytorch-gpu:latest`, so pattched the file instead of rebuilding the container from scratch",

"Fixed by #6318.",

"The OP issue hasn't been fixed, re-opening",

"I think the Trie()._tokens of PreTrainedTokenizer need to be a sorted set So that the results of `hash_bytes(dumps(tokenizer))` are consistent every time",

"I believe the issue may be linked to [tokenization_utils.py#L507](https://github.com/huggingface/transformers/blob/main/src/transformers/tokenization_utils.py#L507),specifically in the line where self.tokens_trie.add(token.content) is called. The function _update_trie appears to modify an unordered set. Consequently, this line:\r\n`value = hash_bytes(dumps(tokenizer.tokens_trie._tokens))`\r\ncan lead to inconsistencies when rerunning the code.\r\n\r\nThis, in turn, results in inconsistent outputs for both `hash_bytes(dumps(function))` at [arrow_dataset.py#L3053](https://github.com/huggingface/datasets/blob/main/src/datasets/arrow_dataset.py#L3053) and\r\n`hasher.update(transform_args[key])` at [fingerprint.py#L323](https://github.com/huggingface/datasets/blob/main/src/datasets/fingerprint.py#L323)\r\n\r\n```\r\ndataset_kwargs = {\r\n \"shard\": raw_datasets,\r\n \"function\": tokenize_function,\r\n}\r\ntransform = format_transform_for_fingerprint(Dataset._map_single)\r\nkwargs_for_fingerprint = format_kwargs_for_fingerprint(Dataset._map_single, (), dataset_kwargs)\r\nkwargs_for_fingerprint[\"fingerprint_name\"] = \"new_fingerprint\"\r\nnew_fingerprint = update_fingerprint(raw_datasets._fingerprint, transform, kwargs_for_fingerprint)\r\n```\r\n",

"Alternatively, does the \"dumps\" function require separate processing for the set?",

"We did a fix that does sorting whenever we hash sets. The fix is available on `main` if you want to try it out. We'll do a new release soon :)",

"Is there a documentation chapter that discusses in which cases you should expect your dataset preprocessing to be cached. Including do's and don'ts for the preprocessing functions? I think Datasets team does amazing job at tacking this issue on their side, but it would be great to have some guidelines on the user side as well.\r\n\r\nIn our current project we have two cases (text-to-text classification and summarization) and in one of them the cache is sometimes reused when it's not supposed to be reused while in the other it's never used at all 😅",

"You can find some docs here :) \r\nhttps://huggingface.co/docs/datasets/about_cache"

] | 2022-03-07T19:55:15 | 2023-11-20T18:14:37 | null |

NONE

| null | null | null |

## Describe the bug

For most tokenizers I have tested (e.g. the RoBERTa tokenizer), the data preprocessing cache is not fully reused in the first few runs, even though the `.arrow` cache files are present in the cache directory.

## Steps to reproduce the bug

Here is a reproducer. The GPT2 tokenizer works perfectly with caching, but not the RoBERTa tokenizer in this example.

```python

from datasets import load_dataset

from transformers import AutoTokenizer

raw_datasets = load_dataset("wikitext", "wikitext-2-raw-v1")

# tokenizer = AutoTokenizer.from_pretrained("gpt2")

tokenizer = AutoTokenizer.from_pretrained("roberta-base")

text_column_name = "text"

column_names = raw_datasets["train"].column_names

def tokenize_function(examples):

    return tokenizer(examples[text_column_name], return_special_tokens_mask=True)

tokenized_datasets = raw_datasets.map(

tokenize_function,

batched=True,

remove_columns=column_names,

load_from_cache_file=True,

desc="Running tokenizer on every text in dataset",

)

```

## Expected results

No tokenization should be required after the first run. Everything should be loaded from the cache.

## Actual results

Tokenization for some subsets is repeated on the 2nd and 3rd runs. Starting from the 4th run, everything is loaded from the cache.

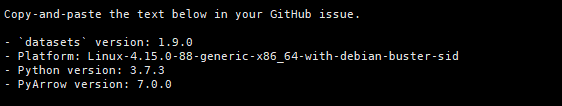
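
For readers trying to see why a cache fingerprint could differ between runs, here is a minimal standard-library sketch of one plausible mechanism raised in the discussion of this issue: a `set` has no guaranteed iteration order, so serializing it directly may produce different bytes in different processes. Sorting the elements first gives a canonical form. The helper name `stable_set_hash` and the sample tokens are hypothetical, and this is not the `datasets` implementation.

```python
import hashlib
import pickle


def stable_set_hash(items):
    """Hash a set through its sorted elements so iteration order cannot matter."""
    # Sorting yields a canonical order; this assumes the elements are mutually comparable.
    canonical = sorted(items)
    return hashlib.sha256(pickle.dumps(canonical)).hexdigest()


# Same elements, built in a different order (hypothetical special tokens).
tokens_a = {"<s>", "</s>", "<pad>", "<unk>"}
tokens_b = {"<unk>", "<pad>", "</s>", "<s>"}

# Equal sets give equal hashes, whatever order each set iterates in.
print(stable_set_hash(tokens_a) == stable_set_hash(tokens_b))
```

The sorted list is only a stand-in for whatever canonical form a real hasher would use; the point is that the hash input no longer depends on per-process string hashing.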

## Environment info

<!-- You can run the command `datasets-cli env` and copy-and-paste its output below. -->

- `datasets` version: 1.18.3

- Platform: Ubuntu 18.04.6 LTS

- Python version: 3.6.9

- PyArrow version: 6.0.1

|

{

"url": "https://api.github.com/repos/huggingface/datasets/issues/3847/reactions",

"total_count": 1,

"+1": 1,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

}

|

https://api.github.com/repos/huggingface/datasets/issues/3847/timeline

| null |

reopened

|

https://api.github.com/repos/huggingface/datasets/issues/3841

|

https://api.github.com/repos/huggingface/datasets

|

https://api.github.com/repos/huggingface/datasets/issues/3841/labels{/name}

|

https://api.github.com/repos/huggingface/datasets/issues/3841/comments

|

https://api.github.com/repos/huggingface/datasets/issues/3841/events

|

https://github.com/huggingface/datasets/issues/3841

| 1,161,203,842 |

I_kwDODunzps5FNpCC

| 3,841 |

Pyright reportPrivateImportUsage when `from datasets import load_dataset`

|

{

"login": "lkhphuc",

"id": 12573521,

"node_id": "MDQ6VXNlcjEyNTczNTIx",

"avatar_url": "https://avatars.githubusercontent.com/u/12573521?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/lkhphuc",

"html_url": "https://github.com/lkhphuc",

"followers_url": "https://api.github.com/users/lkhphuc/followers",

"following_url": "https://api.github.com/users/lkhphuc/following{/other_user}",

"gists_url": "https://api.github.com/users/lkhphuc/gists{/gist_id}",

"starred_url": "https://api.github.com/users/lkhphuc/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/lkhphuc/subscriptions",

"organizations_url": "https://api.github.com/users/lkhphuc/orgs",

"repos_url": "https://api.github.com/users/lkhphuc/repos",

"events_url": "https://api.github.com/users/lkhphuc/events{/privacy}",

"received_events_url": "https://api.github.com/users/lkhphuc/received_events",

"type": "User",

"site_admin": false

}

|

[

{

"id": 1935892857,

"node_id": "MDU6TGFiZWwxOTM1ODkyODU3",

"url": "https://api.github.com/repos/huggingface/datasets/labels/bug",

"name": "bug",

"color": "d73a4a",

"default": true,

"description": "Something isn't working"

}

] |

closed

| false | null |

[] | null |

[

"Hi! \r\n\r\nThis issue stems from `datasets` having `py.typed` defined (see https://github.com/microsoft/pyright/discussions/3764#discussioncomment-3282142) - to avoid it, we would either have to remove `py.typed` (added to be compliant with PEP-561) or export the names with `__all__`/`from .submodule import name as name`.\r\n\r\nTransformers is fine as it no longer has `py.typed` (removed in https://github.com/huggingface/transformers/pull/18485)\r\n\r\nWDYT @lhoestq @albertvillanova @polinaeterna \r\n\r\n@sgugger's point makes sense - we should either be \"properly typed\" (have py.typed + mypy tests) or drop `py.typed` as Transformers did (I like this option better).\r\n\r\n(cc @Wauplin since `huggingface_hub` has the same issue.)",

"I'm fine with dropping it, but autotrain people won't be happy @SBrandeis ",

"> (cc @Wauplin since huggingface_hub has the same issue.)\r\n\r\nHmm maybe we have the same issue but I haven't been able to reproduce something similar to `\"load_dataset\" is not exported from module \"datasets\"` message (using VSCode+Pylance -that is powered by Pyright). `huggingface_hub` contains a `py.typed` file but the package itself is actually typed. We are running `mypy` in our CI tests since ~3 months and so far it seems to be ok. But happy to change if it causes some issues with linters.\r\n\r\nAlso the top-level [`__init__.py`](https://github.com/huggingface/huggingface_hub/blob/main/src/huggingface_hub/__init__.py) is quite different in `hfh` than `datasets` (at first glance). We have a section at the bottom to import all high level methods/classes in a `if TYPE_CHECKING` block.",

"@Wauplin I only get the error if I use Pyright's CLI tool or the Pyright extension (not sure why, but Pylance also doesn't report this issue on my machine)\r\n\r\n> Also the top-level [`__init__.py`](https://github.com/huggingface/huggingface_hub/blob/main/src/huggingface_hub/__init__.py) is quite different in `hfh` than `datasets` (at first glance). We have a section at the bottom to import all high level methods/classes in a `if TYPE_CHECKING` block.\r\n\r\nI tried to fix the issue with `TYPE_CHECKING`, but it still fails if `py.typed` is present.",

"@mariosasko thank for the tip. I have been able to reproduce the issue as well. I would be up for including a (huge) static `__all__` variable in the `__init__.py` (since the file is already generated automatically in `hfh`) but honestly I don't think it's worth the hassle. \r\n\r\nI'll delete the `py.typed` file in `huggingface_hub` to be consistent between HF libraries. I opened a PR here: https://github.com/huggingface/huggingface_hub/pull/1329",

"I am getting this error in google colab today:\r\n\r\n\r\n\r\nThe code runs just fine too."

] | 2022-03-07T10:24:04 | 2023-02-18T19:14:03 | 2023-02-13T13:48:41 |

CONTRIBUTOR

| null | null | null |

## Describe the bug

Pyright complains that `load_dataset` is not exported from the `datasets` module.

## Steps to reproduce the bug

Use an editor/IDE with Pyright Language server with default configuration:

```python

from datasets import load_dataset

```

## Expected results

No complaint from Pyright.

## Actual results

Pyright reports the following:

```

`load_dataset` is not exported from module "datasets"

Import from "datasets.load" instead [reportPrivateImportUsage]

```

Importing from `datasets.load` does indeed solve the problem, but I believe importing directly from the top-level `datasets` package is the intended usage per the documentation.
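
As an illustration only, the sketch below builds a throwaway package (the name `demo_pkg` and its contents are hypothetical) whose `__init__.py` re-exports a name explicitly, using both the redundant-alias form `from .load import load_dataset as load_dataset` and `__all__`. The assumption here is that type checkers treat these forms as public re-exports in a package that ships `py.typed`. The script only checks runtime behaviour; it does not run Pyright.

```python
import importlib
import sys
import tempfile
from pathlib import Path

# Create a temporary package that mimics a typed library layout.
root = Path(tempfile.mkdtemp())
pkg = root / "demo_pkg"
pkg.mkdir()
(pkg / "py.typed").write_text("")  # PEP 561 marker file
(pkg / "load.py").write_text(
    "def load_dataset(name):\n"
    "    return f'loaded {name}'\n"
)
(pkg / "__init__.py").write_text(
    # Redundant alias plus __all__: two explicit ways to mark a re-export.
    "from .load import load_dataset as load_dataset\n"
    "__all__ = ['load_dataset']\n"
)

# Import the package from the temporary directory and use the top-level name.
sys.path.insert(0, str(root))
demo_pkg = importlib.import_module("demo_pkg")
print(demo_pkg.__all__)
print(demo_pkg.load_dataset("wikitext"))
```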

## Environment info

- `datasets` version: 1.18.3

- Platform: macOS-12.2.1-arm64-arm-64bit

- Python version: 3.9.10

- PyArrow version: 7.0.0

|

{

"url": "https://api.github.com/repos/huggingface/datasets/issues/3841/reactions",

"total_count": 3,

"+1": 3,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

}

|

https://api.github.com/repos/huggingface/datasets/issues/3841/timeline

| null |

completed

|

https://api.github.com/repos/huggingface/datasets/issues/3839

|

https://api.github.com/repos/huggingface/datasets

|

https://api.github.com/repos/huggingface/datasets/issues/3839/labels{/name}

|

https://api.github.com/repos/huggingface/datasets/issues/3839/comments

|

https://api.github.com/repos/huggingface/datasets/issues/3839/events

|

https://github.com/huggingface/datasets/issues/3839

| 1,161,183,482 |

I_kwDODunzps5FNkD6

| 3,839 |

CI is broken for Windows

|

{

"login": "albertvillanova",

"id": 8515462,

"node_id": "MDQ6VXNlcjg1MTU0NjI=",

"avatar_url": "https://avatars.githubusercontent.com/u/8515462?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/albertvillanova",

"html_url": "https://github.com/albertvillanova",

"followers_url": "https://api.github.com/users/albertvillanova/followers",

"following_url": "https://api.github.com/users/albertvillanova/following{/other_user}",

"gists_url": "https://api.github.com/users/albertvillanova/gists{/gist_id}",

"starred_url": "https://api.github.com/users/albertvillanova/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/albertvillanova/subscriptions",

"organizations_url": "https://api.github.com/users/albertvillanova/orgs",

"repos_url": "https://api.github.com/users/albertvillanova/repos",

"events_url": "https://api.github.com/users/albertvillanova/events{/privacy}",

"received_events_url": "https://api.github.com/users/albertvillanova/received_events",

"type": "User",

"site_admin": false

}

|

[

{

"id": 1935892857,

"node_id": "MDU6TGFiZWwxOTM1ODkyODU3",

"url": "https://api.github.com/repos/huggingface/datasets/labels/bug",

"name": "bug",

"color": "d73a4a",

"default": true,

"description": "Something isn't working"

}

] |

closed

| false |

{

"login": "albertvillanova",

"id": 8515462,

"node_id": "MDQ6VXNlcjg1MTU0NjI=",

"avatar_url": "https://avatars.githubusercontent.com/u/8515462?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/albertvillanova",

"html_url": "https://github.com/albertvillanova",

"followers_url": "https://api.github.com/users/albertvillanova/followers",

"following_url": "https://api.github.com/users/albertvillanova/following{/other_user}",

"gists_url": "https://api.github.com/users/albertvillanova/gists{/gist_id}",

"starred_url": "https://api.github.com/users/albertvillanova/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/albertvillanova/subscriptions",

"organizations_url": "https://api.github.com/users/albertvillanova/orgs",

"repos_url": "https://api.github.com/users/albertvillanova/repos",

"events_url": "https://api.github.com/users/albertvillanova/events{/privacy}",

"received_events_url": "https://api.github.com/users/albertvillanova/received_events",

"type": "User",

"site_admin": false

}

|

[

{

"login": "albertvillanova",

"id": 8515462,

"node_id": "MDQ6VXNlcjg1MTU0NjI=",

"avatar_url": "https://avatars.githubusercontent.com/u/8515462?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/albertvillanova",

"html_url": "https://github.com/albertvillanova",

"followers_url": "https://api.github.com/users/albertvillanova/followers",

"following_url": "https://api.github.com/users/albertvillanova/following{/other_user}",

"gists_url": "https://api.github.com/users/albertvillanova/gists{/gist_id}",

"starred_url": "https://api.github.com/users/albertvillanova/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/albertvillanova/subscriptions",

"organizations_url": "https://api.github.com/users/albertvillanova/orgs",

"repos_url": "https://api.github.com/users/albertvillanova/repos",

"events_url": "https://api.github.com/users/albertvillanova/events{/privacy}",

"received_events_url": "https://api.github.com/users/albertvillanova/received_events",

"type": "User",

"site_admin": false

}

] | null |

[] | 2022-03-07T10:06:42 | 2022-05-20T14:13:43 | 2022-03-07T10:07:24 |

MEMBER

| null | null | null |

## Describe the bug

See: https://app.circleci.com/pipelines/github/huggingface/datasets/10292/workflows/83de4a55-bff7-43ec-96f7-0c335af5c050/jobs/63355

```

___________________ test_datasetdict_from_text_split[test] ____________________

[gw0] win32 -- Python 3.7.11 C:\tools\miniconda3\envs\py37\python.exe

split = 'test'

text_path = 'C:\\Users\\circleci\\AppData\\Local\\Temp\\pytest-of-circleci\\pytest-0\\popen-gw0\\data6\\dataset.txt'

tmp_path = WindowsPath('C:/Users/circleci/AppData/Local/Temp/pytest-of-circleci/pytest-0/popen-gw0/test_datasetdict_from_text_spl7')

@pytest.mark.parametrize("split", [None, NamedSplit("train"), "train", "test"])

def test_datasetdict_from_text_split(split, text_path, tmp_path):

if split:

path = {split: text_path}

else:

split = "train"

path = {"train": text_path, "test": text_path}

cache_dir = tmp_path / "cache"

expected_features = {"text": "string"}

> dataset = TextDatasetReader(path, cache_dir=cache_dir).read()

tests\io\test_text.py:118:

_ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _

C:\tools\miniconda3\envs\py37\lib\site-packages\datasets\io\text.py:43: in read

use_auth_token=use_auth_token,

C:\tools\miniconda3\envs\py37\lib\site-packages\datasets\builder.py:588: in download_and_prepare

self._download_prepared_from_hf_gcs(dl_manager.download_config)

C:\tools\miniconda3\envs\py37\lib\site-packages\datasets\builder.py:630: in _download_prepared_from_hf_gcs

reader.download_from_hf_gcs(download_config, relative_data_dir)

C:\tools\miniconda3\envs\py37\lib\site-packages\datasets\arrow_reader.py:260: in download_from_hf_gcs

downloaded_dataset_info = cached_path(remote_dataset_info.replace(os.sep, "/"))

C:\tools\miniconda3\envs\py37\lib\site-packages\datasets\utils\file_utils.py:301: in cached_path

download_desc=download_config.download_desc,

C:\tools\miniconda3\envs\py37\lib\site-packages\datasets\utils\file_utils.py:560: in get_from_cache

headers=headers,

C:\tools\miniconda3\envs\py37\lib\site-packages\datasets\utils\file_utils.py:476: in http_head

max_retries=max_retries,

C:\tools\miniconda3\envs\py37\lib\site-packages\datasets\utils\file_utils.py:397: in _request_with_retry

response = requests.request(method=method.upper(), url=url, timeout=timeout, **params)

C:\tools\miniconda3\envs\py37\lib\site-packages\requests\api.py:61: in request

return session.request(method=method, url=url, **kwargs)

C:\tools\miniconda3\envs\py37\lib\site-packages\requests\sessions.py:529: in request

resp = self.send(prep, **send_kwargs)

C:\tools\miniconda3\envs\py37\lib\site-packages\requests\sessions.py:645: in send

r = adapter.send(request, **kwargs)

C:\tools\miniconda3\envs\py37\lib\site-packages\responses\__init__.py:840: in unbound_on_send

return self._on_request(adapter, request, *a, **kwargs)

C:\tools\miniconda3\envs\py37\lib\site-packages\responses\__init__.py:780: in _on_request

match, match_failed_reasons = self._find_match(request)

_ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _ _

self = <responses.RequestsMock object at 0x000002048AD70588>

request = <PreparedRequest [HEAD]>

def _find_first_match(self, request):

match_failed_reasons = []

> for i, match in enumerate(self._matches):

E AttributeError: 'RequestsMock' object has no attribute '_matches'

C:\tools\miniconda3\envs\py37\lib\site-packages\moto\core\models.py:289: AttributeError

```

|

{

"url": "https://api.github.com/repos/huggingface/datasets/issues/3839/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

}

|

https://api.github.com/repos/huggingface/datasets/issues/3839/timeline

| null |

completed

|

https://api.github.com/repos/huggingface/datasets/issues/3838

|

https://api.github.com/repos/huggingface/datasets

|

https://api.github.com/repos/huggingface/datasets/issues/3838/labels{/name}

|

https://api.github.com/repos/huggingface/datasets/issues/3838/comments

|

https://api.github.com/repos/huggingface/datasets/issues/3838/events

|

https://github.com/huggingface/datasets/issues/3838

| 1,161,137,406 |

I_kwDODunzps5FNYz-

| 3,838 |

Add a data type for labeled images (image segmentation)

|

{

"login": "severo",

"id": 1676121,

"node_id": "MDQ6VXNlcjE2NzYxMjE=",

"avatar_url": "https://avatars.githubusercontent.com/u/1676121?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/severo",

"html_url": "https://github.com/severo",

"followers_url": "https://api.github.com/users/severo/followers",

"following_url": "https://api.github.com/users/severo/following{/other_user}",

"gists_url": "https://api.github.com/users/severo/gists{/gist_id}",

"starred_url": "https://api.github.com/users/severo/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/severo/subscriptions",

"organizations_url": "https://api.github.com/users/severo/orgs",

"repos_url": "https://api.github.com/users/severo/repos",

"events_url": "https://api.github.com/users/severo/events{/privacy}",

"received_events_url": "https://api.github.com/users/severo/received_events",

"type": "User",

"site_admin": false

}

|

[

{

"id": 1935892871,

"node_id": "MDU6TGFiZWwxOTM1ODkyODcx",

"url": "https://api.github.com/repos/huggingface/datasets/labels/enhancement",

"name": "enhancement",

"color": "a2eeef",

"default": true,

"description": "New feature or request"

}

] |

open

| false |

{

"login": "mariosasko",

"id": 47462742,

"node_id": "MDQ6VXNlcjQ3NDYyNzQy",

"avatar_url": "https://avatars.githubusercontent.com/u/47462742?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/mariosasko",

"html_url": "https://github.com/mariosasko",

"followers_url": "https://api.github.com/users/mariosasko/followers",

"following_url": "https://api.github.com/users/mariosasko/following{/other_user}",

"gists_url": "https://api.github.com/users/mariosasko/gists{/gist_id}",

"starred_url": "https://api.github.com/users/mariosasko/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/mariosasko/subscriptions",

"organizations_url": "https://api.github.com/users/mariosasko/orgs",

"repos_url": "https://api.github.com/users/mariosasko/repos",

"events_url": "https://api.github.com/users/mariosasko/events{/privacy}",

"received_events_url": "https://api.github.com/users/mariosasko/received_events",

"type": "User",

"site_admin": false

}

|

[

{

"login": "mariosasko",

"id": 47462742,

"node_id": "MDQ6VXNlcjQ3NDYyNzQy",

"avatar_url": "https://avatars.githubusercontent.com/u/47462742?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/mariosasko",

"html_url": "https://github.com/mariosasko",

"followers_url": "https://api.github.com/users/mariosasko/followers",

"following_url": "https://api.github.com/users/mariosasko/following{/other_user}",

"gists_url": "https://api.github.com/users/mariosasko/gists{/gist_id}",

"starred_url": "https://api.github.com/users/mariosasko/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/mariosasko/subscriptions",

"organizations_url": "https://api.github.com/users/mariosasko/orgs",

"repos_url": "https://api.github.com/users/mariosasko/repos",

"events_url": "https://api.github.com/users/mariosasko/events{/privacy}",

"received_events_url": "https://api.github.com/users/mariosasko/received_events",

"type": "User",

"site_admin": false

}

] | null |

[] | 2022-03-07T09:38:15 | 2022-04-10T13:34:59 | null |

CONTRIBUTOR

| null | null | null |

It might be a mix of Image and ClassLabel, and the color palette might be generated automatically.

---

### Example

Every pixel in the images of the annotation column (in https://huggingface.co/datasets/scene_parse_150) has a value that gives its class, and the dataset itself is associated with a color palette (e.g. https://github.com/open-mmlab/mmsegmentation/blob/98a353b674c6052d319e7de4e5bcd65d670fcf84/mmseg/datasets/ade.py#L47) that maps every class to a color.

So we might want to render the image as a colored image instead of a black and white one.
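
A minimal sketch of the idea, in plain Python without any imaging library: each class id in the annotation mask is looked up in a palette to produce an RGB pixel. The function name `colorize`, the three-entry palette, and the tiny mask are all hypothetical placeholders, not part of any existing `datasets` feature.

```python
def colorize(mask, palette):
    """Map a 2D grid of class ids to a 2D grid of RGB tuples."""
    return [[palette[class_id] for class_id in row] for row in mask]


# Hypothetical palette: index = class id, value = (R, G, B).
palette = [(0, 0, 0), (120, 120, 120), (180, 120, 120)]

# Hypothetical 2x3 annotation mask where each value is a class id.
mask = [[0, 1, 1],
        [2, 2, 0]]

colored = colorize(mask, palette)
for row in colored:
    print(row)
```

A dedicated feature type could store the palette alongside the class names so that a viewer can apply this lookup automatically.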

<img width="785" alt="156741519-fbae6844-2606-4c28-837e-279d83d00865" src="https://user-images.githubusercontent.com/1676121/157005263-7058c584-2b70-465a-ad94-8a982f726cf4.png">

See https://github.com/tensorflow/datasets/blob/master/tensorflow_datasets/core/features/labeled_image.py for a reference implementation in TensorFlow Datasets.

|

{

"url": "https://api.github.com/repos/huggingface/datasets/issues/3838/reactions",

"total_count": 1,

"+1": 1,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

}

|

https://api.github.com/repos/huggingface/datasets/issues/3838/timeline

| null | null |

https://api.github.com/repos/huggingface/datasets/issues/3835

|

https://api.github.com/repos/huggingface/datasets

|

https://api.github.com/repos/huggingface/datasets/issues/3835/labels{/name}

|

https://api.github.com/repos/huggingface/datasets/issues/3835/comments

|

https://api.github.com/repos/huggingface/datasets/issues/3835/events

|

https://github.com/huggingface/datasets/issues/3835

| 1,161,029,205 |

I_kwDODunzps5FM-ZV

| 3,835 |

The link given on the gigaword does not work

|

{

"login": "martin6336",

"id": 26357784,

"node_id": "MDQ6VXNlcjI2MzU3Nzg0",

"avatar_url": "https://avatars.githubusercontent.com/u/26357784?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/martin6336",

"html_url": "https://github.com/martin6336",

"followers_url": "https://api.github.com/users/martin6336/followers",

"following_url": "https://api.github.com/users/martin6336/following{/other_user}",

"gists_url": "https://api.github.com/users/martin6336/gists{/gist_id}",

"starred_url": "https://api.github.com/users/martin6336/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/martin6336/subscriptions",

"organizations_url": "https://api.github.com/users/martin6336/orgs",

"repos_url": "https://api.github.com/users/martin6336/repos",

"events_url": "https://api.github.com/users/martin6336/events{/privacy}",

"received_events_url": "https://api.github.com/users/martin6336/received_events",

"type": "User",

"site_admin": false

}

|

[

{

"id": 1935892857,

"node_id": "MDU6TGFiZWwxOTM1ODkyODU3",

"url": "https://api.github.com/repos/huggingface/datasets/labels/bug",

"name": "bug",

"color": "d73a4a",

"default": true,

"description": "Something isn't working"

}

] |

closed

| false | null |

[] | null |

[] | 2022-03-07T07:56:42 | 2022-03-15T12:30:23 | 2022-03-15T12:30:23 |

NONE

| null | null | null |

## Dataset viewer issue for '*name of the dataset*'

**Link:** *link to the dataset viewer page*

*short description of the issue*

Am I the one who added this dataset ? Yes-No

|

{

"url": "https://api.github.com/repos/huggingface/datasets/issues/3835/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

}

|

https://api.github.com/repos/huggingface/datasets/issues/3835/timeline

| null |

completed

|

https://api.github.com/repos/huggingface/datasets/issues/3832

|

https://api.github.com/repos/huggingface/datasets

|

https://api.github.com/repos/huggingface/datasets/issues/3832/labels{/name}

|

https://api.github.com/repos/huggingface/datasets/issues/3832/comments

|

https://api.github.com/repos/huggingface/datasets/issues/3832/events

|

https://github.com/huggingface/datasets/issues/3832

| 1,160,503,446 |

I_kwDODunzps5FK-CW

| 3,832 |

Making Hugging Face the place to go for Graph NNs datasets

|

{

"login": "omarespejel",

"id": 4755430,

"node_id": "MDQ6VXNlcjQ3NTU0MzA=",

"avatar_url": "https://avatars.githubusercontent.com/u/4755430?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/omarespejel",

"html_url": "https://github.com/omarespejel",

"followers_url": "https://api.github.com/users/omarespejel/followers",

"following_url": "https://api.github.com/users/omarespejel/following{/other_user}",

"gists_url": "https://api.github.com/users/omarespejel/gists{/gist_id}",

"starred_url": "https://api.github.com/users/omarespejel/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/omarespejel/subscriptions",

"organizations_url": "https://api.github.com/users/omarespejel/orgs",

"repos_url": "https://api.github.com/users/omarespejel/repos",

"events_url": "https://api.github.com/users/omarespejel/events{/privacy}",

"received_events_url": "https://api.github.com/users/omarespejel/received_events",

"type": "User",

"site_admin": false

}

|

[

{

"id": 2067376369,

"node_id": "MDU6TGFiZWwyMDY3Mzc2MzY5",

"url": "https://api.github.com/repos/huggingface/datasets/labels/dataset%20request",

"name": "dataset request",

"color": "e99695",

"default": false,

"description": "Requesting to add a new dataset"

},

{

"id": 3898693527,

"node_id": "LA_kwDODunzps7oYVeX",

"url": "https://api.github.com/repos/huggingface/datasets/labels/graph",

"name": "graph",

"color": "7AFCAA",

"default": false,

"description": "Datasets for Graph Neural Networks"

}

] |

open

| false | null |

[] | null |

[

"It will be indeed really great to add support to GNN datasets. Big :+1: for this initiative.",

"@napoles-uach identifies the [TUDatasets](https://chrsmrrs.github.io/datasets/) (A collection of benchmark datasets for graph classification and regression). \r\n\r\nAdded to the Tasks in the initial issue.",

"Thanks Omar, that is a great collection!",

"Great initiative! Let's keep this issue for these 3 datasets, but moving forward maybe let's create a new issue per dataset :rocket: great work @napoles-uach and @omarespejel!"

] | 2022-03-06T03:02:58 | 2022-03-14T07:45:38 | null |

NONE

| null | null | null |

Let's make Hugging Face Datasets the central hub for GNN datasets :)

**Motivation**. Datasets are currently quite scattered and an open-source central point such as the Hugging Face Hub would be ideal to support the growth of the GNN field.

What are some datasets worth integrating into the Hugging Face Hub?

Instructions to add a new dataset can be found [here](https://github.com/huggingface/datasets/blob/master/ADD_NEW_DATASET.md).

Special thanks to @napoles-uach for his collaboration on identifying the first ones:

- [ ] [SNAP-Stanford OGB Datasets](https://github.com/snap-stanford/ogb).

- [ ] [SNAP-Stanford Pretrained GNNs Chemistry and Biology Datasets](https://github.com/snap-stanford/pretrain-gnns).

- [ ] [TUDatasets](https://chrsmrrs.github.io/datasets/) (A collection of benchmark datasets for graph classification and regression)

cc @osanseviero

|

{

"url": "https://api.github.com/repos/huggingface/datasets/issues/3832/reactions",

"total_count": 5,

"+1": 1,

"-1": 0,

"laugh": 0,

"hooray": 2,

"confused": 0,

"heart": 2,

"rocket": 0,

"eyes": 0

}

|

https://api.github.com/repos/huggingface/datasets/issues/3832/timeline

| null | null |

https://api.github.com/repos/huggingface/datasets/issues/3831

|

https://api.github.com/repos/huggingface/datasets

|

https://api.github.com/repos/huggingface/datasets/issues/3831/labels{/name}

|

https://api.github.com/repos/huggingface/datasets/issues/3831/comments

|

https://api.github.com/repos/huggingface/datasets/issues/3831/events

|

https://github.com/huggingface/datasets/issues/3831

| 1,160,501,000 |

I_kwDODunzps5FK9cI

| 3,831 |

when using to_tf_dataset with shuffle is true, not all completed batches are made

|

{

"login": "greenned",

"id": 42107709,

"node_id": "MDQ6VXNlcjQyMTA3NzA5",

"avatar_url": "https://avatars.githubusercontent.com/u/42107709?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/greenned",

"html_url": "https://github.com/greenned",

"followers_url": "https://api.github.com/users/greenned/followers",

"following_url": "https://api.github.com/users/greenned/following{/other_user}",

"gists_url": "https://api.github.com/users/greenned/gists{/gist_id}",

"starred_url": "https://api.github.com/users/greenned/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/greenned/subscriptions",

"organizations_url": "https://api.github.com/users/greenned/orgs",

"repos_url": "https://api.github.com/users/greenned/repos",

"events_url": "https://api.github.com/users/greenned/events{/privacy}",

"received_events_url": "https://api.github.com/users/greenned/received_events",

"type": "User",

"site_admin": false

}

|

[

{

"id": 1935892857,

"node_id": "MDU6TGFiZWwxOTM1ODkyODU3",

"url": "https://api.github.com/repos/huggingface/datasets/labels/bug",

"name": "bug",

"color": "d73a4a",

"default": true,

"description": "Something isn't working"

}

] |

closed

| false | null |

[] | null |

[

"Maybe @Rocketknight1 can help here",

"Hi @greenned, this is expected behaviour for `to_tf_dataset`. By default, we drop the smaller 'remainder' batch during training (i.e. when `shuffle=True`). If you really want to keep that batch, you can set `drop_remainder=False` when calling `to_tf_dataset()`.",

"@Rocketknight1 Oh, thank you. I didn't get **drop_remainder** Have a nice day!",

"No problem!\r\n"

] | 2022-03-06T02:43:50 | 2022-03-08T15:18:56 | 2022-03-08T15:18:56 |

NONE

| null | null | null |

## Describe the bug

When converting a dataset to a `tf.data.Dataset` with `to_tf_dataset` and `shuffle=True`, the remaining rows that do not fill a complete batch are not returned as a final, smaller batch.

## Steps to reproduce the bug

The sample code is in this Colab notebook:

https://colab.research.google.com/drive/1_oRXWsR38ElO1EYF9ayFoCU7Ou1AAej4?usp=sharing

## Expected results

Regardless of whether `shuffle` is true, a 67-row dataset should yield 5 batches when the batch size is 16.

## Actual results

4 batches
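
The difference between 4 and 5 batches comes down to whether the incomplete final batch is kept. The snippet below is only a minimal sketch of that arithmetic in plain Python; `count_batches` is a hypothetical helper written for illustration and is not part of `datasets` or TensorFlow. Its `drop_remainder` argument is assumed to mirror the `drop_remainder` option of `to_tf_dataset()` mentioned in the replies.

```python
import math


def count_batches(num_rows: int, batch_size: int, drop_remainder: bool) -> int:
    """Hypothetical helper: number of batches for a dataset of num_rows rows."""
    if drop_remainder:
        # Only full batches are kept; the leftover rows are discarded.
        return num_rows // batch_size
    # The leftover rows form one extra, smaller batch.
    return math.ceil(num_rows / batch_size)


# 67 rows with batch size 16: 4 full batches (64 rows) plus 3 leftover rows.
print(count_batches(67, 16, drop_remainder=True))   # 4
print(count_batches(67, 16, drop_remainder=False))  # 5
```

With 67 rows and a batch size of 16, dropping the remainder leaves 4 batches, which matches the actual result reported here, while keeping it gives the expected 5.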

## Environment info


- `datasets` version: 1.18.3

- Platform: Linux-5.4.144+-x86_64-with-Ubuntu-18.04-bionic

- Python version: 3.7.12

- PyArrow version: 6.0.1

|

{

"url": "https://api.github.com/repos/huggingface/datasets/issues/3831/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

}

|

https://api.github.com/repos/huggingface/datasets/issues/3831/timeline

| null |

completed

|

https://api.github.com/repos/huggingface/datasets/issues/3830

|

https://api.github.com/repos/huggingface/datasets

|

https://api.github.com/repos/huggingface/datasets/issues/3830/labels{/name}

|

https://api.github.com/repos/huggingface/datasets/issues/3830/comments

|

https://api.github.com/repos/huggingface/datasets/issues/3830/events

|

https://github.com/huggingface/datasets/issues/3830

| 1,160,181,404 |

I_kwDODunzps5FJvac

| 3,830 |

Got error when load cnn_dailymail dataset

|

{

"login": "wgong0510",

"id": 78331051,

"node_id": "MDQ6VXNlcjc4MzMxMDUx",

"avatar_url": "https://avatars.githubusercontent.com/u/78331051?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/wgong0510",

"html_url": "https://github.com/wgong0510",

"followers_url": "https://api.github.com/users/wgong0510/followers",

"following_url": "https://api.github.com/users/wgong0510/following{/other_user}",

"gists_url": "https://api.github.com/users/wgong0510/gists{/gist_id}",

"starred_url": "https://api.github.com/users/wgong0510/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/wgong0510/subscriptions",

"organizations_url": "https://api.github.com/users/wgong0510/orgs",

"repos_url": "https://api.github.com/users/wgong0510/repos",

"events_url": "https://api.github.com/users/wgong0510/events{/privacy}",

"received_events_url": "https://api.github.com/users/wgong0510/received_events",

"type": "User",

"site_admin": false

}

|

[

{

"id": 1935892865,

"node_id": "MDU6TGFiZWwxOTM1ODkyODY1",

"url": "https://api.github.com/repos/huggingface/datasets/labels/duplicate",

"name": "duplicate",

"color": "cfd3d7",

"default": true,

"description": "This issue or pull request already exists"

}

] |

closed

| false | null |

[] | null |

[