| Column | Type | Stats |
|---|---|---|
| url | string | length 58 to 61 |
| repository_url | string | 1 distinct value |
| labels_url | string | length 72 to 75 |
| comments_url | string | length 67 to 70 |
| events_url | string | length 65 to 68 |
| html_url | string | length 48 to 51 |
| id | int64 | 600M to 2.19B |
| node_id | string | length 18 to 24 |
| number | int64 | 2 to 6.73k |
| title | string | length 1 to 290 |
| user | dict | |
| labels | list | length 0 to 4 |
| state | string | 2 distinct values |
| locked | bool | 1 distinct value |
| assignee | dict | |
| assignees | list | length 0 to 4 |
| milestone | dict | |
| comments | list | length 0 to 30 |
| created_at | timestamp[s] | |
| updated_at | timestamp[s] | |
| closed_at | timestamp[s] | |
| author_association | string | 3 distinct values |
| active_lock_reason | null | |
| draft | null | |
| pull_request | null | |
| body | string (nullable) | length 0 to 228k |
| reactions | dict | |
| timeline_url | string | length 67 to 70 |
| performed_via_github_app | null | |
| state_reason | string | 3 distinct values |

https://api.github.com/repos/huggingface/datasets/issues/4118

|

https://api.github.com/repos/huggingface/datasets

|

https://api.github.com/repos/huggingface/datasets/issues/4118/labels{/name}

|

https://api.github.com/repos/huggingface/datasets/issues/4118/comments

|

https://api.github.com/repos/huggingface/datasets/issues/4118/events

|

https://github.com/huggingface/datasets/issues/4118

| 1,195,638,944 |

I_kwDODunzps5HRACg

| 4,118 |

Failing CI tests on Windows

|

{

"login": "albertvillanova",

"id": 8515462,

"node_id": "MDQ6VXNlcjg1MTU0NjI=",

"avatar_url": "https://avatars.githubusercontent.com/u/8515462?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/albertvillanova",

"html_url": "https://github.com/albertvillanova",

"followers_url": "https://api.github.com/users/albertvillanova/followers",

"following_url": "https://api.github.com/users/albertvillanova/following{/other_user}",

"gists_url": "https://api.github.com/users/albertvillanova/gists{/gist_id}",

"starred_url": "https://api.github.com/users/albertvillanova/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/albertvillanova/subscriptions",

"organizations_url": "https://api.github.com/users/albertvillanova/orgs",

"repos_url": "https://api.github.com/users/albertvillanova/repos",

"events_url": "https://api.github.com/users/albertvillanova/events{/privacy}",

"received_events_url": "https://api.github.com/users/albertvillanova/received_events",

"type": "User",

"site_admin": false

}

|

[

{

"id": 1935892857,

"node_id": "MDU6TGFiZWwxOTM1ODkyODU3",

"url": "https://api.github.com/repos/huggingface/datasets/labels/bug",

"name": "bug",

"color": "d73a4a",

"default": true,

"description": "Something isn't working"

}

] |

closed

| false |

{

"login": "albertvillanova",

"id": 8515462,

"node_id": "MDQ6VXNlcjg1MTU0NjI=",

"avatar_url": "https://avatars.githubusercontent.com/u/8515462?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/albertvillanova",

"html_url": "https://github.com/albertvillanova",

"followers_url": "https://api.github.com/users/albertvillanova/followers",

"following_url": "https://api.github.com/users/albertvillanova/following{/other_user}",

"gists_url": "https://api.github.com/users/albertvillanova/gists{/gist_id}",

"starred_url": "https://api.github.com/users/albertvillanova/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/albertvillanova/subscriptions",

"organizations_url": "https://api.github.com/users/albertvillanova/orgs",

"repos_url": "https://api.github.com/users/albertvillanova/repos",

"events_url": "https://api.github.com/users/albertvillanova/events{/privacy}",

"received_events_url": "https://api.github.com/users/albertvillanova/received_events",

"type": "User",

"site_admin": false

}

|

[

{

"login": "albertvillanova",

"id": 8515462,

"node_id": "MDQ6VXNlcjg1MTU0NjI=",

"avatar_url": "https://avatars.githubusercontent.com/u/8515462?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/albertvillanova",

"html_url": "https://github.com/albertvillanova",

"followers_url": "https://api.github.com/users/albertvillanova/followers",

"following_url": "https://api.github.com/users/albertvillanova/following{/other_user}",

"gists_url": "https://api.github.com/users/albertvillanova/gists{/gist_id}",

"starred_url": "https://api.github.com/users/albertvillanova/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/albertvillanova/subscriptions",

"organizations_url": "https://api.github.com/users/albertvillanova/orgs",

"repos_url": "https://api.github.com/users/albertvillanova/repos",

"events_url": "https://api.github.com/users/albertvillanova/events{/privacy}",

"received_events_url": "https://api.github.com/users/albertvillanova/received_events",

"type": "User",

"site_admin": false

}

] | null |

[] | 2022-04-07T07:36:25 | 2022-04-07T07:57:13 | 2022-04-07T07:57:13 |

MEMBER

| null | null | null |

## Describe the bug

Our CI Windows tests are failing from yesterday: https://app.circleci.com/pipelines/github/huggingface/datasets/11092/workflows/9cfdb1dd-0fec-4fe0-8122-5f533192ebdc/jobs/67414

|

{

"url": "https://api.github.com/repos/huggingface/datasets/issues/4118/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

}

|

https://api.github.com/repos/huggingface/datasets/issues/4118/timeline

| null |

completed

|

https://api.github.com/repos/huggingface/datasets/issues/4117

|

https://api.github.com/repos/huggingface/datasets

|

https://api.github.com/repos/huggingface/datasets/issues/4117/labels{/name}

|

https://api.github.com/repos/huggingface/datasets/issues/4117/comments

|

https://api.github.com/repos/huggingface/datasets/issues/4117/events

|

https://github.com/huggingface/datasets/issues/4117

| 1,195,552,406 |

I_kwDODunzps5HQq6W

| 4,117 |

AttributeError: module 'huggingface_hub' has no attribute 'hf_api'

|

{

"login": "arymbe",

"id": 4567991,

"node_id": "MDQ6VXNlcjQ1Njc5OTE=",

"avatar_url": "https://avatars.githubusercontent.com/u/4567991?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/arymbe",

"html_url": "https://github.com/arymbe",

"followers_url": "https://api.github.com/users/arymbe/followers",

"following_url": "https://api.github.com/users/arymbe/following{/other_user}",

"gists_url": "https://api.github.com/users/arymbe/gists{/gist_id}",

"starred_url": "https://api.github.com/users/arymbe/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/arymbe/subscriptions",

"organizations_url": "https://api.github.com/users/arymbe/orgs",

"repos_url": "https://api.github.com/users/arymbe/repos",

"events_url": "https://api.github.com/users/arymbe/events{/privacy}",

"received_events_url": "https://api.github.com/users/arymbe/received_events",

"type": "User",

"site_admin": false

}

|

[

{

"id": 1935892857,

"node_id": "MDU6TGFiZWwxOTM1ODkyODU3",

"url": "https://api.github.com/repos/huggingface/datasets/labels/bug",

"name": "bug",

"color": "d73a4a",

"default": true,

"description": "Something isn't working"

}

] |

closed

| false |

{

"login": "albertvillanova",

"id": 8515462,

"node_id": "MDQ6VXNlcjg1MTU0NjI=",

"avatar_url": "https://avatars.githubusercontent.com/u/8515462?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/albertvillanova",

"html_url": "https://github.com/albertvillanova",

"followers_url": "https://api.github.com/users/albertvillanova/followers",

"following_url": "https://api.github.com/users/albertvillanova/following{/other_user}",

"gists_url": "https://api.github.com/users/albertvillanova/gists{/gist_id}",

"starred_url": "https://api.github.com/users/albertvillanova/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/albertvillanova/subscriptions",

"organizations_url": "https://api.github.com/users/albertvillanova/orgs",

"repos_url": "https://api.github.com/users/albertvillanova/repos",

"events_url": "https://api.github.com/users/albertvillanova/events{/privacy}",

"received_events_url": "https://api.github.com/users/albertvillanova/received_events",

"type": "User",

"site_admin": false

}

|

[

{

"login": "albertvillanova",

"id": 8515462,

"node_id": "MDQ6VXNlcjg1MTU0NjI=",

"avatar_url": "https://avatars.githubusercontent.com/u/8515462?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/albertvillanova",

"html_url": "https://github.com/albertvillanova",

"followers_url": "https://api.github.com/users/albertvillanova/followers",

"following_url": "https://api.github.com/users/albertvillanova/following{/other_user}",

"gists_url": "https://api.github.com/users/albertvillanova/gists{/gist_id}",

"starred_url": "https://api.github.com/users/albertvillanova/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/albertvillanova/subscriptions",

"organizations_url": "https://api.github.com/users/albertvillanova/orgs",

"repos_url": "https://api.github.com/users/albertvillanova/repos",

"events_url": "https://api.github.com/users/albertvillanova/events{/privacy}",

"received_events_url": "https://api.github.com/users/albertvillanova/received_events",

"type": "User",

"site_admin": false

}

] | null |

[

"Hi @arymbe, thanks for reporting.\r\n\r\nUnfortunately, I'm not able to reproduce your problem.\r\n\r\nCould you please write the complete stack trace? That way we will be able to see which package originates the exception.",

"Hello, thank you for your fast reply. This is the complete error that I got:\r\n\r\n---------------------------------------------------------------------------\r\n\r\nAttributeError Traceback (most recent call last)\r\n\r\nInput In [27], in <module>\r\n----> 1 from datasets import load_dataset\r\n\r\nvenv/lib/python3.8/site-packages/datasets/__init__.py:39, in <module>\r\n 37 from .arrow_dataset import Dataset, concatenate_datasets\r\n 38 from .arrow_reader import ReadInstruction\r\n---> 39 from .builder import ArrowBasedBuilder, BeamBasedBuilder, BuilderConfig, DatasetBuilder, GeneratorBasedBuilder\r\n 40 from .combine import interleave_datasets\r\n 41 from .dataset_dict import DatasetDict, IterableDatasetDict\r\n\r\nvenv/lib/python3.8/site-packages/datasets/builder.py:40, in <module>\r\n 32 from .arrow_reader import (\r\n 33 HF_GCP_BASE_URL,\r\n 34 ArrowReader,\r\n (...)\r\n 37 ReadInstruction,\r\n 38 )\r\n 39 from .arrow_writer import ArrowWriter, BeamWriter\r\n---> 40 from .data_files import DataFilesDict, sanitize_patterns\r\n 41 from .dataset_dict import DatasetDict, IterableDatasetDict\r\n 42 from .features import Features\r\n\r\nvenv/lib/python3.8/site-packages/datasets/data_files.py:297, in <module>\r\n 292 except FileNotFoundError:\r\n 293 raise FileNotFoundError(f\"The directory at {base_path} doesn't contain any data file\") from None\r\n 296 def _resolve_single_pattern_in_dataset_repository(\r\n--> 297 dataset_info: huggingface_hub.hf_api.DatasetInfo,\r\n 298 pattern: str,\r\n 299 allowed_extensions: Optional[list] = None,\r\n 300 ) -> List[PurePath]:\r\n 301 data_files_ignore = FILES_TO_IGNORE\r\n 302 fs = HfFileSystem(repo_info=dataset_info)\r\n\r\nAttributeError: module 'huggingface_hub' has no attribute 'hf_api'",

"This is weird... The `huggingface_hub` package has had a submodule called `hf_api` for a long time.\r\n\r\nMaybe there is a problem with your installed `huggingface_hub`...\r\n\r\nCould you please try to update it?\r\n```shell\r\npip install -U huggingface_hub\r\n```",

"Yep, I've updated it several times. I've also tried numerous combinations of datasets and huggingface_hub versions. However, I think you're right that there is a problem with my huggingface_hub installation. I'll try another way to find a solution and will post an update when I find it. Thank you :)",

"I'm sorry I can't reproduce your problem.\r\n\r\nMaybe you could try to create a new Python virtual environment and install all dependencies there from scratch. You can use either:\r\n- Python venv: https://docs.python.org/3/library/venv.html\r\n- or conda venv (if you are using conda): https://docs.conda.io/projects/conda/en/latest/user-guide/tasks/manage-environments.html",

"Facing the same issue.\r\n\r\nResponse from `pip show datasets`\r\n```\r\nName: datasets\r\nVersion: 1.15.1\r\nSummary: HuggingFace community-driven open-source library of datasets\r\nHome-page: https://github.com/huggingface/datasets\r\nAuthor: HuggingFace Inc.\r\nAuthor-email: [email protected]\r\nLicense: Apache 2.0\r\nLocation: /usr/local/lib/python3.8/dist-packages\r\nRequires: aiohttp, dill, fsspec, huggingface-hub, multiprocess, numpy, packaging, pandas, pyarrow, requests, tqdm, xxhash\r\nRequired-by: lm-eval\r\n```\r\n\r\nResponse from `pip show huggingface_hub`\r\n\r\n```\r\nName: huggingface-hub\r\nVersion: 0.8.1\r\nSummary: Client library to download and publish models, datasets and other repos on the huggingface.co hub\r\nHome-page: https://github.com/huggingface/huggingface_hub\r\nAuthor: Hugging Face, Inc.\r\nAuthor-email: [email protected]\r\nLicense: Apache\r\nLocation: /usr/local/lib/python3.8/dist-packages\r\nRequires: filelock, packaging, pyyaml, requests, tqdm, typing-extensions\r\nRequired-by: datasets\r\n```\r\n\r\nresponse from `datasets-cli env`\r\n\r\n```\r\nTraceback (most recent call last):\r\n File \"/usr/local/bin/datasets-cli\", line 5, in <module>\r\n from datasets.commands.datasets_cli import main\r\n File \"/usr/local/lib/python3.8/dist-packages/datasets/__init__.py\", line 37, in <module>\r\n from .builder import ArrowBasedBuilder, BeamBasedBuilder, BuilderConfig, DatasetBuilder, GeneratorBasedBuilder\r\n File \"/usr/local/lib/python3.8/dist-packages/datasets/builder.py\", line 44, in <module>\r\n from .data_files import DataFilesDict, _sanitize_patterns\r\n File \"/usr/local/lib/python3.8/dist-packages/datasets/data_files.py\", line 120, in <module>\r\n dataset_info: huggingface_hub.hf_api.DatasetInfo,\r\n File \"/usr/local/lib/python3.8/dist-packages/huggingface_hub/__init__.py\", line 105, in __getattr__\r\n raise AttributeError(f\"No {package_name} attribute {name}\")\r\nAttributeError: No huggingface_hub attribute hf_api\r\n```",

"A workaround: \r\nI changed the lines around line 125 in `huggingface_hub`'s `__init__.py` to something like\r\n```\r\n__getattr__, __dir__, __all__ = _attach(\r\n __name__,\r\n submodules=['hf_api'],\r\n```\r\nand it works (this gives `datasets` direct access to `huggingface_hub.hf_api`).",

"I was getting the same issue. After trying a few versions, the following combination worked for me.\r\ndatasets==2.3.2\r\nhuggingface_hub==0.7.0\r\n\r\nIn another environment, I just installed the latest releases from pip through `pip install -U transformers datasets tokenizers evaluate`, resulting in the following versions. This also worked. Hope it helps someone. \r\n\r\ndatasets-2.3.2 evaluate-0.1.2 huggingface-hub-0.8.1 responses-0.18.0 tokenizers-0.12.1 transformers-4.20.1",

"For layoutlm_v3 finetune\r\ndatasets-2.3.2 evaluate-0.1.2 huggingface-hub-0.8.1 responses-0.18.0 tokenizers-0.12.1 transformers-4.12.5",

"(For layoutlmv3 fine-tuning) In my case, modifying `requirements.txt` as below worked.\r\n\r\n- python = 3.7\r\n\r\n```\r\ndatasets==2.3.2\r\nevaluate==0.1.2\r\nhuggingface-hub==0.8.1\r\nresponse==0.5.0\r\ntokenizers==0.10.1\r\ntransformers==4.12.5\r\nseqeval==1.2.2\r\ndeepspeed==0.5.7\r\ntensorboard==2.7.0\r\nseqeval==1.2.2\r\nsentencepiece\r\ntimm==0.4.12\r\nPillow\r\neinops\r\ntextdistance\r\nshapely\r\n```",

"> For layoutlm_v3 finetune datasets-2.3.2 evaluate-0.1.2 huggingface-hub-0.8.1 responses-0.18.0 tokenizers-0.12.1 transformers-4.12.5\r\n\r\nGOOD!! Thanks!",

"I encountered the same issue; in my case the problem was that the `scipy` library was missing.\r\nTo solve it, open your terminal or command prompt and run the following command to install `scipy`: `pip install scipy`.\r\nThen restart the kernel and rerun the cell, and it will work.\r\n"

] | 2022-04-07T05:52:36 | 2024-02-15T14:11:35 | 2022-04-19T15:36:35 |

NONE

| null | null | null |

## Describe the bug

Could you please help me? I get the following error:

AttributeError: module 'huggingface_hub' has no attribute 'hf_api'

## Steps to reproduce the bug

The error occurs when importing `datasets`:

```python
# Sample code to reproduce the bug
from datasets import list_datasets, load_dataset, list_metrics, load_metric
```

## Environment info

<!-- You can run the command `datasets-cli env` and copy-and-paste its output below. -->

- `datasets` version: 2.0.0

- Platform: macOS-12.3-x86_64-i386-64bit

- Python version: 3.8.9

- PyArrow version: 7.0.0

- Pandas version: 1.3.5

- Huggingface-hub: 0.5.0

- Transformers: 4.18.0

Thank you in advance.
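One plausible mechanism behind this error, consistent with the traceback posted in the comments (where `huggingface_hub/__init__.py` raises from a module-level `__getattr__`): `datasets` reads `huggingface_hub.hf_api` as an attribute of the package, and a package only exposes a submodule as an attribute once that submodule has actually been imported. The sketch below reproduces that general Python behavior with a throwaway package. `demo_pkg`, `sub` and `submodule_visibility_demo` are hypothetical names and are not part of either library.

```python
import importlib
import sys
import tempfile
from pathlib import Path
from typing import Tuple


def submodule_visibility_demo() -> Tuple[bool, bool]:
    """Return (visible_before_import, visible_after_import) for `demo_pkg.sub`.

    `demo_pkg` is a hypothetical throwaway package standing in for a package
    whose `__init__.py` does not import one of its submodules eagerly.
    """
    with tempfile.TemporaryDirectory() as tmp:
        pkg_dir = Path(tmp) / "demo_pkg"
        pkg_dir.mkdir()
        (pkg_dir / "__init__.py").write_text("")  # does NOT import `sub`
        (pkg_dir / "sub.py").write_text("class DatasetInfo:\n    pass\n")

        sys.path.insert(0, tmp)
        importlib.invalidate_caches()
        try:
            pkg = importlib.import_module("demo_pkg")
            # Attribute access fails here: the submodule was never imported.
            before = hasattr(pkg, "sub")
            # Importing the submodule binds it as an attribute of the package.
            importlib.import_module("demo_pkg.sub")
            after = hasattr(pkg, "sub")
        finally:
            sys.path.remove(tmp)
            sys.modules.pop("demo_pkg.sub", None)
            sys.modules.pop("demo_pkg", None)
    return before, after


if __name__ == "__main__":
    print(submodule_visibility_demo())  # (False, True)
```

If this is the cause, running an explicit `import huggingface_hub.hf_api` before `import datasets` might avoid the error. That is an untested assumption. The approach reported to work in the comments is upgrading or pinning compatible versions.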

|

{

"url": "https://api.github.com/repos/huggingface/datasets/issues/4117/reactions",

"total_count": 3,

"+1": 3,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

}

|

https://api.github.com/repos/huggingface/datasets/issues/4117/timeline

| null |

completed

|

https://api.github.com/repos/huggingface/datasets/issues/4115

|

https://api.github.com/repos/huggingface/datasets

|

https://api.github.com/repos/huggingface/datasets/issues/4115/labels{/name}

|

https://api.github.com/repos/huggingface/datasets/issues/4115/comments

|

https://api.github.com/repos/huggingface/datasets/issues/4115/events

|

https://github.com/huggingface/datasets/issues/4115

| 1,194,907,555 |

I_kwDODunzps5HONej

| 4,115 |

ImageFolder add option to ignore some folders like '.ipynb_checkpoints'

|

{

"login": "cceyda",

"id": 15624271,

"node_id": "MDQ6VXNlcjE1NjI0Mjcx",

"avatar_url": "https://avatars.githubusercontent.com/u/15624271?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/cceyda",

"html_url": "https://github.com/cceyda",

"followers_url": "https://api.github.com/users/cceyda/followers",

"following_url": "https://api.github.com/users/cceyda/following{/other_user}",

"gists_url": "https://api.github.com/users/cceyda/gists{/gist_id}",

"starred_url": "https://api.github.com/users/cceyda/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/cceyda/subscriptions",

"organizations_url": "https://api.github.com/users/cceyda/orgs",

"repos_url": "https://api.github.com/users/cceyda/repos",

"events_url": "https://api.github.com/users/cceyda/events{/privacy}",

"received_events_url": "https://api.github.com/users/cceyda/received_events",

"type": "User",

"site_admin": false

}

|

[

{

"id": 1935892871,

"node_id": "MDU6TGFiZWwxOTM1ODkyODcx",

"url": "https://api.github.com/repos/huggingface/datasets/labels/enhancement",

"name": "enhancement",

"color": "a2eeef",

"default": true,

"description": "New feature or request"

}

] |

closed

| false | null |

[] | null |

[

"Maybe it would be nice to ignore hidden dirs like this one (ones starting with `.`) by default. \r\n\r\nCC @mariosasko ",

"Maybe we can add an `ignore_hidden_files` flag to the builder configs of our packaged loaders (to be consistent across all of them), wdyt @lhoestq @albertvillanova? ",

"I think they should actually always ignore them! Not sure if adding a flag would be helpful",

"@lhoestq But what if the user explicitly requests those files via regex?\r\n\r\n`glob.glob` ignores hidden files (files starting with \".\") by default unless they are explicitly requested, but fsspec's `glob` doesn't follow this behavior, which is probably a bug, so maybe we can raise an issue or open a PR in their repo?",

"> @lhoestq But what if the user explicitly requests those files via regex?\r\n\r\nUsually hidden files are meant to be ignored. If they are data files, they must be placed outside a hidden directory in the first place, right? I think it's more sensible to explain this than to add a flag.\r\n\r\n> glob.glob ignores hidden files (files starting with \".\") by default unless they are explicitly requested, but fsspec's glob doesn't follow this behavior, which is probably a bug, so maybe we can raise an issue or open a PR in their repo?\r\n\r\nAfter globbing using `fsspec`, we already ignore files that start with a `.` in `_resolve_single_pattern_locally` and `_resolve_single_pattern_in_dataset_repository`, I guess we can just account for parent directories as well?\r\n\r\nWe could open an issue on `fsspec` but I think they won't change this since it's an important breaking change for them."

] | 2022-04-06T17:29:43 | 2022-06-01T13:04:16 | 2022-06-01T13:04:16 |

CONTRIBUTOR

| null | null | null |

**Is your feature request related to a problem? Please describe.**

I sometimes like to peek at the dataset images from JupyterLab, so an `.ipynb_checkpoints` folder appears where my dataset is and (I just realized) this leads to images being accidentally added twice. I think this is easy to miss, especially if the dataset is very large.

**Describe the solution you'd like**

Maybe add an `ignore` option, or something in `.gitignore` style:

`dataset = load_dataset("imagefolder", data_dir="./data/original", ignore="regex?")`

**Describe alternatives you've considered**

I could filter the files out manually.
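The comments on this issue suggest ignoring files whose names start with `.` and extending that check to parent directories. Here is a minimal sketch of that filtering rule with `pathlib`. It assumes the rule is "skip any path that has a component starting with `.`, relative to the data directory". `has_hidden_component` and `list_visible_files` are hypothetical helpers and are not part of `datasets`.

```python
from pathlib import Path, PurePath
from typing import List


def has_hidden_component(path: PurePath, base: PurePath) -> bool:
    """True if any part of `path`, relative to `base`, starts with '.'."""
    return any(part.startswith(".") for part in path.relative_to(base).parts)


def list_visible_files(data_dir: str) -> List[str]:
    """Recursively list files under `data_dir` as sorted POSIX-style relative
    paths, skipping hidden files and everything inside hidden directories
    such as `.ipynb_checkpoints`."""
    base = Path(data_dir)
    return sorted(
        p.relative_to(base).as_posix()
        for p in base.rglob("*")
        if p.is_file() and not has_hidden_component(p, base)
    )
```

For example, `list_visible_files("./data/original")` would return only the non-hidden image paths. The check is done relative to `data_dir`, so a data directory that itself sits under a dot-folder is not excluded wholesale.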

|

{

"url": "https://api.github.com/repos/huggingface/datasets/issues/4115/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

}

|

https://api.github.com/repos/huggingface/datasets/issues/4115/timeline

| null |

completed

|

https://api.github.com/repos/huggingface/datasets/issues/4114

|

https://api.github.com/repos/huggingface/datasets

|

https://api.github.com/repos/huggingface/datasets/issues/4114/labels{/name}

|

https://api.github.com/repos/huggingface/datasets/issues/4114/comments

|

https://api.github.com/repos/huggingface/datasets/issues/4114/events

|

https://github.com/huggingface/datasets/issues/4114

| 1,194,855,345 |

I_kwDODunzps5HOAux

| 4,114 |

Allow downloading just some columns of a dataset

|

{

"login": "osanseviero",

"id": 7246357,

"node_id": "MDQ6VXNlcjcyNDYzNTc=",

"avatar_url": "https://avatars.githubusercontent.com/u/7246357?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/osanseviero",

"html_url": "https://github.com/osanseviero",

"followers_url": "https://api.github.com/users/osanseviero/followers",

"following_url": "https://api.github.com/users/osanseviero/following{/other_user}",

"gists_url": "https://api.github.com/users/osanseviero/gists{/gist_id}",

"starred_url": "https://api.github.com/users/osanseviero/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/osanseviero/subscriptions",

"organizations_url": "https://api.github.com/users/osanseviero/orgs",

"repos_url": "https://api.github.com/users/osanseviero/repos",

"events_url": "https://api.github.com/users/osanseviero/events{/privacy}",

"received_events_url": "https://api.github.com/users/osanseviero/received_events",

"type": "User",

"site_admin": false

}

|

[

{

"id": 1935892871,

"node_id": "MDU6TGFiZWwxOTM1ODkyODcx",

"url": "https://api.github.com/repos/huggingface/datasets/labels/enhancement",

"name": "enhancement",

"color": "a2eeef",

"default": true,

"description": "New feature or request"

}

] |

open

| false | null |

[] | null |

[

"In the general case you can’t always reduce the quantity of data to download, since you can’t parse CSV or JSON data without downloading the whole files, right? ^^ However, we could explore this case by case, I guess",

"Actually for csv pandas has `usecols` which allows loading a subset of columns in a more efficient way afaik, but yes, you're right this might be more complex than I thought.",

"Bumping the visibility of this :) Is there a recommended way of doing this?",

"Passing `columns=[...]` to `load_dataset()` does work if the dataset is in Parquet format, but for other formats it's either not possible or not implemented"

] | 2022-04-06T16:38:46 | 2024-02-21T11:29:35 | null |

MEMBER

| null | null | null |

**Is your feature request related to a problem? Please describe.**

Some people are interested in doing label analysis of a CV dataset without downloading all the images. Downloading the whole dataset does not always make sense for this kind of use case.

**Describe the solution you'd like**

Be able to download just some columns of a dataset, for example:

```python
from datasets import load_dataset

load_dataset("huggan/wikiart", columns=["artist", "genre"])
```

Although this might make things a bit complicated in terms of local caching of datasets.
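The first comment points out a limitation of row-oriented formats: selecting columns from a CSV file still means reading every byte of it. The stdlib-only sketch below shows this. `project_columns` is a hypothetical helper, not a `datasets` API. The column filter shrinks the in-memory result, but `csv.DictReader` still has to consume every line. Columnar formats such as Parquet avoid this cost, which is consistent with the last comment's note that `columns=[...]` works in `load_dataset()` for Parquet data. For instance, `pyarrow.parquet.read_table(path, columns=[...])` reads only the requested columns.

```python
import csv
import io
from typing import Dict, Iterable, List


def project_columns(lines: Iterable[str], columns: List[str]) -> List[Dict[str, str]]:
    """Return each CSV row restricted to `columns`.

    Every line is still read and parsed; only the returned rows are smaller.
    """
    reader = csv.DictReader(lines)
    header = reader.fieldnames or []  # accessing this consumes the header line
    missing = [c for c in columns if c not in header]
    if missing:
        raise KeyError(f"unknown columns: {missing}")
    return [{c: row[c] for c in columns} for row in reader]


if __name__ == "__main__":
    # Toy input standing in for a CSV with an image column plus label columns.
    data = io.StringIO(
        "image,artist,genre\n"
        "a.jpg,artist_a,genre_x\n"
        "b.jpg,artist_b,genre_y\n"
    )
    print(project_columns(data, ["artist", "genre"]))
```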

|

{

"url": "https://api.github.com/repos/huggingface/datasets/issues/4114/reactions",

"total_count": 3,

"+1": 3,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

}

|

https://api.github.com/repos/huggingface/datasets/issues/4114/timeline

| null | null |

https://api.github.com/repos/huggingface/datasets/issues/4113

|

https://api.github.com/repos/huggingface/datasets

|

https://api.github.com/repos/huggingface/datasets/issues/4113/labels{/name}

|

https://api.github.com/repos/huggingface/datasets/issues/4113/comments

|

https://api.github.com/repos/huggingface/datasets/issues/4113/events

|

https://github.com/huggingface/datasets/issues/4113

| 1,194,843,532 |

I_kwDODunzps5HN92M

| 4,113 |

Multiprocessing with FileLock fails in python 3.9

|

{

"login": "lhoestq",

"id": 42851186,

"node_id": "MDQ6VXNlcjQyODUxMTg2",

"avatar_url": "https://avatars.githubusercontent.com/u/42851186?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/lhoestq",

"html_url": "https://github.com/lhoestq",

"followers_url": "https://api.github.com/users/lhoestq/followers",

"following_url": "https://api.github.com/users/lhoestq/following{/other_user}",

"gists_url": "https://api.github.com/users/lhoestq/gists{/gist_id}",

"starred_url": "https://api.github.com/users/lhoestq/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/lhoestq/subscriptions",

"organizations_url": "https://api.github.com/users/lhoestq/orgs",

"repos_url": "https://api.github.com/users/lhoestq/repos",

"events_url": "https://api.github.com/users/lhoestq/events{/privacy}",

"received_events_url": "https://api.github.com/users/lhoestq/received_events",

"type": "User",

"site_admin": false

}

|

[

{

"id": 1935892857,

"node_id": "MDU6TGFiZWwxOTM1ODkyODU3",

"url": "https://api.github.com/repos/huggingface/datasets/labels/bug",

"name": "bug",

"color": "d73a4a",

"default": true,

"description": "Something isn't working"

}

] |

closed

| false | null |

[] | null |

[

"Closing this one because it must be used this way actually:\r\n```python\r\ndef main():\r\n with FileLock(\"tmp.lock\"):\r\n with Pool(2) as pool:\r\n pool.map(run, range(2))\r\n\r\nif __name__ == \"__main__\":\r\n main()\r\n```"

] | 2022-04-06T16:27:09 | 2022-11-28T11:49:14 | 2022-11-28T11:49:14 |

MEMBER

| null | null | null |

On Python 3.9, this code hangs:

```python
from multiprocessing import Pool

from filelock import FileLock


def run(i):
    print(f"got the lock in multi process [{i}]")


with FileLock("tmp.lock"):
    with Pool(2) as pool:
        pool.map(run, range(2))
```

This is because, for some reason, the subprocesses try to acquire the lock held by the main process. This does not happen in older versions of Python.

This can cause many issues in Python 3.9. In particular, we use multiprocessing to fetch data files when you load a dataset (when there are more than 16 data files). Therefore `imagefolder` hangs, and I expect any dataset that needs to download more than 16 files to hang as well.

Let's see if we can fix this and have a CI that runs on 3.9.

cc @mariosasko @julien-c
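The fix in the closing comment keeps the lock and the pool inside `main()`, behind an `if __name__ == "__main__":` guard. A likely explanation is the `spawn` start method, which is the default on Windows and, since Python 3.8, on macOS. This is an assumption and is not confirmed in the thread. Under `spawn`, each child re-imports the main module. Any unguarded module-level code, including `with FileLock(...)`, therefore runs again in the child and blocks on the lock the parent already holds.

The stdlib-only sketch below shows the safe layout. It forces `spawn` so it behaves the same on every platform. `filelock` is left out so the sketch runs without third-party packages; the commented line marks where the lock would go.

```python
import multiprocessing as mp
import os
from typing import List


def run(i: int) -> str:
    # Defined at module level so spawned children can import it by name.
    return f"worker {i} ran in process {os.getpid()}"


def main() -> List[str]:
    # All side effects (taking a lock, starting a pool) live inside main(),
    # so a child that re-imports this module does not repeat them.
    # with FileLock("tmp.lock"):  # third-party `filelock`, omitted in this sketch
    ctx = mp.get_context("spawn")
    with ctx.Pool(2) as pool:
        return pool.map(run, range(2))


if __name__ == "__main__":
    for line in main():
        print(line)
```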

|

{

"url": "https://api.github.com/repos/huggingface/datasets/issues/4113/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

}

|

https://api.github.com/repos/huggingface/datasets/issues/4113/timeline

| null |

completed

|

https://api.github.com/repos/huggingface/datasets/issues/4112

|

https://api.github.com/repos/huggingface/datasets

|

https://api.github.com/repos/huggingface/datasets/issues/4112/labels{/name}

|

https://api.github.com/repos/huggingface/datasets/issues/4112/comments

|

https://api.github.com/repos/huggingface/datasets/issues/4112/events

|

https://github.com/huggingface/datasets/issues/4112

| 1,194,752,765 |

I_kwDODunzps5HNnr9

| 4,112 |

ImageFolder with Grayscale images dataset

|

{

"login": "chainyo",

"id": 50595514,

"node_id": "MDQ6VXNlcjUwNTk1NTE0",

"avatar_url": "https://avatars.githubusercontent.com/u/50595514?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/chainyo",

"html_url": "https://github.com/chainyo",

"followers_url": "https://api.github.com/users/chainyo/followers",

"following_url": "https://api.github.com/users/chainyo/following{/other_user}",

"gists_url": "https://api.github.com/users/chainyo/gists{/gist_id}",

"starred_url": "https://api.github.com/users/chainyo/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/chainyo/subscriptions",

"organizations_url": "https://api.github.com/users/chainyo/orgs",

"repos_url": "https://api.github.com/users/chainyo/repos",

"events_url": "https://api.github.com/users/chainyo/events{/privacy}",

"received_events_url": "https://api.github.com/users/chainyo/received_events",

"type": "User",

"site_admin": false

}

|

[] |

closed

| false | null |

[] | null |

[

"Hi! Replacing:\r\n```python\r\ntransformed_dataset = dataset.with_transform(transforms)\r\ntransformed_dataset.set_format(type=\"torch\", device=\"cuda\")\r\n```\r\n\r\nwith:\r\n```python\r\ndef transform_func(examples):\r\n examples[\"image\"] = [transforms(img).to(\"cuda\") for img in examples[\"image\"]]\r\n return examples\r\n\r\ntransformed_dataset = dataset.with_transform(transform_func)\r\n```\r\nshould fix the issue. `datasets` doesn't support chaining of transforms (you can think of `set_format`/`with_format` as a predefined transform func for `set_transform`/`with_transform`), so the last transform (in your case, `set_format`) takes precedence over the previous ones (in your case, `with_transform`). And the PyTorch formatter is not supported by the Image feature, hence the error (adding support for that is on our short-term roadmap).",

"Ok, thanks a lot for the code snippet!\r\n\r\nI love how easy `datasets` is to use, but pre-processing all the images (400,000 in my case) took a really long time before I could train anything. torchvision's `ImageFolder` is faster in my case but forces me to have the images on my local machine.\r\n\r\nI don't know how to speed up the process without switching to `ImageFolder` :smile: ",

"You can pass `ignore_verifications=True` in `load_dataset` to skip checksum verification, which takes a lot of time if the number of files is large. We will consider making this the default behavior."

] | 2022-04-06T15:10:00 | 2022-04-22T10:21:53 | 2022-04-22T10:21:52 |

NONE

| null | null | null |

Hi, I'm facing a problem with a grayscale image dataset that I uploaded [here](https://huggingface.co/datasets/ChainYo/rvl-cdip) (RVL-CDIP).

I'm getting an error when I use the images to train a model with a PyTorch DataLoader. Here is the full traceback:

```bash
AttributeError: Caught AttributeError in DataLoader worker process 0.
Original Traceback (most recent call last):
  File "/home/chainyo/miniconda3/envs/gan-bird/lib/python3.8/site-packages/torch/utils/data/_utils/worker.py", line 287, in _worker_loop
    data = fetcher.fetch(index)
  File "/home/chainyo/miniconda3/envs/gan-bird/lib/python3.8/site-packages/torch/utils/data/_utils/fetch.py", line 49, in fetch
    data = [self.dataset[idx] for idx in possibly_batched_index]
  File "/home/chainyo/miniconda3/envs/gan-bird/lib/python3.8/site-packages/torch/utils/data/_utils/fetch.py", line 49, in <listcomp>
    data = [self.dataset[idx] for idx in possibly_batched_index]
  File "/home/chainyo/miniconda3/envs/gan-bird/lib/python3.8/site-packages/datasets/arrow_dataset.py", line 1765, in __getitem__
    return self._getitem(
  File "/home/chainyo/miniconda3/envs/gan-bird/lib/python3.8/site-packages/datasets/arrow_dataset.py", line 1750, in _getitem
    formatted_output = format_table(
  File "/home/chainyo/miniconda3/envs/gan-bird/lib/python3.8/site-packages/datasets/formatting/formatting.py", line 532, in format_table
    return formatter(pa_table, query_type=query_type)
  File "/home/chainyo/miniconda3/envs/gan-bird/lib/python3.8/site-packages/datasets/formatting/formatting.py", line 281, in __call__
    return self.format_row(pa_table)
  File "/home/chainyo/miniconda3/envs/gan-bird/lib/python3.8/site-packages/datasets/formatting/torch_formatter.py", line 58, in format_row
    return self.recursive_tensorize(row)
  File "/home/chainyo/miniconda3/envs/gan-bird/lib/python3.8/site-packages/datasets/formatting/torch_formatter.py", line 54, in recursive_tensorize
    return map_nested(self._recursive_tensorize, data_struct, map_list=False)
  File "/home/chainyo/miniconda3/envs/gan-bird/lib/python3.8/site-packages/datasets/utils/py_utils.py", line 314, in map_nested
    mapped = [
  File "/home/chainyo/miniconda3/envs/gan-bird/lib/python3.8/site-packages/datasets/utils/py_utils.py", line 315, in <listcomp>
    _single_map_nested((function, obj, types, None, True, None))
  File "/home/chainyo/miniconda3/envs/gan-bird/lib/python3.8/site-packages/datasets/utils/py_utils.py", line 267, in _single_map_nested
    return {k: _single_map_nested((function, v, types, None, True, None)) for k, v in pbar}
  File "/home/chainyo/miniconda3/envs/gan-bird/lib/python3.8/site-packages/datasets/utils/py_utils.py", line 267, in <dictcomp>
    return {k: _single_map_nested((function, v, types, None, True, None)) for k, v in pbar}
  File "/home/chainyo/miniconda3/envs/gan-bird/lib/python3.8/site-packages/datasets/utils/py_utils.py", line 251, in _single_map_nested
    return function(data_struct)
  File "/home/chainyo/miniconda3/envs/gan-bird/lib/python3.8/site-packages/datasets/formatting/torch_formatter.py", line 51, in _recursive_tensorize
    return self._tensorize(data_struct)
  File "/home/chainyo/miniconda3/envs/gan-bird/lib/python3.8/site-packages/datasets/formatting/torch_formatter.py", line 38, in _tensorize
    if np.issubdtype(value.dtype, np.integer):
AttributeError: 'bytes' object has no attribute 'dtype'
```

I don't understand why the image is still a bytes object even though I applied transformations to it. Here is the code I used to upload the dataset (this part worked well):

```python
from datasets import DatasetDict, load_dataset

train_dataset = load_dataset("imagefolder", data_dir="data/train")
train_dataset = train_dataset["train"]
test_dataset = load_dataset("imagefolder", data_dir="data/test")
test_dataset = test_dataset["train"]
val_dataset = load_dataset("imagefolder", data_dir="data/val")
val_dataset = val_dataset["train"]

dataset = DatasetDict({
    "train": train_dataset,
    "val": val_dataset,
    "test": test_dataset
})

dataset.push_to_hub("ChainYo/rvl-cdip")
```

Now here is the code I am using to get the dataset and prepare it for training:

```python
import torch
from datasets import load_dataset
from torchvision import transforms

img_size = 512
batch_size = 128
normalize = [(0.5), (0.5)]
data_dir = "ChainYo/rvl-cdip"

dataset = load_dataset(data_dir, split="train")

transforms = transforms.Compose([
    transforms.Resize(img_size),
    transforms.CenterCrop(img_size),
    transforms.ToTensor(),
    transforms.Normalize(*normalize)
])

transformed_dataset = dataset.with_transform(transforms)
transformed_dataset.set_format(type="torch", device="cuda")

train_dataloader = torch.utils.data.DataLoader(
    transformed_dataset, batch_size=batch_size, shuffle=True, num_workers=4, pin_memory=True
)
```

But this gives me the error above, and I don't understand why.

Do I need to map a preprocessing function over the dataset first? Something like this:

```python
from datasets import ClassLabel, Features, Image

labels = dataset.features["label"].names
num_labels = dataset.features["label"].num_classes

def preprocess_data(examples):
    images = [ex.convert("RGB") for ex in examples["image"]]
    labels = [ex for ex in examples["label"]]
    return {"images": images, "labels": labels}

features = Features({
    "images": Image(decode=True, id=None),
    "labels": ClassLabel(num_classes=num_labels, names=labels)
})

decoded_dataset = dataset.map(preprocess_data, remove_columns=dataset.column_names, features=features, batched=True, batch_size=100)
```
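`with_transform` calls its function with a batch, that is, a dict mapping each column name to a list of values, so a per-image pipeline such as a torchvision `Compose` has to be applied element by element, as in the `transform_func` suggested in the thread. A minimal, framework-agnostic sketch of that wrapping, assuming the image column is called `"image"`; `make_batch_transform` is a hypothetical helper, not part of `datasets`:

```python
from typing import Any, Callable, Dict, List

Batch = Dict[str, List[Any]]


def make_batch_transform(
    per_item_fn: Callable[[Any], Any], column: str = "image"
) -> Callable[[Batch], Batch]:
    """Wrap a per-item function so it can be passed to `Dataset.with_transform`.

    The returned function receives a batch (a dict of column name -> list of
    values), applies `per_item_fn` to every value in `column`, and leaves the
    other columns (e.g. "label") untouched.
    """

    def batch_transform(batch: Batch) -> Batch:
        out = dict(batch)  # shallow copy so the input dict is not mutated
        out[column] = [per_item_fn(item) for item in batch[column]]
        return out

    return batch_transform


# Hypothetical usage with the torchvision pipeline from the issue (not run here):
# transformed_dataset = dataset.with_transform(make_batch_transform(transforms))
```

Because `datasets` keeps only the most recent transform or format, this wrapper would replace the `set_format(type="torch", ...)` call rather than be combined with it; moving tensors to the GPU can then happen inside `per_item_fn`, as in the thread's snippet, or in the training loop.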

|

{

"url": "https://api.github.com/repos/huggingface/datasets/issues/4112/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

}

|

https://api.github.com/repos/huggingface/datasets/issues/4112/timeline

| null |

completed

|

https://api.github.com/repos/huggingface/datasets/issues/4107

|

https://api.github.com/repos/huggingface/datasets

|

https://api.github.com/repos/huggingface/datasets/issues/4107/labels{/name}

|

https://api.github.com/repos/huggingface/datasets/issues/4107/comments

|

https://api.github.com/repos/huggingface/datasets/issues/4107/events

|

https://github.com/huggingface/datasets/issues/4107

| 1,194,484,885 |

I_kwDODunzps5HMmSV

| 4,107 |

Unable to view the dataset and loading the same dataset throws the error - ArrowInvalid: Exceeded maximum rows

|

{

"login": "Pavithree",

"id": 23344465,

"node_id": "MDQ6VXNlcjIzMzQ0NDY1",

"avatar_url": "https://avatars.githubusercontent.com/u/23344465?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/Pavithree",

"html_url": "https://github.com/Pavithree",

"followers_url": "https://api.github.com/users/Pavithree/followers",

"following_url": "https://api.github.com/users/Pavithree/following{/other_user}",

"gists_url": "https://api.github.com/users/Pavithree/gists{/gist_id}",

"starred_url": "https://api.github.com/users/Pavithree/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/Pavithree/subscriptions",

"organizations_url": "https://api.github.com/users/Pavithree/orgs",

"repos_url": "https://api.github.com/users/Pavithree/repos",

"events_url": "https://api.github.com/users/Pavithree/events{/privacy}",

"received_events_url": "https://api.github.com/users/Pavithree/received_events",

"type": "User",

"site_admin": false

}

|

[

{

"id": 1935892857,

"node_id": "MDU6TGFiZWwxOTM1ODkyODU3",

"url": "https://api.github.com/repos/huggingface/datasets/labels/bug",

"name": "bug",

"color": "d73a4a",

"default": true,

"description": "Something isn't working"

}

] |

closed

| false | null |

[] | null |

[

"Thanks for reporting. I'm looking at it",

" It's not related to the dataset viewer in itself. I can replicate the error with:\r\n\r\n```\r\n>>> import datasets as ds\r\n>>> d = ds.load_dataset('Pavithree/explainLikeImFive')\r\nUsing custom data configuration Pavithree--explainLikeImFive-b68b6d8112cd8a51\r\nDownloading and preparing dataset json/Pavithree--explainLikeImFive to /home/slesage/.cache/huggingface/datasets/json/Pavithree--explainLikeImFive-b68b6d8112cd8a51/0.0.0/ac0ca5f5289a6cf108e706efcf040422dbbfa8e658dee6a819f20d76bb84d26b...\r\nDownloading data: 100%|██████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 305M/305M [00:03<00:00, 98.6MB/s]\r\nDownloading data: 100%|████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 17.9M/17.9M [00:00<00:00, 75.7MB/s]\r\nDownloading data: 100%|████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 11.9M/11.9M [00:00<00:00, 70.6MB/s]\r\nDownloading data files: 100%|█████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 3/3 [00:05<00:00, 1.92s/it]\r\nExtracting data files: 100%|████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 3/3 [00:00<00:00, 1948.42it/s]\r\nFailed to read file 
'/home/slesage/.cache/huggingface/datasets/downloads/5fee9c8819754df277aee6f252e4db6897d785231c21938407b8862ca871d246' with error <class 'pyarrow.lib.ArrowInvalid'>: Exceeded maximum rows\r\nTraceback (most recent call last):\r\n File \"/home/slesage/hf/datasets/src/datasets/packaged_modules/json/json.py\", line 144, in _generate_tables\r\n dataset = json.load(f)\r\n File \"/home/slesage/.pyenv/versions/3.8.11/lib/python3.8/json/__init__.py\", line 293, in load\r\n return loads(fp.read(),\r\n File \"/home/slesage/.pyenv/versions/3.8.11/lib/python3.8/json/__init__.py\", line 357, in loads\r\n return _default_decoder.decode(s)\r\n File \"/home/slesage/.pyenv/versions/3.8.11/lib/python3.8/json/decoder.py\", line 340, in decode\r\n raise JSONDecodeError(\"Extra data\", s, end)\r\njson.decoder.JSONDecodeError: Extra data: line 1 column 916 (char 915)\r\n\r\nDuring handling of the above exception, another exception occurred:\r\n\r\nTraceback (most recent call last):\r\n File \"<stdin>\", line 1, in <module>\r\n File \"/home/slesage/hf/datasets/src/datasets/load.py\", line 1691, in load_dataset\r\n builder_instance.download_and_prepare(\r\n File \"/home/slesage/hf/datasets/src/datasets/builder.py\", line 605, in download_and_prepare\r\n self._download_and_prepare(\r\n File \"/home/slesage/hf/datasets/src/datasets/builder.py\", line 694, in _download_and_prepare\r\n self._prepare_split(split_generator, **prepare_split_kwargs)\r\n File \"/home/slesage/hf/datasets/src/datasets/builder.py\", line 1151, in _prepare_split\r\n for key, table in logging.tqdm(\r\n File \"/home/slesage/.pyenv/versions/datasets/lib/python3.8/site-packages/tqdm/std.py\", line 1168, in __iter__\r\n for obj in iterable:\r\n File \"/home/slesage/hf/datasets/src/datasets/packaged_modules/json/json.py\", line 146, in _generate_tables\r\n raise e\r\n File \"/home/slesage/hf/datasets/src/datasets/packaged_modules/json/json.py\", line 122, in _generate_tables\r\n pa_table = paj.read_json(\r\n File 
\"pyarrow/_json.pyx\", line 246, in pyarrow._json.read_json\r\n File \"pyarrow/error.pxi\", line 143, in pyarrow.lib.pyarrow_internal_check_status\r\n File \"pyarrow/error.pxi\", line 99, in pyarrow.lib.check_status\r\npyarrow.lib.ArrowInvalid: Exceeded maximum rows\r\n```\r\n\r\ncc @lhoestq @albertvillanova @mariosasko ",

"It seems that train.json is not a valid JSON Lines file: its first line contains several JSON objects (a new object starts at char 915 of the first line, and there's no \"\\n\" before it).\r\n\r\nYou need to have one JSON object per line.",

"I'm closing this issue.\r\n\r\n@Pavithree, please, feel free to re-open it if fixing the JSON file does not solve it.",

"Thank you! that fixes the issue."

] | 2022-04-06T11:37:15 | 2022-04-08T07:13:07 | 2022-04-06T14:39:55 |

NONE

| null | null | null |

## Dataset viewer issue - ArrowInvalid: Exceeded maximum rows

**Link:** *https://huggingface.co/datasets/Pavithree/explainLikeImFive*

*This is a subset of the original eli5 dataset https://huggingface.co/datasets/vblagoje/lfqa. I only kept the data samples that belong to one particular subreddit thread. However, the dataset preview for the train split returns the following error:

Status code: 400

Exception: ArrowInvalid

Message: Exceeded maximum rows

When I try to load the same dataset, it also raises ArrowInvalid: Exceeded maximum rows.*

Am I the one who added this dataset? Yes
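The loading error in this thread traces back to `train.json` holding several JSON objects on one line instead of one object per line (JSON Lines). A stdlib-only sketch for detecting and repairing that, using `json.JSONDecoder.raw_decode` to peel off one object at a time; the helper names `is_json_lines` and `to_json_lines` are hypothetical, and the sketch assumes the whole file fits in memory:

```python
import json
from typing import List


def is_json_lines(text: str) -> bool:
    """Return True if every non-empty line parses as exactly one JSON value."""
    for line in text.splitlines():
        if not line.strip():
            continue
        try:
            json.loads(line)  # raises JSONDecodeError("Extra data") on concatenated objects
        except json.JSONDecodeError:
            return False
    return True


def to_json_lines(text: str) -> str:
    """Rewrite concatenated JSON objects as one object per line."""
    decoder = json.JSONDecoder()
    objects: List[object] = []
    pos = 0
    while True:
        # raw_decode does not skip leading whitespace, so skip it manually.
        while pos < len(text) and text[pos].isspace():
            pos += 1
        if pos >= len(text):
            break
        # Parse one JSON value starting at `pos`; returns the value and the end index.
        obj, pos = decoder.raw_decode(text, pos)
        objects.append(obj)
    return "".join(json.dumps(obj, ensure_ascii=False) + "\n" for obj in objects)


# Hypothetical usage on the file from this issue (not run here):
# with open("train.json", encoding="utf-8") as f:
#     fixed = to_json_lines(f.read())
```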

|

{

"url": "https://api.github.com/repos/huggingface/datasets/issues/4107/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

}

|

https://api.github.com/repos/huggingface/datasets/issues/4107/timeline

| null |

completed

|

https://api.github.com/repos/huggingface/datasets/issues/4105

|

https://api.github.com/repos/huggingface/datasets

|

https://api.github.com/repos/huggingface/datasets/issues/4105/labels{/name}

|

https://api.github.com/repos/huggingface/datasets/issues/4105/comments

|

https://api.github.com/repos/huggingface/datasets/issues/4105/events

|

https://github.com/huggingface/datasets/issues/4105

| 1,194,297,119 |

I_kwDODunzps5HL4cf

| 4,105 |

push to hub fails with huggingface-hub 0.5.0

|

{

"login": "frascuchon",

"id": 2518789,

"node_id": "MDQ6VXNlcjI1MTg3ODk=",

"avatar_url": "https://avatars.githubusercontent.com/u/2518789?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/frascuchon",

"html_url": "https://github.com/frascuchon",

"followers_url": "https://api.github.com/users/frascuchon/followers",

"following_url": "https://api.github.com/users/frascuchon/following{/other_user}",

"gists_url": "https://api.github.com/users/frascuchon/gists{/gist_id}",

"starred_url": "https://api.github.com/users/frascuchon/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/frascuchon/subscriptions",

"organizations_url": "https://api.github.com/users/frascuchon/orgs",

"repos_url": "https://api.github.com/users/frascuchon/repos",

"events_url": "https://api.github.com/users/frascuchon/events{/privacy}",

"received_events_url": "https://api.github.com/users/frascuchon/received_events",

"type": "User",

"site_admin": false

}

|

[

{

"id": 1935892857,

"node_id": "MDU6TGFiZWwxOTM1ODkyODU3",

"url": "https://api.github.com/repos/huggingface/datasets/labels/bug",

"name": "bug",

"color": "d73a4a",

"default": true,

"description": "Something isn't working"

}

] |

closed

| false | null |

[] | null |

[

"Hi ! Indeed there was a breaking change in `huggingface_hub` 0.5.0 in `HfApi.create_repo`, which is called here in `datasets` by passing the org name in both the `repo_id` and the `organization` arguments:\r\n\r\nhttps://github.com/huggingface/datasets/blob/2230f7f7d7fbaf102cff356f5a8f3bd1561bea43/src/datasets/arrow_dataset.py#L3363-L3369\r\n\r\nI think we should fix that in `huggingface_hub`, will keep you posted. In the meantime please use `huggingface_hub` 0.4.0",

"I'll be sending a fix for this later today on the `huggingface_hub` side.\r\n\r\nThe error would be converted to a `FutureWarning` if `datasets` used keyword arguments instead of positional ones, for example here: \r\n\r\nhttps://github.com/huggingface/datasets/blob/2230f7f7d7fbaf102cff356f5a8f3bd1561bea43/src/datasets/arrow_dataset.py#L3363-L3369\r\n\r\nto be:\r\n\r\n```python\r\napi.create_repo(\r\n    name=dataset_name,\r\n    token=token,\r\n    repo_type=\"dataset\",\r\n    organization=organization,\r\n    private=private,\r\n)\r\n```\r\n\r\nBut `name` and `organization` are deprecated in `huggingface_hub` 0.5, and people should pass `repo_id='org/name'` instead. Note that `repo_id` was introduced in 0.5, so if `datasets` wants to support older `huggingface_hub` versions (which I encourage it to do), it needs a helper function for that. It can be something like:\r\n\r\n\r\n```python\r\nfrom typing import Optional\r\n\r\n\r\ndef create_repo(\r\n    client,\r\n    name: str,\r\n    token: Optional[str] = None,\r\n    organization: Optional[str] = None,\r\n    private: Optional[bool] = None,\r\n    repo_type: Optional[str] = None,\r\n    exist_ok: Optional[bool] = False,\r\n    space_sdk: Optional[str] = None,\r\n) -> str:\r\n    try:\r\n        return client.create_repo(\r\n            repo_id=f\"{organization}/{name}\" if organization else name,\r\n            token=token,\r\n            private=private,\r\n            repo_type=repo_type,\r\n            exist_ok=exist_ok,\r\n            space_sdk=space_sdk,\r\n        )\r\n    except TypeError:\r\n        # Older huggingface_hub versions do not accept `repo_id`\r\n        return client.create_repo(\r\n            name=name,\r\n            organization=organization,\r\n            token=token,\r\n            private=private,\r\n            repo_type=repo_type,\r\n            exist_ok=exist_ok,\r\n            space_sdk=space_sdk,\r\n        )\r\n```\r\n\r\nplaced in a `utils/_fixes.py`-style file and used internally.\r\n\r\nI'll be sending a patch to `huggingface_hub` to convert the error reported in this issue to a `FutureWarning`.",

"PR with the hotfix on the `huggingface_hub` side: https://github.com/huggingface/huggingface_hub/pull/822",

"We can definitely change `push_to_hub` to use `repo_id` in `datasets` and require `huggingface_hub>=0.5.0`.\r\n\r\nLet me open a PR :)",

"`huggingface_hub` 0.5.1 just got released with a fix, feel free to update `huggingface_hub` ;)"

] | 2022-04-06T08:59:57 | 2022-04-13T14:30:47 | 2022-04-13T14:30:47 |

NONE

| null | null | null |

## Describe the bug

`ds.push_to_hub` fails when pushing a dataset to a repository of the form "org_id/repo_id".

## Steps to reproduce the bug

```python

from datasets import load_dataset

ds = load_dataset("rubrix/news_test")

ds.push_to_hub("<your-user>/news_test", token="<your-token>")

```

## Expected results

The dataset is successfully uploaded

## Actual results

A validation error is raised:

```bash

if repo_id and (name or organization):

> raise ValueError(

"Only pass `repo_id` and leave deprecated `name` and "

"`organization` to be None."

E ValueError: Only pass `repo_id` and leave deprecated `name` and `organization` to be None.

```

## Environment info

<!-- You can run the command `datasets-cli env` and copy-and-paste its output below. -->

- `datasets` version: 1.18.1

- `huggingface-hub`: 0.5

- Platform: macOS

- Python version: 3.8.12

- PyArrow version: 6.0.0

cc @adrinjalali
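The compatibility helper proposed in the thread tries the new `repo_id` keyword first and falls back to the deprecated `name`/`organization` keywords when the installed `huggingface_hub` raises `TypeError`. A trimmed, stdlib-only sketch of that pattern; `create_repo_compat` is a hypothetical name, and the two stub clients are hypothetical stand-ins for `HfApi` before and after 0.5, used only so the fallback can be exercised without network access:

```python
from typing import Any, Optional


def create_repo_compat(
    client: Any,
    name: str,
    organization: Optional[str] = None,
    **kwargs: Any,
) -> Any:
    """Call client.create_repo with `repo_id`, falling back to `name`/`organization`."""
    # Only prefix the organization when one is given, to avoid "None/name".
    repo_id = f"{organization}/{name}" if organization else name
    try:
        return client.create_repo(repo_id=repo_id, **kwargs)
    except TypeError:
        # Older clients do not accept `repo_id` as a keyword argument.
        return client.create_repo(name=name, organization=organization, **kwargs)


class NewStyleClient:
    """Hypothetical stub that only accepts `repo_id` (like huggingface_hub >= 0.5)."""

    def create_repo(self, repo_id: str, repo_type: Optional[str] = None) -> str:
        return f"new:{repo_id}:{repo_type}"


class OldStyleClient:
    """Hypothetical stub that only accepts `name`/`organization` (like < 0.5)."""

    def create_repo(
        self, name: str, organization: Optional[str] = None, repo_type: Optional[str] = None
    ) -> str:
        return f"old:{organization}/{name}:{repo_type}"
```

Catching `TypeError` is a blunt version check: it would also trigger the fallback for unrelated `TypeError`s raised inside `create_repo`, which is one argument for simply requiring `huggingface_hub>=0.5.0`, as proposed later in the thread.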

|

{

"url": "https://api.github.com/repos/huggingface/datasets/issues/4105/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

}

|

https://api.github.com/repos/huggingface/datasets/issues/4105/timeline

| null |

completed

|

https://api.github.com/repos/huggingface/datasets/issues/4104

|

https://api.github.com/repos/huggingface/datasets

|

https://api.github.com/repos/huggingface/datasets/issues/4104/labels{/name}

|

https://api.github.com/repos/huggingface/datasets/issues/4104/comments

|

https://api.github.com/repos/huggingface/datasets/issues/4104/events

|

https://github.com/huggingface/datasets/issues/4104

| 1,194,072,966 |

I_kwDODunzps5HLBuG

| 4,104 |

Add time series data - stock market

|

{

"login": "INF800",

"id": 45640029,

"node_id": "MDQ6VXNlcjQ1NjQwMDI5",

"avatar_url": "https://avatars.githubusercontent.com/u/45640029?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/INF800",

"html_url": "https://github.com/INF800",

"followers_url": "https://api.github.com/users/INF800/followers",

"following_url": "https://api.github.com/users/INF800/following{/other_user}",

"gists_url": "https://api.github.com/users/INF800/gists{/gist_id}",

"starred_url": "https://api.github.com/users/INF800/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/INF800/subscriptions",

"organizations_url": "https://api.github.com/users/INF800/orgs",

"repos_url": "https://api.github.com/users/INF800/repos",

"events_url": "https://api.github.com/users/INF800/events{/privacy}",

"received_events_url": "https://api.github.com/users/INF800/received_events",

"type": "User",

"site_admin": false

}

|

[

{

"id": 2067376369,

"node_id": "MDU6TGFiZWwyMDY3Mzc2MzY5",

"url": "https://api.github.com/repos/huggingface/datasets/labels/dataset%20request",

"name": "dataset request",

"color": "e99695",

"default": false,

"description": "Requesting to add a new dataset"

}

] |

open

| false | null |

[] | null |

[

"Can I use the instructions in the link below for a time series dataset as well? \r\nhttps://github.com/huggingface/datasets/blob/master/ADD_NEW_DATASET.md ",

"cc'ing @kashif and @NielsRogge for visibility!",

"@INF800 happy to add this dataset! I will try to open a PR by the end of the day, if you could kindly point me to the dataset. Also, note that we already have a number of time series datasets checked in, e.g. `electricity_load_diagrams` or `monash_tsf`, and ideally this dataset would use a similar format. ",

"Thank you. This is what the raw data looks like before cleaning, for each individual stock:\r\n\r\n1. https://github.com/INF800/marktech/tree/raw-data/f/data/raw\r\n2. https://github.com/INF800/marktech/tree/raw-data/t/data/raw\r\n3. https://github.com/INF800/marktech/tree/raw-data/rdfn/data/raw\r\n4. https://github.com/INF800/marktech/tree/raw-data/irbt/data/raw\r\n5. https://github.com/INF800/marktech/tree/raw-data/hll/data/raw\r\n6. https://github.com/INF800/marktech/tree/raw-data/infy/data/raw\r\n7. https://github.com/INF800/marktech/tree/raw-data/reli/data/raw\r\n8. https://github.com/INF800/marktech/tree/raw-data/hdbk/data/raw\r\n\r\n> Scraping is automated using GitHub Actions, so a new file is added to each of the above links every day.\r\n\r\nI can rewrite the cleaning scripts to make sure the data fits HF dataset standards. (P.S. I am very new to HF datasets.)\r\n\r\nThe dataset above can be converted into a univariate regression / multivariate regression / sequence-to-sequence generation dataset, etc. So, is there some kind of transformation module that reads the data as a generic time series dataset (`GenericTimeData`) and converts it into other datasets for specific ML tasks? **With this kind of transformation module, I would only have to add the data once** and could apply the transformations whenever necessary.\r\n\r\nAdditionally, some kind of versioning for the dataset would be really helpful, because it will keep updating, as time series datasets especially tend to do. ",

"thanks @INF800 I'll have a look. I believe it should be possible to incorporate this into the time-series format.",

"Referencing https://github.com/qingsongedu/time-series-transformers-review",

"@INF800 yes, I am aware of the review repository and paper, which is more or less a collection of abstracts. I am working on a unified library of implementations of these papers, together with datasets, so that we can compare/contrast them and build upon the research, but I am not ready to share it publicly just yet.\r\n\r\nIn any case, regarding your dataset: from looking at the CSV files, it seems to be a mixture of textual and numerical data, sometimes in the same column. As you know, time series models need purely numeric data, so I would need your help disambiguating the data you have collected, and perhaps starting with just the numerical data... \r\n\r\nDo you think you can make a version with just numerical data?",

"> @INF800 yes, I am aware of the review repository and paper, which is more or less a collection of abstracts. I am working on a unified library of implementations of these papers, together with datasets, so that we can compare/contrast them and build upon the research, but I am not ready to share it publicly just yet.\r\n> \r\n> In any case, regarding your dataset: from looking at the CSV files, it seems to be a mixture of textual and numerical data, sometimes in the same column. As you know, time series models need purely numeric data, so I would need your help disambiguating the data you have collected, and perhaps starting with just the numerical data...\r\n> \r\n> Do you think you can make a version with just numerical data?\r\n\r\nI will share the numeric data and the conversion script by the end of this week. \r\n\r\nI am currently on a business trip, and the data is on my desktop."

] | 2022-04-06T05:46:58 | 2022-04-11T09:07:10 | null |

NONE

| null | null | null |

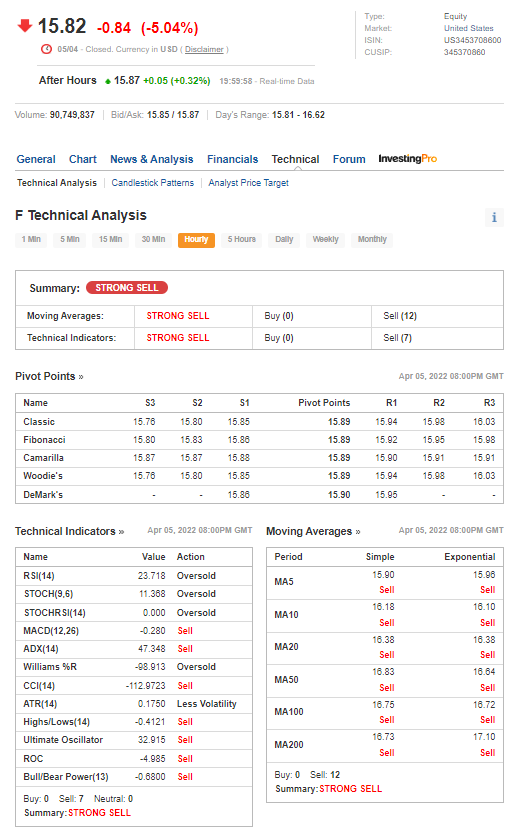

## Adding a Time Series Dataset

- **Name:** 2-minute ticker data for the stock market

- **Description:** Data for 8 stocks (4 NSE stocks and 4 NASDAQ stocks) collected for 1 month after the start of the Russia-Ukraine war, along with technical indicators (additional features), as shown in the image

- **Data:** Collected by myself from investing.com

- **Motivation:** Test the applicability of transformer-based models to stock market / time series problems
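The thread asks for a version of this data containing only numeric columns, since time series models need purely numeric input. A stdlib-only sketch of one way to produce it, keeping a column only if every non-empty cell parses as a float; the CSV layout, the column names in the usage test, and the helper name `numeric_columns_only` are assumptions for illustration, not the actual format of the scraped files:

```python
import csv
import io
from typing import Dict, List, Optional


def _is_number(value: str) -> bool:
    """True if `value` parses as a float (e.g. "101.5", "-3", "1e3")."""
    try:
        float(value)
        return True
    except ValueError:
        return False


def numeric_columns_only(csv_text: str) -> List[Dict[str, Optional[float]]]:
    """Keep only the columns whose non-empty cells are all numeric.

    Empty cells in a kept column become None; columns that mix text and
    numbers, or are entirely empty, are dropped.
    """
    reader = csv.DictReader(io.StringIO(csv_text))
    rows = list(reader)
    if not rows or reader.fieldnames is None:
        return []

    def cell(row: Dict[str, str], col: str) -> str:
        # DictReader fills missing trailing fields with None.
        return (row.get(col) or "").strip()

    numeric_cols = [
        col
        for col in reader.fieldnames
        if any(cell(r, col) for r in rows)
        and all(_is_number(cell(r, col)) for r in rows if cell(r, col))
    ]
    return [
        {col: float(cell(r, col)) if cell(r, col) else None for col in numeric_cols}
        for r in rows
    ]
```

Dropping a mixed column entirely is a deliberately conservative choice; a column that mixes text and numbers may need manual disambiguation rather than silent coercion.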

|

{

"url": "https://api.github.com/repos/huggingface/datasets/issues/4104/reactions",

"total_count": 1,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 1,

"rocket": 0,

"eyes": 0

}

|

https://api.github.com/repos/huggingface/datasets/issues/4104/timeline

| null | null |

https://api.github.com/repos/huggingface/datasets/issues/4101

|

https://api.github.com/repos/huggingface/datasets

|

https://api.github.com/repos/huggingface/datasets/issues/4101/labels{/name}

|

https://api.github.com/repos/huggingface/datasets/issues/4101/comments

|

https://api.github.com/repos/huggingface/datasets/issues/4101/events

|

https://github.com/huggingface/datasets/issues/4101

| 1,193,399,204 |

I_kwDODunzps5HIdOk

| 4,101 |

How can I download only the train and test split for full numbers using load_dataset()?

|

{

"login": "Nakkhatra",

"id": 64383902,

"node_id": "MDQ6VXNlcjY0MzgzOTAy",

"avatar_url": "https://avatars.githubusercontent.com/u/64383902?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/Nakkhatra",

"html_url": "https://github.com/Nakkhatra",

"followers_url": "https://api.github.com/users/Nakkhatra/followers",

"following_url": "https://api.github.com/users/Nakkhatra/following{/other_user}",

"gists_url": "https://api.github.com/users/Nakkhatra/gists{/gist_id}",

"starred_url": "https://api.github.com/users/Nakkhatra/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/Nakkhatra/subscriptions",

"organizations_url": "https://api.github.com/users/Nakkhatra/orgs",

"repos_url": "https://api.github.com/users/Nakkhatra/repos",

"events_url": "https://api.github.com/users/Nakkhatra/events{/privacy}",

"received_events_url": "https://api.github.com/users/Nakkhatra/received_events",

"type": "User",

"site_admin": false

}

|

[

{

"id": 1935892871,

"node_id": "MDU6TGFiZWwxOTM1ODkyODcx",

"url": "https://api.github.com/repos/huggingface/datasets/labels/enhancement",

"name": "enhancement",

"color": "a2eeef",

"default": true,

"description": "New feature or request"

}

] |

open

| false | null |

[] | null |

[

"Hi! Can you please specify the full name of the dataset? IIRC `full_numbers` is one of the configs of the `svhn` dataset, and its generation is slow because the data is stored in binary MATLAB files. Even if you request a specific split, `datasets` downloads all of them, but we plan to fix that soon so that only the requested split is downloaded.\r\n\r\nIf you are in a hurry, download the `svhn` script [here](https://huggingface.co/datasets/svhn/blob/main/svhn.py), remove [this code](https://huggingface.co/datasets/svhn/blob/main/svhn.py#L155-L162), and run:\r\n```python\r\nfrom datasets import load_dataset\r\ndset = load_dataset(\"path/to/your/local/script.py\", \"full_numbers\")\r\n```\r\n\r\nAnd to make loading easier in Colab, you can create a dataset repo on the Hub and upload the script there. Or push the script to Google Drive and mount the drive in Colab."

] | 2022-04-05T16:00:15 | 2022-04-06T13:09:01 | null |

NONE

| null | null | null |

How can I download only the train and test splits for full numbers using `load_dataset()`?

I do not need the extra split, and just downloading the data takes 40 minutes in Colab. I am very short on time. Please help.

|

{

"url": "https://api.github.com/repos/huggingface/datasets/issues/4101/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

}

|

https://api.github.com/repos/huggingface/datasets/issues/4101/timeline

| null | null |

https://api.github.com/repos/huggingface/datasets/issues/4099

|

https://api.github.com/repos/huggingface/datasets

|

https://api.github.com/repos/huggingface/datasets/issues/4099/labels{/name}

|

https://api.github.com/repos/huggingface/datasets/issues/4099/comments

|

https://api.github.com/repos/huggingface/datasets/issues/4099/events

|

https://github.com/huggingface/datasets/issues/4099

| 1,193,253,768 |

I_kwDODunzps5HH5uI

| 4,099 |

UnicodeDecodeError: 'ascii' codec can't decode byte 0xe5 in position 213: ordinal not in range(128)

|

{

"login": "andreybond",

"id": 20210017,

"node_id": "MDQ6VXNlcjIwMjEwMDE3",

"avatar_url": "https://avatars.githubusercontent.com/u/20210017?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/andreybond",

"html_url": "https://github.com/andreybond",

"followers_url": "https://api.github.com/users/andreybond/followers",

"following_url": "https://api.github.com/users/andreybond/following{/other_user}",

"gists_url": "https://api.github.com/users/andreybond/gists{/gist_id}",

"starred_url": "https://api.github.com/users/andreybond/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/andreybond/subscriptions",

"organizations_url": "https://api.github.com/users/andreybond/orgs",

"repos_url": "https://api.github.com/users/andreybond/repos",

"events_url": "https://api.github.com/users/andreybond/events{/privacy}",

"received_events_url": "https://api.github.com/users/andreybond/received_events",

"type": "User",

"site_admin": false

}

|

[

{

"id": 1935892857,

"node_id": "MDU6TGFiZWwxOTM1ODkyODU3",

"url": "https://api.github.com/repos/huggingface/datasets/labels/bug",

"name": "bug",

"color": "d73a4a",

"default": true,

"description": "Something isn't working"

}

] |

closed

| false |

{

"login": "albertvillanova",

"id": 8515462,

"node_id": "MDQ6VXNlcjg1MTU0NjI=",

"avatar_url": "https://avatars.githubusercontent.com/u/8515462?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/albertvillanova",

"html_url": "https://github.com/albertvillanova",

"followers_url": "https://api.github.com/users/albertvillanova/followers",

"following_url": "https://api.github.com/users/albertvillanova/following{/other_user}",

"gists_url": "https://api.github.com/users/albertvillanova/gists{/gist_id}",

"starred_url": "https://api.github.com/users/albertvillanova/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/albertvillanova/subscriptions",

"organizations_url": "https://api.github.com/users/albertvillanova/orgs",

"repos_url": "https://api.github.com/users/albertvillanova/repos",

"events_url": "https://api.github.com/users/albertvillanova/events{/privacy}",

"received_events_url": "https://api.github.com/users/albertvillanova/received_events",

"type": "User",

"site_admin": false

}

|

[

{

"login": "albertvillanova",

"id": 8515462,

"node_id": "MDQ6VXNlcjg1MTU0NjI=",

"avatar_url": "https://avatars.githubusercontent.com/u/8515462?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/albertvillanova",

"html_url": "https://github.com/albertvillanova",

"followers_url": "https://api.github.com/users/albertvillanova/followers",

"following_url": "https://api.github.com/users/albertvillanova/following{/other_user}",

"gists_url": "https://api.github.com/users/albertvillanova/gists{/gist_id}",

"starred_url": "https://api.github.com/users/albertvillanova/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/albertvillanova/subscriptions",

"organizations_url": "https://api.github.com/users/albertvillanova/orgs",

"repos_url": "https://api.github.com/users/albertvillanova/repos",

"events_url": "https://api.github.com/users/albertvillanova/events{/privacy}",

"received_events_url": "https://api.github.com/users/albertvillanova/received_events",

"type": "User",

"site_admin": false

}

] | null |

[

"Hi @andreybond, thanks for reporting.\r\n\r\nUnfortunately, I'm not able to reproduce your issue:\r\n```python\r\nIn [4]: from datasets import load_dataset\r\n ...: datasets = load_dataset(\"nielsr/XFUN\", \"xfun.ja\")\r\n\r\nIn [5]: datasets\r\nOut[5]: \r\nDatasetDict({\r\n train: Dataset({\r\n features: ['id', 'input_ids', 'bbox', 'labels', 'image', 'entities', 'relations'],\r\n num_rows: 194\r\n })\r\n validation: Dataset({\r\n features: ['id', 'input_ids', 'bbox', 'labels', 'image', 'entities', 'relations'],\r\n num_rows: 71\r\n })\r\n})\r\n```\r\n\r\nThe only reason I can imagine for this issue is that your default encoding is not \"UTF-8\" (but ASCII instead). A non-UTF-8 default is common on Windows machines, but you say your environment is a Linux machine. Maybe you changed your machine's default encoding?\r\n\r\nCould you please check this?\r\n```python\r\nIn [6]: import sys\r\n\r\nIn [7]: sys.getdefaultencoding()\r\nOut[7]: 'utf-8'\r\n```",

"I opened a PR in the original dataset loading script:\r\n- microsoft/unilm#677\r\n\r\nand fixed the corresponding dataset script on the Hub:\r\n- https://huggingface.co/datasets/nielsr/XFUN/commit/73ba5e026621e05fb756ae0f267eb49971f70ebd",

"import sys\r\nsys.getdefaultencoding()\r\n\r\nreturned: 'utf-8'\r\n\r\n---------------------\r\n\r\nI've just cloned the master branch, and your fix works! Thank you!"

] | 2022-04-05T14:42:38 | 2022-04-06T06:37:44 | 2022-04-06T06:35:54 |

NONE

| null | null | null |

## Describe the bug

The error `UnicodeDecodeError: 'ascii' codec can't decode byte 0xe5 in position 213: ordinal not in range(128)` is raised when loading the dataset with `load_dataset`, after the data files have been downloaded and extracted.

## Steps to reproduce the bug

```python

from datasets import load_dataset

datasets = load_dataset("nielsr/XFUN", "xfun.ja")

```

## Expected results

The dataset should load without raising an exception.

## Actual results

Stack trace (from the second execution):

```
Downloading and preparing dataset xfun/xfun.ja to /root/.cache/huggingface/datasets/nielsr___xfun/xfun.ja/0.0.0/e06e948b673d1be9a390a83c05c10e49438bf03dd85ae9a4fe06f8747a724477...
Downloading data files: 100%
2/2 [00:00<00:00, 88.48it/s]
Extracting data files: 100%
2/2 [00:00<00:00, 79.60it/s]

UnicodeDecodeError                        Traceback (most recent call last)
<ipython-input-31-79c26bd1109c> in <module>
1 from datasets import load_dataset
2
----> 3 datasets = load_dataset("nielsr/XFUN", "xfun.ja")

/usr/local/lib/python3.6/dist-packages/datasets/load.py in load_dataset(path, name, data_dir, data_files, split, cache_dir, features, download_config, download_mode, ignore_verifications, keep_in_memory, save_infos, revision, use_auth_token, task, streaming, **config_kwargs)

/usr/local/lib/python3.6/dist-packages/datasets/builder.py in download_and_prepare(self, download_config, download_mode, ignore_verifications, try_from_hf_gcs, dl_manager, base_path, use_auth_token, **download_and_prepare_kwargs)
604 )
605
--> 606 # By default, return all splits
607 if split is None:
608 split = {s: s for s in self.info.splits}

/usr/local/lib/python3.6/dist-packages/datasets/builder.py in _download_and_prepare(self, dl_manager, verify_infos)

/usr/local/lib/python3.6/dist-packages/datasets/builder.py in _download_and_prepare(self, dl_manager, verify_infos, **prepare_split_kwargs)
692 Args:
693 split: `datasets.Split` which subset of the data to read.
--> 694
695 Returns:
696 `Dataset`

/usr/local/lib/python3.6/dist-packages/datasets/builder.py in _prepare_split(self, split_generator, check_duplicate_keys)

/usr/local/lib/python3.6/dist-packages/tqdm/notebook.py in __iter__(self)
252 if not self.disable:
253 self.display(check_delay=False)
--> 254
255 def __iter__(self):
256 try:

/usr/local/lib/python3.6/dist-packages/tqdm/std.py in __iter__(self)
1183 for obj in iterable:
1184 yield obj
-> 1185 return
1186
1187 mininterval = self.mininterval

~/.cache/huggingface/modules/datasets_modules/datasets/nielsr--XFUN/e06e948b673d1be9a390a83c05c10e49438bf03dd85ae9a4fe06f8747a724477/XFUN.py in _generate_examples(self, filepaths)
140 logger.info("Generating examples from = %s", filepath)
141 with open(filepath[0], "r") as f:
--> 142 data = json.load(f)
143
144 for doc in data["documents"]:

/usr/lib/python3.6/json/__init__.py in load(fp, cls, object_hook, parse_float, parse_int, parse_constant, object_pairs_hook, **kw)
294
295 """
--> 296 return loads(fp.read(),
297 cls=cls, object_hook=object_hook,
298 parse_float=parse_float, parse_int=parse_int,

/usr/lib/python3.6/encodings/ascii.py in decode(self, input, final)
24 class IncrementalDecoder(codecs.IncrementalDecoder):
25 def decode(self, input, final=False):
---> 26 return codecs.ascii_decode(input, self.errors)[0]
27
28 class StreamWriter(Codec,codecs.StreamWriter):

UnicodeDecodeError: 'ascii' codec can't decode byte 0xe5 in position 213: ordinal not in range(128)
```
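The failing frame is `open(filepath[0], "r")` in the cached `XFUN.py`. It passes no `encoding`, so the file is decoded with whatever the process locale prefers. Under Python 3.6 in a container with no UTF-8 locale configured, that is typically ASCII. The sketch below is a minimal reproduction, assuming the XFUN JSON files are UTF-8 encoded and that the fix amounts to passing an explicit `encoding="utf-8"`. `SAMPLE`, `write_sample`, and `load_json` are hypothetical names, not part of the dataset script. The ASCII case is simulated by passing `encoding="ascii"` rather than changing the locale.

```python
import json
import os
import tempfile

# Hypothetical sample document: any non-ASCII text (here Japanese) triggers the error.
SAMPLE = {"documents": [{"id": "ja_train_0", "text": "日本語のテキスト"}]}


def write_sample(path: str) -> None:
    # Write the JSON as UTF-8 (assumed to match how the XFUN files are encoded).
    with open(path, "w", encoding="utf-8") as f:
        json.dump(SAMPLE, f, ensure_ascii=False)


def load_json(path: str, encoding: str) -> dict:
    # Passing `encoding` explicitly makes decoding independent of the process locale.
    with open(path, "r", encoding=encoding) as f:
        return json.load(f)


with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "ja.train.json")
    write_sample(path)

    # Simulate an environment whose locale makes open() default to ASCII.
    ascii_failed = False
    try:
        load_json(path, encoding="ascii")
    except UnicodeDecodeError as err:
        ascii_failed = True
        print("ascii decode failed:", err.reason)

    # An explicit UTF-8 encoding decodes the same file correctly.
    data = load_json(path, encoding="utf-8")
    print(data["documents"][0]["text"])
```

Pinning the encoding in the loading script fixes the problem for every user. Changing the container's locale only works around it in one environment.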

## Environment info


- `datasets` version: 2.0.0 (but reproduced with many previous versions)

- Platform: Docker (Linux da5b74136d6b 5.3.0-1031-azure #32~18.04.1-Ubuntu SMP Mon Jun 22 15:27:23 UTC 2020 x86_64 x86_64 x86_64 GNU/Linux); base Docker image: huggingface/transformers-pytorch-cpu

- Python version: 3.6.9

- PyArrow version: 6.0.1
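The comments check `sys.getdefaultencoding()`, which returns `'utf-8'` on every Python 3 interpreter. It therefore cannot rule out this bug. On the Python versions involved here, `open()` in text mode without an `encoding=` argument uses `locale.getpreferredencoding(False)` instead. The helper below is a hypothetical diagnostic, not part of `datasets` or `datasets-cli env`, that reports both values side by side. In a minimal Python 3.6 container without a UTF-8 locale, the second value would likely be an ASCII codec name.

```python
import locale
import sys


def describe_text_encodings() -> dict:
    """Return the two encodings that are easy to confuse when debugging a UnicodeDecodeError."""
    return {
        # Default codec for str.encode()/bytes.decode() with no explicit encoding; 'utf-8' on Python 3.
        "sys_default": sys.getdefaultencoding(),
        # Codec that open() uses in text mode when no `encoding=` is passed; it depends on the
        # locale (and on UTF-8 mode where available), so it can be ASCII in a bare container.
        "open_default": locale.getpreferredencoding(False),
    }


print(describe_text_encodings())
```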

|

{

"url": "https://api.github.com/repos/huggingface/datasets/issues/4099/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

}

|

https://api.github.com/repos/huggingface/datasets/issues/4099/timeline

| null |

completed

|

https://api.github.com/repos/huggingface/datasets/issues/4096

|

https://api.github.com/repos/huggingface/datasets

|

https://api.github.com/repos/huggingface/datasets/issues/4096/labels{/name}

|

https://api.github.com/repos/huggingface/datasets/issues/4096/comments

|

https://api.github.com/repos/huggingface/datasets/issues/4096/events

|

https://github.com/huggingface/datasets/issues/4096

| 1,193,165,229 |

I_kwDODunzps5HHkGt

| 4,096 |

Add support for streaming Zarr stores for hosted datasets

|

{

"login": "jacobbieker",

"id": 7170359,

"node_id": "MDQ6VXNlcjcxNzAzNTk=",

"avatar_url": "https://avatars.githubusercontent.com/u/7170359?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/jacobbieker",

"html_url": "https://github.com/jacobbieker",

"followers_url": "https://api.github.com/users/jacobbieker/followers",

"following_url": "https://api.github.com/users/jacobbieker/following{/other_user}",

"gists_url": "https://api.github.com/users/jacobbieker/gists{/gist_id}",

"starred_url": "https://api.github.com/users/jacobbieker/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/jacobbieker/subscriptions",

"organizations_url": "https://api.github.com/users/jacobbieker/orgs",

"repos_url": "https://api.github.com/users/jacobbieker/repos",

"events_url": "https://api.github.com/users/jacobbieker/events{/privacy}",

"received_events_url": "https://api.github.com/users/jacobbieker/received_events",

"type": "User",

"site_admin": false

}

|

[

{

"id": 1935892871,

"node_id": "MDU6TGFiZWwxOTM1ODkyODcx",

"url": "https://api.github.com/repos/huggingface/datasets/labels/enhancement",

"name": "enhancement",

"color": "a2eeef",

"default": true,

"description": "New feature or request"

}

] |

closed

| false |

{

"login": "albertvillanova",

"id": 8515462,

"node_id": "MDQ6VXNlcjg1MTU0NjI=",

"avatar_url": "https://avatars.githubusercontent.com/u/8515462?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/albertvillanova",

"html_url": "https://github.com/albertvillanova",

"followers_url": "https://api.github.com/users/albertvillanova/followers",

"following_url": "https://api.github.com/users/albertvillanova/following{/other_user}",

"gists_url": "https://api.github.com/users/albertvillanova/gists{/gist_id}",

"starred_url": "https://api.github.com/users/albertvillanova/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/albertvillanova/subscriptions",

"organizations_url": "https://api.github.com/users/albertvillanova/orgs",

"repos_url": "https://api.github.com/users/albertvillanova/repos",

"events_url": "https://api.github.com/users/albertvillanova/events{/privacy}",

"received_events_url": "https://api.github.com/users/albertvillanova/received_events",

"type": "User",

"site_admin": false

}

|

[

{

"login": "albertvillanova",

"id": 8515462,

"node_id": "MDQ6VXNlcjg1MTU0NjI=",

"avatar_url": "https://avatars.githubusercontent.com/u/8515462?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/albertvillanova",

"html_url": "https://github.com/albertvillanova",

"followers_url": "https://api.github.com/users/albertvillanova/followers",

"following_url": "https://api.github.com/users/albertvillanova/following{/other_user}",

"gists_url": "https://api.github.com/users/albertvillanova/gists{/gist_id}",

"starred_url": "https://api.github.com/users/albertvillanova/starred{/owner}{/repo}",