| model_id | model_card | model_labels |
|---|---|---|

hn11235/plant-seedlings-model

|

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# plant-seedlings-model

This model is a fine-tuned version of [google/vit-large-patch16-224-in21k](https://huggingface.co/google/vit-large-patch16-224-in21k) on the imagefolder dataset.

It achieves the following results on the evaluation set:

- Loss: 0.1235

- Accuracy: 0.9783

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0002

- train_batch_size: 16

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 20

- mixed_precision_training: Native AMP
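
With `lr_scheduler_type: linear` and no warmup listed, the learning rate should start at 0.0002 and decay linearly toward zero over the run. The following is a minimal sketch of that decay, assuming the standard linear-decay formula (the zero-warmup case of `transformers`' `get_linear_schedule_with_warmup`). The `total_steps` value is hypothetical, because the card does not state the exact number of optimizer steps.

```python
def linear_decay_lr(step: int, peak_lr: float, total_steps: int) -> float:
    """Learning rate after `step` optimizer steps under linear decay with no warmup."""
    if total_steps <= 0:
        raise ValueError("total_steps must be positive")
    # Fraction of training remaining, clipped at zero once training is over
    remaining = max(0.0, (total_steps - step) / total_steps)
    return peak_lr * remaining


# Hypothetical run length, used only for illustration
total_steps = 1000
for step in (0, 250, 500, 1000):
    print(step, linear_decay_lr(step, peak_lr=2e-4, total_steps=total_steps))
```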

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |
|:-------------:|:-----:|:----:|:---------------:|:--------:|
| 0.3236 | 1.27 | 500 | 0.2747 | 0.9019 |
| 0.2273 | 2.54 | 1000 | 0.3031 | 0.9038 |
| 0.082 | 3.82 | 1500 | 0.2103 | 0.9280 |
| 0.061 | 5.09 | 2000 | 0.2235 | 0.9408 |
| 0.0668 | 6.36 | 2500 | 0.1633 | 0.9554 |
| 0.0739 | 7.63 | 3000 | 0.1561 | 0.9586 |
| 0.0836 | 8.91 | 3500 | 0.1904 | 0.9446 |
| 0.0078 | 10.18 | 4000 | 0.2045 | 0.9535 |
| 0.087 | 11.45 | 4500 | 0.4487 | 0.9146 |
| 0.0119 | 12.72 | 5000 | 0.2162 | 0.9567 |
| 0.0002 | 13.99 | 5500 | 0.1157 | 0.9758 |
| 0.0001 | 15.27 | 6000 | 0.1199 | 0.9771 |
| 0.0001 | 16.54 | 6500 | 0.1215 | 0.9790 |
| 0.0001 | 17.81 | 7000 | 0.1223 | 0.9790 |
| 0.0001 | 19.08 | 7500 | 0.1235 | 0.9783 |
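
The reported evaluation results (loss 0.1235, accuracy 0.9783) match the last logged row. They do not match the row with the lowest validation loss or the row with the highest accuracy. This small sketch uses only the numbers from the table to show which checkpoint is best under each criterion:

```python
# (step, validation_loss, accuracy) copied from the training-results table above
rows = [
    (500, 0.2747, 0.9019),
    (1000, 0.3031, 0.9038),
    (1500, 0.2103, 0.9280),
    (2000, 0.2235, 0.9408),
    (2500, 0.1633, 0.9554),
    (3000, 0.1561, 0.9586),
    (3500, 0.1904, 0.9446),
    (4000, 0.2045, 0.9535),
    (4500, 0.4487, 0.9146),
    (5000, 0.2162, 0.9567),
    (5500, 0.1157, 0.9758),
    (6000, 0.1199, 0.9771),
    (6500, 0.1215, 0.9790),
    (7000, 0.1223, 0.9790),
    (7500, 0.1235, 0.9783),
]

best_by_loss = min(rows, key=lambda r: r[1])
best_by_acc = max(rows, key=lambda r: r[2])  # max() keeps the first of tied rows
print("lowest validation loss:", best_by_loss)
print("highest accuracy:", best_by_acc)
```

Lower validation loss and higher accuracy point to different steps here (5500 vs. 6500), so the criterion used to pick a checkpoint should be stated explicitly.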

### Framework versions

- Transformers 4.28.1

- Pytorch 2.0.0+cu118

- Datasets 2.11.0

- Tokenizers 0.13.3

|

[

"black-grass",

"charlock",

"small-flowered cranesbill",

"sugar beet",

"cleavers",

"common chickweed",

"common wheat",

"fat hen",

"loose silky-bent",

"maize",

"scentless mayweed",

"shepherds purse"

] |

Soulaimen/swin-tiny-patch4-window7-224-long_sleeve

|

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# swin-tiny-patch4-window7-224-long_sleeve

This model is a fine-tuned version of [microsoft/swin-tiny-patch4-window7-224](https://huggingface.co/microsoft/swin-tiny-patch4-window7-224) on the imagefolder dataset.

It achieves the following results on the evaluation set:

- Loss: 0.0078

- Accuracy: 0.9965

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- gradient_accumulation_steps: 4

- total_train_batch_size: 32

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_ratio: 0.1

- num_epochs: 3
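
The reported `total_train_batch_size` is the product of the per-device batch size and the number of gradient-accumulation steps, multiplied by the device count in multi-GPU runs. Here is a small sketch of that arithmetic, assuming a single device. That assumption is consistent with 8 × 4 = 32 in this card.

```python
def effective_batch_size(per_device_batch: int, grad_accum_steps: int, num_devices: int = 1) -> int:
    """Number of examples contributing to each optimizer update."""
    return per_device_batch * grad_accum_steps * num_devices


print(effective_batch_size(8, 4))  # 32
```

The same relation gives the totals listed in the other cards in this table: 16 × 4 = 64 and 8 × 7 = 56.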

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |
|:-------------:|:-----:|:----:|:---------------:|:--------:|
| 0.07 | 0.99 | 80 | 0.0078 | 0.9965 |
| 0.0402 | 1.99 | 161 | 0.0074 | 0.9965 |
| 0.0288 | 2.97 | 240 | 0.0068 | 0.9965 |

### Framework versions

- Transformers 4.28.1

- Pytorch 2.0.0+cu118

- Datasets 2.11.0

- Tokenizers 0.13.3

|

[

"chemise",

"hoodies"

] |

Soulaimen/swin-tiny-patch4-window7-224-short_sleeve

|

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# swin-tiny-patch4-window7-224-short_sleeve

This model is a fine-tuned version of [microsoft/swin-tiny-patch4-window7-224](https://huggingface.co/microsoft/swin-tiny-patch4-window7-224) on the imagefolder dataset.

It achieves the following results on the evaluation set:

- Loss: 0.0052

- Accuracy: 0.9973

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- gradient_accumulation_steps: 4

- total_train_batch_size: 32

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_ratio: 0.1

- num_epochs: 3
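
With `lr_scheduler_warmup_ratio: 0.1`, the ratio has to be converted into a whole number of warmup steps. Recent `transformers` versions do this by rounding up the product of the ratio and the total number of optimizer steps. The sketch below shows that conversion. It assumes the run's total length equals the last step logged in the results table (315), which the card does not state directly.

```python
import math


def warmup_steps_from_ratio(total_steps: int, warmup_ratio: float) -> int:
    """Round the warmup fraction up to a whole number of optimizer steps."""
    return math.ceil(total_steps * warmup_ratio)


# Assumes 315 total optimizer steps, the last step logged in this card's table
print(warmup_steps_from_ratio(315, 0.1))  # 32
```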

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |
|:-------------:|:-----:|:----:|:---------------:|:--------:|
| 0.1194 | 1.0 | 105 | 0.0181 | 0.992 |
| 0.1087 | 2.0 | 211 | 0.0174 | 0.992 |
| 0.0131 | 2.99 | 315 | 0.0052 | 0.9973 |

### Framework versions

- Transformers 4.28.1

- Pytorch 2.0.0+cu118

- Datasets 2.11.0

- Tokenizers 0.13.3

|

[

"chemise",

"t-shirt"

] |

harish03/catbreed

|

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# catbreed

This model is a fine-tuned version of [google/vit-base-patch16-224-in21k](https://huggingface.co/google/vit-base-patch16-224-in21k) on the catbreed dataset.

It achieves the following results on the evaluation set:

- Loss: 0.7210

- Accuracy: 0.9252

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- gradient_accumulation_steps: 4

- total_train_batch_size: 64

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_ratio: 0.1

- num_epochs: 5
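
A linear scheduler with a warmup ratio ramps the learning rate up from 0 to its peak and then decays it back to 0. The following is a minimal sketch of that shape, mirroring the multiplier used by `transformers`' `get_linear_schedule_with_warmup`. It assumes 145 total optimizer steps (the last step logged in the results table) and 15 warmup steps (0.1 × 145 rounded up). Neither number is stated directly in the card.

```python
def linear_warmup_decay_lr(step: int, peak_lr: float, warmup_steps: int, total_steps: int) -> float:
    """Linear warmup from 0 to peak_lr, then linear decay back to 0."""
    if step < warmup_steps:
        return peak_lr * step / max(1, warmup_steps)
    remaining = (total_steps - step) / max(1, total_steps - warmup_steps)
    return peak_lr * max(0.0, remaining)


# Assumed run length: 145 optimizer steps, 15 of them warmup
for step in (0, 7, 15, 80, 145):
    print(step, linear_warmup_decay_lr(step, 5e-5, 15, 145))
```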

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |
|:-------------:|:-----:|:----:|:---------------:|:--------:|
| 2.1329 | 0.99 | 29 | 1.7492 | 0.8376 |
| 1.3437 | 1.98 | 58 | 1.1638 | 0.9038 |
| 0.9266 | 2.97 | 87 | 0.9013 | 0.8974 |
| 0.7274 | 4.0 | 117 | 0.7345 | 0.9338 |
| 0.6652 | 4.96 | 145 | 0.7210 | 0.9252 |

### Framework versions

- Transformers 4.28.1

- Pytorch 2.0.0+cu118

- Datasets 2.11.0

- Tokenizers 0.13.3

|

[

"african_leopard",

"caracal",

"cheetah",

"clouded_leopard",

"jaguar",

"lion",

"ocelot",

"puma",

"snow_leopard",

"tiger"

] |

Soulaimen/swin-tiny-patch4-window7-224-bottom

|

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# swin-tiny-patch4-window7-224-bottom

This model is a fine-tuned version of [microsoft/swin-tiny-patch4-window7-224](https://huggingface.co/microsoft/swin-tiny-patch4-window7-224) on the imagefolder dataset.

It achieves the following results on the evaluation set:

- Loss: 0.1389

- Accuracy: 0.9513

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- gradient_accumulation_steps: 4

- total_train_batch_size: 32

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_ratio: 0.1

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |
|:-------------:|:-----:|:----:|:---------------:|:--------:|
| 0.3578 | 0.99 | 132 | 0.1946 | 0.9258 |
| 0.2537 | 2.0 | 265 | 0.1389 | 0.9513 |
| 0.1909 | 2.98 | 396 | 0.1285 | 0.9513 |

### Framework versions

- Transformers 4.28.1

- Pytorch 2.0.0+cu118

- Datasets 2.11.0

- Tokenizers 0.13.3

|

[

"jeans",

"legging",

"sweatpants"

] |

Soulaimen/swin-tiny-patch4-window7-224-bottom-112

|

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# swin-tiny-patch4-window7-224-bottom-112

This model is a fine-tuned version of [microsoft/swin-tiny-patch4-window7-224](https://huggingface.co/microsoft/swin-tiny-patch4-window7-224) on the imagefolder dataset.

It achieves the following results on the evaluation set:

- Loss: 0.1603

- Accuracy: 0.9576

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- gradient_accumulation_steps: 4

- total_train_batch_size: 32

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_ratio: 0.1

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |
|:-------------:|:-----:|:----:|:---------------:|:--------:|
| 0.3441 | 0.99 | 132 | 0.1944 | 0.9534 |
| 0.2171 | 2.0 | 265 | 0.1894 | 0.9449 |
| 0.1709 | 2.98 | 396 | 0.1603 | 0.9576 |

### Framework versions

- Transformers 4.28.1

- Pytorch 2.0.0+cu118

- Datasets 2.11.0

- Tokenizers 0.13.3

|

[

"jeans",

"legging",

"sweatpants"

] |

Soulaimen/swin-tiny-patch4-window7-224-bottom-macco

|

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# swin-tiny-patch4-window7-224-bottom-macco

This model is a fine-tuned version of [microsoft/swin-tiny-patch4-window7-224](https://huggingface.co/microsoft/swin-tiny-patch4-window7-224) on the imagefolder dataset.

It achieves the following results on the evaluation set:

- Loss: 0.1369

- Accuracy: 0.9486

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- gradient_accumulation_steps: 4

- total_train_batch_size: 32

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_ratio: 0.1

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |
|:-------------:|:-----:|:----:|:---------------:|:--------:|
| 0.427 | 1.0 | 175 | 0.2480 | 0.9021 |
| 0.241 | 2.0 | 350 | 0.2291 | 0.9085 |
| 0.2771 | 3.0 | 525 | 0.1369 | 0.9486 |

### Framework versions

- Transformers 4.28.1

- Pytorch 2.0.0+cu118

- Datasets 2.11.0

- Tokenizers 0.13.3

|

[

"jeans",

"leggings",

"macco",

"sweatpants"

] |

Soulaimen/swin-tiny-patch4-window7-224-bottom_cleaned_data

|

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# swin-tiny-patch4-window7-224-bottom_cleaned_data

This model is a fine-tuned version of [microsoft/swin-tiny-patch4-window7-224](https://huggingface.co/microsoft/swin-tiny-patch4-window7-224) on the imagefolder dataset.

It achieves the following results on the evaluation set:

- Loss: 0.0839

- Accuracy: 0.9726

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- gradient_accumulation_steps: 4

- total_train_batch_size: 32

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_ratio: 0.01

- num_epochs: 10

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |
|:-------------:|:-----:|:----:|:---------------:|:--------:|
| 0.4444 | 1.0 | 174 | 0.2271 | 0.9163 |
| 0.3518 | 2.0 | 349 | 0.2449 | 0.9034 |
| 0.225 | 3.0 | 523 | 0.1325 | 0.9501 |
| 0.2195 | 4.0 | 698 | 0.1024 | 0.9549 |
| 0.2627 | 5.0 | 872 | 0.1046 | 0.9630 |
| 0.142 | 6.0 | 1047 | 0.0839 | 0.9726 |
| 0.1516 | 7.0 | 1221 | 0.0918 | 0.9630 |
| 0.1498 | 8.0 | 1396 | 0.0780 | 0.9726 |
| 0.1189 | 9.0 | 1570 | 0.0721 | 0.9662 |
| 0.1594 | 9.97 | 1740 | 0.0668 | 0.9726 |

### Framework versions

- Transformers 4.28.1

- Pytorch 2.0.0+cu118

- Datasets 2.11.0

- Tokenizers 0.13.3

|

[

"jeans",

"leggings",

"macco",

"sweatpants"

] |

Soulaimen/swin-tiny-patch4-window7-224-bottom_cleaned_data-hpt

|

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# swin-tiny-patch4-window7-224-bottom_cleaned_data-hpt

This model is a fine-tuned version of [microsoft/swin-tiny-patch4-window7-224](https://huggingface.co/microsoft/swin-tiny-patch4-window7-224) on the imagefolder dataset.

It achieves the following results on the evaluation set:

- Loss: 0.0701

- Accuracy: 0.9694

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- gradient_accumulation_steps: 7

- total_train_batch_size: 56

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_ratio: 0.01

- num_epochs: 10
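
This run raises `gradient_accumulation_steps` to 7. Gradients from seven micro-batches of 8 images are therefore combined before each optimizer update. The toy sketch below uses plain Python, a one-parameter least-squares model, and made-up data; none of it comes from the card. It illustrates why this matches one batch of 56 when each micro-batch's loss is divided by the number of accumulation steps, as accumulation loops typically do: the averaged micro-batch gradients equal the full-batch gradient.

```python
def grad_mse(w: float, batch: list[tuple[float, float]]) -> float:
    """Gradient of the mean squared error of y ≈ w * x over one batch."""
    return sum(2 * (w * x - y) * x for x, y in batch) / len(batch)


def accumulated_grad(w: float, data: list[tuple[float, float]], micro_batch_size: int) -> float:
    """Sum of micro-batch gradients, each scaled by 1 / number of accumulation steps."""
    micro_batches = [data[i:i + micro_batch_size] for i in range(0, len(data), micro_batch_size)]
    total = 0.0
    for mb in micro_batches:
        total += grad_mse(w, mb) / len(micro_batches)
    return total


# Toy data: 56 points = 7 micro-batches of 8, matching this card's batch shape
data = [(float(i), 3.0 * i + 1.0) for i in range(56)]
w = 0.5
full = grad_mse(w, data)
accum = accumulated_grad(w, data, micro_batch_size=8)
print(full, accum)
```

The equivalence is exact only when all micro-batches have the same size. A smaller final micro-batch makes the average of per-batch means differ slightly from the full-batch mean.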

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |
|:-------------:|:-----:|:----:|:---------------:|:--------:|
| 0.4307 | 0.99 | 99 | 0.2332 | 0.9227 |
| 0.3425 | 2.0 | 199 | 0.1904 | 0.9404 |
| 0.29 | 3.0 | 299 | 0.1316 | 0.9388 |
| 0.2597 | 3.99 | 398 | 0.1158 | 0.9533 |
| 0.2638 | 4.99 | 498 | 0.0987 | 0.9614 |
| 0.209 | 6.0 | 598 | 0.0802 | 0.9710 |
| 0.1776 | 7.0 | 698 | 0.0838 | 0.9597 |
| 0.1776 | 7.99 | 797 | 0.0787 | 0.9694 |
| 0.1502 | 9.0 | 897 | 0.0797 | 0.9726 |
| 0.1402 | 9.93 | 990 | 0.0701 | 0.9694 |

### Framework versions

- Transformers 4.28.1

- Pytorch 2.0.0+cu118

- Datasets 2.11.0

- Tokenizers 0.13.3

|

[

"jeans",

"leggings",

"macco",

"sweatpants"

] |

microsoft/focalnet-tiny

|

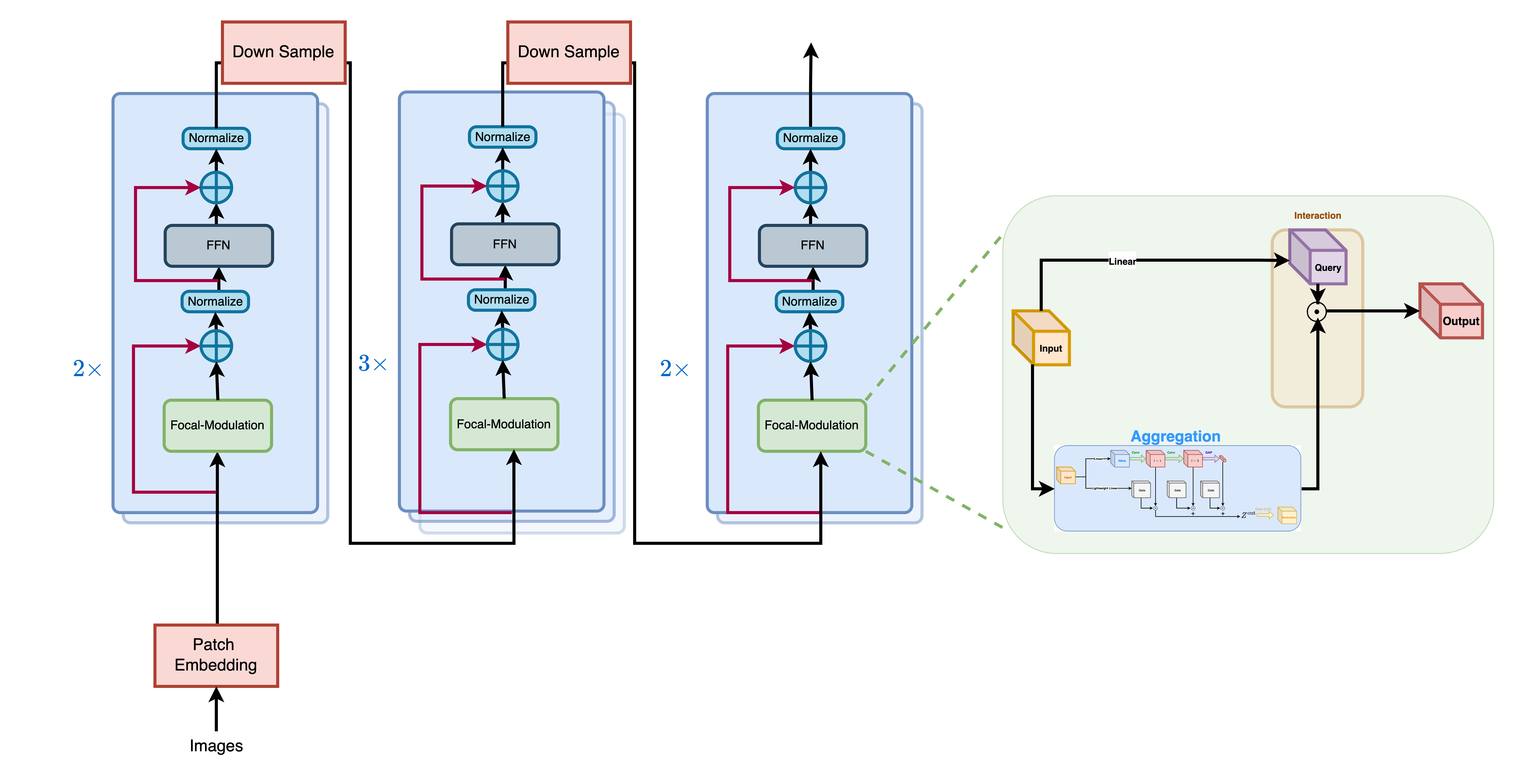

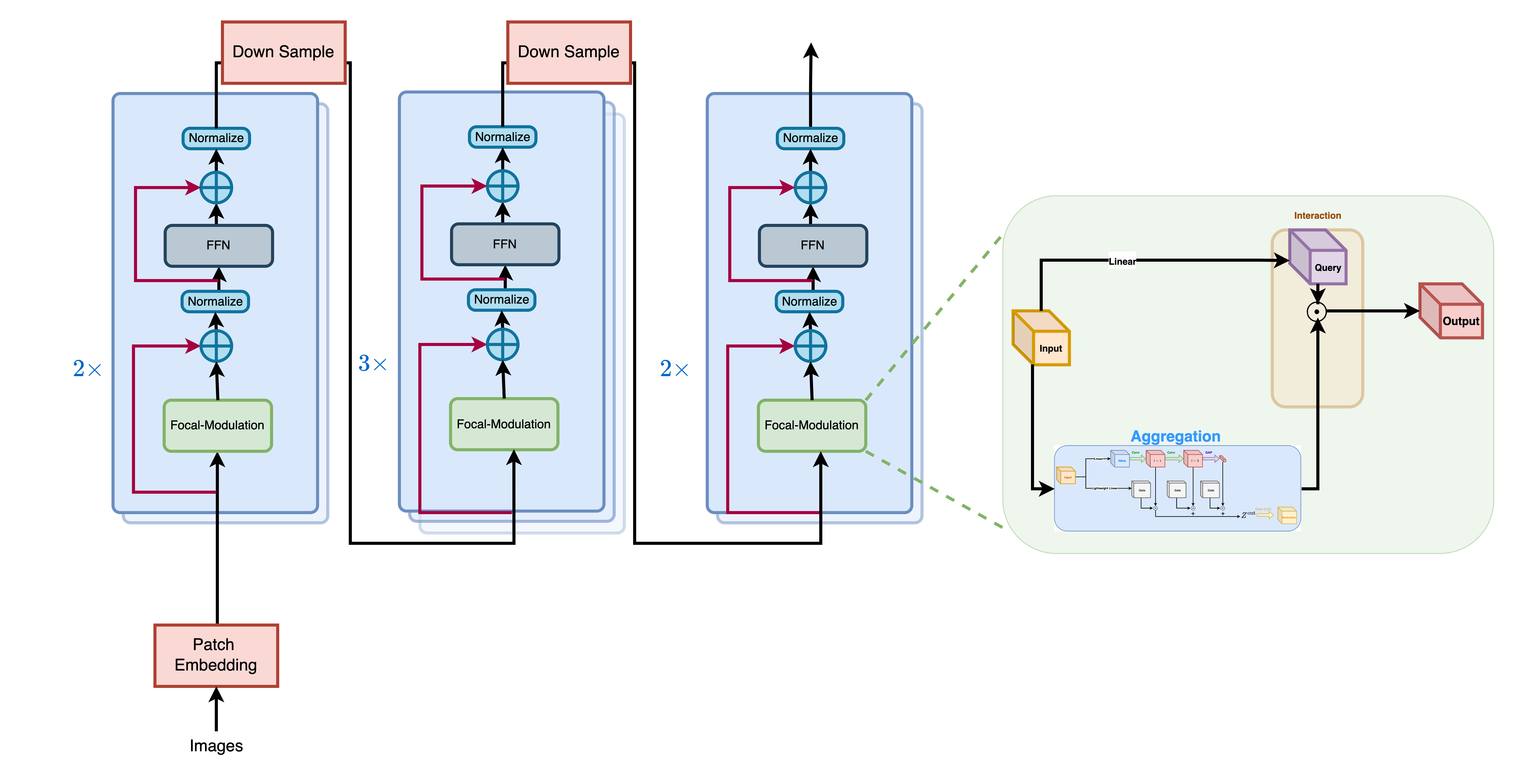

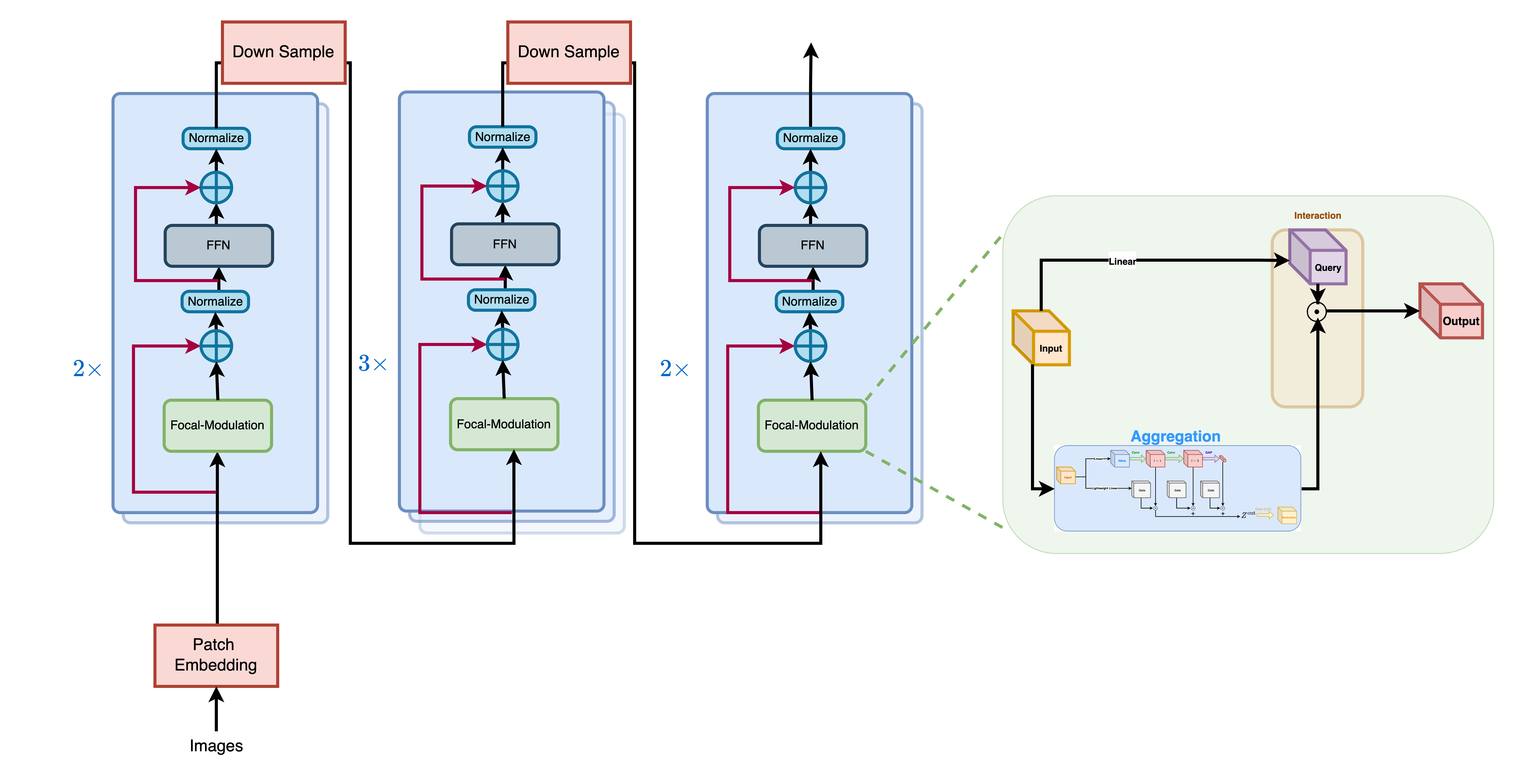

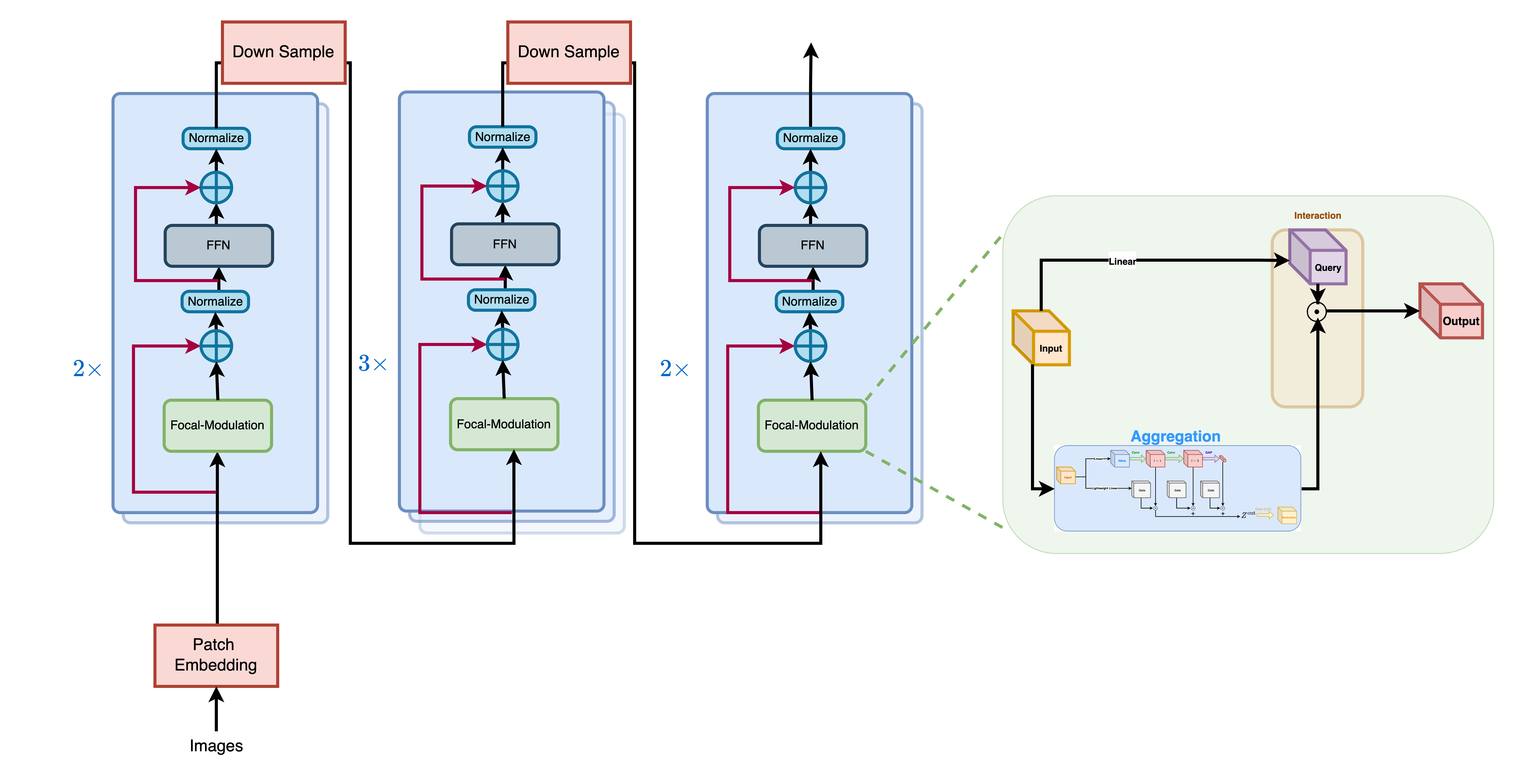

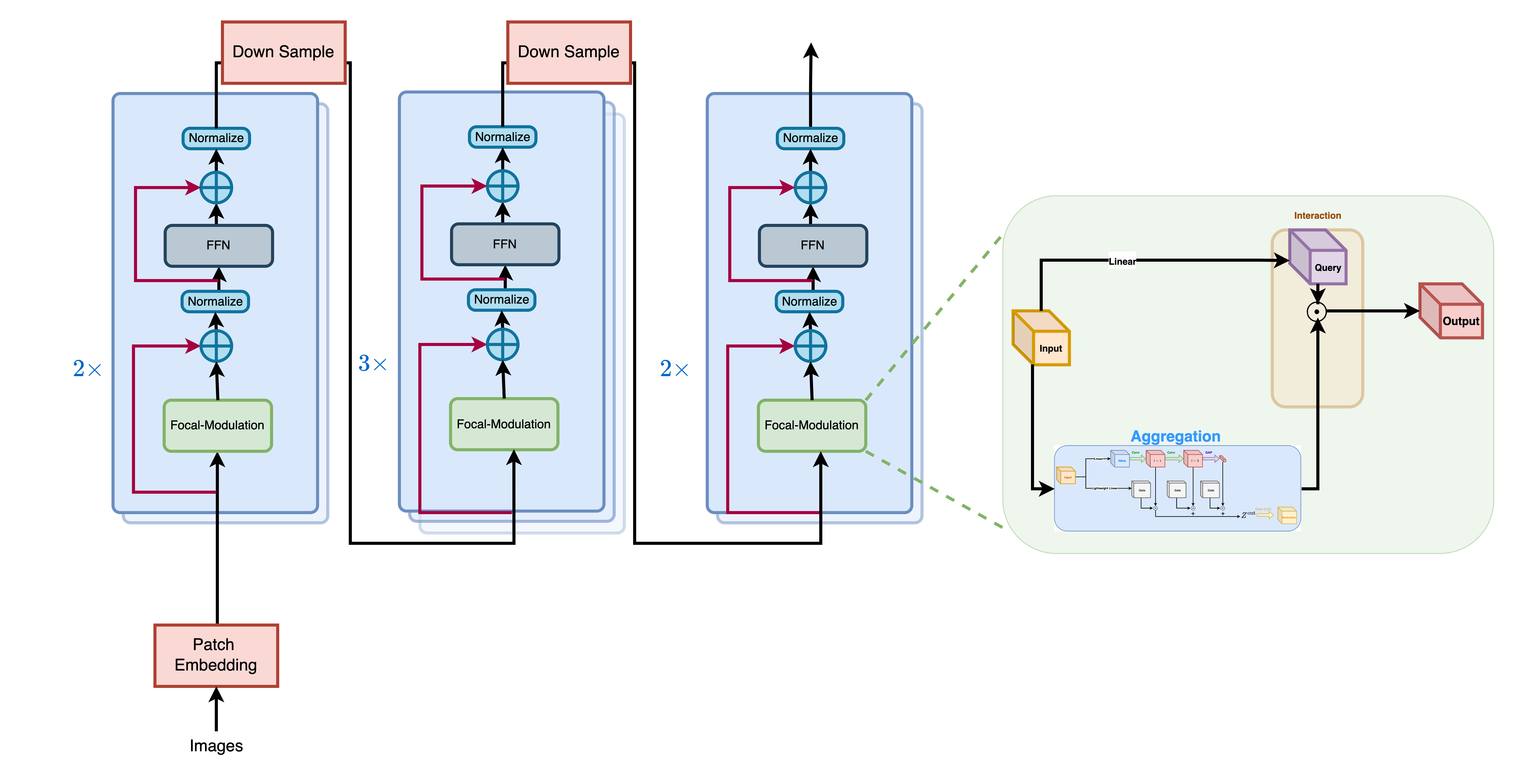

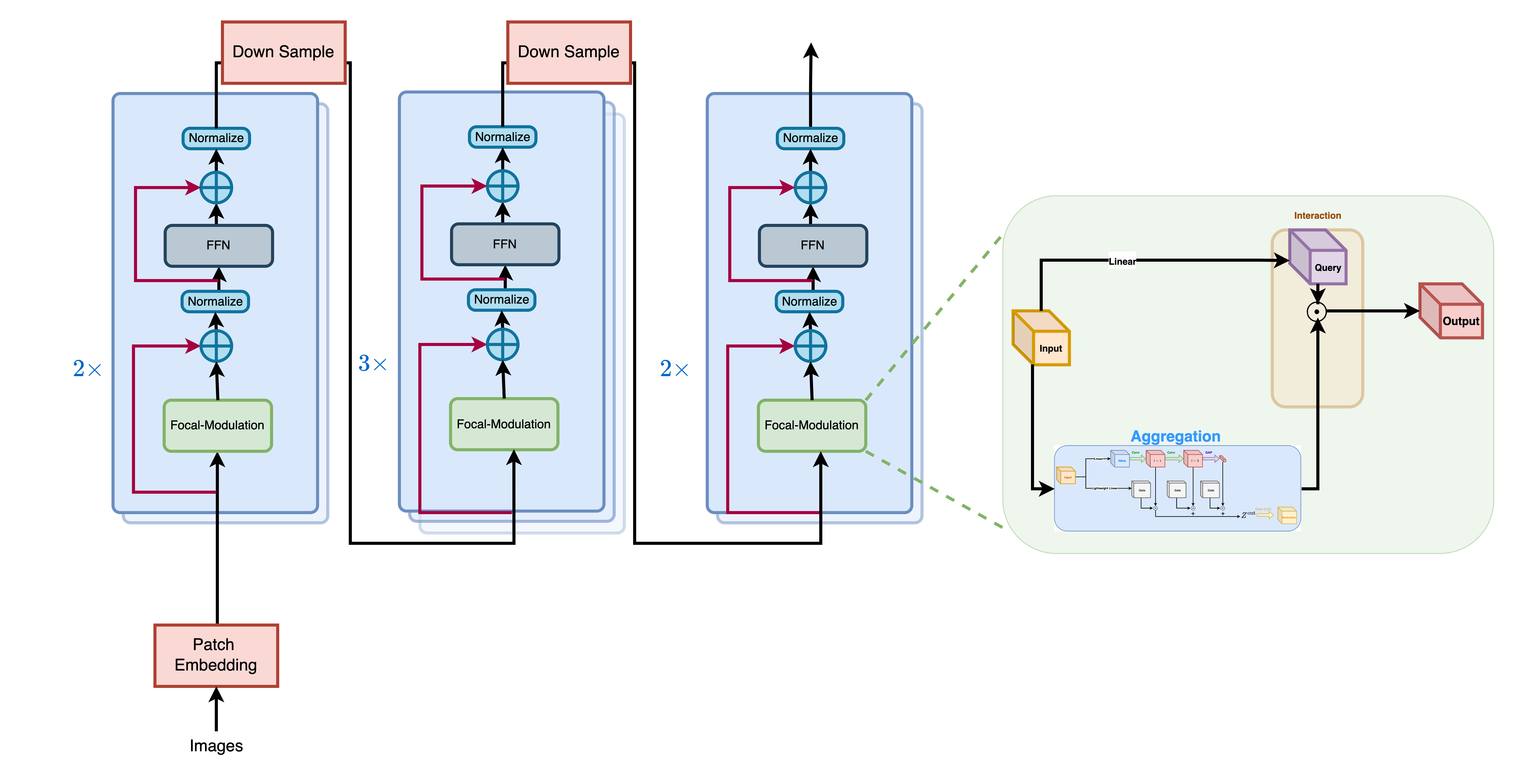

# FocalNet (tiny-sized model)

FocalNet model trained on ImageNet-1k at resolution 224x224. It was introduced in the paper [Focal Modulation Networks](https://arxiv.org/abs/2203.11926) by Yang et al. and first released in [this repository](https://github.com/microsoft/FocalNet).

Disclaimer: The team releasing FocalNet did not write a model card for this model so this model card has been written by the Hugging Face team.

## Model description

Focal Modulation Networks are an alternative to Vision Transformers, where self-attention (SA) is completely replaced by a focal modulation mechanism for modeling token interactions in vision.

Focal modulation comprises three components: (i) hierarchical contextualization, implemented using a stack of depth-wise convolutional layers, to encode visual contexts from short to long ranges, (ii) gated aggregation to selectively gather contexts for each query token based on its content, and (iii) element-wise modulation or affine transformation to inject the aggregated context into the query. Extensive experiments show FocalNets outperform the state-of-the-art SA counterparts (e.g., Vision Transformers, Swin and Focal Transformers) with similar computational costs on the tasks of image classification, object detection, and segmentation.
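
The three components above can be sketched in NumPy for a single feature map. This is a minimal illustration, not the official FocalNet code or the `transformers` implementation. The following are all illustrative assumptions:

- the weight names (`w_q`, `w_z`, `w_g`, `w_h`, `dw_kernels`)
- two focal levels with 3x3 and 5x5 kernels
- random initialization
- the tanh approximation of GELU

Normalization layers, batching, and the final output projection are omitted.

```python
import numpy as np


def gelu(x: np.ndarray) -> np.ndarray:
    """Tanh approximation of GELU."""
    return 0.5 * x * (1.0 + np.tanh(np.sqrt(2.0 / np.pi) * (x + 0.044715 * x ** 3)))


def depthwise_conv2d(z: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """Per-channel 2D convolution with zero 'same' padding. z: (H, W, C), kernel: (k, k, C)."""
    k = kernel.shape[0]
    pad = k // 2
    height, width, _ = z.shape
    padded = np.pad(z, ((pad, pad), (pad, pad), (0, 0)))
    out = np.zeros_like(z)
    for i in range(k):
        for j in range(k):
            out += padded[i:i + height, j:j + width, :] * kernel[i, j, :]
    return out


def focal_modulation(x: np.ndarray, params: dict) -> np.ndarray:
    """One focal-modulation layer applied to a feature map x of shape (H, W, C)."""
    num_levels = len(params["dw_kernels"])
    q = x @ params["w_q"]       # query projection, (H, W, C)
    z = x @ params["w_z"]       # initial context, (H, W, C)
    gates = x @ params["w_g"]   # one gate per focal level plus one global gate, (H, W, L+1)

    aggregated = np.zeros_like(z)
    for level in range(num_levels):
        # (i) hierarchical contextualization: stacked depth-wise convs + GELU
        z = gelu(depthwise_conv2d(z, params["dw_kernels"][level]))
        # (ii) gated aggregation: each level weighted by its own spatial gate
        aggregated += gates[:, :, level:level + 1] * z

    # Global context: spatial average of the last level, broadcast to every position
    z_global = gelu(z.mean(axis=(0, 1), keepdims=True))
    aggregated += gates[:, :, num_levels:] * z_global

    # (iii) element-wise modulation of the query by a linearly mixed context
    modulator = aggregated @ params["w_h"]
    return q * modulator


def init_params(channels: int, kernel_sizes=(3, 5), seed: int = 0) -> dict:
    """Random weights for illustration only."""
    rng = np.random.default_rng(seed)
    scale = 1.0 / np.sqrt(channels)
    return {
        "w_q": rng.normal(0.0, scale, (channels, channels)),
        "w_z": rng.normal(0.0, scale, (channels, channels)),
        "w_g": rng.normal(0.0, scale, (channels, len(kernel_sizes) + 1)),
        "w_h": rng.normal(0.0, scale, (channels, channels)),
        "dw_kernels": [rng.normal(0.0, 1.0 / k, (k, k, channels)) for k in kernel_sizes],
    }


x = np.random.default_rng(1).normal(size=(14, 14, 32))
y = focal_modulation(x, init_params(32))
print(y.shape)  # (14, 14, 32)
```

Unlike self-attention, no pairwise token-to-token weights are computed. Each position only mixes its own query with gated, locally and globally pooled context.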

## Intended uses & limitations

You can use the raw model for image classification. See the [model hub](https://huggingface.co/models?search=focalnet) to look for fine-tuned versions on a task that interests you.

### How to use

Here is how to use this model to classify an image of the COCO 2017 dataset into one of the 1,000 ImageNet classes:

```python
import torch
from datasets import load_dataset
from transformers import AutoImageProcessor, FocalNetForImageClassification

# Example image (a COCO 2017 photo of two cats)
dataset = load_dataset("huggingface/cats-image")
image = dataset["test"]["image"][0]

# AutoImageProcessor loads the preprocessing config stored with the checkpoint
preprocessor = AutoImageProcessor.from_pretrained("microsoft/focalnet-tiny")
model = FocalNetForImageClassification.from_pretrained("microsoft/focalnet-tiny")

inputs = preprocessor(image, return_tensors="pt")

# Inference only, so gradient tracking is disabled
with torch.no_grad():
    logits = model(**inputs).logits

# model predicts one of the 1000 ImageNet classes
predicted_label = logits.argmax(-1).item()
print(model.config.id2label[predicted_label])
```

For more code examples, we refer to the [documentation](https://huggingface.co/docs/transformers/master/en/model_doc/focalnet).
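
The snippet above prints only the single most likely class. The following sketch instead lists the top-k classes with softmax probabilities. The helper `top_k_predictions` is hypothetical and not part of `transformers`. With the model above, it could be called as `top_k_predictions(logits[0].numpy(), model.config.id2label)`. The toy logits in the example are made up.

```python
import numpy as np


def top_k_predictions(logits, id2label, k=5):
    """Return the k most likely (label, probability) pairs for a single image."""
    logits = np.asarray(logits, dtype=np.float64)
    shifted = logits - logits.max()   # subtract the max for numerical stability
    probs = np.exp(shifted) / np.exp(shifted).sum()
    top = np.argsort(probs)[::-1][:k]  # indices sorted by descending probability
    return [(id2label[int(i)], float(probs[i])) for i in top]


# Toy example with made-up logits for three ImageNet-style labels
toy_labels = {0: "tabby, tabby cat", 1: "tiger cat", 2: "egyptian cat"}
print(top_k_predictions([2.0, 1.0, 0.5], toy_labels, k=2))
```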

### BibTeX entry and citation info

```bibtex
@article{DBLP:journals/corr/abs-2203-11926,
  author     = {Jianwei Yang and
                Chunyuan Li and
                Jianfeng Gao},
  title      = {Focal Modulation Networks},
  journal    = {CoRR},
  volume     = {abs/2203.11926},
  year       = {2022},
  url        = {https://doi.org/10.48550/arXiv.2203.11926},
  doi        = {10.48550/arXiv.2203.11926},
  eprinttype = {arXiv},
  eprint     = {2203.11926},
  timestamp  = {Tue, 29 Mar 2022 18:07:24 +0200},
  biburl     = {https://dblp.org/rec/journals/corr/abs-2203-11926.bib},
  bibsource  = {dblp computer science bibliography, https://dblp.org}
}
```

|

[

"tench, tinca tinca",

"goldfish, carassius auratus",

"great white shark, white shark, man-eater, man-eating shark, carcharodon carcharias",

"tiger shark, galeocerdo cuvieri",

"hammerhead, hammerhead shark",

"electric ray, crampfish, numbfish, torpedo",

"stingray",

"cock",

"hen",

"ostrich, struthio camelus",

"brambling, fringilla montifringilla",

"goldfinch, carduelis carduelis",

"house finch, linnet, carpodacus mexicanus",

"junco, snowbird",

"indigo bunting, indigo finch, indigo bird, passerina cyanea",

"robin, american robin, turdus migratorius",

"bulbul",

"jay",

"magpie",

"chickadee",

"water ouzel, dipper",

"kite",

"bald eagle, american eagle, haliaeetus leucocephalus",

"vulture",

"great grey owl, great gray owl, strix nebulosa",

"european fire salamander, salamandra salamandra",

"common newt, triturus vulgaris",

"eft",

"spotted salamander, ambystoma maculatum",

"axolotl, mud puppy, ambystoma mexicanum",

"bullfrog, rana catesbeiana",

"tree frog, tree-frog",

"tailed frog, bell toad, ribbed toad, tailed toad, ascaphus trui",

"loggerhead, loggerhead turtle, caretta caretta",

"leatherback turtle, leatherback, leathery turtle, dermochelys coriacea",

"mud turtle",

"terrapin",

"box turtle, box tortoise",

"banded gecko",

"common iguana, iguana, iguana iguana",

"american chameleon, anole, anolis carolinensis",

"whiptail, whiptail lizard",

"agama",

"frilled lizard, chlamydosaurus kingi",

"alligator lizard",

"gila monster, heloderma suspectum",

"green lizard, lacerta viridis",

"african chameleon, chamaeleo chamaeleon",

"komodo dragon, komodo lizard, dragon lizard, giant lizard, varanus komodoensis",

"african crocodile, nile crocodile, crocodylus niloticus",

"american alligator, alligator mississipiensis",

"triceratops",

"thunder snake, worm snake, carphophis amoenus",

"ringneck snake, ring-necked snake, ring snake",

"hognose snake, puff adder, sand viper",

"green snake, grass snake",

"king snake, kingsnake",

"garter snake, grass snake",

"water snake",

"vine snake",

"night snake, hypsiglena torquata",

"boa constrictor, constrictor constrictor",

"rock python, rock snake, python sebae",

"indian cobra, naja naja",

"green mamba",

"sea snake",

"horned viper, cerastes, sand viper, horned asp, cerastes cornutus",

"diamondback, diamondback rattlesnake, crotalus adamanteus",

"sidewinder, horned rattlesnake, crotalus cerastes",

"trilobite",

"harvestman, daddy longlegs, phalangium opilio",

"scorpion",

"black and gold garden spider, argiope aurantia",

"barn spider, araneus cavaticus",

"garden spider, aranea diademata",

"black widow, latrodectus mactans",

"tarantula",

"wolf spider, hunting spider",

"tick",

"centipede",

"black grouse",

"ptarmigan",

"ruffed grouse, partridge, bonasa umbellus",

"prairie chicken, prairie grouse, prairie fowl",

"peacock",

"quail",

"partridge",

"african grey, african gray, psittacus erithacus",

"macaw",

"sulphur-crested cockatoo, kakatoe galerita, cacatua galerita",

"lorikeet",

"coucal",

"bee eater",

"hornbill",

"hummingbird",

"jacamar",

"toucan",

"drake",

"red-breasted merganser, mergus serrator",

"goose",

"black swan, cygnus atratus",

"tusker",

"echidna, spiny anteater, anteater",

"platypus, duckbill, duckbilled platypus, duck-billed platypus, ornithorhynchus anatinus",

"wallaby, brush kangaroo",

"koala, koala bear, kangaroo bear, native bear, phascolarctos cinereus",

"wombat",

"jellyfish",

"sea anemone, anemone",

"brain coral",

"flatworm, platyhelminth",

"nematode, nematode worm, roundworm",

"conch",

"snail",

"slug",

"sea slug, nudibranch",

"chiton, coat-of-mail shell, sea cradle, polyplacophore",

"chambered nautilus, pearly nautilus, nautilus",

"dungeness crab, cancer magister",

"rock crab, cancer irroratus",

"fiddler crab",

"king crab, alaska crab, alaskan king crab, alaska king crab, paralithodes camtschatica",

"american lobster, northern lobster, maine lobster, homarus americanus",

"spiny lobster, langouste, rock lobster, crawfish, crayfish, sea crawfish",

"crayfish, crawfish, crawdad, crawdaddy",

"hermit crab",

"isopod",

"white stork, ciconia ciconia",

"black stork, ciconia nigra",

"spoonbill",

"flamingo",

"little blue heron, egretta caerulea",

"american egret, great white heron, egretta albus",

"bittern",

"crane",

"limpkin, aramus pictus",

"european gallinule, porphyrio porphyrio",

"american coot, marsh hen, mud hen, water hen, fulica americana",

"bustard",

"ruddy turnstone, arenaria interpres",

"red-backed sandpiper, dunlin, erolia alpina",

"redshank, tringa totanus",

"dowitcher",

"oystercatcher, oyster catcher",

"pelican",

"king penguin, aptenodytes patagonica",

"albatross, mollymawk",

"grey whale, gray whale, devilfish, eschrichtius gibbosus, eschrichtius robustus",

"killer whale, killer, orca, grampus, sea wolf, orcinus orca",

"dugong, dugong dugon",

"sea lion",

"chihuahua",

"japanese spaniel",

"maltese dog, maltese terrier, maltese",

"pekinese, pekingese, peke",

"shih-tzu",

"blenheim spaniel",

"papillon",

"toy terrier",

"rhodesian ridgeback",

"afghan hound, afghan",

"basset, basset hound",

"beagle",

"bloodhound, sleuthhound",

"bluetick",

"black-and-tan coonhound",

"walker hound, walker foxhound",

"english foxhound",

"redbone",

"borzoi, russian wolfhound",

"irish wolfhound",

"italian greyhound",

"whippet",

"ibizan hound, ibizan podenco",

"norwegian elkhound, elkhound",

"otterhound, otter hound",

"saluki, gazelle hound",

"scottish deerhound, deerhound",

"weimaraner",

"staffordshire bullterrier, staffordshire bull terrier",

"american staffordshire terrier, staffordshire terrier, american pit bull terrier, pit bull terrier",

"bedlington terrier",

"border terrier",

"kerry blue terrier",

"irish terrier",

"norfolk terrier",

"norwich terrier",

"yorkshire terrier",

"wire-haired fox terrier",

"lakeland terrier",

"sealyham terrier, sealyham",

"airedale, airedale terrier",

"cairn, cairn terrier",

"australian terrier",

"dandie dinmont, dandie dinmont terrier",

"boston bull, boston terrier",

"miniature schnauzer",

"giant schnauzer",

"standard schnauzer",

"scotch terrier, scottish terrier, scottie",

"tibetan terrier, chrysanthemum dog",

"silky terrier, sydney silky",

"soft-coated wheaten terrier",

"west highland white terrier",

"lhasa, lhasa apso",

"flat-coated retriever",

"curly-coated retriever",

"golden retriever",

"labrador retriever",

"chesapeake bay retriever",

"german short-haired pointer",

"vizsla, hungarian pointer",

"english setter",

"irish setter, red setter",

"gordon setter",

"brittany spaniel",

"clumber, clumber spaniel",

"english springer, english springer spaniel",

"welsh springer spaniel",

"cocker spaniel, english cocker spaniel, cocker",

"sussex spaniel",

"irish water spaniel",

"kuvasz",

"schipperke",

"groenendael",

"malinois",

"briard",

"kelpie",

"komondor",

"old english sheepdog, bobtail",

"shetland sheepdog, shetland sheep dog, shetland",

"collie",

"border collie",

"bouvier des flandres, bouviers des flandres",

"rottweiler",

"german shepherd, german shepherd dog, german police dog, alsatian",

"doberman, doberman pinscher",

"miniature pinscher",

"greater swiss mountain dog",

"bernese mountain dog",

"appenzeller",

"entlebucher",

"boxer",

"bull mastiff",

"tibetan mastiff",

"french bulldog",

"great dane",

"saint bernard, st bernard",

"eskimo dog, husky",

"malamute, malemute, alaskan malamute",

"siberian husky",

"dalmatian, coach dog, carriage dog",

"affenpinscher, monkey pinscher, monkey dog",

"basenji",

"pug, pug-dog",

"leonberg",

"newfoundland, newfoundland dog",

"great pyrenees",

"samoyed, samoyede",

"pomeranian",

"chow, chow chow",

"keeshond",

"brabancon griffon",

"pembroke, pembroke welsh corgi",

"cardigan, cardigan welsh corgi",

"toy poodle",

"miniature poodle",

"standard poodle",

"mexican hairless",

"timber wolf, grey wolf, gray wolf, canis lupus",

"white wolf, arctic wolf, canis lupus tundrarum",

"red wolf, maned wolf, canis rufus, canis niger",

"coyote, prairie wolf, brush wolf, canis latrans",

"dingo, warrigal, warragal, canis dingo",

"dhole, cuon alpinus",

"african hunting dog, hyena dog, cape hunting dog, lycaon pictus",

"hyena, hyaena",

"red fox, vulpes vulpes",

"kit fox, vulpes macrotis",

"arctic fox, white fox, alopex lagopus",

"grey fox, gray fox, urocyon cinereoargenteus",

"tabby, tabby cat",

"tiger cat",

"persian cat",

"siamese cat, siamese",

"egyptian cat",

"cougar, puma, catamount, mountain lion, painter, panther, felis concolor",

"lynx, catamount",

"leopard, panthera pardus",

"snow leopard, ounce, panthera uncia",

"jaguar, panther, panthera onca, felis onca",

"lion, king of beasts, panthera leo",

"tiger, panthera tigris",

"cheetah, chetah, acinonyx jubatus",

"brown bear, bruin, ursus arctos",

"american black bear, black bear, ursus americanus, euarctos americanus",

"ice bear, polar bear, ursus maritimus, thalarctos maritimus",

"sloth bear, melursus ursinus, ursus ursinus",

"mongoose",

"meerkat, mierkat",

"tiger beetle",

"ladybug, ladybeetle, lady beetle, ladybird, ladybird beetle",

"ground beetle, carabid beetle",

"long-horned beetle, longicorn, longicorn beetle",

"leaf beetle, chrysomelid",

"dung beetle",

"rhinoceros beetle",

"weevil",

"fly",

"bee",

"ant, emmet, pismire",

"grasshopper, hopper",

"cricket",

"walking stick, walkingstick, stick insect",

"cockroach, roach",

"mantis, mantid",

"cicada, cicala",

"leafhopper",

"lacewing, lacewing fly",

"dragonfly, darning needle, devil's darning needle, sewing needle, snake feeder, snake doctor, mosquito hawk, skeeter hawk",

"damselfly",

"admiral",

"ringlet, ringlet butterfly",

"monarch, monarch butterfly, milkweed butterfly, danaus plexippus",

"cabbage butterfly",

"sulphur butterfly, sulfur butterfly",

"lycaenid, lycaenid butterfly",

"starfish, sea star",

"sea urchin",

"sea cucumber, holothurian",

"wood rabbit, cottontail, cottontail rabbit",

"hare",

"angora, angora rabbit",

"hamster",

"porcupine, hedgehog",

"fox squirrel, eastern fox squirrel, sciurus niger",

"marmot",

"beaver",

"guinea pig, cavia cobaya",

"sorrel",

"zebra",

"hog, pig, grunter, squealer, sus scrofa",

"wild boar, boar, sus scrofa",

"warthog",

"hippopotamus, hippo, river horse, hippopotamus amphibius",

"ox",

"water buffalo, water ox, asiatic buffalo, bubalus bubalis",

"bison",

"ram, tup",

"bighorn, bighorn sheep, cimarron, rocky mountain bighorn, rocky mountain sheep, ovis canadensis",

"ibex, capra ibex",

"hartebeest",

"impala, aepyceros melampus",

"gazelle",

"arabian camel, dromedary, camelus dromedarius",

"llama",

"weasel",

"mink",

"polecat, fitch, foulmart, foumart, mustela putorius",

"black-footed ferret, ferret, mustela nigripes",

"otter",

"skunk, polecat, wood pussy",

"badger",

"armadillo",

"three-toed sloth, ai, bradypus tridactylus",

"orangutan, orang, orangutang, pongo pygmaeus",

"gorilla, gorilla gorilla",

"chimpanzee, chimp, pan troglodytes",

"gibbon, hylobates lar",

"siamang, hylobates syndactylus, symphalangus syndactylus",

"guenon, guenon monkey",

"patas, hussar monkey, erythrocebus patas",

"baboon",

"macaque",

"langur",

"colobus, colobus monkey",

"proboscis monkey, nasalis larvatus",

"marmoset",

"capuchin, ringtail, cebus capucinus",

"howler monkey, howler",

"titi, titi monkey",

"spider monkey, ateles geoffroyi",

"squirrel monkey, saimiri sciureus",

"madagascar cat, ring-tailed lemur, lemur catta",

"indri, indris, indri indri, indri brevicaudatus",

"indian elephant, elephas maximus",

"african elephant, loxodonta africana",

"lesser panda, red panda, panda, bear cat, cat bear, ailurus fulgens",

"giant panda, panda, panda bear, coon bear, ailuropoda melanoleuca",

"barracouta, snoek",

"eel",

"coho, cohoe, coho salmon, blue jack, silver salmon, oncorhynchus kisutch",

"rock beauty, holocanthus tricolor",

"anemone fish",

"sturgeon",

"gar, garfish, garpike, billfish, lepisosteus osseus",

"lionfish",

"puffer, pufferfish, blowfish, globefish",

"abacus",

"abaya",

"academic gown, academic robe, judge's robe",

"accordion, piano accordion, squeeze box",

"acoustic guitar",

"aircraft carrier, carrier, flattop, attack aircraft carrier",

"airliner",

"airship, dirigible",

"altar",

"ambulance",

"amphibian, amphibious vehicle",

"analog clock",

"apiary, bee house",

"apron",

"ashcan, trash can, garbage can, wastebin, ash bin, ash-bin, ashbin, dustbin, trash barrel, trash bin",

"assault rifle, assault gun",

"backpack, back pack, knapsack, packsack, rucksack, haversack",

"bakery, bakeshop, bakehouse",

"balance beam, beam",

"balloon",

"ballpoint, ballpoint pen, ballpen, biro",

"band aid",

"banjo",

"bannister, banister, balustrade, balusters, handrail",

"barbell",

"barber chair",

"barbershop",

"barn",

"barometer",

"barrel, cask",

"barrow, garden cart, lawn cart, wheelbarrow",

"baseball",

"basketball",

"bassinet",

"bassoon",

"bathing cap, swimming cap",

"bath towel",

"bathtub, bathing tub, bath, tub",

"beach wagon, station wagon, wagon, estate car, beach waggon, station waggon, waggon",

"beacon, lighthouse, beacon light, pharos",

"beaker",

"bearskin, busby, shako",

"beer bottle",

"beer glass",

"bell cote, bell cot",

"bib",

"bicycle-built-for-two, tandem bicycle, tandem",

"bikini, two-piece",

"binder, ring-binder",

"binoculars, field glasses, opera glasses",

"birdhouse",

"boathouse",

"bobsled, bobsleigh, bob",

"bolo tie, bolo, bola tie, bola",

"bonnet, poke bonnet",

"bookcase",

"bookshop, bookstore, bookstall",

"bottlecap",

"bow",

"bow tie, bow-tie, bowtie",

"brass, memorial tablet, plaque",

"brassiere, bra, bandeau",

"breakwater, groin, groyne, mole, bulwark, seawall, jetty",

"breastplate, aegis, egis",

"broom",

"bucket, pail",

"buckle",

"bulletproof vest",

"bullet train, bullet",

"butcher shop, meat market",

"cab, hack, taxi, taxicab",

"caldron, cauldron",

"candle, taper, wax light",

"cannon",

"canoe",

"can opener, tin opener",

"cardigan",

"car mirror",

"carousel, carrousel, merry-go-round, roundabout, whirligig",

"carpenter's kit, tool kit",

"carton",

"car wheel",

"cash machine, cash dispenser, automated teller machine, automatic teller machine, automated teller, automatic teller, atm",

"cassette",

"cassette player",

"castle",

"catamaran",

"cd player",

"cello, violoncello",

"cellular telephone, cellular phone, cellphone, cell, mobile phone",

"chain",

"chainlink fence",

"chain mail, ring mail, mail, chain armor, chain armour, ring armor, ring armour",

"chain saw, chainsaw",

"chest",

"chiffonier, commode",

"chime, bell, gong",

"china cabinet, china closet",

"christmas stocking",

"church, church building",

"cinema, movie theater, movie theatre, movie house, picture palace",

"cleaver, meat cleaver, chopper",

"cliff dwelling",

"cloak",

"clog, geta, patten, sabot",

"cocktail shaker",

"coffee mug",

"coffeepot",

"coil, spiral, volute, whorl, helix",

"combination lock",

"computer keyboard, keypad",

"confectionery, confectionary, candy store",

"container ship, containership, container vessel",

"convertible",

"corkscrew, bottle screw",

"cornet, horn, trumpet, trump",

"cowboy boot",

"cowboy hat, ten-gallon hat",

"cradle",

"crane",

"crash helmet",

"crate",

"crib, cot",

"crock pot",

"croquet ball",

"crutch",

"cuirass",

"dam, dike, dyke",

"desk",

"desktop computer",

"dial telephone, dial phone",

"diaper, nappy, napkin",

"digital clock",

"digital watch",

"dining table, board",

"dishrag, dishcloth",

"dishwasher, dish washer, dishwashing machine",

"disk brake, disc brake",

"dock, dockage, docking facility",

"dogsled, dog sled, dog sleigh",

"dome",

"doormat, welcome mat",

"drilling platform, offshore rig",

"drum, membranophone, tympan",

"drumstick",

"dumbbell",

"dutch oven",

"electric fan, blower",

"electric guitar",

"electric locomotive",

"entertainment center",

"envelope",

"espresso maker",

"face powder",

"feather boa, boa",

"file, file cabinet, filing cabinet",

"fireboat",

"fire engine, fire truck",

"fire screen, fireguard",

"flagpole, flagstaff",

"flute, transverse flute",

"folding chair",

"football helmet",

"forklift",

"fountain",

"fountain pen",

"four-poster",

"freight car",

"french horn, horn",

"frying pan, frypan, skillet",

"fur coat",

"garbage truck, dustcart",

"gasmask, respirator, gas helmet",

"gas pump, gasoline pump, petrol pump, island dispenser",

"goblet",

"go-kart",

"golf ball",

"golfcart, golf cart",

"gondola",

"gong, tam-tam",

"gown",

"grand piano, grand",

"greenhouse, nursery, glasshouse",

"grille, radiator grille",

"grocery store, grocery, food market, market",

"guillotine",

"hair slide",

"hair spray",

"half track",

"hammer",

"hamper",

"hand blower, blow dryer, blow drier, hair dryer, hair drier",

"hand-held computer, hand-held microcomputer",

"handkerchief, hankie, hanky, hankey",

"hard disc, hard disk, fixed disk",

"harmonica, mouth organ, harp, mouth harp",

"harp",

"harvester, reaper",

"hatchet",

"holster",

"home theater, home theatre",

"honeycomb",

"hook, claw",

"hoopskirt, crinoline",

"horizontal bar, high bar",

"horse cart, horse-cart",

"hourglass",

"ipod",

"iron, smoothing iron",

"jack-o'-lantern",

"jean, blue jean, denim",

"jeep, landrover",

"jersey, t-shirt, tee shirt",

"jigsaw puzzle",

"jinrikisha, ricksha, rickshaw",

"joystick",

"kimono",

"knee pad",

"knot",

"lab coat, laboratory coat",

"ladle",

"lampshade, lamp shade",

"laptop, laptop computer",

"lawn mower, mower",

"lens cap, lens cover",

"letter opener, paper knife, paperknife",

"library",

"lifeboat",

"lighter, light, igniter, ignitor",

"limousine, limo",

"liner, ocean liner",

"lipstick, lip rouge",

"loafer",

"lotion",

"loudspeaker, speaker, speaker unit, loudspeaker system, speaker system",

"loupe, jeweler's loupe",

"lumbermill, sawmill",

"magnetic compass",

"mailbag, postbag",

"mailbox, letter box",

"maillot",

"maillot, tank suit",

"manhole cover",

"maraca",

"marimba, xylophone",

"mask",

"matchstick",

"maypole",

"maze, labyrinth",

"measuring cup",

"medicine chest, medicine cabinet",

"megalith, megalithic structure",

"microphone, mike",

"microwave, microwave oven",

"military uniform",

"milk can",

"minibus",

"miniskirt, mini",

"minivan",

"missile",

"mitten",

"mixing bowl",

"mobile home, manufactured home",

"model t",

"modem",

"monastery",

"monitor",

"moped",

"mortar",

"mortarboard",

"mosque",

"mosquito net",

"motor scooter, scooter",

"mountain bike, all-terrain bike, off-roader",

"mountain tent",

"mouse, computer mouse",

"mousetrap",

"moving van",

"muzzle",

"nail",

"neck brace",

"necklace",

"nipple",

"notebook, notebook computer",

"obelisk",

"oboe, hautboy, hautbois",

"ocarina, sweet potato",

"odometer, hodometer, mileometer, milometer",

"oil filter",

"organ, pipe organ",

"oscilloscope, scope, cathode-ray oscilloscope, cro",

"overskirt",

"oxcart",

"oxygen mask",

"packet",

"paddle, boat paddle",

"paddlewheel, paddle wheel",

"padlock",

"paintbrush",

"pajama, pyjama, pj's, jammies",

"palace",

"panpipe, pandean pipe, syrinx",

"paper towel",

"parachute, chute",

"parallel bars, bars",

"park bench",

"parking meter",

"passenger car, coach, carriage",

"patio, terrace",

"pay-phone, pay-station",

"pedestal, plinth, footstall",

"pencil box, pencil case",

"pencil sharpener",

"perfume, essence",

"petri dish",

"photocopier",

"pick, plectrum, plectron",

"pickelhaube",

"picket fence, paling",

"pickup, pickup truck",

"pier",

"piggy bank, penny bank",

"pill bottle",

"pillow",

"ping-pong ball",

"pinwheel",

"pirate, pirate ship",

"pitcher, ewer",

"plane, carpenter's plane, woodworking plane",

"planetarium",

"plastic bag",

"plate rack",

"plow, plough",

"plunger, plumber's helper",

"polaroid camera, polaroid land camera",

"pole",

"police van, police wagon, paddy wagon, patrol wagon, wagon, black maria",

"poncho",

"pool table, billiard table, snooker table",

"pop bottle, soda bottle",

"pot, flowerpot",

"potter's wheel",

"power drill",

"prayer rug, prayer mat",

"printer",

"prison, prison house",

"projectile, missile",

"projector",

"puck, hockey puck",

"punching bag, punch bag, punching ball, punchball",

"purse",

"quill, quill pen",

"quilt, comforter, comfort, puff",

"racer, race car, racing car",

"racket, racquet",

"radiator",

"radio, wireless",

"radio telescope, radio reflector",

"rain barrel",

"recreational vehicle, rv, r.v.",

"reel",

"reflex camera",

"refrigerator, icebox",

"remote control, remote",

"restaurant, eating house, eating place, eatery",

"revolver, six-gun, six-shooter",

"rifle",

"rocking chair, rocker",

"rotisserie",

"rubber eraser, rubber, pencil eraser",

"rugby ball",

"rule, ruler",

"running shoe",

"safe",

"safety pin",

"saltshaker, salt shaker",

"sandal",

"sarong",

"sax, saxophone",

"scabbard",

"scale, weighing machine",

"school bus",

"schooner",

"scoreboard",

"screen, crt screen",

"screw",

"screwdriver",

"seat belt, seatbelt",

"sewing machine",

"shield, buckler",

"shoe shop, shoe-shop, shoe store",

"shoji",

"shopping basket",

"shopping cart",

"shovel",

"shower cap",

"shower curtain",

"ski",

"ski mask",

"sleeping bag",

"slide rule, slipstick",

"sliding door",

"slot, one-armed bandit",

"snorkel",

"snowmobile",

"snowplow, snowplough",

"soap dispenser",

"soccer ball",

"sock",

"solar dish, solar collector, solar furnace",

"sombrero",

"soup bowl",

"space bar",

"space heater",

"space shuttle",

"spatula",

"speedboat",

"spider web, spider's web",

"spindle",

"sports car, sport car",

"spotlight, spot",

"stage",

"steam locomotive",

"steel arch bridge",

"steel drum",

"stethoscope",

"stole",

"stone wall",

"stopwatch, stop watch",

"stove",

"strainer",

"streetcar, tram, tramcar, trolley, trolley car",

"stretcher",

"studio couch, day bed",

"stupa, tope",

"submarine, pigboat, sub, u-boat",

"suit, suit of clothes",

"sundial",

"sunglass",

"sunglasses, dark glasses, shades",

"sunscreen, sunblock, sun blocker",

"suspension bridge",

"swab, swob, mop",

"sweatshirt",

"swimming trunks, bathing trunks",

"swing",

"switch, electric switch, electrical switch",

"syringe",

"table lamp",

"tank, army tank, armored combat vehicle, armoured combat vehicle",

"tape player",

"teapot",

"teddy, teddy bear",

"television, television system",

"tennis ball",

"thatch, thatched roof",

"theater curtain, theatre curtain",

"thimble",

"thresher, thrasher, threshing machine",

"throne",

"tile roof",

"toaster",

"tobacco shop, tobacconist shop, tobacconist",

"toilet seat",

"torch",

"totem pole",

"tow truck, tow car, wrecker",

"toyshop",

"tractor",

"trailer truck, tractor trailer, trucking rig, rig, articulated lorry, semi",

"tray",

"trench coat",

"tricycle, trike, velocipede",

"trimaran",

"tripod",

"triumphal arch",

"trolleybus, trolley coach, trackless trolley",

"trombone",

"tub, vat",

"turnstile",

"typewriter keyboard",

"umbrella",

"unicycle, monocycle",

"upright, upright piano",

"vacuum, vacuum cleaner",

"vase",

"vault",

"velvet",

"vending machine",

"vestment",

"viaduct",

"violin, fiddle",

"volleyball",

"waffle iron",

"wall clock",

"wallet, billfold, notecase, pocketbook",

"wardrobe, closet, press",

"warplane, military plane",

"washbasin, handbasin, washbowl, lavabo, wash-hand basin",

"washer, automatic washer, washing machine",

"water bottle",

"water jug",

"water tower",

"whiskey jug",

"whistle",

"wig",

"window screen",

"window shade",

"windsor tie",

"wine bottle",

"wing",

"wok",

"wooden spoon",

"wool, woolen, woollen",

"worm fence, snake fence, snake-rail fence, virginia fence",

"wreck",

"yawl",

"yurt",

"web site, website, internet site, site",

"comic book",

"crossword puzzle, crossword",

"street sign",

"traffic light, traffic signal, stoplight",

"book jacket, dust cover, dust jacket, dust wrapper",

"menu",

"plate",

"guacamole",

"consomme",

"hot pot, hotpot",

"trifle",

"ice cream, icecream",

"ice lolly, lolly, lollipop, popsicle",

"french loaf",

"bagel, beigel",

"pretzel",

"cheeseburger",

"hotdog, hot dog, red hot",

"mashed potato",

"head cabbage",

"broccoli",

"cauliflower",

"zucchini, courgette",

"spaghetti squash",

"acorn squash",

"butternut squash",

"cucumber, cuke",

"artichoke, globe artichoke",

"bell pepper",

"cardoon",

"mushroom",

"granny smith",

"strawberry",

"orange",

"lemon",

"fig",

"pineapple, ananas",

"banana",

"jackfruit, jak, jack",

"custard apple",

"pomegranate",

"hay",

"carbonara",

"chocolate sauce, chocolate syrup",

"dough",

"meat loaf, meatloaf",

"pizza, pizza pie",

"potpie",

"burrito",

"red wine",

"espresso",

"cup",

"eggnog",

"alp",

"bubble",

"cliff, drop, drop-off",

"coral reef",

"geyser",

"lakeside, lakeshore",

"promontory, headland, head, foreland",

"sandbar, sand bar",

"seashore, coast, seacoast, sea-coast",

"valley, vale",

"volcano",

"ballplayer, baseball player",

"groom, bridegroom",

"scuba diver",

"rapeseed",

"daisy",

"yellow lady's slipper, yellow lady-slipper, cypripedium calceolus, cypripedium parviflorum",

"corn",

"acorn",

"hip, rose hip, rosehip",

"buckeye, horse chestnut, conker",

"coral fungus",

"agaric",

"gyromitra",

"stinkhorn, carrion fungus",

"earthstar",

"hen-of-the-woods, hen of the woods, polyporus frondosus, grifola frondosa",

"bolete",

"ear, spike, capitulum",

"toilet tissue, toilet paper, bathroom tissue"

] |

microsoft/focalnet-tiny-lrf

|

# FocalNet (tiny-sized large receptive field model)

FocalNet model trained on ImageNet-1k at resolution 224x224. It was introduced in the paper [Focal Modulation Networks](https://arxiv.org/abs/2203.11926) by Yang et al. and first released in [this repository](https://github.com/microsoft/FocalNet).

Disclaimer: The team releasing FocalNet did not write a model card for this model so this model card has been written by the Hugging Face team.

## Model description

Focal Modulation Networks are an alternative to Vision Transformers in which self-attention (SA) is completely replaced by a focal modulation mechanism for modeling token interactions in vision.

Focal modulation comprises three components: (i) hierarchical contextualization, implemented using a stack of depth-wise convolutional layers, to encode visual contexts from short to long ranges; (ii) gated aggregation, to selectively gather contexts for each query token based on its content; and (iii) element-wise modulation or affine transformation, to inject the aggregated context into the query. Extensive experiments show that FocalNets outperform state-of-the-art SA counterparts (e.g., Vision Transformers, Swin and Focal Transformers) at similar computational cost on image classification, object detection, and segmentation.
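To make the three components concrete, here is a minimal NumPy sketch of one focal modulation step on a single (H, W, C) feature map. It is not the microsoft/FocalNet implementation: the weight names (`w_q`, `w_z`, `w_g`, `w_h`), the kernel sizes `[3, 5, 7]`, the tanh approximation of GELU and the random initialization are assumptions chosen for illustration, and biases and normalization layers are omitted.

```python
import numpy as np


def gelu(x):
    # tanh approximation of the GELU activation
    return 0.5 * x * (1.0 + np.tanh(np.sqrt(2.0 / np.pi) * (x + 0.044715 * x ** 3)))


def depthwise_conv2d(x, kernel):
    """Per-channel 2-D convolution with zero 'same' padding.

    x: (H, W, C) feature map; kernel: (k, k, C) with odd k.
    """
    k = kernel.shape[0]
    p = k // 2
    h, w, _ = x.shape
    padded = np.pad(x, ((p, p), (p, p), (0, 0)))
    out = np.zeros_like(x)
    for di in range(k):
        for dj in range(k):
            # each channel is filtered only by its own kernel slice (depth-wise)
            out += padded[di:di + h, dj:dj + w, :] * kernel[di, dj, :]
    return out


def focal_modulation(x, params):
    """Toy focal modulation for one (H, W, C) feature map."""
    q = x @ params["w_q"]        # query projection, (H, W, C)
    z = x @ params["w_z"]        # initial context projection, (H, W, C)
    gates = x @ params["w_g"]    # one gate per level plus global, (H, W, L + 1)

    # (i) hierarchical contextualization: stacked depth-wise convs, short to long range
    contexts = []
    for kernel in params["dw_kernels"]:
        z = gelu(depthwise_conv2d(z, kernel))
        contexts.append(z)
    # extra global level: spatial average of the last level, broadcast to every position
    contexts.append(gelu(z.mean(axis=(0, 1), keepdims=True)))

    # (ii) gated aggregation: weight each level by its per-position gate and sum
    aggregated = sum(gates[..., l:l + 1] * ctx for l, ctx in enumerate(contexts))

    # (iii) element-wise modulation of the query by the projected context
    modulator = aggregated @ params["w_h"]
    return q * modulator


def init_params(channels, kernel_sizes, rng):
    """Random weights for illustration only (not trained FocalNet weights)."""
    scale = channels ** -0.5
    return {
        "w_q": rng.normal(scale=scale, size=(channels, channels)),
        "w_z": rng.normal(scale=scale, size=(channels, channels)),
        "w_g": rng.normal(scale=scale, size=(channels, len(kernel_sizes) + 1)),
        "w_h": rng.normal(scale=scale, size=(channels, channels)),
        "dw_kernels": [rng.normal(scale=1.0 / k, size=(k, k, channels)) for k in kernel_sizes],
    }


rng = np.random.default_rng(0)
x = rng.normal(size=(8, 8, 16))
params = init_params(16, [3, 5, 7], rng)
y = focal_modulation(x, params)
print(y.shape)  # (8, 8, 16)
```

Unlike self-attention, no token-to-token attention matrix is formed: context is gathered by convolutions and pooling first, and each query is then modulated element-wise by it.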

## Intended uses & limitations

You can use the raw model for image classification. See the [model hub](https://huggingface.co/models?search=focalnet) to look for fine-tuned versions on a task that interests you.

### How to use

Here is how to use this model to classify an image of the COCO 2017 dataset into one of the 1,000 ImageNet classes:

```python
from transformers import AutoImageProcessor, FocalNetForImageClassification
import torch
from datasets import load_dataset

dataset = load_dataset("huggingface/cats-image")
image = dataset["test"]["image"][0]

# AutoImageProcessor loads the image processor configured for this checkpoint
preprocessor = AutoImageProcessor.from_pretrained("microsoft/focalnet-tiny-lrf")
model = FocalNetForImageClassification.from_pretrained("microsoft/focalnet-tiny-lrf")

inputs = preprocessor(image, return_tensors="pt")

with torch.no_grad():
    logits = model(**inputs).logits

# model predicts one of the 1000 ImageNet classes
predicted_label = logits.argmax(-1).item()
print(model.config.id2label[predicted_label])
```
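The snippet above reports only the single most likely class. To inspect several candidates, one option is to turn the logits into softmax probabilities and keep the top k. The sketch below assumes NumPy, and `top_k_labels` is a hypothetical helper, not part of transformers; with the model above you could call it as `top_k_labels(logits[0].numpy(), model.config.id2label)`. The toy three-entry dictionary stands in for the real 1,000-class mapping.

```python
import numpy as np


def top_k_labels(logits, id2label, k=5):
    """Return the k most probable (label, probability) pairs for one image."""
    logits = np.asarray(logits, dtype=np.float64)
    # subtract the max before exponentiating for a numerically stable softmax
    exp = np.exp(logits - logits.max())
    probs = exp / exp.sum()
    # indices sorted from most to least probable, truncated to k
    top = np.argsort(probs)[::-1][:k]
    return [(id2label[int(i)], float(probs[i])) for i in top]


toy_id2label = {0: "tench", 1: "goldfish", 2: "great white shark"}
for label, prob in top_k_labels([0.1, 2.0, 1.0], toy_id2label, k=2):
    print(f"{label}: {prob:.3f}")
```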

For more code examples, we refer to the [documentation](https://huggingface.co/docs/transformers/master/en/model_doc/focalnet).

### BibTeX entry and citation info

```bibtex
@article{DBLP:journals/corr/abs-2203-11926,
  author     = {Jianwei Yang and
                Chunyuan Li and
                Jianfeng Gao},
  title      = {Focal Modulation Networks},
  journal    = {CoRR},
  volume     = {abs/2203.11926},
  year       = {2022},
  url        = {https://doi.org/10.48550/arXiv.2203.11926},
  doi        = {10.48550/arXiv.2203.11926},
  eprinttype = {arXiv},
  eprint     = {2203.11926},
  timestamp  = {Tue, 29 Mar 2022 18:07:24 +0200},
  biburl     = {https://dblp.org/rec/journals/corr/abs-2203-11926.bib},
  bibsource  = {dblp computer science bibliography, https://dblp.org}
}
```

|

[

"tench, tinca tinca",

"goldfish, carassius auratus",

"great white shark, white shark, man-eater, man-eating shark, carcharodon carcharias",

"tiger shark, galeocerdo cuvieri",

"hammerhead, hammerhead shark",

"electric ray, crampfish, numbfish, torpedo",

"stingray",

"cock",

"hen",

"ostrich, struthio camelus",

"brambling, fringilla montifringilla",

"goldfinch, carduelis carduelis",

"house finch, linnet, carpodacus mexicanus",

"junco, snowbird",

"indigo bunting, indigo finch, indigo bird, passerina cyanea",

"robin, american robin, turdus migratorius",

"bulbul",

"jay",

"magpie",

"chickadee",

"water ouzel, dipper",

"kite",

"bald eagle, american eagle, haliaeetus leucocephalus",

"vulture",

"great grey owl, great gray owl, strix nebulosa",

"european fire salamander, salamandra salamandra",

"common newt, triturus vulgaris",

"eft",

"spotted salamander, ambystoma maculatum",

"axolotl, mud puppy, ambystoma mexicanum",

"bullfrog, rana catesbeiana",

"tree frog, tree-frog",

"tailed frog, bell toad, ribbed toad, tailed toad, ascaphus trui",

"loggerhead, loggerhead turtle, caretta caretta",

"leatherback turtle, leatherback, leathery turtle, dermochelys coriacea",

"mud turtle",

"terrapin",

"box turtle, box tortoise",

"banded gecko",

"common iguana, iguana, iguana iguana",

"american chameleon, anole, anolis carolinensis",

"whiptail, whiptail lizard",

"agama",

"frilled lizard, chlamydosaurus kingi",

"alligator lizard",

"gila monster, heloderma suspectum",

"green lizard, lacerta viridis",

"african chameleon, chamaeleo chamaeleon",

"komodo dragon, komodo lizard, dragon lizard, giant lizard, varanus komodoensis",

"african crocodile, nile crocodile, crocodylus niloticus",

"american alligator, alligator mississipiensis",

"triceratops",

"thunder snake, worm snake, carphophis amoenus",

"ringneck snake, ring-necked snake, ring snake",

"hognose snake, puff adder, sand viper",

"green snake, grass snake",

"king snake, kingsnake",

"garter snake, grass snake",

"water snake",

"vine snake",

"night snake, hypsiglena torquata",

"boa constrictor, constrictor constrictor",

"rock python, rock snake, python sebae",

"indian cobra, naja naja",

"green mamba",

"sea snake",

"horned viper, cerastes, sand viper, horned asp, cerastes cornutus",

"diamondback, diamondback rattlesnake, crotalus adamanteus",

"sidewinder, horned rattlesnake, crotalus cerastes",

"trilobite",

"harvestman, daddy longlegs, phalangium opilio",

"scorpion",

"black and gold garden spider, argiope aurantia",

"barn spider, araneus cavaticus",

"garden spider, aranea diademata",

"black widow, latrodectus mactans",

"tarantula",

"wolf spider, hunting spider",

"tick",

"centipede",

"black grouse",

"ptarmigan",

"ruffed grouse, partridge, bonasa umbellus",

"prairie chicken, prairie grouse, prairie fowl",

"peacock",

"quail",

"partridge",

"african grey, african gray, psittacus erithacus",

"macaw",

"sulphur-crested cockatoo, kakatoe galerita, cacatua galerita",

"lorikeet",

"coucal",

"bee eater",

"hornbill",

"hummingbird",

"jacamar",

"toucan",

"drake",

"red-breasted merganser, mergus serrator",

"goose",

"black swan, cygnus atratus",

"tusker",

"echidna, spiny anteater, anteater",

"platypus, duckbill, duckbilled platypus, duck-billed platypus, ornithorhynchus anatinus",

"wallaby, brush kangaroo",

"koala, koala bear, kangaroo bear, native bear, phascolarctos cinereus",

"wombat",

"jellyfish",

"sea anemone, anemone",

"brain coral",

"flatworm, platyhelminth",

"nematode, nematode worm, roundworm",

"conch",

"snail",

"slug",

"sea slug, nudibranch",

"chiton, coat-of-mail shell, sea cradle, polyplacophore",

"chambered nautilus, pearly nautilus, nautilus",

"dungeness crab, cancer magister",

"rock crab, cancer irroratus",

"fiddler crab",

"king crab, alaska crab, alaskan king crab, alaska king crab, paralithodes camtschatica",

"american lobster, northern lobster, maine lobster, homarus americanus",

"spiny lobster, langouste, rock lobster, crawfish, crayfish, sea crawfish",

"crayfish, crawfish, crawdad, crawdaddy",

"hermit crab",

"isopod",

"white stork, ciconia ciconia",

"black stork, ciconia nigra",

"spoonbill",

"flamingo",

"little blue heron, egretta caerulea",

"american egret, great white heron, egretta albus",

"bittern",

"crane",

"limpkin, aramus pictus",

"european gallinule, porphyrio porphyrio",

"american coot, marsh hen, mud hen, water hen, fulica americana",

"bustard",

"ruddy turnstone, arenaria interpres",

"red-backed sandpiper, dunlin, erolia alpina",

"redshank, tringa totanus",

"dowitcher",

"oystercatcher, oyster catcher",

"pelican",

"king penguin, aptenodytes patagonica",

"albatross, mollymawk",

"grey whale, gray whale, devilfish, eschrichtius gibbosus, eschrichtius robustus",

"killer whale, killer, orca, grampus, sea wolf, orcinus orca",

"dugong, dugong dugon",

"sea lion",

"chihuahua",

"japanese spaniel",

"maltese dog, maltese terrier, maltese",

"pekinese, pekingese, peke",

"shih-tzu",

"blenheim spaniel",

"papillon",

"toy terrier",

"rhodesian ridgeback",

"afghan hound, afghan",

"basset, basset hound",

"beagle",

"bloodhound, sleuthhound",

"bluetick",

"black-and-tan coonhound",

"walker hound, walker foxhound",

"english foxhound",

"redbone",

"borzoi, russian wolfhound",

"irish wolfhound",

"italian greyhound",

"whippet",

"ibizan hound, ibizan podenco",

"norwegian elkhound, elkhound",

"otterhound, otter hound",

"saluki, gazelle hound",

"scottish deerhound, deerhound",

"weimaraner",

"staffordshire bullterrier, staffordshire bull terrier",

"american staffordshire terrier, staffordshire terrier, american pit bull terrier, pit bull terrier",

"bedlington terrier",

"border terrier",

"kerry blue terrier",

"irish terrier",

"norfolk terrier",

"norwich terrier",

"yorkshire terrier",

"wire-haired fox terrier",

"lakeland terrier",

"sealyham terrier, sealyham",

"airedale, airedale terrier",

"cairn, cairn terrier",

"australian terrier",

"dandie dinmont, dandie dinmont terrier",

"boston bull, boston terrier",

"miniature schnauzer",

"giant schnauzer",

"standard schnauzer",

"scotch terrier, scottish terrier, scottie",

"tibetan terrier, chrysanthemum dog",

"silky terrier, sydney silky",

"soft-coated wheaten terrier",

"west highland white terrier",

"lhasa, lhasa apso",

"flat-coated retriever",

"curly-coated retriever",

"golden retriever",

"labrador retriever",

"chesapeake bay retriever",

"german short-haired pointer",

"vizsla, hungarian pointer",

"english setter",

"irish setter, red setter",

"gordon setter",

"brittany spaniel",

"clumber, clumber spaniel",

"english springer, english springer spaniel",

"welsh springer spaniel",

"cocker spaniel, english cocker spaniel, cocker",

"sussex spaniel",

"irish water spaniel",

"kuvasz",

"schipperke",

"groenendael",

"malinois",

"briard",

"kelpie",

"komondor",

"old english sheepdog, bobtail",

"shetland sheepdog, shetland sheep dog, shetland",

"collie",

"border collie",

"bouvier des flandres, bouviers des flandres",

"rottweiler",

"german shepherd, german shepherd dog, german police dog, alsatian",

"doberman, doberman pinscher",

"miniature pinscher",

"greater swiss mountain dog",

"bernese mountain dog",

"appenzeller",

"entlebucher",

"boxer",

"bull mastiff",

"tibetan mastiff",

"french bulldog",

"great dane",

"saint bernard, st bernard",

"eskimo dog, husky",

"malamute, malemute, alaskan malamute",

"siberian husky",

"dalmatian, coach dog, carriage dog",

"affenpinscher, monkey pinscher, monkey dog",

"basenji",

"pug, pug-dog",

"leonberg",

"newfoundland, newfoundland dog",

"great pyrenees",

"samoyed, samoyede",

"pomeranian",

"chow, chow chow",

"keeshond",

"brabancon griffon",

"pembroke, pembroke welsh corgi",

"cardigan, cardigan welsh corgi",

"toy poodle",

"miniature poodle",

"standard poodle",

"mexican hairless",

"timber wolf, grey wolf, gray wolf, canis lupus",

"white wolf, arctic wolf, canis lupus tundrarum",

"red wolf, maned wolf, canis rufus, canis niger",

"coyote, prairie wolf, brush wolf, canis latrans",

"dingo, warrigal, warragal, canis dingo",

"dhole, cuon alpinus",

"african hunting dog, hyena dog, cape hunting dog, lycaon pictus",

"hyena, hyaena",

"red fox, vulpes vulpes",

"kit fox, vulpes macrotis",

"arctic fox, white fox, alopex lagopus",

"grey fox, gray fox, urocyon cinereoargenteus",

"tabby, tabby cat",

"tiger cat",

"persian cat",

"siamese cat, siamese",

"egyptian cat",

"cougar, puma, catamount, mountain lion, painter, panther, felis concolor",

"lynx, catamount",

"leopard, panthera pardus",

"snow leopard, ounce, panthera uncia",

"jaguar, panther, panthera onca, felis onca",

"lion, king of beasts, panthera leo",

"tiger, panthera tigris",

"cheetah, chetah, acinonyx jubatus",

"brown bear, bruin, ursus arctos",

"american black bear, black bear, ursus americanus, euarctos americanus",

"ice bear, polar bear, ursus maritimus, thalarctos maritimus",

"sloth bear, melursus ursinus, ursus ursinus",

"mongoose",

"meerkat, mierkat",

"tiger beetle",

"ladybug, ladybeetle, lady beetle, ladybird, ladybird beetle",

"ground beetle, carabid beetle",

"long-horned beetle, longicorn, longicorn beetle",

"leaf beetle, chrysomelid",

"dung beetle",

"rhinoceros beetle",

"weevil",

"fly",

"bee",

"ant, emmet, pismire",

"grasshopper, hopper",

"cricket",

"walking stick, walkingstick, stick insect",

"cockroach, roach",

"mantis, mantid",

"cicada, cicala",

"leafhopper",

"lacewing, lacewing fly",

"dragonfly, darning needle, devil's darning needle, sewing needle, snake feeder, snake doctor, mosquito hawk, skeeter hawk",

"damselfly",

"admiral",

"ringlet, ringlet butterfly",

"monarch, monarch butterfly, milkweed butterfly, danaus plexippus",

"cabbage butterfly",

"sulphur butterfly, sulfur butterfly",

"lycaenid, lycaenid butterfly",

"starfish, sea star",

"sea urchin",

"sea cucumber, holothurian",

"wood rabbit, cottontail, cottontail rabbit",

"hare",

"angora, angora rabbit",

"hamster",

"porcupine, hedgehog",

"fox squirrel, eastern fox squirrel, sciurus niger",

"marmot",

"beaver",

"guinea pig, cavia cobaya",

"sorrel",

"zebra",

"hog, pig, grunter, squealer, sus scrofa",

"wild boar, boar, sus scrofa",

"warthog",

"hippopotamus, hippo, river horse, hippopotamus amphibius",

"ox",

"water buffalo, water ox, asiatic buffalo, bubalus bubalis",

"bison",

"ram, tup",

"bighorn, bighorn sheep, cimarron, rocky mountain bighorn, rocky mountain sheep, ovis canadensis",

"ibex, capra ibex",

"hartebeest",

"impala, aepyceros melampus",

"gazelle",

"arabian camel, dromedary, camelus dromedarius",

"llama",

"weasel",

"mink",

"polecat, fitch, foulmart, foumart, mustela putorius",

"black-footed ferret, ferret, mustela nigripes",

"otter",

"skunk, polecat, wood pussy",

"badger",

"armadillo",

"three-toed sloth, ai, bradypus tridactylus",

"orangutan, orang, orangutang, pongo pygmaeus",

"gorilla, gorilla gorilla",

"chimpanzee, chimp, pan troglodytes",

"gibbon, hylobates lar",

"siamang, hylobates syndactylus, symphalangus syndactylus",

"guenon, guenon monkey",

"patas, hussar monkey, erythrocebus patas",

"baboon",

"macaque",

"langur",

"colobus, colobus monkey",

"proboscis monkey, nasalis larvatus",

"marmoset",

"capuchin, ringtail, cebus capucinus",

"howler monkey, howler",

"titi, titi monkey",

"spider monkey, ateles geoffroyi",

"squirrel monkey, saimiri sciureus",

"madagascar cat, ring-tailed lemur, lemur catta",

"indri, indris, indri indri, indri brevicaudatus",

"indian elephant, elephas maximus",

"african elephant, loxodonta africana",

"lesser panda, red panda, panda, bear cat, cat bear, ailurus fulgens",

"giant panda, panda, panda bear, coon bear, ailuropoda melanoleuca",

"barracouta, snoek",

"eel",

"coho, cohoe, coho salmon, blue jack, silver salmon, oncorhynchus kisutch",

"rock beauty, holocanthus tricolor",

"anemone fish",

"sturgeon",

"gar, garfish, garpike, billfish, lepisosteus osseus",

"lionfish",

"puffer, pufferfish, blowfish, globefish",

"abacus",

"abaya",

"academic gown, academic robe, judge's robe",

"accordion, piano accordion, squeeze box",

"acoustic guitar",

"aircraft carrier, carrier, flattop, attack aircraft carrier",

"airliner",

"airship, dirigible",

"altar",

"ambulance",

"amphibian, amphibious vehicle",

"analog clock",

"apiary, bee house",

"apron",

"ashcan, trash can, garbage can, wastebin, ash bin, ash-bin, ashbin, dustbin, trash barrel, trash bin",

"assault rifle, assault gun",

"backpack, back pack, knapsack, packsack, rucksack, haversack",

"bakery, bakeshop, bakehouse",

"balance beam, beam",

"balloon",

"ballpoint, ballpoint pen, ballpen, biro",

"band aid",

"banjo",

"bannister, banister, balustrade, balusters, handrail",

"barbell",

"barber chair",

"barbershop",

"barn",

"barometer",

"barrel, cask",

"barrow, garden cart, lawn cart, wheelbarrow",

"baseball",

"basketball",

"bassinet",

"bassoon",

"bathing cap, swimming cap",

"bath towel",

"bathtub, bathing tub, bath, tub",

"beach wagon, station wagon, wagon, estate car, beach waggon, station waggon, waggon",

"beacon, lighthouse, beacon light, pharos",

"beaker",

"bearskin, busby, shako",

"beer bottle",

"beer glass",

"bell cote, bell cot",

"bib",

"bicycle-built-for-two, tandem bicycle, tandem",

"bikini, two-piece",

"binder, ring-binder",

"binoculars, field glasses, opera glasses",

"birdhouse",

"boathouse",

"bobsled, bobsleigh, bob",

"bolo tie, bolo, bola tie, bola",

"bonnet, poke bonnet",

"bookcase",

"bookshop, bookstore, bookstall",

"bottlecap",

"bow",

"bow tie, bow-tie, bowtie",

"brass, memorial tablet, plaque",

"brassiere, bra, bandeau",

"breakwater, groin, groyne, mole, bulwark, seawall, jetty",

"breastplate, aegis, egis",

"broom",

"bucket, pail",

"buckle",

"bulletproof vest",

"bullet train, bullet",

"butcher shop, meat market",

"cab, hack, taxi, taxicab",

"caldron, cauldron",

"candle, taper, wax light",

"cannon",

"canoe",

"can opener, tin opener",

"cardigan",

"car mirror",

"carousel, carrousel, merry-go-round, roundabout, whirligig",

"carpenter's kit, tool kit",

"carton",

"car wheel",

"cash machine, cash dispenser, automated teller machine, automatic teller machine, automated teller, automatic teller, atm",

"cassette",

"cassette player",

"castle",

"catamaran",

"cd player",

"cello, violoncello",

"cellular telephone, cellular phone, cellphone, cell, mobile phone",

"chain",

"chainlink fence",

"chain mail, ring mail, mail, chain armor, chain armour, ring armor, ring armour",

"chain saw, chainsaw",

"chest",

"chiffonier, commode",

"chime, bell, gong",

"china cabinet, china closet",

"christmas stocking",

"church, church building",

"cinema, movie theater, movie theatre, movie house, picture palace",

"cleaver, meat cleaver, chopper",

"cliff dwelling",

"cloak",

"clog, geta, patten, sabot",

"cocktail shaker",

"coffee mug",

"coffeepot",

"coil, spiral, volute, whorl, helix",

"combination lock",

"computer keyboard, keypad",

"confectionery, confectionary, candy store",

"container ship, containership, container vessel",

"convertible",

"corkscrew, bottle screw",

"cornet, horn, trumpet, trump",

"cowboy boot",

"cowboy hat, ten-gallon hat",

"cradle",

"crane",

"crash helmet",

"crate",

"crib, cot",

"crock pot",

"croquet ball",

"crutch",

"cuirass",

"dam, dike, dyke",

"desk",

"desktop computer",

"dial telephone, dial phone",

"diaper, nappy, napkin",

"digital clock",

"digital watch",

"dining table, board",

"dishrag, dishcloth",

"dishwasher, dish washer, dishwashing machine",

"disk brake, disc brake",

"dock, dockage, docking facility",

"dogsled, dog sled, dog sleigh",

"dome",

"doormat, welcome mat",

"drilling platform, offshore rig",

"drum, membranophone, tympan",

"drumstick",

"dumbbell",

"dutch oven",

"electric fan, blower",

"electric guitar",

"electric locomotive",

"entertainment center",

"envelope",

"espresso maker",

"face powder",

"feather boa, boa",

"file, file cabinet, filing cabinet",

"fireboat",

"fire engine, fire truck",

"fire screen, fireguard",

"flagpole, flagstaff",

"flute, transverse flute",

"folding chair",

"football helmet",

"forklift",

"fountain",

"fountain pen",

"four-poster",

"freight car",

"french horn, horn",

"frying pan, frypan, skillet",

"fur coat",

"garbage truck, dustcart",

"gasmask, respirator, gas helmet",

"gas pump, gasoline pump, petrol pump, island dispenser",

"goblet",

"go-kart",

"golf ball",

"golfcart, golf cart",

"gondola",

"gong, tam-tam",

"gown",

"grand piano, grand",

"greenhouse, nursery, glasshouse",

"grille, radiator grille",

"grocery store, grocery, food market, market",

"guillotine",

"hair slide",

"hair spray",

"half track",

"hammer",

"hamper",

"hand blower, blow dryer, blow drier, hair dryer, hair drier",

"hand-held computer, hand-held microcomputer",

"handkerchief, hankie, hanky, hankey",

"hard disc, hard disk, fixed disk",

"harmonica, mouth organ, harp, mouth harp",

"harp",

"harvester, reaper",

"hatchet",

"holster",

"home theater, home theatre",

"honeycomb",

"hook, claw",

"hoopskirt, crinoline",

"horizontal bar, high bar",

"horse cart, horse-cart",

"hourglass",

"ipod",

"iron, smoothing iron",

"jack-o'-lantern",

"jean, blue jean, denim",

"jeep, landrover",

"jersey, t-shirt, tee shirt",

"jigsaw puzzle",

"jinrikisha, ricksha, rickshaw",

"joystick",

"kimono",

"knee pad",

"knot",

"lab coat, laboratory coat",

"ladle",

"lampshade, lamp shade",

"laptop, laptop computer",

"lawn mower, mower",

"lens cap, lens cover",

"letter opener, paper knife, paperknife",

"library",

"lifeboat",

"lighter, light, igniter, ignitor",

"limousine, limo",

"liner, ocean liner",

"lipstick, lip rouge",

"loafer",

"lotion",

"loudspeaker, speaker, speaker unit, loudspeaker system, speaker system",

"loupe, jeweler's loupe",

"lumbermill, sawmill",

"magnetic compass",

"mailbag, postbag",

"mailbox, letter box",

"maillot",

"maillot, tank suit",

"manhole cover",

"maraca",

"marimba, xylophone",

"mask",

"matchstick",

"maypole",

"maze, labyrinth",

"measuring cup",

"medicine chest, medicine cabinet",

"megalith, megalithic structure",

"microphone, mike",

"microwave, microwave oven",

"military uniform",

"milk can",

"minibus",

"miniskirt, mini",

"minivan",

"missile",

"mitten",

"mixing bowl",

"mobile home, manufactured home",

"model t",

"modem",

"monastery",

"monitor",

"moped",

"mortar",

"mortarboard",

"mosque",

"mosquito net",

"motor scooter, scooter",

"mountain bike, all-terrain bike, off-roader",

"mountain tent",

"mouse, computer mouse",

"mousetrap",

"moving van",

"muzzle",

"nail",

"neck brace",

"necklace",

"nipple",

"notebook, notebook computer",

"obelisk",

"oboe, hautboy, hautbois",

"ocarina, sweet potato",

"odometer, hodometer, mileometer, milometer",

"oil filter",

"organ, pipe organ",

"oscilloscope, scope, cathode-ray oscilloscope, cro",

"overskirt",

"oxcart",

"oxygen mask",

"packet",

"paddle, boat paddle",

"paddlewheel, paddle wheel",

"padlock",

"paintbrush",

"pajama, pyjama, pj's, jammies",

"palace",

"panpipe, pandean pipe, syrinx",

"paper towel",

"parachute, chute",

"parallel bars, bars",

"park bench",

"parking meter",

"passenger car, coach, carriage",

"patio, terrace",

"pay-phone, pay-station",

"pedestal, plinth, footstall",

"pencil box, pencil case",

"pencil sharpener",

"perfume, essence",

"petri dish",

"photocopier",

"pick, plectrum, plectron",

"pickelhaube",

"picket fence, paling",

"pickup, pickup truck",

"pier",

"piggy bank, penny bank",

"pill bottle",

"pillow",

"ping-pong ball",

"pinwheel",

"pirate, pirate ship",

"pitcher, ewer",

"plane, carpenter's plane, woodworking plane",

"planetarium",

"plastic bag",

"plate rack",

"plow, plough",

"plunger, plumber's helper",

"polaroid camera, polaroid land camera",

"pole",

"police van, police wagon, paddy wagon, patrol wagon, wagon, black maria",

"poncho",

"pool table, billiard table, snooker table",