script

stringlengths 113

767k

|

|---|

import numpy as np # linear algebra

import pandas as pd # data processing, CSV file I/O (e.g. pd.read_csv)

# Input data files are available in the read-only "../input/" directory

# For example, running this (by clicking run or pressing Shift+Enter) will list all files under the input directory

import os

for dirname, _, filenames in os.walk("/kaggle/input"):

for filename in filenames:

print(os.path.join(dirname, filename))

# You can write up to 20GB to the current directory (/kaggle/working/) that gets preserved as output when you create a version using "Save & Run All"

# You can also write temporary files to /kaggle/temp/, but they won't be saved outside of the current session

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

import seaborn as sns

epl_df = pd.read_csv("../input/english-premier-league202021/EPL_20_21.csv")

epl_df.head()

epl_df.info()

epl_df.describe()

epl_df.isna().sum()

epl_df[" MinsPerMatch"] = (epl_df["Mins"] / epl_df["Matches"]).astype(int)

epl_df[" GoalsPerMatch"] = (epl_df["Goals"] / epl_df["Matches"]).astype(float)

print(epl_df.head())

##total Goal

Total_Goals = epl_df["Goals"].sum()

print(Total_Goals)

Total_PenaltyGoals = epl_df["Penalty_Goals"].sum()

print(Total_PenaltyGoals)

Total_PenaltyAttempts = epl_df["Penalty_Attempted"].sum()

print(Total_PenaltyAttempts)

import os, sys

plt.figure(figsize=(13, 6))

pl_not_scored = epl_df["Penalty_Attempted"].sum() - Total_PenaltyGoals

data = [pl_not_scored, Total_PenaltyGoals]

labels = ["Penalty missed", "Penalties Scored"]

color = sns.color_palette("Set2")

plt.pie(data, labels=labels, colors=color, autopct="%.0f%%")

plt.show()

# unique position

epl_df["Position"].unique()

## total fw

epl_df[epl_df["Position"] == "FW"]

##plyer nation

np.size((epl_df["Nationality"].unique()))

##most player come

nationality = epl_df.groupby("Nationality").size().sort_values(ascending=False)

nationality.head(10).plot(kind="bar", figsize=(12, 6), color=sns.color_palette("magma"))

# max player in sqad

epl_df["Club"].value_counts().nlargest(5).plot(

kind="bar", color=sns.color_palette("viridis")

)

# player

epl_df["Club"].value_counts().nsmallest(5).plot(

kind="bar", color=sns.color_palette("viridis")

)

under20 = epl_df[epl_df["Age"] <= 20]

under20_25 = epl_df[(epl_df["Age"] > 20) & (epl_df["Age"] < 25)]

under25_30 = epl_df[(epl_df["Age"] > 25) & (epl_df["Age"] < 30)]

Above30 = epl_df[epl_df["Age"] > 30]

x = np.array(

[

under20["Name"].count(),

under20_25["Name"].count(),

under25_30["Name"].count(),

Above30["Name"].count(),

]

)

mylabels = ["<=20", ">20 & <=25", ">25 & <=30", ">30"]

plt.title("Total Player With Age", fontsize=20)

plt.pie(x, labels=mylabels, autopct="%.1f%%")

plt.show()

##total under20

players_under20 = epl_df[epl_df["Age"] < 20]

players_under20["Club"].value_counts().plot(

kind="bar", color=sns.color_palette("cubehelix")

)

# under 20 manu

players_under20[players_under20["Club"] == "Manchester United"]

# under 20 chelsi

players_under20[players_under20["Club"] == "Chelsea"]

## avarage age

plt.figure(figsize=(12, 6))

sns.boxenplot(x="Club", y="Age", data=epl_df)

plt.xticks(rotation=90)

num_player = epl_df.groupby("Club").size()

data = (epl_df.groupby("Club")["Age"].sum()) / num_player

data.sort_values(ascending=False)

## total assist

|

# # **Point Couds with zarr**

# Generate Point Clouds while leveraging efficient image loading with zarr. Since we will be able to use all surfaces let's see if we can denoise the point cloud.

# Credit: https://www.kaggle.com/code/brettolsen/efficient-image-loading-with-zarr/notebookimport os

import os

import shutil

from tifffile import tifffile

import time

import numpy as np

import PIL.Image as Image

import matplotlib.pyplot as plt

import matplotlib.patches as patches

from tqdm import tqdm

from ipywidgets import interact, fixed

from IPython.display import HTML, display

import zarr

import open3d as o3

INPUT_FOLDER = "/kaggle/input/vesuvius-challenge-ink-detection"

WORKING_FOLDER = "/kaggle/working/"

TEMP_FOLDER = "kaggle/temp/"

class TimerError(Exception):

pass

class Timer:

def __init__(self, text=None):

if text is not None:

self.text = text + ": {:0.4f} seconds"

else:

self.text = "Elapsed time: {:0.4f} seconds"

def logfunc(x):

print(x)

self.logger = logfunc

self._start_time = None

def start(self):

if self._start_time is not None:

raise TimerError("Timer is already running. Use .stop() to stop it.")

self._start_time = time.time()

def stop(self):

if self._start_time is None:

raise TimerError("Timer is not running. Use .start() to start it.")

elapsed_time = time.time() - self._start_time

self._start_time = None

if self.logger is not None:

self.logger(self.text.format(elapsed_time))

return elapsed_time

def __enter__(self):

self.start()

return self

def __exit__(self, exc_type, exc_value, exc_traceback):

self.stop()

class FragmentImageException(Exception):

pass

class FragmentImageData:

"""A general class that uses persistent zarr objects to store the surface volume data,

binary data mask, and for training sets, the truth data and infrared image of a papyrus

fragment, in a compressed and efficient way.

"""

def __init__(self, sample_type: str, sample_index: str, working: bool = True):

if sample_type not in ("test, train"):

raise FragmentImageException(

f"Invalid sample type f{sample_type}, must be one of 'test' or 'train'"

)

zarrpath = self._zarr_path(sample_type, sample_index, working)

if os.path.exists(zarrpath):

self.zarr = self.load_from_zarr(zarrpath)

else:

dirpath = os.path.join(INPUT_FOLDER, sample_type, sample_index)

if not os.path.exists(dirpath):

raise FragmentImageException(

f"No input data found at f{zarrpath} or f{dirpath}"

)

self.zarr = self.load_from_directory(dirpath, zarrpath)

@property

def surface_volume(self):

return self.zarr.surface_volume

@property

def mask(self):

return self.zarr.mask

@property

def truth(self):

return self.zarr.truth

@property

def infrared(self):

return self.zarr.infrared

@staticmethod

def _zarr_path(sample_type: str, sample_index: str, working: bool = True):

filename = f"{sample_type}-{sample_index}.zarr"

if working:

return os.path.join(WORKING_FOLDER, filename)

else:

return os.path.join(TEMP_FOLDER, filename)

@staticmethod

def clean_zarr(sample_type: str, sample_index: str, working: bool = True):

zarrpath = FragmentImageData._zarr_path(sample_type, sample_index, working)

if os.path.exists(zarrpath):

shutil.rmtree(zarrpath)

@staticmethod

def load_from_zarr(filepath):

with Timer("Loading from existing zarr"):

return zarr.open(filepath, mode="r")

@staticmethod

def load_from_directory(dirpath, zarrpath):

if os.path.exists(zarrpath):

raise FragmentImageException(

f"Trying to overwrite existing zarr at f{zarrpath}"

)

# Initialize the root zarr group and write the file

root = zarr.open_group(zarrpath, mode="w")

# Load in the surface volume tif files

with Timer("Surface volume loading"):

init = True

imgfiles = sorted(

[

imgfile

for imgfile in os.listdir(os.path.join(dirpath, "surface_volume"))

]

)

for imgfile in imgfiles:

print(f"Loading file {imgfile}", end="\r")

# img_data = np.array(

# Image.open(os.path.join(dirpath, "surface_volume", imgfile))

# )

img_data = tifffile.imread(

os.path.join(dirpath, "surface_volume", imgfile)

)

if init:

surface_volume = root.zeros(

name="surface_volume",

shape=(img_data.shape[0], img_data.shape[1], len(imgfiles)),

chunks=(1000, 1000, 4),

dtype=img_data.dtype,

write_empty_chunks=False,

)

init = False

z_index = int(imgfile.split(".")[0])

surface_volume[:, :, z_index] = img_data

# Load in the mask

with Timer("Mask loading"):

img_data = np.array(

Image.open(os.path.join(dirpath, "mask.png")), dtype=bool

)

mask = root.array(

name="mask",

data=img_data,

shape=img_data.shape,

chunks=(1000, 1000),

dtype=img_data.dtype,

write_empty_chunks=False,

)

# Load in the truth set (if it exists)

with Timer("Truth set loading"):

truthfile = os.path.join(dirpath, "inklabels.png")

if os.path.exists(truthfile):

img_data = np.array(Image.open(truthfile), dtype=bool)

truth = root.array(

name="truth",

data=img_data,

shape=img_data.shape,

chunks=(1000, 1000),

dtype=img_data.dtype,

write_empty_chunks=False,

)

# Load in the infrared image (if it exists)

with Timer("Infrared image loading"):

irfile = os.path.join(dirpath, "ir.png")

if os.path.exists(irfile):

img_data = np.array(Image.open(irfile))

infrared = root.array(

name="infrared",

data=img_data,

shape=img_data.shape,

chunks=(1000, 1000),

dtype=img_data.dtype,

write_empty_chunks=False,

)

return root

# # Load data

FragmentImageData.clean_zarr("train", 1)

data = FragmentImageData("train", "1")

print(data.surface_volume.info)

print(data.mask.info)

print(data.truth.info)

print(data.infrared.info)

with Timer():

plt.imshow(data.mask, cmap="gray")

with Timer():

plt.imshow(data.surface_volume[:, :, 20], cmap="gray")

# ### Plot vertical slices of the surface volumes

with Timer():

plt.figure(figsize=(10, 1))

plt.imshow(data.surface_volume[2000, :, :].T, cmap="gray", aspect="auto")

with Timer():

plt.figure(figsize=(10, 1))

plt.imshow(data.surface_volume[:, 2000, :].T, cmap="gray", aspect="auto")

# # Create Point Cloud

# ## Sample from Surface Volumes

ROWS = data.surface_volume.shape[0]

COLS = data.surface_volume.shape[1]

Z_DIM = data.surface_volume.shape[2] # number of volume slices

N_SAMPLES = 10000

with Timer():

# sample from valid regions of surface volume

c = np.ravel(data.mask).cumsum()

samples = np.random.uniform(low=0, high=c[-1], size=(N_SAMPLES, Z_DIM)).astype(int)

# get valid indexes

x, y = np.unravel_index(c.searchsorted(samples), data.mask.shape)

x, y = x[np.newaxis, ...], y[np.newaxis, ...]

# get z dimensions from surface volume locations

z = np.arange(0, Z_DIM)

z = np.tile(z, N_SAMPLES).reshape(N_SAMPLES, -1)[np.newaxis, ...]

# get point cloud

xyz = np.vstack((x, y, z))

xyz.shape

# ### Get Normalized Intensities

intensities = np.zeros((N_SAMPLES, Z_DIM))

with Timer():

for i in range(Z_DIM):

img = data.surface_volume[:, :, i]

intensities[:, i] = img[xyz[0, :, i], xyz[1, :, i]] / 65535.0

intensities = intensities.astype(np.float32)

# #### Sanity Check

print(xyz[:, 20, 1], intensities[20, 1])

print(

xyz.T.reshape((-1, 3))[20 + N_SAMPLES, :],

intensities.T.reshape((-1))[20 + N_SAMPLES],

)

# ### Reshape and Normalize

xyz = xyz.T.reshape((-1, 3))

xyz = xyz / xyz.max(axis=0)

intensities = intensities.T.reshape((-1)).repeat((3)).reshape((-1, 3))

# ## Get Colormap and Convert to Point Cloud

colors = plt.get_cmap("bone") # also use 'cool', 'bone'

colors

pcd = o3.geometry.PointCloud()

pcd.points = o3.utility.Vector3dVector(xyz)

pcd.colors = o3.utility.Vector3dVector(colors(intensities)[:, 0, :3])

pcd

# # Display Point Cloud

o3.visualization.draw_plotly([pcd])

# # Inspect distribution at each layer

# ### Animate intensity Histograms for each surface layer

# Animation code resused from: https://www.kaggle.com/code/leonidkulyk/eda-vc-id-volume-layers-animation

from celluloid import Camera

fig, ax = plt.subplots(1, 1)

camera = Camera(fig) # define the camera that gets the fig we'll plot

for i in range(Z_DIM):

cnts, bins, _ = plt.hist(

np.ravel(data.surface_volume[:, :, i][data.mask]) / 65535.0, bins=100

)

ax.set_title(f"Surfacer Layer: {i}")

ax.text(

0.5,

1.08,

f"Surfacer Layer: {i}",

fontweight="bold",

fontsize=18,

transform=ax.transAxes,

horizontalalignment="center",

)

camera.snap() # the camera takes a snapshot of the plot

plt.close(fig) # close figure

animation = camera.animate() # get plt animation

fix_video_adjust = (

"<style> video {margin: 0px; padding: 0px; width:100%; height:auto;} </style>"

)

display(HTML(fix_video_adjust + animation.to_html5_video())) # displaying the animation

# There seems to be a mix of modes, especially in the lower surface volume layers. There seems to be a single mode around 0.35 for all surface volumes, while the lower volumes contain a mode with a wider spread with a center that seems to move across each layer.

# The 0.35 centered mode is the dominate mode for the upper surface volumes which seem to contain less papyrus according to the volume slice cuts above. Also ccording to these histograms, the majority of the variance comes from the lower surface cuts. This can also be seen in the point cloud and the surface volume cuts.

# Could this dominate mode be noisy data? Let's take a closer look at with a new point cloud.

# # Denoise Point Cloud

# Hypothesis/Idea: The top layers do not contain useful data, they are just nosie.

# In the surface volume slices and point cloud the top layers look like they may not contain much useful data. The histograms may also support this hypothesis, since the dominate mode at 0.35 is the only mode at the top layers.

# Let's use the top surface volume to estimate to estimate the distribution of the noisy data. All we need is the mean and standard deviation.

mu = np.mean(np.ravel(data.surface_volume[:, :, -1][data.mask]) / 65535.0)

sig = np.std(np.ravel(data.surface_volume[:, :, -1][data.mask]) / 65535.0)

mu, sig

# Let's get upper and lower bounds of data to remove. We will just go up to 4 sigma for now to get the lower and upper bounds to remove.

# We should also be weary of just naively truncating data. We will need to investigate this further, but that will be for another time.

lower, upper = mu - 4 * sig, mu + 4 * sig

lower, upper

# get intensity mask

i_mask = (intensities[:, 0] > lower) & (intensities[:, 0] < upper)

# remove 0 intensities?

i_mask = ~i_mask & (intensities[:, 0] != 0)

pcd_2 = o3.geometry.PointCloud()

pcd_2.points = o3.utility.Vector3dVector(xyz[i_mask])

pcd_2.colors = o3.utility.Vector3dVector(colors(intensities[i_mask])[:, 0, :3])

pcd_2

o3.visualization.draw_plotly([pcd_2])

# Let's go ahead and plot plot the mean and standard dev for each surface layer.

# Code from: https://www.kaggle.com/code/brettolsen/fast-efficient-image-storing-and-manipulation

#

with Timer():

zindices = np.arange(Z_DIM)

means = np.zeros_like(zindices)

stdevs = np.zeros_like(zindices)

# mask = data.mask[:,:,0]

for z in zindices:

print(z, end="\r")

array = data.surface_volume[:, :, z][data.mask]

means[z] = array.mean()

stdevs[z] = np.std(array)

plt.figure()

plt.grid()

plt.errorbar(zindices, means, stdevs, marker="o")

plt.plot(zindices, stdevs)

|

# Source Code: https://github.com/rasbt/machine-learning-book with additional explanation

#

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

from matplotlib.colors import ListedColormap

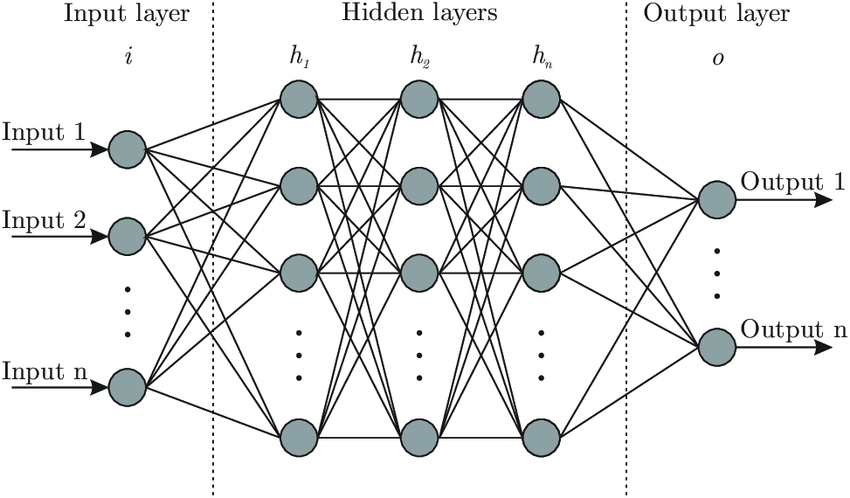

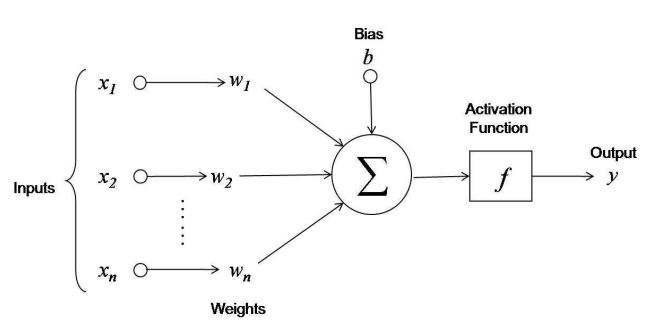

# The whole idea behind the perceptron model is to use a simplified approach to mimic how a single neuron in the brain works. The Perceptron Rule is simple, and can be summarized in the following steps:

# * Initialize the weights and bias unit to 0 or small random numbers

# * For each training example, x superscript i:

# * Compute output value y hat

# * Update the weights and bias unit

# Weights can be initialized to 0, however the learning rate would have no effect onn the decision boundary. If all w's are initialized to 0 , then the learning rate parameter affects only the scale oof the weight vector, ot the direction

class Perceptron:

"""

Perceptron Classifier

Parameters:

lr: float

learnning rate 0.0 >= lr <=1.0

n_iter: int

passes over the training dataset

random_state: int

Random number generator seed for random weights initialization

Attributes:

w_: 1d array - weights after fitting

b_: Scalar - bias unit after fitting

errors_: list - Number of missclassification (updates) in epoch

"""

def __init__(self, lr=0.01, n_iter=50, random_state=1):

self.lr = lr

self.n_iter = n_iter

self.random_state = random_state

def fit(self, X, y):

"""

Fit Training Data

Parameters:

X: {array-like}, shape=[n_examples, n_features]

y: {array-like}, shape=[n_examples], Target values

Returns:

self: object

"""

rgen = np.random.RandomState(self.random_state)

# generate random weights

self.w_ = rgen.normal(loc=0.0, scale=0.1, size=X.shape[1])

# self.w_ = np.zeros(X.shape[1])

self.b_ = np.float(0.0)

self.errors_ = []

for _ in range(self.n_iter):

errors = 0

for xi, target in zip(X, y):

update = self.lr * (target - self.predict(xi))

self.w_ += update * xi

self.b_ += update

errors += int(update != 0.0)

self.errors_.append(errors)

return self

def net_input(self, X):

"""Calculate net input"""

return np.dot(X, self.w_) + self.b_

def predict(self, X):

"""Return Class Label after each unit step"""

return np.where(self.net_input(X) > 0.0, 1, 0)

df = pd.read_csv("/kaggle/input/irisdataset/iris.data", header=None, encoding="utf-8")

df

# select setosa and versicolor

y = df.iloc[:100, 4].values

y = np.where(y == "Iris-setosa", 0, 1)

# extract sepal length and petal length

X = df.iloc[0:100, [0, 2]].values

# plot data

plt.scatter(X[:50, 0], X[:50, 1], color="red", marker="o", label="Setosa")

plt.scatter(X[50:100, 0], X[50:100, 1], color="blue", marker="s", label="Versicolor")

plt.xlabel("Sepal length [cm]")

plt.ylabel("Petal length [cm]")

plt.legend(loc="upper left")

plt.show()

# ### ***Full Code***

class Perceptron:

"""

Perceptron Classifier

Parameters:

lr: float

learnning rate 0.0 >= lr <=1.0

n_iter: int

passes over the training dataset

random_state: int

Random number generator seed for random weights initialization

Attributes:

w_: 1d array - weights after fitting

b_: Scalar - bias unit after fitting

errors_: list - Number of missclassification (updates) in epoch

"""

def __init__(self, lr=0.01, n_iter=50, random_state=1):

self.lr = lr

self.n_iter = n_iter

self.random_state = random_state

def fit(self, X, y):

"""

Fit Training Data

Parameters:

X: {array-like}, shape=[n_examples, n_features]

y: {array-like}, shape=[n_examples], Target values

Returns:

self: object

"""

rgen = np.random.RandomState(self.random_state)

# generate random weights

self.w_ = rgen.normal(loc=0.0, scale=0.1, size=X.shape[1])

# self.w_ = np.zeros(X.shape[1])

self.b_ = np.float(0.0)

self.errors_ = []

for _ in range(self.n_iter):

errors = 0

for xi, target in zip(X, y):

update = self.lr * (target - self.predict(xi))

self.w_ += update * xi

self.b_ += update

errors += int(update != 0.0)

self.errors_.append(errors)

return self

def net_input(self, X):

"""Calculate net input"""

return np.dot(X, self.w_) + self.b_

def predict(self, X):

"""Return Class Label after each unit step"""

return np.where(self.net_input(X) > 0.0, 1, 0)

ppn = Perceptron(lr=0.1, n_iter=10)

ppn.fit(X, y)

plt.plot(range(1, len(ppn.errors_) + 1), ppn.errors_, marker="o")

plt.xlabel("Epochs")

plt.ylabel("Number of updates")

plt.show()

def plot_decision_regions(X, y, classifier, resolution=0.02):

# setup marker generator and color map

markers = ("o", "s", "^", "v", "<")

colors = ("red", "blue", "lightgreen", "gray", "cyan")

cmap = ListedColormap(colors[: len(np.unique(y))])

# plot the decision surface

x1_min, x1_max = X[:, 0].min() - 1, X[:, 0].max() + 1

x2_min, x2_max = X[:, 1].min() - 1, X[:, 1].max() + 1

xx1, xx2 = np.meshgrid(

np.arange(x1_min, x1_max, resolution), np.arange(x2_min, x2_max, resolution)

)

lab = classifier.predict(np.array([xx1.ravel(), xx2.ravel()]).T)

lab = lab.reshape(xx1.shape)

plt.contourf(xx1, xx2, lab, alpha=0.3, cmap=cmap)

plt.xlim(xx1.min(), xx1.max())

plt.ylim(xx2.min(), xx2.max())

# plot class examples

for idx, cl in enumerate(np.unique(y)):

plt.scatter(

x=X[y == cl, 0],

y=X[y == cl, 1],

alpha=0.8,

c=colors[idx],

marker=markers[idx],

label=f"Class {cl}",

edgecolor="black",

)

plot_decision_regions(X, y, classifier=ppn)

plt.xlabel("Sepal length [cm]")

plt.ylabel("Petal length [cm]")

plt.legend(loc="upper left")

plt.show()

|

import numpy as np # linear algebra

import pandas as pd # data processing, CSV file I/O (e.g. pd.read_csv)

# Input data files are available in the read-only "../input/" directory

# For example, running this (by clicking run or pressing Shift+Enter) will list all files under the input directory

import os

for dirname, _, filenames in os.walk("/kaggle/input"):

for filename in filenames:

print(os.path.join(dirname, filename))

# You can write up to 20GB to the current directory (/kaggle/working/) that gets preserved as output when you create a version using "Save & Run All"

# You can also write temporary files to /kaggle/temp/, but they won't be saved outside of the current session

train_df = pd.read_csv("/kaggle/input/playground-series-s3e12/train.csv")

train_df.head()

train_df.info()

train_df.describe()

# 1. There is no null value.

import plotly.express as px

import matplotlib

import matplotlib.pyplot as plt

import seaborn as sns

sns.set_style("darkgrid")

matplotlib.rcParams["font.size"] = 14

matplotlib.rcParams["figure.figsize"] = (10, 6)

matplotlib.rcParams["figure.facecolor"] = "#00000000"

px.histogram(train_df, x="target")

px.histogram(train_df, x="gravity", marginal="box")

# 1. Lesser the gravity, lesser the chance of getting stones.

#

px.histogram(train_df, x="ph", marginal="box")

# Low ph cause kidney stones.

px.histogram(train_df, x="cond", marginal="box")

# Majorly conductivity range of 20mMho - 30mMho cause kidney stones.

px.histogram(train_df, x="calc", marginal="box")

# Larger the amount of calcium present in the urine, larger the chance of kidney stones.

train_df.corr()

sns.heatmap(train_df.corr(), annot=True)

# This heatmap proves our previous observation.

# #### Modeling

import xgboost as xgb

from sklearn import metrics

def modelfit(

alg, dtrain, predictors, useTrainCV=True, cv_folds=5, early_stopping_rounds=50

):

if useTrainCV:

xgb_param = alg.get_xgb_params()

xgtrain = xgb.DMatrix(dtrain[predictors].values, label=dtrain["target"].values)

cvresult = xgb.cv(

xgb_param,

xgtrain,

num_boost_round=alg.get_params()["n_estimators"],

nfold=cv_folds,

metrics="auc",

early_stopping_rounds=early_stopping_rounds,

verbose_eval=True,

)

alg.set_params(n_estimators=cvresult.shape[0])

# Fit the algorithm on the data

alg.fit(dtrain[predictors], dtrain["target"], eval_metric="auc")

# Predict training set:

dtrain_predictions = alg.predict(dtrain[predictors])

dtrain_predprob = alg.predict_proba(dtrain[predictors])[:, 1]

# Print model report:

print("\nModel Report")

print(

"Accuracy : %.4g"

% metrics.accuracy_score(dtrain["target"].values, dtrain_predictions)

)

print(

"AUC Score (Train): %f"

% metrics.roc_auc_score(dtrain["target"], dtrain_predprob)

)

predictors = [x for x in train_df.columns if x not in ["target", "id"]]

xgb1 = xgb.XGBClassifier(

learning_rate=0.1,

n_estimators=500,

max_depth=3,

min_child_weight=1,

gamma=0,

subsample=0.8,

colsample_bytree=0.8,

objective="binary:logistic",

seed=27,

)

modelfit(xgb1, train_df, predictors)

from sklearn.model_selection import GridSearchCV

param_test1 = {"max_depth": range(1, 10, 2), "min_child_weight": range(1, 6, 2)}

gsearch1 = GridSearchCV(

estimator=xgb.XGBClassifier(

learning_rate=0.1,

n_estimators=17,

max_depth=3,

min_child_weight=1,

objective="binary:logistic",

seed=27,

),

param_grid=param_test1,

scoring="roc_auc",

n_jobs=4,

cv=5,

)

gsearch1.fit(train_df[predictors], train_df["target"])

gsearch1.best_params_, gsearch1.best_score_

param_test3 = {"gamma": [i / 10.0 for i in range(0, 5)]}

gsearch3 = GridSearchCV(

estimator=xgb.XGBClassifier(

learning_rate=0.1,

n_estimators=17,

max_depth=1,

min_child_weight=1,

gamma=0,

objective="binary:logistic",

seed=27,

),

param_grid=param_test3,

scoring="roc_auc",

n_jobs=4,

cv=5,

)

gsearch3.fit(train_df[predictors], train_df["target"])

gsearch3.best_params_, gsearch3.best_score_

predictors = [x for x in train_df.columns if x not in ["target", "id"]]

xgb1 = xgb.XGBClassifier(

learning_rate=0.1,

n_estimators=500,

max_depth=3,

min_child_weight=1,

gamma=0,

subsample=0.8,

colsample_bytree=0.8,

objective="binary:logistic",

seed=27,

)

modelfit(xgb1, train_df, predictors)

param_test1 = {"max_depth": range(1, 10, 2), "min_child_weight": range(1, 6, 2)}

gsearch1 = GridSearchCV(

estimator=xgb.XGBClassifier(

learning_rate=0.01,

n_estimators=29,

max_depth=3,

min_child_weight=1,

objective="binary:logistic",

seed=27,

),

param_grid=param_test1,

scoring="roc_auc",

n_jobs=4,

cv=5,

)

gsearch1.fit(train_df[predictors], train_df["target"])

gsearch1.best_params_, gsearch1.best_score_

param_test3 = {"gamma": [i / 10.0 for i in range(0, 5)]}

gsearch3 = GridSearchCV(

estimator=xgb.XGBClassifier(

learning_rate=0.01,

n_estimators=29,

max_depth=3,

min_child_weight=1,

gamma=0,

objective="binary:logistic",

seed=27,

),

param_grid=param_test3,

scoring="roc_auc",

n_jobs=4,

cv=5,

)

gsearch3.fit(train_df[predictors], train_df["target"])

gsearch3.best_params_, gsearch3.best_score_

from sklearn.model_selection import train_test_split

X = train_df.drop(["target"], axis=1)

y = train_df["target"]

X_train, X_test, y_train, y_test = train_test_split(

X, y, test_size=0.33, random_state=42

)

xgb_classifier1 = xgb.XGBClassifier(

learning_rate=0.1,

n_estimators=17,

max_depth=1,

min_child_weight=1,

)

xgb_classifier1.fit(X_train, y_train)

xgb_classifier1.score(X_train, y_train)

xgb_classifier1.score(X_test, y_test)

# #### Test Prediction

test_df = pd.read_csv("/kaggle/input/playground-series-s3e12/test.csv")

Y_pred = xgb_classifier1.predict_proba(test_df)[:, 1]

submission = pd.DataFrame({"id": test_df["id"], "target": Y_pred})

submission.to_csv("submission.csv", index=False)

|

#

# # 1. Import Libraries

# ([Go to top](#top))

from tensorflow.keras.datasets import imdb

from tensorflow.keras.preprocessing.text import text_to_word_sequence

from tensorflow.keras.preprocessing.text import Tokenizer

from tensorflow.keras.preprocessing.sequence import pad_sequences

from tensorflow.keras import models

from tensorflow.keras import layers

from tensorflow.keras import losses

from tensorflow.keras import metrics

from tensorflow.keras import optimizers

from tensorflow.keras.utils import plot_model

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

import seaborn as sns

from sklearn.feature_extraction.text import CountVectorizer

from sklearn.feature_extraction.text import TfidfVectorizer

from sklearn.model_selection import train_test_split

from collections import Counter

from pathlib import Path

import os

import numpy as np

import re

import string

import nltk

nltk.download("punkt")

from nltk.tokenize import word_tokenize

from nltk.corpus import stopwords

nltk.download("stopwords")

from nltk.stem.porter import PorterStemmer

from nltk.stem import WordNetLemmatizer

nltk.download("wordnet")

from nltk.corpus import wordnet

import unicodedata

import html

stop_words = stopwords.words("english")

#

# # 2. Load Data

# ([Go to top](#top))

#

t_set = pd.read_csv("/kaggle/input/nlp-getting-started/train.csv")

t_set.shape

t_set.head()

# For training our deep learning model, we will use the 'text' column as our input feature or `Train_X`, and the 'target' column as our output or `y_train`. Any other columns that are not required will be dropped as we are only interested in the 'text' column.

Train_X_raw = t_set["text"].values

Train_X_raw.shape

Train_X_raw[5]

_ = list(map(print, Train_X_raw[:5] + "\n"))

y_train = t_set["target"].values

y_train.shape

y_train[:5]

#

# # 3. Data Splitting

# ([Go to top](#top))

# As we see there's a little bit class imbalance, so we'll use `stratify` parameter with `train_test_split`.

_ = sns.countplot(x=y_train)

from sklearn.model_selection import train_test_split

Train_X_raw, Validate_X_raw, y_train, y_validation = train_test_split(

Train_X_raw, y_train, test_size=0.2, stratify=y_train

)

print(Train_X_raw.shape)

print(y_train.shape)

print()

print(Validate_X_raw.shape)

print(y_validation.shape)

#

# # 4. Text Preprocessing

# ([Go to top](#top))

# In this phase, we apply some operations on the text, to make it in the most usable form for the task at hand. Mainly we clean it up to be more appealing to the problem we try to solve. The input is __text__ and the output is a transformed __text__.

def remove_special_chars(text):

recoup = re.compile(r" +")

x1 = (

text.lower()

.replace("#39;", "'")

.replace("amp;", "&")

.replace("#146;", "'")

.replace("nbsp;", " ")

.replace("#36;", "$")

.replace("\\n", "\n")

.replace("quot;", "'")

.replace("<br />", "\n")

.replace('\\"', '"')

.replace("<unk>", "u_n")

.replace(" @.@ ", ".")

.replace(" @-@ ", "-")

.replace("\\", " \\ ")

)

return recoup.sub(" ", html.unescape(x1))

def remove_non_ascii(text):

"""Remove non-ASCII characters from list of tokenized words"""

return (

unicodedata.normalize("NFKD", text)

.encode("ascii", "ignore")

.decode("utf-8", "ignore")

)

def to_lowercase(text):

return text.lower()

def remove_punctuation(text):

"""Remove punctuation from list of tokenized words"""

translator = str.maketrans("", "", string.punctuation)

return text.translate(translator)

def replace_numbers(text):

"""Replace all interger occurrences in list of tokenized words with textual representation"""

return re.sub(r"\d+", "", text)

def remove_whitespaces(text):

return text.strip()

def remove_stopwords(words, stop_words):

"""

:param words:

:type words:

:param stop_words: from sklearn.feature_extraction.stop_words import ENGLISH_STOP_WORDS

or

from spacy.lang.en.stop_words import STOP_WORDS

:type stop_words:

:return:

:rtype:

"""

return [word for word in words if word not in stop_words]

def stem_words(words):

"""Stem words in text"""

stemmer = PorterStemmer()

return [stemmer.stem(word) for word in words]

def lemmatize_words(words):

"""Lemmatize words in text"""

lemmatizer = WordNetLemmatizer()

return [lemmatizer.lemmatize(word) for word in words]

def lemmatize_verbs(words):

"""Lemmatize verbs in text"""

lemmatizer = WordNetLemmatizer()

return " ".join([lemmatizer.lemmatize(word, pos="v") for word in words])

def text2words(text):

return word_tokenize(text)

def normalize_text(text):

text = remove_special_chars(text)

text = remove_non_ascii(text)

text = remove_punctuation(text)

text = to_lowercase(text)

text = replace_numbers(text)

words = text2words(text)

words = remove_stopwords(words, stop_words)

# words = stem_words(words)# Either stem ovocar lemmatize

words = lemmatize_words(words)

words = lemmatize_verbs(words)

return "".join(words)

Train_X_clean = Train_X_raw.copy()

Validate_X_clean = Validate_X_raw.copy()

Train_X_clean = list(map(normalize_text, Train_X_clean))

Validate_X_clean = list(map(normalize_text, Validate_X_clean))

# Train_X_clean

#

# # 5. Model

# ([Go to top](#top))

evaluation_df = pd.DataFrame()

models_dict = {}

#

# ## 5.1 BOW

# ([Go to top](#top))

#

# ##### Text Preparation for BOW

vectorizer = CountVectorizer()

Train_X = vectorizer.fit_transform(Train_X_clean)

Validate_X = vectorizer.transform(Validate_X_clean)

# vectorizer.vocabulary_

Train_X = Train_X.toarray()

Validate_X = Validate_X.toarray()

print(Train_X.shape)

print(Validate_X.shape)

#

# ##### Building Model

model = models.Sequential()

model.add(layers.Dense(16, activation="relu", input_shape=(Train_X.shape[1],)))

model.add(layers.Dense(16, activation="relu"))

model.add(layers.Dense(1, activation="sigmoid"))

model.summary()

from keras.utils import plot_model

plot_model(model)

from keras import optimizers

model.compile(

optimizer=optimizers.RMSprop(learning_rate=0.001),

loss="binary_crossentropy",

metrics=["accuracy"],

)

history = model.fit(

Train_X,

y_train,

epochs=50,

batch_size=512,

validation_data=(Validate_X, y_validation),

)

#

# ##### Training VS Validation

accuracy = history.history["accuracy"]

loss = history.history["loss"]

val_accuracy = history.history["val_accuracy"]

val_loss = history.history["val_loss"]

plt.plot(loss, label="Training loss")

plt.plot(val_loss, label="Validation loss")

plt.title("Training and validation loss")

plt.legend()

plt.show()

plt.plot(accuracy, label="Training accuracy")

plt.plot(val_accuracy, label="Validation accuracy")

plt.title("Training and validation accuracy")

plt.legend()

plt.show()

#

# ##### Save Model

model.save("/kaggle/working/bow.h5")

#

# ##### Save Performance

model_name = "BOW"

models_dict[model_name] = "/kaggle/working/bow.h5"

train_loss, train_accuracy = model.evaluate(Train_X, y_train)

validation_loss, validation_accuracy = model.evaluate(Validate_X, y_validation)

evaluation = pd.DataFrame(

{

"Model": [model_name],

"Train": [train_accuracy],

"Validation": [validation_accuracy],

}

)

evaluation_df = pd.concat([evaluation_df, evaluation], ignore_index=True)

#

# ## 5.2 BOW Vectors

# ([Go to top](#top))

#

# ##### Text Preparation for BOW Vectors

from keras.preprocessing.text import Tokenizer

t = Tokenizer()

t.fit_on_texts(Train_X_clean)

vocab_size = len(t.word_index) + 1

# integer encode the documents

train_encoded_docs = t.texts_to_sequences(Train_X_clean)

validation_encoded_docs = t.texts_to_sequences(Validate_X_clean)

# print(train_encoded_docs)

# Determine the optimal maximum padding length

figure, subplots = plt.subplots(1, 2, figsize=(20, 5))

_ = sns.countplot(x=list(map(len, train_encoded_docs)), ax=subplots[0])

_ = sns.kdeplot(list(map(len, train_encoded_docs)), fill=True, ax=subplots[1])

from statistics import mode

train_encoded_docs_length = list(map(len, train_encoded_docs))

mode(train_encoded_docs_length)

# As we see the most frequent value in the histogram (mode) = 12.

# If we set the max padding length to be equal to the most frequent value in the histogram (mode = 12), let's see how many sentences we can get rid of.

len(

list(

filter(

lambda x: x >= mode(train_encoded_docs_length), train_encoded_docs_length

)

)

)

len(train_encoded_docs_length)

# We will lose around 34% of the data (6090 tweets).

round(

len(

list(

filter(

lambda x: x >= mode(train_encoded_docs_length),

train_encoded_docs_length,

)

)

)

/ len(train_encoded_docs_length)

* 100,

2,

)

# That's why we will set the max padding length to be equal to the length of the longest tweet.

max(train_encoded_docs_length)

max_length = max(train_encoded_docs_length)

train_seq = pad_sequences(train_encoded_docs, maxlen=max_length, padding="post")

validate_seq = pad_sequences(validation_encoded_docs, maxlen=max_length, padding="post")

print(train_seq)

#

# ##### Building Model

c_latent_factors = 32

model = models.Sequential()

model.add(layers.Embedding(vocab_size + 1, c_latent_factors, input_length=max_length))

model.add(layers.Flatten())

model.add(layers.Dense(16, activation="relu"))

model.add(layers.Dense(16, activation="relu"))

model.add(layers.Dense(1, activation="sigmoid"))

model.summary()

from keras.utils import plot_model

plot_model(model)

from keras import optimizers

model.compile(

optimizer=optimizers.RMSprop(learning_rate=0.001),

loss="binary_crossentropy",

metrics=["accuracy"],

)

history = model.fit(

train_seq,

y_train,

epochs=50,

batch_size=512,

validation_data=(validate_seq, y_validation),

)

#

# ##### Training VS Validation

accuracy = history.history["accuracy"]

loss = history.history["loss"]

val_accuracy = history.history["val_accuracy"]

val_loss = history.history["val_loss"]

plt.plot(loss, label="Training loss")

plt.plot(val_loss, label="Validation loss")

plt.title("Training and validation loss")

plt.legend()

plt.show()

plt.plot(accuracy, label="Training accuracy")

plt.plot(val_accuracy, label="Validation accuracy")

plt.title("Training and validation accuracy")

plt.legend()

plt.show()

#

# ##### Save Model

model.save("/kaggle/working/bow_vectors.h5")

#

# ##### Save Performance

model_name = "BOW Vectors"

models_dict[model_name] = "/kaggle/working/bow_vectors.h5"

train_loss, train_accuracy = model.evaluate(train_seq, y_train)

validation_loss, validation_accuracy = model.evaluate(validate_seq, y_validation)

evaluation = pd.DataFrame(

{

"Model": [model_name],

"Train": [train_accuracy],

"Validation": [validation_accuracy],

}

)

evaluation_df = pd.concat([evaluation_df, evaluation], ignore_index=True)

#

# ## 5.3 LSTM

# ([Go to top](#top))

#

# ##### Building Model

c_latent_factors = 32

model = models.Sequential()

model.add(layers.Embedding(vocab_size + 1, c_latent_factors, input_length=max_length))

model.add(layers.LSTM(32, dropout=0.2, recurrent_dropout=0.4))

model.add(layers.Flatten())

model.add(layers.Dense(16, activation="relu"))

model.add(layers.Dense(16, activation="relu"))

model.add(layers.Dense(1, activation="sigmoid"))

model.summary()

from keras.utils import plot_model

plot_model(model)

from keras import optimizers

model.compile(

optimizer=optimizers.RMSprop(learning_rate=0.001),

loss="binary_crossentropy",

metrics=["accuracy"],

)

history = model.fit(

train_seq,

y_train,

epochs=50,

batch_size=512,

validation_data=(validate_seq, y_validation),

)

#

# ##### Training VS Validation

accuracy = history.history["accuracy"]

loss = history.history["loss"]

val_accuracy = history.history["val_accuracy"]

val_loss = history.history["val_loss"]

plt.plot(loss, label="Training loss")

plt.plot(val_loss, label="Validation loss")

plt.title("Training and validation loss")

plt.legend()

plt.show()

plt.plot(accuracy, label="Training accuracy")

plt.plot(val_accuracy, label="Validation accuracy")

plt.title("Training and validation accuracy")

plt.legend()

plt.show()

#

# ##### Save Model

model.save("/kaggle/working/lstm.h5")

#

# ##### Save Performance

model_name = "LSTM"

models_dict[model_name] = "/kaggle/working/lstm.h5"

train_loss, train_accuracy = model.evaluate(train_seq, y_train)

validation_loss, validation_accuracy = model.evaluate(validate_seq, y_validation)

evaluation = pd.DataFrame(

{

"Model": [model_name],

"Train": [train_accuracy],

"Validation": [validation_accuracy],

}

)

evaluation_df = pd.concat([evaluation_df, evaluation], ignore_index=True)

#

# ## 5.4 GRU

# ([Go to top](#top))

#

# ##### Building Model

c_latent_factors = 32

model = models.Sequential()

model.add(layers.Embedding(vocab_size + 1, c_latent_factors, input_length=max_length))

model.add(layers.GRU(32, dropout=0.2, recurrent_dropout=0.4))

model.add(layers.Flatten())

model.add(layers.Dense(16, activation="relu"))

model.add(layers.Dense(16, activation="relu"))

model.add(layers.Dense(1, activation="sigmoid"))

model.summary()

from keras.utils import plot_model

plot_model(model)

from keras import optimizers

model.compile(

optimizer=optimizers.RMSprop(learning_rate=0.001),

loss="binary_crossentropy",

metrics=["accuracy"],

)

history = model.fit(

train_seq,

y_train,

epochs=50,

batch_size=512,

validation_data=(validate_seq, y_validation),

)

#

# ##### Training VS Validation

accuracy = history.history["accuracy"]

loss = history.history["loss"]

val_accuracy = history.history["val_accuracy"]

val_loss = history.history["val_loss"]

plt.plot(loss, label="Training loss")

plt.plot(val_loss, label="Validation loss")

plt.title("Training and validation loss")

plt.legend()

plt.show()

plt.plot(accuracy, label="Training accuracy")

plt.plot(val_accuracy, label="Validation accuracy")

plt.title("Training and validation accuracy")

plt.legend()

plt.show()

#

# ##### Save Model

model.save("/kaggle/working/gru.h5")

#

# ##### Save Performance

model_name = "GRU"

models_dict[model_name] = "/kaggle/working/gru.h5"

train_loss, train_accuracy = model.evaluate(train_seq, y_train)

validation_loss, validation_accuracy = model.evaluate(validate_seq, y_validation)

evaluation = pd.DataFrame(

{

"Model": [model_name],

"Train": [train_accuracy],

"Validation": [validation_accuracy],

}

)

evaluation_df = pd.concat([evaluation_df, evaluation], ignore_index=True)

#

# # 6. Evaluation

# ([Go to top](#top))

evaluation_df

from keras.models import load_model

# Get best model according to validation score.

best_model = evaluation_df[

evaluation_df["Validation"] == evaluation_df["Validation"].max()

]["Model"].values[0]

best_model

model = load_model(models_dict[best_model])

model.summary()

#

# # 7. Submission File Generation

# ([Go to top](#top))

test_data = pd.read_csv("/kaggle/input/nlp-getting-started/test.csv")

test_data.shape

test_data.head()

# Text preprocessing utilized during training

test_data["clean text"] = test_data["text"].apply(normalize_text)

test_data.head()

# Get the appropriate preparation based on model

if best_model == "BOW":

X_test = vectorizer.transform(test_data["clean text"])

X_test = X_test.toarray()

else:

X_test = t.texts_to_sequences(test_data["clean text"])

X_test = pad_sequences(X_test, maxlen=max_length, padding="post")

predictions = model.predict(X_test).round()

submission = pd.read_csv("/kaggle/input/nlp-getting-started/sample_submission.csv")

submission["target"] = np.round(predictions).astype("int")

submission.head()

submission.to_csv("submission.csv", index=False)

|

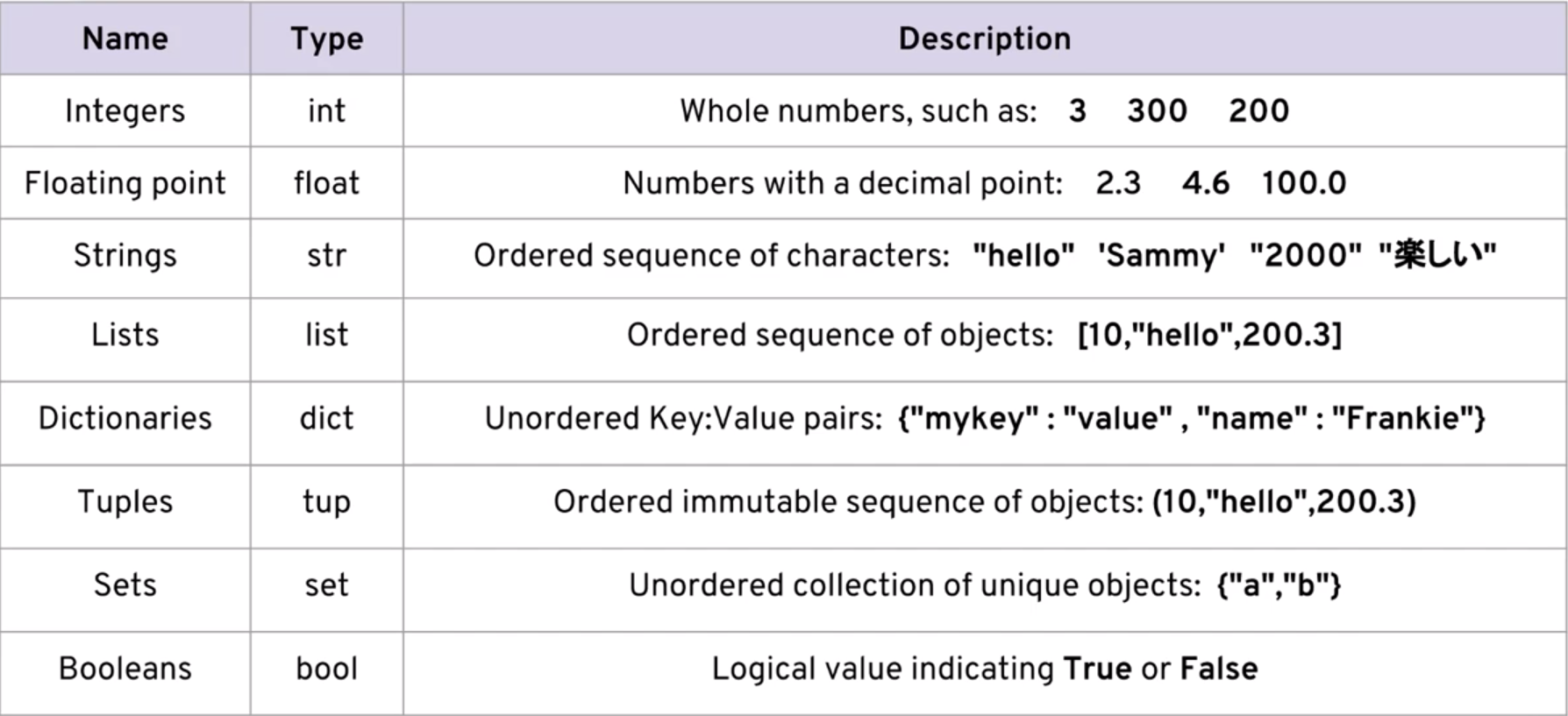

# Data Types and Data Structures in Python

#

# Data types in Python are the different types of data that can be used to store information in a program. There are several built-in data types in Python, including numeric, string, Boolean, list, tuple, set, and dictionary. Each data type is used for a specific purpose, and they have their own properties and operations.

# * Numeric data types include integers, floats, and complex numbers. Integers are whole numbers, floats are numbers with decimal points, and complex numbers have a real and imaginary part. For example, the length of a rectangle can be stored as an integer, the weight of a person can be stored as a float, and the impedance of a circuit can be stored as a complex number.

# * String data types are used to store text data, such as names, addresses, and messages. For example, a person's name can be stored as a string, a product description can be stored as a string, and a message sent through a chat application can be stored as a string.

# * Boolean data types are used to represent true or false values. They are often used in conditional statements and loops to control the flow of a program. For example, a program that checks whether a user is logged in or not can use a Boolean value to represent the login status.

# * List, tuple, set, and dictionary data types are used to store collections of data. Lists are used to store ordered collections of data, tuples are used to store immutable collections of data, sets are used to store unordered collections of unique data, and dictionaries are used to store key-value pairs. For example, a list can be used to store the grades of a group of students, a tuple can be used to store the coordinates of a point on a map, a set can be used to store the unique values in a dataset, and a dictionary can be used to store the properties of a person, such as their name, age, and address.

# * Data structures in Python are different ways of organizing and storing data in a program. They are used to efficiently store and manipulate large amounts of data. Some common data structures in Python include arrays, linked lists, stacks, queues, trees, and graphs. Each data structure has its own properties and operations, and is used for a specific purpose.

#

# Operations in Numeric Datatypes

x = 10

y = 3.5

z = 2 + 3j

# Arithmetic operations

print(x + y) # 13.5

print(x - y) # 6.5

print(x * y) # 35.0

print(x / y) # 2.857142857142857

print(x // y) # 2

print(x % 3) # 1

print(z.real) # 2.0

print(z.imag) # 3.0

# Operations in Boolean Datatypes

a = True

b = False

# Logical operations

print(a and b) # False

print(a or b) # True

print(not a) # False

|

# ## İş Problemi

# Scout’lar tarafından izlenen futbolcuların özelliklerine verilen puanlara göre, oyuncuların hangi sınıf (average, highlighted) oyuncu olduğunu tahminleme

# ## Veri Seti Hikayesi

# Veri seti Scoutium’dan maçlarda gözlemlenen futbolcuların özelliklerine göre scoutların değerlendirdikleri futbolcuların, maç içerisinde puanlanan özellikleri ve puanlarını içeren bilgilerden oluşmaktadır.

import pandas as pd

import seaborn as sns

import numpy as np

import matplotlib.pyplot as plt

from sklearn.ensemble import RandomForestClassifier, GradientBoostingClassifier

from sklearn.linear_model import LogisticRegression

from sklearn.neighbors import KNeighborsClassifier

from sklearn.tree import DecisionTreeClassifier

from sklearn.model_selection import (

train_test_split,

GridSearchCV,

cross_validate,

validation_curve,

)

from sklearn.metrics import precision_score, f1_score, recall_score, roc_auc_score

from sklearn.preprocessing import StandardScaler, LabelEncoder

from xgboost import XGBClassifier

from lightgbm import LGBMClassifier

from catboost import CatBoostClassifier

pd.set_option("display.max_columns", None)

pd.set_option("display.float_format", lambda x: "%.3f" % x)

pd.set_option("display.width", 500)

# Veri setlerine genel bakış.

att_df = pd.read_csv(

"/kaggle/input/scoutiumattributes/scoutium_attributes.csv", sep=";"

)

pot_df = pd.read_csv(

"/kaggle/input/scoutiumpotentiallabels/scoutium_potential_labels.csv", sep=";"

)

att_df.head()

pot_df.head()

# Veri setlerini birleştirelim.

df = att_df.merge(

pot_df, on=["task_response_id", "match_id", "evaluator_id", "player_id"]

)

df.head()

# position_id içerisindeki Kaleci (1) sınıfını veri setinden kaldıralım.

df = df.loc[~(df["position_id"] == 1)]

df.head()

# Adım 4: potential_label içerisindeki below_average sınıfını veri setinden kaldıralım.( below_average sınıfı tüm verisetinin %1'ini oluşturur)

df = df.loc[~(df["potential_label"] == "below_average")]

df.head()

# İndekste “player_id”,“position_id” ve “potential_label”, sütunlarda “attribute_id” ve değerlerde scout’ların oyunculara verdiği puan“attribute_value” olacak şekilde pivot table’ı oluşturalım.

pivot_df = pd.pivot_table(

df,

values="attribute_value",

index=["player_id", "position_id", "potential_label"],

columns=["attribute_id"],

)

pivot_df.head()

# İndeksleri değişken olarak atayalım ve “attribute_id” sütunlarının isimlerini stringe çevirelim.

pivot_df = pivot_df.reset_index()

pivot_df.columns = pivot_df.columns.astype("str")

# Label Encoder fonksiyonunu kullanarak “potential_label” kategorilerini (average, highlighted) sayısal olarak ifade edelim.

le = LabelEncoder()

pivot_df["potential_label"] = le.fit_transform(pivot_df["potential_label"])

pivot_df.head()

# Sayısal değişken kolonlarını “num_cols” adıyla bir listeye atayalım.

num_cols = [

col

for col in pivot_df.columns

if pivot_df[col].dtypes != "O" and pivot_df[col].nunique() > 5

]

num_cols = [col for col in num_cols if col not in "player_id"]

num_cols = num_cols[1:]

# Kaydettiğimiz bütün “num_cols” değişkenlerindeki veriyi ölçeklendirmek için StandardScaler uygulayalım.

ss = StandardScaler()

df = pivot_df.copy()

df[num_cols] = ss.fit_transform(df[num_cols])

df.head()

# ## Model

X = df.drop(["potential_label"], axis=1)

y = df["potential_label"]

def base_models(X, y, scoring="roc_auc"):

print("Base Models....")

models = [

("LR", LogisticRegression()),

("KNN", KNeighborsClassifier()),

("CART", DecisionTreeClassifier()),

("RF", RandomForestClassifier()),

("GBM", GradientBoostingClassifier()),

("XGBoost", XGBClassifier(eval_metric="logloss")),

("LightGBM", LGBMClassifier()),

("CatBoost", CatBoostClassifier(verbose=False)),

]

for name, classifier in models:

cv_results = cross_validate(classifier, X, y, cv=3, scoring=scoring)

print(f"{scoring}: {round(cv_results['test_score'].mean(), 4)} ({name}) ")

# roc_auc skorları

base_models(X, y)

# f1 skorları

base_models(X, y, scoring="f1")

# accuracy skorları

base_models(X, y, scoring="accuracy")

# precision skorları

base_models(X, y, scoring="precision")

# Catboost'a göre hiperparametre optimizasyonu yapalım.

catboost_model = CatBoostClassifier(random_state=17, verbose=False)

catboost_params = {

"iterations": [200, 500],

"learning_rate": [0.01, 0.1],

"depth": [3, 6],

}

catboost_grid = GridSearchCV(

catboost_model, catboost_params, cv=5, n_jobs=-1, verbose=False

).fit(X, y)

catboost_final = catboost_model.set_params(

**catboost_grid.best_params_, random_state=17

).fit(X, y)

cv_results = cross_validate(

catboost_final, X, y, cv=5, scoring=["accuracy", "f1", "roc_auc", "precision"]

)

# Hata metriklerimize bakalım.

cv_results["test_accuracy"].mean()

cv_results["test_f1"].mean()

cv_results["test_roc_auc"].mean()

cv_results["test_precision"].mean()

# Değişkenlerin önem düzeyini belirten feature_importance fonksiyonunu kullanarak özelliklerin sıralamasını çizdirelim.

def plot_importance(model, features, num=len(X), save=False):

feature_imp = pd.DataFrame(

{"Value": model.feature_importances_, "Feature": features.columns}

)

plt.figure(figsize=(10, 10))

sns.set(font_scale=1)

sns.barplot(

x="Value",

y="Feature",

data=feature_imp.sort_values(by="Value", ascending=False)[0:num],

)

plt.title("Features")

plt.tight_layout()

plt.show()

if save:

plt.savefig("importances.png")

plot_importance(catboost_final, X)

|

import torch

import torch.nn as nn

import torch.nn.functional as F

from torch.utils.data import DataLoader, random_split

from torchvision import datasets, transforms, models

from torchvision.utils import make_grid

from sklearn.model_selection import train_test_split

from sklearn.metrics import classification_report

from sklearn.metrics import roc_curve, auc

import torch.optim as optim

from tqdm import tqdm

from torchsummary import summary

import os

import random

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

from sklearn.metrics import confusion_matrix

import seaborn as sns

import shutil

import json

from PIL import Image as PilImage

from omnixai.data.image import Image

from omnixai.explainers.vision.specific.gradcam.pytorch.gradcam import GradCAM

import warnings

warnings.filterwarnings("ignore")

BATCH_SIZE = 128

LR = 0.0001

# Define the preprocessing transforms

transform = transforms.Compose(

[

transforms.Resize((224, 224)),

transforms.ToTensor(),

transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225]),

]

)

train_dataset = datasets.ImageFolder(

root="/kaggle/input/flower-classification-5-classes-roselilyetc/Flower Classification V2/V2/Training Data",

transform=transform,

)

test_dataset = datasets.ImageFolder(

root="/kaggle/input/flower-classification-5-classes-roselilyetc/Flower Classification V2/V2/Testing Data",

transform=transform,

)

val_dataset = datasets.ImageFolder(

root="/kaggle/input/flower-classification-5-classes-roselilyetc/Flower Classification V2/V2/Validation Data",

transform=transform,

)

# Define the dataloaders

train_dataloader = DataLoader(train_dataset, batch_size=BATCH_SIZE, shuffle=True)

val_dataloader = DataLoader(val_dataset, batch_size=BATCH_SIZE, shuffle=False)

test_dataloader = DataLoader(test_dataset, batch_size=BATCH_SIZE, shuffle=False)

class_names = train_dataset.classes

print(class_names)

print(len(class_names))

class VGG16(nn.Module):

def __init__(self, num_classes=5):

super(VGG16, self).__init__()

self.features = models.vgg16(pretrained=False).features

self.classifier = nn.Linear(512 * 7 * 7, num_classes)

def forward(self, x):

x = self.features(x)

x = torch.flatten(x, 1)

x = self.classifier(x)

return x

preprocess = lambda ims: torch.stack([transform(im.to_pil()) for im in ims])

# Set the device

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

device

model = VGG16(num_classes=len(class_names)).to(device)

best_checkpoint = torch.load(

"/kaggle/input/d2-vgg16-model/d2_vgg16_model_checkpoint.pth"

)

print(best_checkpoint.keys())

model.load_state_dict(best_checkpoint["state_dict"])

# load the saved weights from a .pth file

# set the model to evaluation mode

model.eval()

img_path = "/kaggle/input/flower-classification-5-classes-roselilyetc/Flower Classification V2/V2/Testing Data/Aster/Aster-Test (105).jpeg"

img = Image(PilImage.open(img_path).convert("RGB"))

explainer = GradCAM(

model=model, target_layer=model.features[-1], preprocess_function=preprocess

)

explanations = explainer.explain(img)

explanations.ipython_plot(index=0, class_names=class_names)

import os

import matplotlib.pyplot as plt

import numpy as np

test_dir = "/kaggle/input/flower-classification-5-classes-roselilyetc/Flower Classification V2/V2/Training Data/"

classes = [

"Aster",

"Daisy",

"Iris",

"Lavender",

"Lily",

"Marigold",

"Orchid",

"Poppy",

"Rose",

"Sunflower",

]

num_images = 2

for i, cls in enumerate(classes):

cls_dir = os.path.join(test_dir, cls)

img_files = os.listdir(cls_dir)

img_files = np.random.choice(img_files, size=num_images, replace=False)

for j, img_file in enumerate(img_files):

img_path = os.path.join(cls_dir, img_file)

img = Image(PilImage.open(img_path).convert("RGB"))

explainer = GradCAM(

model=model, target_layer=model.features[-1], preprocess_function=preprocess

)

explanations = explainer.explain(img)

explanations.ipython_plot(index=0, class_names=class_names)

|

import numpy as np # linear algebra

import pandas as pd # data processing, CSV file I/O (e.g. pd.read_csv)

# Input data files are available in the read-only "../input/" directory

# For example, running this (by clicking run or pressing Shift+Enter) will list all files under the input directory

import os

for dirname, _, filenames in os.walk("/kaggle/input"):

for filename in filenames:

print(os.path.join(dirname, filename))

# You can write up to 20GB to the current directory (/kaggle/working/) that gets preserved as output when you create a version using "Save & Run All"

# You can also write temporary files to /kaggle/temp/, but they won't be saved outside of the current session

# Titanic Project Example Walk Through

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

import seaborn as sbs

train_data = pd.read_csv("/kaggle/input/train-data/train.csv")

test_data = pd.read_csv("/kaggle/input/train-data/train.csv")

train_data["train_test"] = 1

test_data["train_test"] = 0

test_data["Survived"] = np.NaN

all_data = pd.concat([train_data, test_data])

all_data.columns

train_data.head()

train_data.isnull()

sbs.heatmap(train_data.isnull())

sbs.set_style("whitegrid")

sbs.countplot(x="Survived", data=train_data)

sbs.set_style("whitegrid")

sbs.countplot(x="Survived", hue="Sex", data=train_data)

sbs.set_style("whitegrid")

sbs.countplot(x="Survived", hue="Pclass", data=train_data)

sbs.distplot(train_data["Age"].dropna(), kde=False, bins=10)

sbs.countplot(x="SibSp", data=train_data)

sbs.distplot(train_data["Fare"], bins=20)

sbs.boxplot(x="Pclass", y="Age", data=train_data)

def function(columns):

Age = columns[0]

Pclass = columns[1]

if pd.isnull(Age):

if Pclass == 1:

return 37

elif Pclass == 2:

return 28

else:

return 25

else:

return Age

train_data["Age"] = train_data[["Age", "Pclass"]].apply(function, axis=1)

sbs.heatmap(train_data.isnull())

train_data.drop("Cabin", axis=1, inplace=True)

train_data.head()

# To calculate the required percenatge of women who survived

women = train_data.loc[train_data.Sex == "female"]["Survived"]

rate_women = (sum(women) / len(women)) * 100

print("Percentage of women who survived", rate_women)

# To calculate the required number of men who survived

men = train_data.loc[train_data.Sex == "male"]["Survived"]

rate_men = (sum(men) / len(men)) * 100

print("Percentage of women who survived", rate_men)

train_data.head()

sbs.displot(data=train_data, x="Age", row="Sex", col="Pclass", hue="Survived")

# Graphic Representation to check how many women survived and how many men survived.

labels = ["Women who Survived", "Men who Suvived"]

x = [rate_women, rate_men]

plt.pie(x, labels=labels)

# Through this presentation, we conclude that more percenatge of women survived than men

|

import os

for dirname, _, filenames in os.walk("/kaggle/input"):

for filename in filenames:

print(os.path.join(dirname, filename))

# #### This is my first machine learning project that I build myself from scratch.

# # IMPORT LIBRARIES

import pandas as pd

import sklearn

from sklearn.feature_selection import RFE

from sklearn.preprocessing import StandardScaler, MinMaxScaler

from sklearn.linear_model import SGDRegressor

# from sklearn.linear_model import Ridge

from sklearn.preprocessing import PolynomialFeatures, OneHotEncoder

# from sklearn.linear_model import LinearRegression

from tensorflow.keras import Sequential

import tensorflow as tf

import numpy as np

from sklearn.metrics import mean_squared_error as mse

from sklearn.model_selection import train_test_split

from sklearn.utils import resample

from sklearn.metrics import mean_squared_log_error

from tensorflow.keras.activations import relu, linear

from tensorflow.keras.layers import Dense

from tensorflow.keras.optimizers import Adam

import matplotlib

# import matplotlib.pyplot as plt

# # IMPORT DATA

holidays_original = pd.read_csv(

"/kaggle/input/store-sales-time-series-forecasting/holidays_events.csv"

)

oil_original = pd.read_csv("/kaggle/input/store-sales-time-series-forecasting/oil.csv")

stores_original = pd.read_csv(

"/kaggle/input/store-sales-time-series-forecasting/stores.csv"

)

train_original = pd.read_csv(

"/kaggle/input/store-sales-time-series-forecasting/train.csv"

)

transactions_original = pd.read_csv(

"/kaggle/input/store-sales-time-series-forecasting/transactions.csv"

)

test_original = pd.read_csv(

"/kaggle/input/store-sales-time-series-forecasting/test.csv"

)

# # DATA WRANGLING

# #### Convert date (object) -> datetime

train_original["date"] = pd.to_datetime(train_original["date"])

test_original["date"] = pd.to_datetime(test_original["date"])

transactions_original["date"] = pd.to_datetime(transactions_original["date"])

holidays_original["date"] = pd.to_datetime(holidays_original["date"])

oil_original["date"] = pd.to_datetime(oil_original["date"])

# ### HOLIDAYS DATASET

# Remove transferred column

holidays = holidays_original.loc[(holidays_original["transferred"] == False)][

["date", "type", "locale", "locale_name"]

]

# Mutate city and state column

holidays["city_of_holidays"] = np.where(

holidays["locale"] == "Local", holidays["locale_name"], np.nan

)

holidays["state_of_holidays"] = np.where(

holidays["locale"] == "Regional", holidays["locale_name"], np.nan

)

# Group the date so we have unique date for each holiday

holidays.loc[holidays["city_of_holidays"].isnull() == False].groupby("date").agg(

np.array

)

# ### OIL DATA

# #### Make oil data for everyday in the period

full_date_oil = pd.DataFrame(

pd.date_range(start="2013-01-01", end="2017-08-31", name="date")

)

oil_price = np.zeros(len(full_date_oil))

oil_index = 0

for i in range(len(oil_price)):

if full_date_oil.iloc[i][0] < oil_original.iloc[oil_index + 1]["date"]:

oil_price[i] = oil_original.iloc[oil_index]["dcoilwtico"]

elif oil_index == len(oil_original) - 1:

break

else:

oil_index += 1

oil_price[i] = oil_original.iloc[oil_index]["dcoilwtico"]

full_date_oil["oil_price"] = oil_price

# Fill NA values

full_date_oil.fillna(method="backfill", inplace=True)

full_date_oil

# ## JOIN DATASETS

train_with_stores = pd.merge(

train_original,

stores_original,

left_on="store_nbr",

right_on="store_nbr",

how="left",

)

train_joined = pd.merge(

train_with_stores, full_date_oil, left_on=["date"], right_on=["date"], how="left"

)

train_joined = pd.merge(

train_joined,

holidays[

[

"date",

"type",

"locale",

"locale_name",

"city_of_holidays",

"state_of_holidays",

]

]

.groupby("date")

.agg(" ".join),

left_on=["date"],

right_on=["date"],

how="left",

)

test_with_stores = pd.merge(

test_original,

stores_original,

left_on="store_nbr",

right_on="store_nbr",

how="left",

)

test_joined = pd.merge(

test_with_stores, full_date_oil, left_on=["date"], right_on=["date"], how="left"

)

test_joined = pd.merge(

test_joined,

holidays[

[

"date",

"type",

"locale",

"locale_name",

"city_of_holidays",

"state_of_holidays",

]

]

.groupby("date")

.agg(" ".join),

left_on=["date"],

right_on=["date"],

how="left",

)

train_joined

# ### One hot for holidays

# Create a column to determine whether the store is located near the holiday or not. If the holiday is national then value equals 1, or the state or the city of the store match with the holidays then the value equals 1. Otherwise the value is 0.

#

def condition(row):

if (

"National" in str(row["locale"])

or str(row["city"]) in str(row["locale_name"])

or str(row["state"]) in str(row["locale_name"])

):

return 1

else:

return 0

train_joined["holidays?"] = train_joined.apply(condition, axis=1)

test_joined["holidays?"] = test_joined.apply(condition, axis=1)

# ### Add salary day

train_joined["month"] = train_joined["date"].dt.month

train_joined["day_of_month"] = train_joined["date"].dt.day

train_joined["year"] = train_joined["date"].dt.year

train_joined["salary_day?"] = train_joined["date"].apply(

lambda x: 1 if (x.is_month_end == True or x.date().day == 15) else 0

)

## For test dataset

test_joined["month"] = test_joined["date"].dt.month

test_joined["day_of_month"] = test_joined["date"].dt.day

test_joined["year"] = test_joined["date"].dt.year

test_joined["salary_day?"] = test_joined["date"].apply(

lambda x: 1 if (x.is_month_end == True or x.date().day == 15) else 0

)

# # RENAME COLUMN FOR BETTER INTEPRETATION

train_joined = train_joined.rename(

columns={"type_x": "store_type", "type_y": "holiday_type"}

)

train_joined

test_joined = test_joined.rename(

columns={"type_x": "store_type", "type_y": "holiday_type"}

)

test_joined

# # RESAMPLE FOR SMALLER TRAINING DATA

train_resampled = resample(train_joined, n_samples=1500000)

train_resampled

# # NORMALIZING DATA

# Select features for training

ohc = OneHotEncoder(sparse=False, drop="first")

one_hot = ohc.fit(

train_resampled[

[

"holidays?",

"salary_day?",

"locale",

"family",

"city",

"state",

"store_type",

"locale_name",

"holiday_type",

]

]

)

train_one_hot = ohc.fit_transform(

train_resampled[

[

"holidays?",

"salary_day?",

"locale",

"family",

"city",

"state",

"store_type",

"locale_name",

"holiday_type",

]

]

)

# train_one_hot=pd.get_dummies(train_resampled[['holidays?','salary_day?','locale','family','city','state','store_type','locale_name','holiday_type']])

train_unscaled_x = train_resampled[

["year", "store_nbr", "onpromotion", "oil_price", "month", "cluster"]

]

poly = PolynomialFeatures(degree=4, include_bias=False)

poly_fit = poly.fit(train_unscaled_x)

item_poly = poly_fit.transform(train_unscaled_x)

# Scale

train_unscaled_y = train_resampled["sales"]

scaler = StandardScaler()

scaler = scaler.fit(item_poly)

item_train = scaler.fit_transform(item_poly)

y_for_train = np.array(train_unscaled_y).reshape(-1, 1)

train_final = np.concatenate((item_train, np.array(train_one_hot)), axis=1)

train_final.shape

pd.DataFrame(train_final).describe()

test_one_hot = pd.get_dummies(

test_joined[

[

"holidays?",

"salary_day?",

"locale",

"family",

"city",

"state",

"store_type",

"locale_name",

"holiday_type",

]

]

)

test_one_hot = ohc.transform(

test_joined[

[

"holidays?",

"salary_day?",

"locale",

"family",

"city",

"state",

"store_type",

"locale_name",

"holiday_type",

]

]

)

test_unscaled = test_joined[

["year", "store_nbr", "onpromotion", "oil_price", "month", "cluster"]

]

test_poly = poly_fit.transform(test_unscaled)

test_scaled = scaler.transform(test_poly)

test_final = np.concatenate((test_scaled, np.array(test_one_hot)), axis=1)

test_final.shape

# # SPLIT DATA FOR TRAINING

X_train, X_val, y_train, y_val = train_test_split(

train_final, y_for_train, test_size=0.10, random_state=1

)

print("X_train.shape", X_train.shape, "y_train.shape", y_train.shape)

print("X_val.shape", X_val.shape, "y_val.shape", y_val.shape)

# # BUILD THE MODEL

# Initialize learning rate decay

initial_learning_rate = 0.001

lr_schedule = tf.keras.optimizers.schedules.ExponentialDecay(

initial_learning_rate, decay_steps=30000, decay_rate=0.9, staircase=True

)

opt = Adam(lr_schedule)

model = Sequential(

[

tf.keras.Input(shape=(342,)),

Dense(

342, activation="relu", activity_regularizer=tf.keras.regularizers.L1(0.01)

),

Dense(

32, activation="relu", activity_regularizer=tf.keras.regularizers.L2(0.02)

),

Dense(1, activation="relu"),

],

name="my_model",

)

model.compile(optimizer=opt, loss=tf.keras.losses.MeanSquaredLogarithmicError())

history = model.fit(X_train, y_train, epochs=80)

model.evaluate(X_val, y_val)

submit = pd.DataFrame(model.predict(test_final))

submit_ = pd.concat((test_joined["id"], submit[0]), axis=1)

submit_ = submit_.rename(columns={0: "sales"})

submit_.to_csv("submit6.csv", index=False)

submit_

# Save weights

model.save_weights("./saved/checkpoints")

# # PLOT THE RESULT

model.predict(X_val)

lim = y_val.max()

matplotlib.pyplot.scatter(y_val, model.predict(X_val), marker="o")

matplotlib.pyplot.xlabel("Ground Truth")

matplotlib.pyplot.ylabel("Predict")

matplotlib.pyplot.xlim(0, lim)

matplotlib.pyplot.ylim(0, lim)

|

# ## Visualization Technique

# For this demonstration I will be using the jointplot and the backbone of it the JointGrid from the seaborn library.

# This plotting technique is a way to see the interaction between two continuous variables. The grid is split into three sections, the joint plot which is in the center and the marginal plots, one of which is on top and the other which is on the right side. The joint plot shows the interaction of the variables, and the marginal plots show the distributions. Using The jointplot we can quickly graph a scatterplot and also see the histograms of those variables in the joint and marginal plots respectively. We can specify different plot types in the jointplot, but once we select the type we want to use in the joint plot we are locked into the choice for the marginal plots. To overcome this, we can use the JointGrid instead. The jointplot is built on top of the JointGrid, using the latter gives us more control but is a bit more involved. "This is intended to be a fairly lightweight wrapper; if you need more flexibility, you should use JointGrid directly."(Seaborn.jointplot — Seaborn 0.11.1 Documentation)

# Plotting with the jointplot and JointGrid interface is a great way to see bivariate data as well as each univariate distribution on the same figure. These techniques can be used with any bivariate and continuous data. Just like a scatter plot we can see the relationship between the variables, and we can even specify a regression plot to fit a regression line. Although this technically works with categorical data and we can make barplots in the JointGrid, at least one of the marginal plots won't make sense and it would be better visualized using another plotting function. This can be seen in Example 9.

# ## Visulaization library

# The seaborn Library is an open source statistical plotting library derived from matplotlib. It is more intuitive and makes quick plotting easy with nice looking default values. Seaborn has a quick and easy way to create this jointplot, which is technically possible to create in matplotlib's pyplot, but it would take a lot of code and testing to get right. Using seaborn to create the jointplot has its limitations. With many of the seaborn functions they can take a matplotlib axis as an argument and most also return that axis for further customization. This is not the case with jointplot. It is built on top of the JointGrid and has access to the axis objects created from the jointgrid for customization but no axis or figure objects can be passed to JointGrid initially. Unlike matplotlib's pyplot, seaborn is declarative not procedural. As stated in the seaborn introduction "Its dataset-oriented, declarative API lets you focus on what the different elements of your plots mean, rather than on the details of how to draw them"(An Introduction to Seaborn — Seaborn 0.9.0 Documentation). The Seaborn library was created by Michael Waskom and can be integrated wherever matplotlib can be which creates a very diverse array of environments where it can be used including the jupyter environment without any alteration.

# ### Installation

# `pip install seaborn`

# or

# `conda install seaborn`

# ### Dependencies

# `numpy`

# `scipy`

# `pandas`

# `matplotlib`

# ### Optional Dependencies

# `statsmodels`

# `fastcluster`

# (Seaborn.jointplot — Seaborn 0.11.1 Documentation)

# ## Visualization demonstration

# For this demonstration I am going to use one of the built-in datasets from the seaborn library. Specifically, the miles per gallon (mpg.csv) dataset. it can be retrieved by `seaborn.load_dataset('mpg')` or by going to https://github.com/mwaskom/seaborn-data.

# ### Import and data cleaning

# first we import our modules

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

import seaborn as sns

# set the pandas DataFrame to display all columns