script

stringlengths 113

767k

|

|---|

import numpy as np

import pandas as pd

from pandas_profiling import ProfileReport

import os

for dirname, _, filenames in os.walk("/kaggle/input"):

for filename in filenames:

print(os.path.join(dirname, filename))

# # 1. Load Data & Check Information

df_net = pd.read_csv("../input/netflix-shows/netflix_titles.csv")

# dfqwefdsafqwe

df_net.head()

ProfileReport(df_net)

|

import pandas as pd

import numpy as np

import os

import matplotlib.pyplot as plt

from keras import models

from keras.utils import to_categorical, np_utils

from tensorflow import convert_to_tensor

from tensorflow.image import grayscale_to_rgb

from tensorflow.data import Dataset

from tensorflow.keras.layers import Flatten, Dense, GlobalAvgPool2D, GlobalMaxPool2D

from tensorflow.keras.callbacks import Callback, EarlyStopping, ReduceLROnPlateau

from tensorflow.keras import optimizers

from tensorflow.keras.utils import plot_model

# import tensorflow as tf

# tf.__version__

# Define the input path and show all files

path = "/kaggle/input/challenges-in-representation-learning-facial-expression-recognition-challenge/"

os.listdir(path)

# Load the image data with labels.

data = pd.read_csv(path + "icml_face_data.csv")

data.head()

# Overview

data[" Usage"].value_counts()

emotions = {

0: "Angry",

1: "Disgust",

2: "Fear",

3: "Happy",

4: "Sad",

5: "Surprise",

6: "Neutral",

}

def prepare_data(data):

"""Prepare data for modeling

input: data frame with labels und pixel data

output: image and label array"""

image_array = np.zeros(shape=(len(data), 48, 48))

image_label = np.array(list(map(int, data["emotion"])))

for i, row in enumerate(data.index):

image = np.fromstring(data.loc[row, " pixels"], dtype=int, sep=" ")

image = np.reshape(image, (48, 48))

image_array[i] = image

return image_array, image_label

# Define training, validation and test data:

train_image_array, train_image_label = prepare_data(data[data[" Usage"] == "Training"])

val_image_array, val_image_label = prepare_data(data[data[" Usage"] == "PrivateTest"])

test_image_array, test_image_label = prepare_data(data[data[" Usage"] == "PublicTest"])

# Reshape and scale the images:

train_images = train_image_array.reshape((train_image_array.shape[0], 48, 48, 1))

train_images = train_images.astype("float32") / 255

val_images = val_image_array.reshape((val_image_array.shape[0], 48, 48, 1))

val_images = val_images.astype("float32") / 255

test_images = test_image_array.reshape((test_image_array.shape[0], 48, 48, 1))

test_images = test_images.astype("float32") / 255

# As the pretrained model expects rgb images, we convert our grayscale images with a single channel to pseudo-rgb images with 3 channels

train_images_rgb = grayscale_to_rgb(convert_to_tensor(train_images))

val_images_rgb = grayscale_to_rgb(convert_to_tensor(val_images))

test_images_rgb = grayscale_to_rgb(convert_to_tensor(test_images))

# Data Augmentation using ImageDataGenerator

# sources:

# https://www.tensorflow.org/api_docs/python/tf/keras/preprocessing/image/ImageDataGenerator

# https://pyimagesearch.com/2019/07/08/keras-imagedatagenerator-and-data-augmentation/

from tensorflow.keras.preprocessing.image import ImageDataGenerator

train_rgb_datagen = ImageDataGenerator(

rotation_range=0.15,

width_shift_range=0.15,

height_shift_range=0.15,

shear_range=0.15,

zoom_range=0.15,

horizontal_flip=True,

zca_whitening=False,

)

train_rgb_datagen.fit(train_images_rgb)

# Encoding of the target value:

train_labels = to_categorical(train_image_label)

val_labels = to_categorical(val_image_label)

test_labels = to_categorical(test_image_label)

def plot_examples(label=0):

fig, axs = plt.subplots(1, 5, figsize=(25, 12))

fig.subplots_adjust(hspace=0.2, wspace=0.2)

axs = axs.ravel()

for i in range(5):

idx = data[data["emotion"] == label].index[i]

axs[i].imshow(train_images[idx][:, :, 0], cmap="gray")

axs[i].set_title(emotions[train_labels[idx].argmax()])

axs[i].set_xticklabels([])

axs[i].set_yticklabels([])

plot_examples(label=0)

plot_examples(label=1)

plot_examples(label=2)

plot_examples(label=3)

plot_examples(label=4)

plot_examples(label=5)

plot_examples(label=6)

# In case we may want to save some examples:

from PIL import Image

def save_all_emotions(channels=1, imgno=0):

for i in range(7):

idx = data[data["emotion"] == i].index[imgno]

emotion = emotions[train_labels[idx].argmax()]

img = train_images[idx]

if channels == 1:

img = img.squeeze()

else:

img = grayscale_to_rgb(

convert_to_tensor(img)

).numpy() # convert to tensor, then to 3ch, back to numpy

img_shape = img.shape

# print(f'img shape: {img_shape[0]},{img_shape[1]}, type: {type(img)}') #(48,48)

img = img * 255

img = img.astype(np.uint8)

suf = "_%d_%d_%d" % (img_shape[0], img_shape[1], channels)

os.makedirs("examples" + suf, exist_ok=True)

fname = os.path.join("examples" + suf, emotion + suf + ".png")

Image.fromarray(img).save(fname)

print(f"saved: {fname}")

save_all_emotions(channels=3, imgno=0)

def plot_compare_distributions(array1, array2, title1="", title2=""):

df_array1 = pd.DataFrame()

df_array2 = pd.DataFrame()

df_array1["emotion"] = array1.argmax(axis=1)

df_array2["emotion"] = array2.argmax(axis=1)

fig, axs = plt.subplots(1, 2, figsize=(12, 6), sharey=False)

x = emotions.values()

y = df_array1["emotion"].value_counts()

keys_missed = list(set(emotions.keys()).difference(set(y.keys())))

for key_missed in keys_missed:

y[key_missed] = 0

axs[0].bar(x, y.sort_index(), color="orange")

axs[0].set_title(title1)

axs[0].grid()

y = df_array2["emotion"].value_counts()

keys_missed = list(set(emotions.keys()).difference(set(y.keys())))

for key_missed in keys_missed:

y[key_missed] = 0

axs[1].bar(x, y.sort_index())

axs[1].set_title(title2)

axs[1].grid()

plt.show()

plot_compare_distributions(

train_labels, val_labels, title1="train labels", title2="val labels"

)

# Calculate the class weights of the label distribution:

class_weight = dict(

zip(

range(0, 7),

(

(

(

data[data[" Usage"] == "Training"]["emotion"].value_counts()

).sort_index()

)

/ len(data[data[" Usage"] == "Training"]["emotion"])

).tolist(),

)

)

class_weight

# ## General defintions and helper functions

# Define callbacks

early_stopping = EarlyStopping(

monitor="val_accuracy",

min_delta=0.00008,

patience=11,

verbose=1,

restore_best_weights=True,

)

lr_scheduler = ReduceLROnPlateau(

monitor="val_accuracy",

min_delta=0.0001,

factor=0.25,

patience=4,

min_lr=1e-7,

verbose=1,

)

callbacks = [

early_stopping,

lr_scheduler,

]

# General shape parameters

IMG_SIZE = 48

NUM_CLASSES = 7

BATCH_SIZE = 64

# A plotting function to visualize training progress

def render_history(history, suf=""):

fig, (ax1, ax2) = plt.subplots(1, 2)

plt.subplots_adjust(left=0.1, bottom=0.1, right=0.95, top=0.9, wspace=0.4)

ax1.set_title("Losses")

ax1.plot(history.history["loss"], label="loss")

ax1.plot(history.history["val_loss"], label="val_loss")

ax1.set_xlabel("epochs")

ax1.set_ylabel("value of the loss function")

ax1.legend()

ax2.set_title("Accuracies")

ax2.plot(history.history["accuracy"], label="accuracy")

ax2.plot(history.history["val_accuracy"], label="val_accuracy")

ax2.set_xlabel("epochs")

ax2.set_ylabel("value of accuracy")

ax2.legend()

plt.show()

suf = "" if suf == "" else "_" + suf

fig.savefig("loss_and_acc" + suf + ".png")

# ## Model construction

from tensorflow.keras.applications import MobileNet

from tensorflow.keras.models import Model

# By specifying the include_top=False argument, we load a network that

# doesn't include the classification layers at the top, which is ideal for feature extraction.

base_net = MobileNet(

input_shape=(IMG_SIZE, IMG_SIZE, 3), include_top=False, weights="imagenet"

)

# plot_model(base_net, show_shapes=True, show_layer_names=True, expand_nested=True, dpi=50, to_file='mobilenet_full.png')

# For these small images, mobilenet is a very large model. Observing that there is nothing left to convolve further, we take the model only until the 12.block

base_model = Model(

inputs=base_net.input,

outputs=base_net.get_layer("conv_pw_12_relu").output,

name="mobilenet_trunc",

)

# this is the same as:

# base_model = Model(inputs = base_net.input,outputs = base_net.layers[-7].output)

# plot_model(base_model, show_shapes=True, show_layer_names=True, expand_nested=True, dpi=50, to_file='mobilenet_truncated.png')

# from: https://www.tensorflow.org/tutorials/images/transfer_learning

from tensorflow.keras import Sequential, layers

from tensorflow.keras import Input, Model

# from tensor

# base_model.trainable = False

# This model expects pixel values in [-1, 1], but at this point, the pixel values in your images are in [0, 255].

# To rescale them, use the preprocessing method included with the model.

# preprocess_input = tf.keras.applications.mobilenet_v2.preprocess_input

# Add a classification head: To generate predictions from the block of features,

# average over the spatial 2x2 spatial locations, using a tf.keras.layers.GlobalAveragePooling2D layer

# to convert the features to a single 1280-element vector per image.

global_average_layer = GlobalAvgPool2D()

# feature_batch_average = global_average_layer(feature_batch)

# print(feature_batch_average.shape)

# Apply a tf.keras.layers.Dense layer to convert these features into a single prediction per image.

# You don't need an activation function here because this prediction will be treated as a logit,

# or a raw prediction value. Positive numbers predict class 1, negative numbers predict class 0.

prediction_layer = Dense(NUM_CLASSES, activation="softmax", name="pred")

# prediction_batch = prediction_layer(feature_batch_average)

# print(prediction_batch.shape)

# Build a model by chaining together the data augmentation, rescaling, base_model and feature extractor layers

# using the Keras Functional API. As previously mentioned, use training=False as our model contains a BatchNormalization layer.

inputs_raw = Input(shape=(IMG_SIZE, IMG_SIZE, 3))

# inputs_pp = preprocess_input(inputs_aug)

# x = base_model(inputs_pp, training=False)

x = base_model(inputs_raw, training=False)

x = global_average_layer(x)

# x = tf.keras.layers.Dropout(0.2)(x)

outputs = prediction_layer(x)

model = Model(inputs=inputs_raw, outputs=outputs)

model.summary()

plot_model(

model,

show_shapes=True,

show_layer_names=True,

expand_nested=True,

dpi=50,

to_file="MobileNet12blocks_structure.png",

)

# Train the classification head:

# base_model.trainable = True #if we included the model layers, but not the model itself, this doesn't have any effect

for layer in base_model.layers[:]:

layer.trainable = False

# for layer in base_model.layers[81:]:

# layer.trainable = True

optims = {

"sgd": optimizers.SGD(lr=0.1, momentum=0.9, decay=0.01),

"adam": optimizers.Adam(0.01),

"nadam": optimizers.Nadam(

learning_rate=0.1, beta_1=0.9, beta_2=0.999, epsilon=1e-07

),

}

model.compile(

loss="categorical_crossentropy", optimizer=optims["adam"], metrics=["accuracy"]

)

model.summary()

initial_epochs = 5

# total_epochs = initial_epochs + 5

history = model.fit_generator(

train_rgb_datagen.flow(train_images_rgb, train_labels, batch_size=BATCH_SIZE),

validation_data=(val_images_rgb, val_labels),

class_weight=class_weight,

steps_per_epoch=len(train_images) / BATCH_SIZE,

# initial_epoch = history.epoch[-1],

# epochs = total_epochs,

epochs=initial_epochs,

callbacks=callbacks,

use_multiprocessing=True,

)

# ### Fine-tuning

iterative_finetuning = False

# #### First iteration: partial fine-tuning of the base_model

if iterative_finetuning:

# fine-tune the top layers (blocks 7-12):

# Let's take a look to see how many layers are in the base model

print("Number of layers in the base model: ", len(base_model.layers))

# base_model.trainable = True #if we included the model layers, but not the model itself, this doesn't have any effect

for layer in base_model.layers:

layer.trainable = False

for layer in base_model.layers[-37:]: # blocks 7-12

layer.trainable = True

optims = {

"sgd": optimizers.SGD(lr=0.01, momentum=0.9, decay=0.01),

"adam": optimizers.Adam(0.001),

"nadam": optimizers.Nadam(

learning_rate=0.01, beta_1=0.9, beta_2=0.999, epsilon=1e-07

),

}

model.compile(

loss="categorical_crossentropy", optimizer=optims["adam"], metrics=["accuracy"]

)

model.summary()

if iterative_finetuning:

fine_tune_epochs = 40

total_epochs = history.epoch[-1] + fine_tune_epochs

history = model.fit_generator(

train_rgb_datagen.flow(train_images_rgb, train_labels, batch_size=BATCH_SIZE),

validation_data=(val_images_rgb, val_labels),

class_weight=class_weight,

steps_per_epoch=len(train_images) / BATCH_SIZE,

initial_epoch=history.epoch[-1],

epochs=total_epochs,

callbacks=callbacks,

use_multiprocessing=True,

)

if iterative_finetuning:

test_loss, test_acc = model.evaluate(test_images_rgb, test_labels) # , test_labels

print("test caccuracy:", test_acc)

if iterative_finetuning:

render_history(history, "mobilenet12blocks_wdgenaug_finetuning1")

# #### Second Iteration (or the main iteration, if iterative_finetuning was set to False): fine-tuning of the entire base_model

if iterative_finetuning:

ftsuf = "ft_2"

else:

ftsuf = "ft_atonce"

# fine-tune all layers

# Let's take a look to see how many layers are in the base model

print("Number of layers in the base model: ", len(base_model.layers))

# base_model.trainable = True #if we included the model layers, but not the model itself, this doesn't have any effect

for layer in base_model.layers:

layer.trainable = False

for layer in base_model.layers[:]:

layer.trainable = True

optims = {

"sgd": optimizers.SGD(lr=0.01, momentum=0.9, decay=0.01),

"adam": optimizers.Adam(0.0001),

"nadam": optimizers.Nadam(

learning_rate=0.01, beta_1=0.9, beta_2=0.999, epsilon=1e-07

),

}

model.compile(

loss="categorical_crossentropy", optimizer=optims["adam"], metrics=["accuracy"]

)

model.summary()

fine_tune_epochs = 100

total_epochs = history.epoch[-1] + fine_tune_epochs

history = model.fit_generator(

train_rgb_datagen.flow(train_images_rgb, train_labels, batch_size=BATCH_SIZE),

validation_data=(val_images_rgb, val_labels),

class_weight=class_weight,

steps_per_epoch=len(train_images) / BATCH_SIZE,

initial_epoch=history.epoch[-1],

epochs=total_epochs,

callbacks=callbacks,

use_multiprocessing=True,

)

test_loss, test_acc = model.evaluate(test_images_rgb, test_labels) # , test_labels

print("test caccuracy:", test_acc)

render_history(history, "mobilenet12blocks_wdgenaug_" + ftsuf)

pred_test_labels = model.predict(test_images_rgb)

model_yaml = model.to_yaml()

with open(

"MobileNet12blocks_wdgenaug_onrawdata_valacc_" + ftsuf + ".yaml", "w"

) as yaml_file:

yaml_file.write(model_yaml)

model.save("MobileNet12blocks_wdgenaug_onrawdata_valacc_" + ftsuf + ".h5")

# ### Analyze the predictions made for the test data

def plot_image_and_emotion(

test_image_array, test_image_label, pred_test_labels, image_number

):

"""Function to plot the image and compare the prediction results with the label"""

fig, axs = plt.subplots(1, 2, figsize=(12, 6), sharey=False)

bar_label = emotions.values()

axs[0].imshow(test_image_array[image_number], "gray")

axs[0].set_title(emotions[test_image_label[image_number]])

axs[1].bar(bar_label, pred_test_labels[image_number], color="orange", alpha=0.7)

axs[1].grid()

plt.show()

import ipywidgets as widgets

@widgets.interact

def f(x=106):

# print(x)

plot_image_and_emotion(test_image_array, test_image_label, pred_test_labels, x)

# ### Make inference for a single image from scratch:

def predict_emotion_of_image(

test_image_array, test_image_label, pred_test_labels, image_number

):

input_arr = test_image_array[image_number] / 255

input_arr = input_arr.reshape((48, 48, 1))

input_arr_rgb = grayscale_to_rgb(convert_to_tensor(input_arr))

predictions = model.predict(np.array([input_arr_rgb]))

predictions_f = [

"%s:%5.2f" % (emotions[i], p * 100) for i, p in enumerate(predictions[0])

]

label = emotions[test_image_label[image_number]]

return f"Label: {label}\nPredictions: {predictions_f}"

import ipywidgets as widgets

@widgets.interact

def f(x=106):

result = predict_emotion_of_image(

test_image_array, test_image_label, pred_test_labels, x

)

print(result)

# ## Compare the distribution of labels and predicted labels

def plot_compare_distributions(array1, array2, title1="", title2=""):

df_array1 = pd.DataFrame()

df_array2 = pd.DataFrame()

df_array1["emotion"] = array1.argmax(axis=1)

df_array2["emotion"] = array2.argmax(axis=1)

fig, axs = plt.subplots(1, 2, figsize=(12, 6), sharey=False)

x = emotions.values()

y = df_array1["emotion"].value_counts()

keys_missed = list(set(emotions.keys()).difference(set(y.keys())))

for key_missed in keys_missed:

y[key_missed] = 0

axs[0].bar(x, y.sort_index(), color="orange")

axs[0].set_title(title1)

axs[0].grid()

y = df_array2["emotion"].value_counts()

keys_missed = list(set(emotions.keys()).difference(set(y.keys())))

for key_missed in keys_missed:

y[key_missed] = 0

axs[1].bar(x, y.sort_index())

axs[1].set_title(title2)

axs[1].grid()

plt.show()

plot_compare_distributions(

test_labels, pred_test_labels, title1="test labels", title2="predict labels"

)

df_compare = pd.DataFrame()

df_compare["real"] = test_labels.argmax(axis=1)

df_compare["pred"] = pred_test_labels.argmax(axis=1)

df_compare["wrong"] = np.where(df_compare["real"] != df_compare["pred"], 1, 0)

from sklearn.metrics import confusion_matrix

from mlxtend.plotting import plot_confusion_matrix

conf_mat = confusion_matrix(test_labels.argmax(axis=1), pred_test_labels.argmax(axis=1))

fig, ax = plot_confusion_matrix(

conf_mat=conf_mat,

show_normed=True,

show_absolute=False,

class_names=emotions.values(),

figsize=(8, 8),

)

fig.show()

|

# #### EEMT 5400 IT for E-Commerce Applications

# ##### HW4 Max score: (1+1+1)+(1+1+2+2)+(1+2)+2

# You will use two different datasets in this homework and you can find their csv files in the below hyperlinks.

# 1. Car Seat:

# https://raw.githubusercontent.com/selva86/datasets/master/Carseats.csv

# 2. Bank Personal Loan:

# https://raw.githubusercontent.com/ChaithrikaRao/DataChime/master/Bank_Personal_Loan_Modelling.csv

# #### Q1.

# a) Perform PCA for both datasets. Create the scree plots (eigenvalues).

# b) Suggest the optimum number of compenents for each dataset with explanation.

# c) Save the PCAs as carseat_pca and ploan_pca respectively.

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

from sklearn.decomposition import PCA

from sklearn.preprocessing import StandardScaler

carseat_df = pd.read_csv(

"https://raw.githubusercontent.com/selva86/datasets/master/Carseats.csv"

)

ploan_df = pd.read_csv(

"https://raw.githubusercontent.com/ChaithrikaRao/DataChime/master/Bank_Personal_Loan_Modelling.csv"

)

scaler = StandardScaler()

numeric_carseat = carseat_df.select_dtypes(include=[np.number])

carseat_std = scaler.fit_transform(numeric_carseat)

numeric_ploan = ploan_df.select_dtypes(include=[np.number])

ploan_std = scaler.fit_transform(numeric_ploan)

pca_carseat = PCA()

pca_ploan = PCA()

carseat_pca_result = pca_carseat.fit(carseat_std)

ploan_pca_result = pca_ploan.fit(ploan_std)

def scree_plot(pca_result, title):

plt.figure()

plt.plot(np.cumsum(pca_result.explained_variance_ratio_))

plt.xlabel("Number of Components")

plt.ylabel("Cumulative Explained Variance")

plt.title(title)

plt.show()

scree_plot(carseat_pca_result, "Car Seat Dataset")

scree_plot(ploan_pca_result, "Bank Personal Loan Dataset")

# (b)The optimal number of components can be determined by looking at the point where the cumulative explained variance "elbows" or starts to level off.

# For the Car Seat dataset, it appears to be around 3 components.

# For the Bank Personal Loan dataset, it appears to be around 4 components.

carseat_pca = PCA(n_components=3)

ploan_pca = PCA(n_components=4)

carseat_pca_result = carseat_pca.fit_transform(carseat_std)

ploan_pca_result = ploan_pca.fit_transform(ploan_std)

# #### Q2. (Car Seat Dataset)

# a) Convert the non-numeric variables to numeric by using get_dummies() method in pandas. Use it in this question.

# b) Use the scikit learn variance filter to reduce the dimension of the dataset. Try different threshold and suggest the best one.

# c) Some columns may have high correlation. For each set of highly correlated variables, keep one variable only and remove the rest of highly correlated columns. (Tips: You can find the correlations among columns by using .corr() method of pandas dataframe. Reference: https://pandas.pydata.org/docs/reference/api/pandas.DataFrame.corr.html)

# d) Perform linear regression to predict the Sales with datasets from part b and part c respectively and compare the result

#

carseat_dummies = pd.get_dummies(carseat_df, drop_first=True)

from sklearn.feature_selection import VarianceThreshold

def filter_by_variance(data, threshold):

var_filter = VarianceThreshold(threshold=threshold)

return pd.DataFrame(

var_filter.fit_transform(data), columns=data.columns[var_filter.get_support()]

)

carseat_filtered_001 = filter_by_variance(carseat_dummies, 0.01)

carseat_filtered_01 = filter_by_variance(carseat_dummies, 0.1)

carseat_filtered_1 = filter_by_variance(carseat_dummies, 1)

print(f"0.01 threshold: {carseat_filtered_001.shape[1]} columns")

print(f"0.1 threshold: {carseat_filtered_01.shape[1]} columns")

print(f"1 threshold: {carseat_filtered_1.shape[1]} columns")

def remove_high_corr(data, threshold):

corr_matrix = data.corr().abs()

upper = corr_matrix.where(np.triu(np.ones(corr_matrix.shape), k=1).astype(np.bool))

to_drop = [column for column in upper.columns if any(upper[column] > threshold)]

return data.drop(columns=to_drop)

carseat_no_high_corr = remove_high_corr(carseat_filtered_01, 0.8)

def linear_regression_score(X, y):

X_train, X_test, y_train, y_test = train_test_split(

X, y, test_size=0.3, random_state=42

)

lr = LinearRegression()

lr.fit(X_train, y_train)

y_pred = lr.predict(X_test)

return r2_score(y_test, y_pred)

X_filtered = carseat_filtered_01.drop(columns=["Sales"])

y_filtered = carseat_filtered_01["Sales"]

X_no_high_corr = carseat_no_high_corr.drop(columns=["Sales"])

y_no_high_corr = carseat_no_high_corr["Sales"]

filtered_score = linear_regression_score(X_filtered, y_filtered)

no_high_corr_score = linear_regression_score(X_no_high_corr, y_no_high_corr)

print(f"Filtered dataset R-squared: {filtered_score}")

print(f"No high correlation dataset R-squared: {no_high_corr_score}")

# #### Q3. (Bank Personal Loan Dataset)

# a) Find the variable which has the highest correlations with CCAvg

# b) Perform polynomial regression to predict CCAvg with the variable identified in part a.

# ##### Tips:

# step 1 - convert the dataset to polynomial using PolynomialFeatures from scikit learn (https://scikit-learn.org/stable/modules/generated/sklearn.preprocessing.PolynomialFeatures.html)

# step 2 - Perform linear regression using scikit learn

ploan_df = pd.read_csv(

"https://raw.githubusercontent.com/ChaithrikaRao/DataChime/master/Bank_Personal_Loan_Modelling.csv"

)

correlations = ploan_df.corr().abs()

highest_corr = correlations["CCAvg"].sort_values(ascending=False).index[1]

print(f"The variable with the highest correlation with CCAvg is {highest_corr}")

X = ploan_df[[highest_corr]]

y = ploan_df["CCAvg"]

poly = PolynomialFeatures(degree=2)

X_poly = poly.fit_transform(X)

X_train, X_test, y_train, y_test = train_test_split(

X_poly, y, test_size=0.3, random_state=42

)

lr = LinearRegression()

lr.fit(X_train, y_train)

y_pred = lr.predict(X_test)

poly_r_squared = r2_score(y_test, y_pred)

print(f"Polynomial regression R-squared: {poly_r_squared}")

# #### Q4. (Bank Personal Loan Dataset)

# Perform linear regression with all variables in the dataset and compare the result with the model in question 3 using R-Squared value.

X_all = ploan_df.drop(columns=["ID", "CCAvg"])

y_all = ploan_df["CCAvg"]

X_train, X_test, y_train, y_test = train_test_split(

X_all, y_all, test_size=0.3, random_state=42

)

lr_all = LinearRegression()

lr_all.fit(X_train, y_train)

y_pred_all = lr_all.predict(X_test)

all_r_squared = r2_score(y_test, y_pred_all)

print(f"All variables linear regression R-squared: {all_r_squared}")

print(f"Polynomial regression R-squared: {poly_r_squared}")

print(f"All variables linear regression R-squared: {all_r_squared}")

|

#

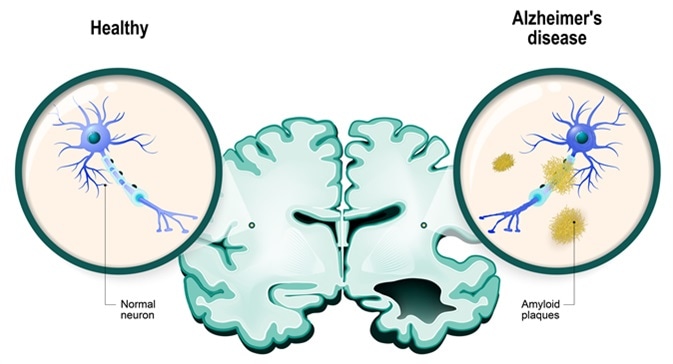

# **Alzheimer's disease** is the most common type of dementia. It is a progressive disease beginning with mild memory loss and possibly leading to loss of the ability to carry on a conversation and respond to the environment. Alzheimer's disease involves parts of the brain that control thought, memory, and language.

# # **Importing libraries**

import pandas as pd

import numpy as np

import os

import matplotlib.pyplot as plt

import warnings

from tensorflow.keras.applications.vgg19 import preprocess_input

from tensorflow.keras.preprocessing import image, image_dataset_from_directory

from tensorflow.keras.preprocessing.image import ImageDataGenerator as IDG

from imblearn.over_sampling import SMOTE

from tensorflow.keras.models import Sequential

from tensorflow import keras

import tensorflow

import tensorflow as tf

from tensorflow.keras.models import Model

from tensorflow.keras.applications.vgg16 import VGG16

from tensorflow.keras.layers import Input, Lambda, Dense, Flatten, Dropout

from tensorflow.keras.models import Model

from sklearn.model_selection import train_test_split

import seaborn as sns

import pathlib

from tensorflow.keras.utils import plot_model

from keras.callbacks import ModelCheckpoint, ReduceLROnPlateau, EarlyStopping

from sklearn.metrics import classification_report, confusion_matrix

# # **Identify dataset**

train_ds = tf.keras.preprocessing.image_dataset_from_directory(

"/kaggle/input/adni-extracted-axial/Axial",

validation_split=0.2,

subset="training",

seed=1337,

image_size=[180, 180],

batch_size=16,

)

val_ds = tf.keras.preprocessing.image_dataset_from_directory(

"/kaggle/input/adni-extracted-axial/Axial",

validation_split=0.2,

subset="validation",

seed=1337,

image_size=[180, 180],

batch_size=16,

)

# number and names of Classes

classnames = train_ds.class_names

len(classnames), train_ds.class_names

# # **Data Visualization**

plt.figure(figsize=(10, 10))

for images, labels in train_ds.take(1):

for i in range(9):

ax = plt.subplot(3, 3, i + 1)

plt.imshow(images[i].numpy().astype("uint8"))

plt.title(train_ds.class_names[labels[i]])

plt.axis("off")

# Number of images in each class

NUM_IMAGES = []

for label in classnames:

dir_name = "/kaggle/input/adni-extracted-axial/Axial" + "/" + label

NUM_IMAGES.append(len([name for name in os.listdir(dir_name)]))

NUM_IMAGES, classnames

# Rename class names

class_names = ["Alzheimer Disease", "Cognitively Impaired", "Cognitively Normal"]

train_ds.class_names = class_names

val_ds.class_names = class_names

NUM_CLASSES = len(class_names)

NUM_CLASSES

# Before Oversampling

# Visualization of each class with pie chart

import matplotlib.pyplot as plt

fig1, ax1 = plt.subplots()

ax1.pie(NUM_IMAGES, autopct="%1.1f%%", labels=train_ds.class_names)

plt.legend(title="Three Classes:", bbox_to_anchor=(0.75, 1.15))

# Performing Image Augmentation to have more data samples

IMG_SIZE = 180

IMAGE_SIZE = [180, 180]

DIM = (IMG_SIZE, IMG_SIZE)

ZOOM = [0.99, 1.01]

BRIGHT_RANGE = [0.8, 1.2]

HORZ_FLIP = True

FILL_MODE = "constant"

DATA_FORMAT = "channels_last"

WORK_DIR = "/kaggle/input/adni-extracted-axial/Axial"

work_dr = IDG(

rescale=1.0 / 255,

brightness_range=BRIGHT_RANGE,

zoom_range=ZOOM,

data_format=DATA_FORMAT,

fill_mode=FILL_MODE,

horizontal_flip=HORZ_FLIP,

)

train_data_gen = work_dr.flow_from_directory(

directory=WORK_DIR, target_size=DIM, batch_size=8000, shuffle=False

)

train_data, train_labels = train_data_gen.next()

# # **Oversampling technique**

#

# Shape of data before oversampling

print(train_data.shape, train_labels.shape)

# Performing over-sampling of the data, since the classes are imbalanced

# After oversampling using SMOTE

sm = SMOTE(random_state=42)

train_data, train_labels = sm.fit_resample(

train_data.reshape(-1, IMG_SIZE * IMG_SIZE * 3), train_labels

)

train_data = train_data.reshape(-1, IMG_SIZE, IMG_SIZE, 3)

print(train_data.shape, train_labels.shape)

# Show pie plot for dataset (after oversampling)

# Visualization of each class with pie chart

images_after = [2590, 2590, 2590]

import matplotlib.pyplot as plt

fig1, ax1 = plt.subplots()

ax1.pie(images_after, autopct="%1.1f%%", labels=train_ds.class_names)

plt.legend(title="Three Classes:", bbox_to_anchor=(0.75, 1.15))

# # **Spliting data**

train_data, test_data, train_labels, test_labels = train_test_split(

train_data, train_labels, test_size=0.2, random_state=42

)

train_data, val_data, train_labels, val_labels = train_test_split(

train_data, train_labels, test_size=0.2, random_state=42

)

# # **Building the model**

# -------VGG16--------

vgg = VGG16(input_shape=(180, 180, 3), weights="imagenet", include_top=False)

for layer in vgg.layers:

layer.trainable = False

x = Flatten()(vgg.output)

prediction = Dense(3, activation="softmax")(x)

modelvgg = Model(inputs=vgg.input, outputs=prediction)

# Plotting layers as an image

plot_model(modelvgg, to_file="alzahimer.png", show_shapes=True)

# Optimizing model

modelvgg.compile(

optimizer="adam", loss="categorical_crossentropy", metrics=["accuracy"]

)

# Callbacks

checkpoint = ModelCheckpoint(

filepath="best_weights.hdf5", save_best_only=True, save_weights_only=True

)

lr_reduce = ReduceLROnPlateau(

monitor="val_loss", factor=0.3, patience=2, verbose=2, mode="max"

)

early_stop = EarlyStopping(monitor="val_loss", min_delta=0.1, patience=1, mode="min")

# # **Training model using my data**

# Fitting the model

hist = modelvgg.fit(

train_data,

train_labels,

epochs=10,

validation_data=(val_data, val_labels),

callbacks=[checkpoint, lr_reduce],

)

# Plotting accuracy and loss of the model

fig, ax = plt.subplots(1, 2, figsize=(20, 3))

ax = ax.ravel()

for i, met in enumerate(["accuracy", "loss"]):

ax[i].plot(hist.history[met])

ax[i].plot(hist.history["val_" + met])

ax[i].set_title("Model {}".format(met))

ax[i].set_xlabel("epochs")

ax[i].set_ylabel(met)

ax[i].legend(["train", "val"])

# Evaluation using test data

test_scores = modelvgg.evaluate(test_data, test_labels)

print("Testing Accuracy: %.2f%%" % (test_scores[1] * 100))

pred_labels = modelvgg.predict(test_data)

# # **Confusion matrix**

pred_ls = np.argmax(pred_labels, axis=1)

test_ls = np.argmax(test_labels, axis=1)

conf_arr = confusion_matrix(test_ls, pred_ls)

plt.figure(figsize=(8, 6), dpi=80, facecolor="w", edgecolor="k")

ax = sns.heatmap(

conf_arr,

cmap="Greens",

annot=True,

fmt="d",

xticklabels=classnames,

yticklabels=classnames,

)

plt.title("Alzheimer's Disease Diagnosis")

plt.xlabel("Prediction")

plt.ylabel("Truth")

plt.show(ax)

# # **Classification report**

print(classification_report(test_ls, pred_ls, target_names=classnames))

# # **Save the model for a Mobile app as tflite**

export_dir = "/kaggle/working/"

tf.saved_model.save(modelvgg, export_dir)

tflite_model_name = "alzheimerfinaly.tflite"

# Convert the model.

converter = tf.lite.TFLiteConverter.from_saved_model(export_dir)

converter.optimizations = [tf.lite.Optimize.OPTIMIZE_FOR_SIZE]

tflite_model = converter.convert()

tflite_model_file = pathlib.Path(tflite_model_name)

tflite_model_file.write_bytes(tflite_model)

# # **Save the model for a Web app as hdf5**

tf.keras.models.save_model(modelvgg, "Alzheimer_finaly.hdf5")

|

# # Tracking COVID-19 from New York City wastewater

# **TABLE OF CONTENTS**

# * [1. Introduction](#chapter_1)

# * [2. Data exploration](#chapter_2)

# * [3. Analysis](#chapter_3)

# * [4. Baseline model](#chapter_4)

# ## 1. Introduction

# The **New York City OpenData Project** (*link:* __[project home page](https://opendata.cityofnewyork.us)__) has hundreds of open, New York-related datasets available for everyone to use. On the website, all datasets are labeled by different city functions (business, government, education etc.).

# While browsing through different subcategories, I came across data by the Department of Environmental Protection (DEP). One dataset they had made available in public concerned the SARS-CoV-2 gene level concentrations measured in NYC wastewater (*link:* __[dataset page](https://data.cityofnewyork.us/Health/SARS-CoV-2-concentrations-measured-in-NYC-Wastewat/f7dc-2q9f)__). As one can guess, SARS-CoV-2 is the virus causing COVID-19.

# Since I had earlier used the NYC data on COVID (*link:* __[dataset page](https://data.cityofnewyork.us/Health/COVID-19-Daily-Counts-of-Cases-Hospitalizations-an/rc75-m7u3)__) in several notebooks since the pandemic, I decided to create a new notebook combining these two datasets.

# This notebook is a brief exploratory analysis on the relationship between the COVID-causing virus concentrations in NYC wastewater and actual COVID-19 cases detected in New York. Are these two related, and if so, how? Let's find out.

# *All data sources are read directly from the OpenData Project website, so potential errors are caused by temporary issues (update etc.) in the website data online availability.*

# **April 13th, 2023

# Jari Peltola**

# ******

# ## 2. Data Exploration

# import modules

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

from sklearn.ensemble import RandomForestRegressor

from sklearn.model_selection import train_test_split

from sklearn.preprocessing import MinMaxScaler

from sklearn.metrics import r2_score, mean_absolute_error, mean_squared_error

from sklearn.model_selection import cross_val_score

# set column and row display

pd.set_option("display.max_columns", None)

pd.set_option("display.max_rows", None)

# disable warnings

pd.options.mode.chained_assignment = None

# wastewater dataset url

url = "https://data.cityofnewyork.us/api/views/f7dc-2q9f/rows.csv?accessType=DOWNLOAD"

# read the data from url

data = pd.read_csv(url, low_memory=False)

# drop rows with NaN values

data = data.dropna()

# reset index

data.reset_index(drop=True, inplace=True)

data.head()

data.shape

# A large metropolis area such as New York City has several wastewater resource recovery facilities (WRRF). Each of these facilities takes care of a specific area in the city's wastewater system (*link:* __[list of NYC wastewater treatment facilities](https://www.nyc.gov/site/dep/water/wastewater-treatment-plants.page)__). The 14 facilities serve city population ranging from less than one hundred thousand (Rockaway) up to one million people (Newtown Creek, Wards Island).

# We start by adding some additional data from the webpage linked above. For each facility, the added data includes receiving waterbody, drainage acres as well as verbal description of the drainage area. This is done to get a better comprehension of where exactly in the city each facility is located. Another possible solution would be to create some kind of map of all WRRF facilities in the area, but since we are actually dealing with water with ever-evolving drainage instead of buildings, this approach would not in my opinion work too well.

# It's good to keep the original data intact, so first we make a list **wrrf_list** consisting of all unique WRRF names, create a new dataframe **df_location** and add the facility names as a column **WRRF Name**.

# create list of all pickup locations

wrrf_list = data["WRRF Name"].unique()

# create new dataframe with empty column

df_location = pd.DataFrame(columns=["WRRF Name"])

# add column values from list

df_location["WRRF Name"] = np.array(wrrf_list)

df_location.head()

df_location["WRRF Name"].unique()

# Next we add three more columns including the additional data.

# list of receiving waterbodies

Receiving_Waterbody = [

"Jamaica Bay",

"Jamaica Bay",

"East River",

"Jamaica Bay",

"Upper East River",

"Jamaica Bay",

"Lower East River",

"Hudson River",

"Upper New York Bay",

"Lower New York Bay",

"Upper East River",

"Upper East River",

"Kill Van Kull",

"Upper East River",

]

# list of drainage acres

Drainage_Acres = [

5907,

15087,

15656,

6259,

16860,

25313,

3200,

6030,

12947,

10779,

16664,

12056,

9665,

15203,

]

# list of drainage areas

Drainage_Area = [

"Eastern section of Brooklyn, near Jamaica Bay",

"South and central Brooklyn",

"South and eastern midtown sections of Manhattan, northeast section of Brooklyn and western section of Queens",

"Rockaway Peninsula",

"Northeast section of Queens",

"Southern section of Queens",

"Northwest section of Brooklyn and Governors Island",

"West side of Manhattan above Bank Street",

"Western section of Brooklyn",

"Southern section of Staten Island",

"Eastern section of the Bronx",

"Western section of the Bronx and upper east side of Manhattan",

"Northern section of Staten Island",

"Northeast section of Queens",

]

# add new columns

df_location["Receiving_Waterbody"] = np.array(Receiving_Waterbody)

df_location["Drainage_Acres"] = np.array(Drainage_Acres)

df_location["Drainage_Area"] = np.array(Drainage_Area)

df_location.head()

# Now we may merge the **df_location** dataframe with our original data. For this we can use the common column **WRRF Name**. For clarity we keep calling also the merged results **data**.

# merge dataframes

data = pd.merge(data, df_location, on="WRRF Name", how="left")

data.dtypes

data.head()

# The date data we have is not in datetime format, so we will take care of that next, along with renaming some columns and dropping others.

# column to datetime

data["Test date"] = pd.to_datetime(data["Test date"])

# rename columns by their index location

data = data.rename(columns={data.columns[1]: "TestDate"})

data = data.rename(columns={data.columns[4]: "SARS_CoV2_Concentration"})

data = data.rename(columns={data.columns[5]: "SARS_CoV2_Concentration_PerCap"})

data = data.rename(columns={data.columns[7]: "Population"})

# drop columns by their index location

data = data.drop(data.columns[[0, 3, 6]], axis=1)

data.head()

# Let's take a look at the COVID concentration when some specific WRRF facilities and their respective waterbodies are concerned. For this example, we choose the **Coney Island** and **Jamaica Bay** facilities and plot the result.

# select facility data to two dataframes

df_coney = data.loc[data["WRRF Name"] == "Coney Island"]

df_jamaica = data.loc[data["WRRF Name"] == "Jamaica Bay"]

# set figure size

plt.figure(figsize=(10, 8))

# set parameters

plt.plot(

df_coney.TestDate,

df_coney.SARS_CoV2_Concentration,

label="Coney Island",

linewidth=3,

)

plt.plot(

df_jamaica.TestDate,

df_jamaica.SARS_CoV2_Concentration,

color="red",

label="Jamaica Bay",

linewidth=3,

)

# add title and axis labels

plt.title(

"COVID virus concentration (Coney Island, Jamaica Bay)", weight="bold", fontsize=16

)

plt.xlabel("Date", weight="bold", fontsize=14)

plt.ylabel("Concentration", weight="bold", fontsize=14)

# add legend

plt.legend()

plt.show()

# We can see that the COVID virus concentration in the two selected wastewater facilites is for the most time pretty similar. The big exception is the New Year period 2021-2022, when Coney Island recorded a significant concentration spike, but in Jamaica Bay the change was much more moderate.

# Next we bring in the New York City COVID-19 dataset and upload it as dataframe **covid_cases**.

# COVID-19 dataset url

url = "https://data.cityofnewyork.us/api/views/rc75-m7u3/rows.csv?accessType=DOWNLOAD"

# read the data from url

covid_cases = pd.read_csv(url, low_memory=False)

# drop rows with NaN values

covid_cases = covid_cases.dropna()

covid_cases.head()

covid_cases.dtypes

# One thing we can see is that the COVID-19 data includes both overall data and more specific figures on the city's five boroughs (the Bronx, Brooklyn, Manhattan, Queens, Staten Island).

# In order to merge the data, we rename and reformat the **date_of_interest** column to fit our existing data, since that's the common column we will use.

# rename column

covid_cases = covid_cases.rename(columns={covid_cases.columns[0]: "TestDate"})

# change format to datetime

covid_cases["TestDate"] = pd.to_datetime(covid_cases["TestDate"])

# merge dataframes

data = pd.merge(data, covid_cases, on="TestDate", how="left")

data.head()

data.shape

# What we don't know is the workload of different facilities when it comes to wastewater treatment. Let's see that next.

# check percentages

wrrf_perc = data["WRRF Name"].value_counts(normalize=True) * 100

wrrf_perc

# The percentages tell us that there is no dominant facility when it comes to wastewater treatment in New York City: it's all one big puzzle with relatively equal pieces.

# Now we are ready to find out more about the potential relationship between COVID virus concentration in wastewater and actual COVID cases.

# ******

# ## 3. Analysis

# First it would be good to know more about boroughs and their different wastewater facilities. Mainly it would be useful to find out if there are differences between wastewater treatment facilities and their measured COVID virus concentration levels when a particular borough is concerned.

# Let's take Brooklyn for example. Based on the verbal descriptions of different wastewater facilities, Brooklyn area is mostly served by the **26th Ward**, **Coney Island** and **Owl's Head** facilities. Next we select those three locations with all necessary columns to dataframe **brooklyn_data**.

# select Brooklyn data

brooklyn_data = data.loc[

data["WRRF Name"].isin(["26th Ward", "Coney Island", "Owls Head"])

]

# select columns

brooklyn_data = brooklyn_data.iloc[:, np.r_[0:8, 29:40]]

brooklyn_data.shape

brooklyn_data.head()

# To access the data more conveniently, we take a step back and create separate dataframes for the three facilities before plotting the result.

# create dataframes

df_26th = brooklyn_data.loc[brooklyn_data["WRRF Name"] == "26th Ward"]

df_coney = brooklyn_data.loc[brooklyn_data["WRRF Name"] == "Coney Island"]

df_owls = brooklyn_data.loc[brooklyn_data["WRRF Name"] == "Owls Head"]

# set figure size

plt.figure(figsize=(10, 8))

# set parameters

plt.plot(df_26th.TestDate, df_26th.SARS_CoV2_Concentration, label="26th", linewidth=3)

plt.plot(

df_coney.TestDate,

df_coney.SARS_CoV2_Concentration,

color="red",

label="Coney Island",

linewidth=3,

)

plt.plot(

df_owls.TestDate,

df_owls.SARS_CoV2_Concentration,

color="green",

label="Owls Head",

linewidth=3,

)

# add title and axis labels

plt.title(

"Virus concentration (26th Ward, Coney Island, Owls Head)",

weight="bold",

fontsize=16,

)

plt.xlabel("Date", weight="bold", fontsize=14)

plt.ylabel("Concentration", weight="bold", fontsize=14)

# add legend

plt.legend()

plt.show()

# There are some differences in concentration intensity, but all in all the figures pretty much follow the same pattern. Also, one must remember that for example the Coney Island facility serves population about twice as large as the Owl's Head facility. Then again, this makes the data from beginning of year 2023 even more intriguing, since Owl's Head had much larger COVID concentration then compared to other two Brooklyn facilities.

# Next we plot the third Brooklyn facility (26th Ward) data and compare it to the 7-day average of hospitalized COVID-19 patients in the area. It is notable that in the plot the concentration is multiplied by 0.01 to make a better visual fit with patient data. This change is made merely for plotting purposes and does not alter the actual values in our data.

plt.figure(figsize=(10, 8))

plt.plot(

df_26th.TestDate,

df_26th.SARS_CoV2_Concentration * 0.01,

label="CoV-2 concentration",

linewidth=3,

)

plt.plot(

df_26th.TestDate,

df_26th.BK_HOSPITALIZED_COUNT_7DAY_AVG,

color="red",

label="Hospitalizations avg",

linewidth=3,

)

# add title and axis labels

plt.title(

"Virus concentration in 26th Ward and COVID hospitalizations",

weight="bold",

fontsize=16,

)

plt.xlabel("Date", weight="bold", fontsize=14)

plt.ylabel("Count", weight="bold", fontsize=14)

# add legend

plt.legend()

# display plot

plt.show()

# The two lines definitely share a similar pattern, which in the end is not that surprising considering they actually describe two different viewpoints to the same phenomenon (COVID-19 pandemic/endemic).

# Next we take a closer look at the first 18 months of the COVID-19 pandemic and narrow our date selection accordingly. Also, we change our COVID-19 measurement to daily hospitalized patients instead of a weekly average.

# mask dataframe

start_date = "2020-01-01"

end_date = "2021-12-31"

# wear a mask

mask = (df_26th["TestDate"] >= start_date) & (df_26th["TestDate"] < end_date)

df_26th_mask = df_26th.loc[mask]

plt.figure(figsize=(10, 8))

plt.plot(

df_26th_mask.TestDate,

df_26th_mask.SARS_CoV2_Concentration * 0.01,

label="CoV-2 concentration",

linewidth=3,

)

plt.plot(

df_26th_mask.TestDate,

df_26th_mask.BK_HOSPITALIZED_COUNT,

color="red",

label="Hospitalizations",

linewidth=3,

)

plt.title(

"26th Ward virus concentration and daily hospitalizations",

weight="bold",

fontsize=16,

)

plt.xlabel("Date", weight="bold", fontsize=14)

plt.ylabel("Count", weight="bold", fontsize=14)

plt.legend()

plt.show()

# Now the similarities are even more clear. Taking a look at the late 2020/early 2021 situation, it seems that **some sort of threshold of daily COVID virus concentration difference in wastewater might actually become a valid predictor for future hospitalizations**, if one omitted the viewpoint of a ML model constructor.

# In this notebook we will not go that far, but next a simple baseline model is created as a sort of first step toward that goal.

# ******

# ## 4. Baseline model

# The regressive baseline model we will produce is all about comparing relative (per capita) COVID virus concentration in wasterwater with different features presented in COVID-19 patient data.

# For our baseline model we will use wastewater data from all five boroughs and their wastewater facilities, meaning we must return to our original **data**.

data.head(2)

data.shape

# As we need only some of the numerical data here, next we select the approriate columns to a new dataframe **df_model**.

# select columns by index

df_model = data.iloc[:, np.r_[3, 8:18]]

df_model.head()

df_model.dtypes

# To ensure better compatibility between different data features, the dataframe is next scaled with MinMaxScaler. This is not a necessary step, but as the COVID virus concentration per capita values are relatively large compared to COVID patient data, by scaling all data we may get a bit better results in actual baseline modeling.

# scale data

scaler = MinMaxScaler()

scaler.fit(df_model)

scaled = scaler.fit_transform(df_model)

scaled_df = pd.DataFrame(scaled, columns=df_model.columns)

scaled_df.head()

# plot scatterplot of COVID-19 case count and virus concentration level

scaled_df.plot(kind="scatter", x="SARS_CoV2_Concentration_PerCap", y="CASE_COUNT")

plt.show()

# Some outliers excluded, the data we have is relatively well concentrated and should therefore fit regressive model pretty well. Just to make sure, we drop potential NaN rows before proceeding.

# drop NaN rows

data = scaled_df.dropna()

# set random seed

np.random.seed(42)

# select features

X = data.drop("SARS_CoV2_Concentration_PerCap", axis=1)

y = data["SARS_CoV2_Concentration_PerCap"]

# separate data into training and validation sets

X_train, X_test, y_train, y_test = train_test_split(

X, y, train_size=0.8, test_size=0.2, random_state=1

)

# define model

model = RandomForestRegressor()

# fit model

model.fit(X_train, y_train)

# Now we can make predictions with the model based on our data.

y_preds = model.predict(X_test)

print("Regression model metrics on test set")

print(f"R2: {r2_score(y_test, y_preds)}")

print(f"MAE: {mean_absolute_error(y_test, y_preds)}")

print(f"MSE: {mean_squared_error(y_test, y_preds)}")

# The worst R2 score is basically minus infinity and the perfect score 1, with zero predicting the mean value every time. Taking this into account, the score of about 0.5 (the exact result changes every time the notebook is run as the online datasets are constantly updated) for a test set score is quite typical when using only a simple baseline fit.

# Also, as seen below, the score on train dataset is significantly better than the one of test dataset. As we had less than 500 datapoints i.e. rows overall for the baseline model to learn from, this is in the end not a big surprise.

print("Train score:")

print(model.score(X_train, y_train))

print("Test score:")

print(model.score(X_test, y_test))

# We can also use the cross-valuation score to run the same baseline model several times and take the average R2 score of the process. In this case we will run the model five times (cv=5).

np.random.seed(42)

# create regressor

rf_reg = RandomForestRegressor(n_estimators=100)

# create five models for cross-valuation

# setting "scoring=None" uses default scoring parameter

# cross_val_score function's default scoring parameter is R2

cv_r2 = cross_val_score(rf_reg, X_train, y_train, cv=5, scoring=None)

# print average R2 score of five baseline regressor models

np.mean(cv_r2)

|

# ## Project 4

# We're going to start with the dataset from Project 1.

# This time the goal is to compare data wrangling runtime by either using **Pandas** or **Polar**.

data_dir = "/kaggle/input/project-4-dataset/data-p1"

sampled = False

path_suffix = "" if not sampled else "_sampled"

from time import time

import pandas as pd

import numpy as np

import polars as pl

from polars import col

def print_time(t):

"""Function that converts time period in seconds into %m:%s:%ms expression.

Args:

t (int): time period in seconds

Returns:

s (string): time period formatted

"""

ms = t * 1000

m, ms = divmod(ms, 60000)

s, ms = divmod(ms, 1000)

return "%dm:%ds:%dms" % (m, s, ms)

# ## Load data

# #### Pandas

start = time()

pandas_data = pd.read_csv(f"{data_dir}/transactions_data{path_suffix}.csv")

print("\nProcessing took {}".format(print_time(time() - start)))

start = time()

pandas_data["date"] = pd.to_datetime(pandas_data["date"])

print("\nProcessing took {}".format(print_time(time() - start)))

## Create sales column

start = time()

pandas_data = (

pandas_data.groupby(

[

pandas_data.date.dt.date,

"id",

"item_id",

"dept_id",

"cat_id",

"store_id",

"state_id",

]

)

.agg("count")

.rename(columns={"date": "sales"})

.reset_index()

.assign(date=lambda df: pd.to_datetime(df.date))

)

print("\nProcessing took {}".format(print_time(time() - start)))

## Convert data types

start = time()

pandas_data = pandas_data.assign(

id=pandas_data.id.astype("category"),

item_id=pandas_data.item_id.astype("category"),

dept_id=pandas_data.dept_id.astype("category"),

cat_id=pandas_data.cat_id.astype("category"),

store_id=pandas_data.store_id.astype("category"),

state_id=pandas_data.state_id.astype("category"),

)

print("\nProcessing took {}".format(print_time(time() - start)))

## Filling with zeros

start = time()

pandas_data = pandas_data.set_index(["date", "id"])

min_date, max_date = (

pandas_data.index.get_level_values("date").min(),

pandas_data.index.get_level_values("date").max(),

)

dates_to_select = pd.date_range(min_date, max_date, freq="1D")

ids = pandas_data.index.get_level_values("id").unique()

index_to_select = pd.MultiIndex.from_product(

[dates_to_select, ids], names=["date", "id"]

)

def fill_category_nans(df, col_name, level_start, level_end):

return np.where(

df[col_name].isna(),

df.index.get_level_values("id")

.str.split("_")

.str[level_start:level_end]

.str.join("_"),

df[col_name],

)

pandas_data = (

pandas_data.reindex(index_to_select)

.fillna({"sales": 0})

.assign(

sales=lambda df: df.sales.astype("int"),

item_id=lambda df: fill_category_nans(df, "item_id", 0, 3),

dept_id=lambda df: fill_category_nans(df, "dept_id", 0, 2),

cat_id=lambda df: fill_category_nans(df, "cat_id", 0, 1),

store_id=lambda df: fill_category_nans(df, "store_id", 3, 5),

state_id=lambda df: fill_category_nans(df, "state_id", 3, 4),

)

.assign(

item_id=lambda df: df.item_id.astype("category"),

dept_id=lambda df: df.dept_id.astype("category"),

cat_id=lambda df: df.cat_id.astype("category"),

store_id=lambda df: df.store_id.astype("category"),

state_id=lambda df: df.state_id.astype("category"),

)

)

print("\nProcessing took {}".format(print_time(time() - start)))

# #### Polars

start = time()

transactions_pl = pl.read_csv(f"{data_dir}/transactions_data{path_suffix}.csv")

print("\nProcessing took {}".format(print_time(time() - start)))

start = time()

transactions_pl = transactions_pl.with_columns(

pl.col("date").str.strptime(pl.Date, fmt="%Y-%m-%d %H:%M:%S", strict=False)

)

print("\nProcessing took {}".format(print_time(time() - start)))

## Create sales column

start = time()

polars_data = (

transactions_pl.lazy()

.with_column(pl.lit(1).alias("sales"))

.groupby(["date", "id", "item_id", "dept_id", "cat_id", "store_id", "state_id"])

.agg(pl.col("sales").sum())

.collect()

)

print("\nProcessing took {}".format(print_time(time() - start)))

## Convert data types

start = time()

polars_data = (

polars_data.lazy()

.with_columns(

[

pl.col("id").cast(pl.Categorical),

pl.col("item_id").cast(pl.Categorical),

pl.col("dept_id").cast(pl.Categorical),

pl.col("cat_id").cast(pl.Categorical),

pl.col("store_id").cast(pl.Categorical),

pl.col("state_id").cast(pl.Categorical),

]

)

.collect()

)

print("\nProcessing took {}".format(print_time(time() - start)))

## Filling with zeros

start = time()

min_date, max_date = (

polars_data.with_columns(pl.col("date")).min()["date"][0],

polars_data.with_columns(pl.col("date")).max()["date"][0],

)

dates_to_select = pl.date_range(min_date, max_date, "1d")

# df with all combinations of daily dates and ids

date_id_df = pl.DataFrame({"date": dates_to_select}).join(

polars_data.select(pl.col("id").unique()), how="cross"

)

# join with original df

polars_data = polars_data.join(date_id_df, on=["date", "id"], how="outer").sort(

"id", "date"

)

# create tmp columns to assemble strings from item_id to fill columns for cells with null values

polars_data = (

polars_data.lazy()

.with_columns(

[

col("id"),

*[

col("id").apply(lambda s, i=i: s.split("_")[i]).alias(col_name)

for i, col_name in enumerate(["1", "2", "3", "4", "5", "6"])

],

]

)

.drop(["item_id", "dept_id", "cat_id", "store_id", "state_id"])

.collect()

)

# concat string components

item_id = polars_data.select(

pl.concat_str(

[

pl.col("1"),

pl.col("2"),

pl.col("3"),

],

separator="_",

).alias("item_id")

)

dept_id = polars_data.select(

pl.concat_str(

[

pl.col("1"),

pl.col("2"),

],

separator="_",

).alias("dept_id")

)

cat_id = polars_data.select(

pl.concat_str(

[

pl.col("1"),

],

separator="_",

).alias("cat_id")

)

store_id = polars_data.select(

pl.concat_str(

[

pl.col("4"),

pl.col("5"),

],

separator="_",

).alias("store_id")

)

state_id = polars_data.select(

pl.concat_str(

[

pl.col("4"),

],

separator="_",

).alias("state_id")

)

# fill sales columns with null values with 0

polars_data = (

polars_data.lazy()

.with_column(

pl.col("sales").fill_null(0),

)

.collect()

)

# recreate other columns with the string components

polars_data = (

pl.concat(

[polars_data, item_id, dept_id, cat_id, store_id, state_id], how="horizontal"

)

.drop(["1", "2", "3", "4", "5", "6"])

.sort("date", "id")

)

print("\nProcessing took {}".format(print_time(time() - start)))

# #### Comparison

polars_data.sort("sales", descending=True).head()

len(polars_data)

pandas_data.reset_index(drop=True).sort_values(by=["sales"], ascending=False).head()

len(pandas_data)

|

# This notebook reveals my solution for __RFM Analysis Task__ offered by Renat Alimbekov.

# This task is part of the __Task Series__ for Data Analysts/Scientists

# __Task Series__ - is a rubric where Alimbekov challenges his followers to solve tasks and share their solutions.

# So here I am :)

# Original solution can be found at - https://alimbekov.com/rfm-python/

# The task is to perform RFM Analysis.

# * __olist_orders_dataset.csv__ and __olist_order_payments_dataset.csv__ should be used

# * order_delivered_carrier_date - should be used in this task

# * Since the dataset is not actual by 2021, thus we should assume that we were asked to perform RFM analysis the day after the last record

# # Importing the modules

import pandas as pd

import numpy as np

import squarify

import matplotlib.pyplot as plt

import seaborn as sns

plt.style.use("ggplot")

import warnings

warnings.filterwarnings("ignore")

# # Loading the data

orders = pd.read_csv("../input/brazilian-ecommerce/olist_orders_dataset.csv")

payments = pd.read_csv("../input/brazilian-ecommerce/olist_order_payments_dataset.csv")

# # Dataframes join

orders["order_delivered_carrier_date"] = pd.to_datetime(

orders["order_delivered_carrier_date"]

) # datetime conversion

payments = payments.set_index("order_id") # preparation before the join

orders = orders.set_index("order_id") # preparation before the join

joined = orders.join(payments) # join on order_id

joined.isna().sum().sort_values(ascending=False)

joined.nunique().sort_values(ascending=False)

# It seems like we have missing values. And unfortunately order_delivered_carrier_date also has missing values. Thus, they should be dropped

last_date = joined["order_delivered_carrier_date"].max() + pd.to_timedelta(1, "D")

RFM = (

joined.dropna(subset=["order_delivered_carrier_date"])

.reset_index()

.groupby("customer_id")

.agg(

Recency=("order_delivered_carrier_date", lambda x: (last_date - x.max()).days),

Frequency=("order_id", "size"),

Monetary=("payment_value", "sum"),

)

)

# Sanity check - do we have NaN values or not?

RFM.isna().sum()

RFM.describe([0.01, 0.05, 0.1, 0.25, 0.5, 0.75, 0.9, 0.95, 0.99]).T

# So, here we can see that we have some outliers in Freqency and Monetary groups. Thus, they should be dropped and be analyzed separately

# # Recency

plt.figure(figsize=(12, 6))

sns.boxplot(x="Recency", data=RFM)

plt.title("Boxplot of Recency")

# # Frequency

RFM["Frequency"].value_counts(normalize=True) * 100

# I guess here we should select only frequency values that are greater than 5, because by doing this we only drop 0.11% of records

RFM["Frequency"].apply(

lambda x: "less or equal to 5" if x <= 5 else "greater than 5"

).value_counts(normalize=True) * 100

RFM = RFM[RFM["Frequency"] <= 5]

# # Monetary

RFM["Monetary"].describe([0.25, 0.5, 0.75, 0.9, 0.95, 0.99])

# Here, it seems like 95% percentile should be used to drop the outliers

plt.figure(figsize=(12, 6))

plt.title("Distribution of Monetary < 95%")

sns.distplot(RFM[RFM["Monetary"] < 447].Monetary)

RFM = RFM[RFM["Monetary"] < 447]

# # RFM groups

# I have used quantiles for assigning scores for Recency and Monetary.

# * groups are 0-33, 33-66, 66-100 quantiles

# For Frequency I have decided to group them by hand

# * score=1 if the frequency value is 1

# * otherwise, the score will be 2

RFM["R_score"] = pd.qcut(RFM["Recency"], 3, labels=[1, 2, 3]).astype(str)

RFM["M_score"] = pd.qcut(RFM["Monetary"], 3, labels=[1, 2, 3]).astype(str)

RFM["F_score"] = RFM["Frequency"].apply(lambda x: "1" if x == 1 else "2")

RFM["RFM_score"] = RFM["R_score"] + RFM["F_score"] + RFM["M_score"]

# 1. CORE - '123' - most recent, frequent, revenue generating - core customers that should be considered as most valuable clients

# 2. GONE - '311', '312', '313' - gone, one-timers - those clients are probably gone;

# 3. ROOKIE - '111', '112', '113' - just have joined - new clients that have joined recently

# 4. WHALES - '323', '213', '223 - most revenue generating - whales that generate revenue

# 5. LOYAL - '221', '222', '321', '322' - loyal users

# 6. REGULAR - '121', '122', '211', '212', - average users - just regular customers that don't stand out

#

def segment(x):

if x == "123":

return "Core"

elif x in ["311", "312", "313"]:

return "Gone"

elif x in ["111", "112", "113"]:

return "Rookies"

elif x in ["323", "213", "223"]:

return "Whales"

elif x in ["221", "222", "321", "322"]:

return "Loyal"

else:

return "Regular"

RFM["segments"] = RFM["RFM_score"].apply(segment)

RFM["segments"].value_counts(normalize=True) * 100

segmentwise = RFM.groupby("segments").agg(

RecencyMean=("Recency", "mean"),

FrequencyMean=("Frequency", "mean"),

MonetaryMean=("Monetary", "mean"),

GroupSize=("Recency", "size"),

)

segmentwise

font = {"family": "normal", "weight": "normal", "size": 18}

plt.rc("font", **font)

fig = plt.gcf()

ax = fig.add_subplot()

fig.set_size_inches(16, 16)

squarify.plot(

sizes=segmentwise["GroupSize"],

label=segmentwise.index,

color=["gold", "teal", "steelblue", "limegreen", "darkorange", "coral"],

alpha=0.8,

)

plt.title("RFM Segments", fontsize=18, fontweight="bold")

plt.axis("off")

plt.show()

# # Cohort Analysis

#

from operator import attrgetter

joined["order_purchase_timestamp"] = pd.to_datetime(joined["order_purchase_timestamp"])

joined["order_months"] = joined["order_purchase_timestamp"].dt.to_period("M")

joined["cohorts"] = joined.groupby("customer_id")["order_months"].transform("min")

cohorts_data = (

joined.reset_index()

.groupby(["cohorts", "order_months"])

.agg(

ClientsCount=("customer_id", "nunique"),

Revenue=("payment_value", "sum"),

Orders=("order_id", "count"),

)

.reset_index()

)

cohorts_data["periods"] = (cohorts_data.order_months - cohorts_data.cohorts).apply(

attrgetter("n")

) # periods for which the client have stayed

cohorts_data.head()

# Since, majority of our clients are not recurring ones, we can't perform proper cohort analysis on retention and other possible metrics.

# Fortunately, we can analyze dynamics of the bussiness and maybe will be even able to identify some relatively good cohorts that might be used as a prototype (e.g. by marketers).

font = {"family": "normal", "weight": "normal", "size": 12}

plt.rc("font", **font)

fig, (ax1, ax2) = plt.subplots(1, 2, figsize=(24, 6)) # for 2 parrallel plots

cohorts_data.set_index("cohorts").Revenue.plot(ax=ax1)

ax1.set_title("Cohort-wise revenue")

cohorts_data.set_index("cohorts").ClientsCount.plot(ax=ax2, c="b")

ax2.set_title("Cohort-wise clients counts")

# The figure above reveals the dynamics of Revenue and Number of Clients per cohort.

# On the left side we can see Revenue plot and on the right side can see ClientsCount plot.

# Overall, we can come to the next conclusions:

# * dynamics of two graphs are almost identical. Thus, it seems like the Average Order Amount was the same almost for each cohort. It could mean that the only way to get more revenue is to get more clients. Also, we know that we have 97% of non-recurring clients, thus maybe resolving this issue and stimulating customers to comeback would also result in revenue increase

# * I suspect that we don't have the full data for the last several months, because we can see abnormal drop. Thus, these last months shouldn't be taken into considerations

# * Cohort of November-2017 looks like out of trend, since this cohort showed outstanding results. It can be due to Black Friday sales that often happen at Novembers, or maybe during the November of 2017 some experimental marketing campaigns were performed that lead to good results. Thus, this cohort should be investigated by the company in order to identify the reason behind such an outstanding result, and take it into account

ig, (ax1, ax2) = plt.subplots(1, 2, figsize=(24, 6))

(cohorts_data["Revenue"] / cohorts_data["Orders"]).plot(ax=ax1)

ax1.set_title("Average Order Amount per cohort")

sns.boxplot((cohorts_data["Revenue"] / cohorts_data["Orders"]), ax=ax2)

ax2.set_title("Boxplot of the Average Order Amount")

|

import numpy as np

import pandas as pd

import seaborn as sns

import matplotlib.pyplot as plt

from sklearn.preprocessing import LabelEncoder

from sklearn.ensemble import RandomForestRegressor

from sklearn.metrics import r2_score

from sklearn.model_selection import train_test_split, GridSearchCV, cross_val_score

# from bayes_opt import BayesianOptimization

import xgboost as xgb

import warnings

warnings.filterwarnings("ignore")

# ## 1. Data Preprocessing

train = pd.read_csv(

"/kaggle/input/house-prices-advanced-regression-techniques/train.csv"

)

test = pd.read_csv("/kaggle/input/house-prices-advanced-regression-techniques/test.csv")

# combining training set and testing set

df = train.drop(["SalePrice"], axis=1).append(test, ignore_index=True)

# check data types and missing values

df.info()

# 80 predictors in the data frame.

# Data types are string, integer, and float.

# Variables like 'Alley','FireplaceQu','PoolQC','Fence','MiscFeature' contains limited information (too much missing values). But note that some NA values represent "no such equipment" not missing values. (see data description)

# ### 1.1 Missing value analysis

# extract columns contain any null values

missing_values = df.columns[df.isna().any()].tolist()

df[missing_values].info()

# check number of missing values

df[missing_values].isnull().sum()

# Some columns contain limited information (only 198 records in Alley), but note that some of them represent "no such equipment/facility" not missing values (see data description).

# It is true that some predictors there contains a mixing of missing values and "no equipment/facility". "BsmtQual", "BsmtCond" and "BsmtExposure" supposed to be same counts and same number of NAs if no missing values inside.

# To fix this problem I will assume all of the missing values in **some predictors** as "no equipment/facility" if NA is explained in the description file.

# ### 1.2 Missing values imputation (part 1)

df["Alley"] = df["Alley"].fillna("No Access")

df["MasVnrType"] = df["MasVnrType"].fillna("None")

df["BsmtQual"] = df["BsmtQual"].fillna("No Basement")

df["FireplaceQu"] = df["FireplaceQu"].fillna("No Fireplace")

df["GarageType"] = df["GarageType"].fillna("No Garage")

df["PoolQC"] = df["PoolQC"].fillna("No Pool")

df["Fence"] = df["Fence"].fillna("No Fence")

df["MiscFeature"] = df["MiscFeature"].fillna("None")

# the following predictors are linked to the above predictors, should apply a further analysis

# df['BsmtCond'].fillna('No Basement')

# df['BsmtExposure'].fillna('No Basement')

# df['BsmtFinType1'].fillna('No Basement')

# df['BsmtFinType2'].fillna('No Basement')

# df['GarageFinish'].fillna('No Garage')

#

# Now consider other variables (in the comment), there are relationship between variables like 'BsmQual' and 'BsmtCond': only if the value of 'BsmQual' is "no equipment", another should be considered "no equipment" as well.

# check the remaining missing values

missing_values = df.columns[df.isna().any()].tolist()

df[missing_values].isnull().sum()

# clean categorical variables

# fill missing values in 'BsmtCond' column where 'BamtQual' column is 'No Basement'

df.loc[df["BsmtQual"] == "No Basement", "BsmtCond"] = df.loc[

df["BsmtQual"] == "No Basement", "BsmtCond"

].fillna("No Basement")

df.loc[df["BsmtQual"] == "No Basement", "BsmtExposure"] = df.loc[

df["BsmtQual"] == "No Basement", "BsmtExposure"

].fillna("No Basement")

df.loc[df["BsmtQual"] == "No Basement", "BsmtFinType1"] = df.loc[

df["BsmtQual"] == "No Basement", "BsmtFinType1"

].fillna("No Basement")

df.loc[df["BsmtQual"] == "No Basement", "BsmtFinType2"] = df.loc[

df["BsmtQual"] == "No Basement", "BsmtFinType2"

].fillna("No Basement")

df.loc[df["GarageType"] == "No Garage", "GarageFinish"] = df.loc[

df["GarageType"] == "No Garage", "GarageFinish"

].fillna("No Garage")

df.loc[df["GarageType"] == "No Garage", "GarageQual"] = df.loc[

df["GarageType"] == "No Garage", "GarageQual"

].fillna("No Garage")

df.loc[df["GarageType"] == "No Garage", "GarageCond"] = df.loc[

df["GarageType"] == "No Garage", "GarageCond"

].fillna("No Garage")

# clean numerical variables

# fill missing values in 'MasVnrArea' columns where 'MasVnrType' column is 'None'

df.loc[df["MasVnrType"] == "None", "MasVnrArea"] = df.loc[

df["MasVnrType"] == "None", "MasVnrArea"

].fillna(0)

df.loc[df["GarageType"] == "No Garage", "GarageYrBlt"] = df.loc[

df["GarageType"] == "No Garage", "GarageYrBlt"

].fillna(0)

missing_values = df.columns[df.isna().any()].tolist()

df[missing_values].isnull().sum()

# Now most of them only contains one or two missing values.

# Now let's check 'BsmtFinSF1', 'BsmtFinSF2', and 'TotalBsmtSF', since there are only one missing value

df.loc[df["BsmtFinSF1"].isna()].iloc[:, 30:39]

# They are from the same observation index = 2120, since BsmtQual and other variables are labels as "No basement", the Nan values can be labeled as '0'

df.loc[2120] = df.loc[2120].fillna(0)

# check na values in a row

df.loc[2120].isnull().sum()

missing_values = df.columns[df.isna().any()].tolist()

df[missing_values].isnull().sum()

df[missing_values].info()

# ### 1.3 Missing value imputation (part 2)

# Now that most of them only contains 1 or 2 missing values, I will check the distribution of the variables to see what imputation method is more reasonable.

# categorical variables

v1 = [

"MSZoning",

"Utilities",

"Exterior1st",

"Exterior2nd",

"BsmtCond",

"BsmtExposure",

"BsmtFinType2",

"Electrical",

"KitchenQual",

"Functional",

"GarageFinish",

"GarageQual",

"GarageCond",

"SaleType",

]

# quantitative variables

v2 = [x for x in missing_values if x not in v1]

v2.remove("LotFrontage")

for var in v1:

sns.countplot(x=df[var])

plt.show()

for var in v2:

plt.hist(df[var])

plt.title(var)

plt.show()

# Impute categorical variables using **mode**

#

# Impute quantitative variables using **median**

for var in v1:

df[var].fillna(df[var].mode()[0], inplace=True)

for var in v2:

df[var].fillna(df[var].median(), inplace=True)

# Check the missing values one more time, supposed to only have one variable "LotFrontage"

missing_values = df.columns[df.isna().any()].tolist()

df[missing_values].info()

# ### 1.4 Missing value imputation (step 3)

# Missing imputation using Random Forest(RF)

# Encoding

df = df.apply(

lambda x: pd.Series(

LabelEncoder().fit_transform(x[x.notnull()]), index=x[x.notnull()].index

)

)

df.info()

l_known = df[df.LotFrontage.notnull()]

l_unknown = df[df.LotFrontage.isnull()]

l_ytrain = l_known.iloc[:, 3]