| modelId (string, lengths 4–81) | tags (list) | pipeline_tag (string, 17 classes) | config (dict) | downloads (int64, 0–59.7M) | first_commit (timestamp[ns, tz=UTC]) | card (string, lengths 51–438k) |
|---|---|---|---|---|---|---|

BitanBiswas/mbert-bengali-ner-finetuned-ner

|

[

"pytorch",

"tensorboard",

"bert",

"token-classification",

"transformers",

"autotrain_compatible"

] |

token-classification

|

{

"architectures": [

"BertForTokenClassification"

],

"model_type": "bert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

}

| 4 | null |

---

tags:

- autotrain

- summarization

language:

- en

widget:

- text: "I love AutoTrain 🤗"

datasets:

- joel89/autotrain-data-rwlv_summarizer

co2_eq_emissions:

emissions: 0.007272812398046086

---

# Model Trained Using AutoTrain

- Problem type: Summarization

- Model ID: 55443129210

- CO2 Emissions (in grams): 0.0073

## Validation Metrics

- Loss: 1.625

- Rouge1: 47.446

- Rouge2: 25.858

- RougeL: 43.937

- RougeLsum: 43.961

- Gen Len: 15.395

## Usage

You can use cURL to access this model:

```
$ curl -X POST -H "Authorization: Bearer YOUR_HUGGINGFACE_API_KEY" -H "Content-Type: application/json" -d '{"inputs": "I love AutoTrain"}' https://api-inference.huggingface.co/models/joel89/autotrain-rwlv_summarizer-55443129210
```
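The same request can be issued from Python. The sketch below uses only the standard library and assumes the hosted Inference API route `https://api-inference.huggingface.co/models/<model id>`. The helper names `build_inference_request` and `summarize` are illustrative and not part of any Hugging Face library.

```python
import json
import urllib.request

# Assumed base route of the hosted Inference API.
API_ROOT = "https://api-inference.huggingface.co/models"


def build_inference_request(model_id: str, text: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) the POST request that the cURL command issues."""
    payload = json.dumps({"inputs": text}).encode("utf-8")
    return urllib.request.Request(
        url=f"{API_ROOT}/{model_id}",
        data=payload,
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def summarize(model_id: str, text: str, api_key: str):
    """Send the request and decode the JSON response (needs network access and a valid key)."""
    request = build_inference_request(model_id, text, api_key)
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read().decode("utf-8"))
```

Keeping request construction separate from sending makes it possible to inspect the URL, headers, and body without network access.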

|

BlindMan820/Sarcastic-News-Headlines

|

[

"pytorch",

"distilbert",

"text-classification",

"English",

"dataset:Kaggle Dataset",

"transformers",

"Text",

"Sequence-Classification",

"Sarcasm",

"DistilBert"

] |

text-classification

|

{

"architectures": [

"DistilBertForSequenceClassification"

],

"model_type": "distilbert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

}

| 28 | null |

---

language:

- en

tags:

- causal-lm

- llama

inference: false

---

# Wizard-Vicuna-13B-GGML

These are GGML-format 4bit and 5bit quantised models of [junelee's wizard-vicuna 13B](https://huggingface.co/junelee/wizard-vicuna-13b).

It is the result of quantising to 4bit and 5bit GGML for CPU inference using [llama.cpp](https://github.com/ggerganov/llama.cpp).

## Repositories available

* [4bit GPTQ models for GPU inference](https://huggingface.co/TheBloke/wizard-vicuna-13B-GPTQ).

* [4bit and 5bit GGML models for CPU inference](https://huggingface.co/TheBloke/wizard-vicuna-13B-GGML).

* [float16 HF format model for GPU inference](https://huggingface.co/TheBloke/wizard-vicuna-13B-HF).

## THE FILES IN MAIN BRANCH REQUIRE THE LATEST LLAMA.CPP (May 19th 2023 - commit 2d5db48)!

llama.cpp recently made another breaking change to its quantisation methods - https://github.com/ggerganov/llama.cpp/pull/1508

I have quantised the GGML files in this repo with the latest version. Therefore you will require llama.cpp compiled on May 19th or later (commit `2d5db48` or later) to use them.

For files compatible with the previous version of llama.cpp, please see branch `previous_llama_ggmlv2`.

## Provided files

| Name | Quant method | Bits | Size | RAM required | Use case |
| ---- | ---- | ---- | ---- | ---- | ----- |
| `wizard-vicuna-13B.ggmlv3.q4_0.bin` | q4_0 | 4bit | 8.14GB | 10.5GB | 4-bit. |
| `wizard-vicuna-13B.ggmlv3.q4_1.bin` | q4_1 | 4bit | 8.95GB | 11.0GB | 4-bit. Higher accuracy than q4_0 but not as high as q5_0. However, it has quicker inference than the q5 models. |
| `wizard-vicuna-13B.ggmlv3.q5_0.bin` | q5_0 | 5bit | 8.95GB | 11.0GB | 5-bit. Higher accuracy, higher resource usage and slower inference. |
| `wizard-vicuna-13B.ggmlv3.q5_1.bin` | q5_1 | 5bit | 9.76GB | 12.25GB | 5-bit. Even higher accuracy, with higher resource usage and slower inference. |
| `wizard-vicuna-13B.ggmlv3.q8_0.bin` | q8_0 | 8bit | 16GB | 18GB | 8-bit. Almost indistinguishable from float16. Huge resource use and slow. Not recommended for normal use. |
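To make the RAM column concrete, here is a small sketch that picks the most accurate file whose listed RAM requirement fits a given budget. The figures are transcribed from the table. The helper name `pick_quant` is hypothetical, and treating later rows as more accurate is an assumption drawn from the "Use case" notes.

```python
from typing import Optional

# (file name, RAM required in GB), in the table's order of increasing accuracy.
QUANT_FILES = [
    ("wizard-vicuna-13B.ggmlv3.q4_0.bin", 10.5),
    ("wizard-vicuna-13B.ggmlv3.q4_1.bin", 11.0),
    ("wizard-vicuna-13B.ggmlv3.q5_0.bin", 11.0),
    ("wizard-vicuna-13B.ggmlv3.q5_1.bin", 12.25),
    ("wizard-vicuna-13B.ggmlv3.q8_0.bin", 18.0),
]


def pick_quant(available_ram_gb: float) -> Optional[str]:
    """Return the most accurate file that fits in the given RAM, or None if none fits."""
    fitting = [name for name, ram in QUANT_FILES if ram <= available_ram_gb]
    return fitting[-1] if fitting else None


print(pick_quant(16.0))
```

The listed requirements are the card's own estimates; actual memory use also depends on context size and other runtime settings.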

## How to run in `llama.cpp`

I use the following command line; adjust for your tastes and needs:

```

./main -t 18 -m wizard-vicuna-13B.ggmlv3.q4_0.bin --color -c 2048 --temp 0.7 --repeat_penalty 1.1 -n -1 -p "### Instruction: write a story about llamas ### Response:"

```

Change `-t 18` to the number of physical CPU cores you have. For example, if your system has 8 cores/16 threads, use `-t 8`.
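If the command is launched from a script, the thread count can be passed in explicitly. The following is a minimal sketch that assembles the same argument list as the command above; `build_llama_command` is a hypothetical helper, and the physical core count is supplied by the caller because Python's `os.cpu_count()` reports logical CPUs, not physical cores.

```python
import shlex
from typing import List


def build_llama_command(model_path: str, prompt: str, physical_cores: int) -> List[str]:
    """Assemble the ./main argument list, setting -t to the physical core count."""
    if physical_cores < 1:
        raise ValueError("physical_cores must be at least 1")
    return [
        "./main",
        "-t", str(physical_cores),
        "-m", model_path,
        "--color",
        "-c", "2048",
        "--temp", "0.7",
        "--repeat_penalty", "1.1",
        "-n", "-1",
        "-p", prompt,
    ]


cmd = build_llama_command(
    "wizard-vicuna-13B.ggmlv3.q4_0.bin",
    "### Instruction: write a story about llamas ### Response:",
    physical_cores=8,  # e.g. an 8-core/16-thread CPU
)
print(shlex.join(cmd))
```

The resulting list can be handed to `subprocess.run(cmd)` once a compatible llama.cpp build is in the working directory.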

## How to run in `text-generation-webui`

GGML models can be loaded into text-generation-webui by installing the llama.cpp module, then placing the ggml model file in a model folder as usual.

Further instructions here: [text-generation-webui/docs/llama.cpp-models.md](https://github.com/oobabooga/text-generation-webui/blob/main/docs/llama.cpp-models.md).

Note: at this time text-generation-webui may not support the new May 19th llama.cpp quantisation methods for q4_0, q4_1 and q8_0 files.

# Original WizardVicuna-13B model card

Github page: https://github.com/melodysdreamj/WizardVicunaLM

# WizardVicunaLM

### Wizard's dataset + ChatGPT's conversation extension + Vicuna's tuning method

I am a big fan of the ideas behind WizardLM and VicunaLM. I particularly like the idea of WizardLM handling the dataset itself more deeply and broadly, as well as VicunaLM overcoming the limitations of single-turn conversations by introducing multi-round conversations. As a result, I combined these two ideas to create WizardVicunaLM. This project is highly experimental and designed for proof of concept, not for actual usage.

## Benchmark

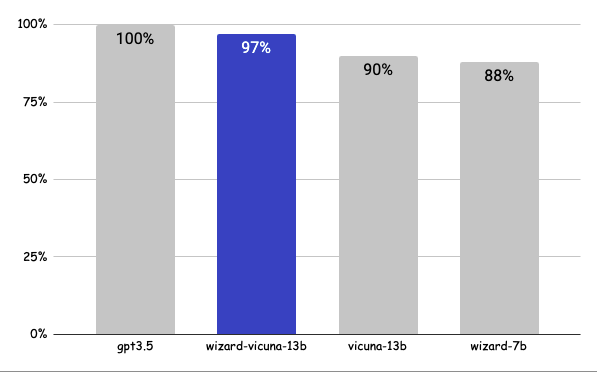

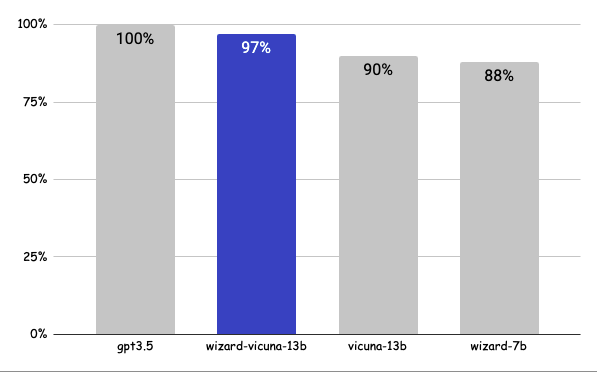

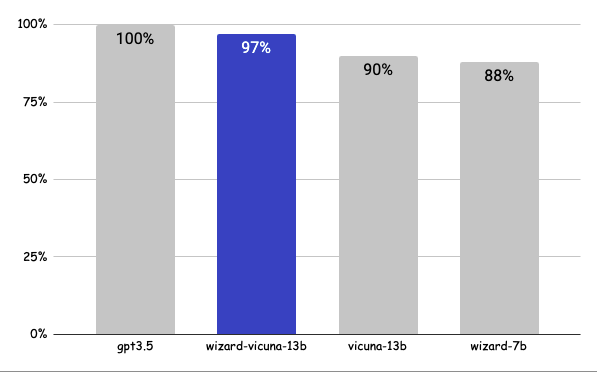

### Approximately 7% performance improvement over VicunaLM

### Detail

These results do not come from rigorous testing: I asked a few questions and had GPT-4 score the answers. The models compared were ChatGPT 3.5, WizardVicunaLM, VicunaLM, and WizardLM, in that order.

| | gpt3.5 | wizard-vicuna-13b | vicuna-13b | wizard-7b | link |

|-----|--------|-------------------|------------|-----------|----------|

| Q1 | 95 | 90 | 85 | 88 | [link](https://sharegpt.com/c/YdhIlby) |

| Q2 | 95 | 97 | 90 | 89 | [link](https://sharegpt.com/c/YOqOV4g) |

| Q3 | 85 | 90 | 80 | 65 | [link](https://sharegpt.com/c/uDmrcL9) |

| Q4 | 90 | 85 | 80 | 75 | [link](https://sharegpt.com/c/XBbK5MZ) |

| Q5 | 90 | 85 | 80 | 75 | [link](https://sharegpt.com/c/AQ5tgQX) |

| Q6 | 92 | 85 | 87 | 88 | [link](https://sharegpt.com/c/eVYwfIr) |

| Q7 | 95 | 90 | 85 | 92 | [link](https://sharegpt.com/c/Kqyeub4) |

| Q8 | 90 | 85 | 75 | 70 | [link](https://sharegpt.com/c/M0gIjMF) |

| Q9 | 92 | 85 | 70 | 60 | [link](https://sharegpt.com/c/fOvMtQt) |

| Q10 | 90 | 80 | 75 | 85 | [link](https://sharegpt.com/c/YYiCaUz) |

| Q11 | 90 | 85 | 75 | 65 | [link](https://sharegpt.com/c/HMkKKGU) |

| Q12 | 85 | 90 | 80 | 88 | [link](https://sharegpt.com/c/XbW6jgB) |

| Q13 | 90 | 95 | 88 | 85 | [link](https://sharegpt.com/c/JXZb7y6) |

| Q14 | 94 | 89 | 90 | 91 | [link](https://sharegpt.com/c/cTXH4IS) |

| Q15 | 90 | 85 | 88 | 87 | [link](https://sharegpt.com/c/GZiM0Yt) |

| Avg. | 91 | 88 | 82 | 80 | |
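The last row and the "approximately 7%" figure can be reproduced from the per-question scores. The sketch below transcribes the table and recomputes both; treating the last row as a rounded arithmetic mean is an assumption, though it matches the numbers shown.

```python
# Scores transcribed from the table, one list per model, in row order Q1..Q15.
SCORES = {
    "gpt3.5": [95, 95, 85, 90, 90, 92, 95, 90, 92, 90, 90, 85, 90, 94, 90],
    "wizard-vicuna-13b": [90, 97, 90, 85, 85, 85, 90, 85, 85, 80, 85, 90, 95, 89, 85],
    "vicuna-13b": [85, 90, 80, 80, 80, 87, 85, 75, 70, 75, 75, 80, 88, 90, 88],
    "wizard-7b": [88, 89, 65, 75, 75, 88, 92, 70, 60, 85, 65, 88, 85, 91, 87],
}

averages = {model: sum(values) / len(values) for model, values in SCORES.items()}
rounded = {model: round(avg) for model, avg in averages.items()}

# Relative gain of wizard-vicuna-13b over vicuna-13b, in percent.
gain_pct = (averages["wizard-vicuna-13b"] / averages["vicuna-13b"] - 1) * 100

print(rounded)
print(f"gain over vicuna-13b: {gain_pct:.1f}%")
```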

## Principle

We adopted WizardLM's approach of exploring a single problem in greater depth. However, instead of using individual instructions, we expanded each one using Vicuna's conversation format and applied Vicuna's fine-tuning techniques.

Turning a single command into a rich conversation is what we've done [here](https://sharegpt.com/c/6cmxqq0).

After creating the training data, I trained the model according to the Vicuna v1.1 [training method](https://github.com/lm-sys/FastChat/blob/main/scripts/train_vicuna_13b.sh).

## Detailed Method

First, we explore and expand various areas within the same topic using the 7K conversations created by WizardLM. However, we put them in a continuous conversation format instead of the instruction format. That is, each conversation starts with one of WizardLM's instructions and then expands into various areas within that conversation using ChatGPT 3.5.

After that, we trained the model using Vicuna's fine-tuning format.
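As an illustration of what "one instruction expanded into one conversation" might look like as training data, here is a minimal sketch that packs alternating turns into a single multi-turn record. The `from`/`value` layout follows the ShareGPT-style records commonly used with FastChat, which is an assumption here; the card does not show the actual schema. The helper name and the sample turns are hypothetical.

```python
import json
from typing import Dict, List, Tuple


def to_conversation_record(record_id: str, turns: List[Tuple[str, str]]) -> Dict:
    """Turn (user message, assistant reply) pairs into one multi-turn record."""
    conversations = []
    for user_message, assistant_reply in turns:
        conversations.append({"from": "human", "value": user_message})
        conversations.append({"from": "gpt", "value": assistant_reply})
    return {"id": record_id, "conversations": conversations}


# Hypothetical example: the first pair is the seed instruction, the rest are follow-ups.
record = to_conversation_record(
    "example-0",
    [
        ("Explain what a hash table is.", "A hash table maps keys to values ..."),
        ("How are collisions handled?", "Common strategies include chaining ..."),
    ],
)
print(json.dumps(record, indent=2))
```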

## Training Process

Trained with 8 A100 GPUs for 35 hours.

## Weights

You can find the [dataset](https://huggingface.co/datasets/junelee/wizard_vicuna_70k) we used for training and the [13b model](https://huggingface.co/junelee/wizard-vicuna-13b) on Hugging Face.

## Conclusion

If we extend the conversations using GPT-4 32K, we can expect a dramatic improvement, since we could generate 8x more conversations that are also more accurate and richer.

## License

The model is licensed under the LLaMA model license, and the dataset is licensed under the terms of OpenAI because it uses ChatGPT. Everything else is free.

## Author

[JUNE LEE](https://github.com/melodysdreamj) - He is active in Songdo Artificial Intelligence Study and GDG Songdo.

|

Brendan/cse244b-hw2-roberta

|

[

"pytorch",

"roberta",

"text-classification",

"transformers"

] |

text-classification

|

{

"architectures": [

"RobertaForSequenceClassification"

],

"model_type": "roberta",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

}

| 28 | null |

---

license: apache-2.0

tags:

- generated_from_trainer

metrics:

- f1

- recall

- accuracy

- precision

model-index:

- name: roberta-bne-fine-tuned-text-classification-SL-dss

results: []

language:

- es

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# roberta-bne-fine-tuned-text-classification-SL-dss

This model is a fine-tuned version of [PlanTL-GOB-ES/roberta-base-bne](https://huggingface.co/PlanTL-GOB-ES/roberta-base-bne) on an unspecified dataset.

It achieves the following results on the evaluation set:

- Loss: 2.5089

- F1: 0.4781

- Recall: 0.4750

- Accuracy: 0.4750

- Precision: 0.5009

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 3e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_ratio: 0.1

- num_epochs: 5
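To show what a linear scheduler with a 0.1 warmup ratio implies for the learning rate, here is a minimal sketch of the usual rule: a linear ramp up to the peak, then a linear decay to zero. The total of 4180 steps is taken from this card's training results table; rounding the warmup length to 418 steps and the function name `linear_schedule_lr` are assumptions, and the exact values used in the original run were not checked.

```python
def linear_schedule_lr(step: int, base_lr: float, total_steps: int, warmup_steps: int) -> float:
    """Learning rate at `step`: linear ramp to base_lr, then linear decay to zero."""
    if step < warmup_steps:
        return base_lr * step / max(1, warmup_steps)
    remaining = max(0, total_steps - step)
    return base_lr * remaining / max(1, total_steps - warmup_steps)


TOTAL_STEPS = 4180  # 5 epochs x 836 steps per epoch
WARMUP_STEPS = round(0.1 * TOTAL_STEPS)  # lr_scheduler_warmup_ratio = 0.1 -> 418 steps
BASE_LR = 3e-05

for step in (0, 209, 418, 2299, 4180):
    print(step, linear_schedule_lr(step, BASE_LR, TOTAL_STEPS, WARMUP_STEPS))
```

Under these assumptions the rate peaks at the end of warmup (step 418) and falls to half the peak midway through the decay phase (step 2299).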

### Training results

| Training Loss | Epoch | Step | Validation Loss | F1 | Recall | Accuracy | Precision |

|:-------------:|:-----:|:----:|:---------------:|:------:|:------:|:--------:|:---------:|

| 3.235 | 1.0 | 836 | 2.4142 | 0.3995 | 0.4471 | 0.4471 | 0.4786 |

| 2.0006 | 2.0 | 1672 | 2.1013 | 0.4672 | 0.4942 | 0.4942 | 0.4867 |

| 1.2424 | 3.0 | 2508 | 2.1138 | 0.4861 | 0.4852 | 0.4852 | 0.5132 |

| 0.7242 | 4.0 | 3344 | 2.2694 | 0.4828 | 0.4747 | 0.4747 | 0.5126 |

| 0.3403 | 5.0 | 4180 | 2.5089 | 0.4781 | 0.4750 | 0.4750 | 0.5009 |
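The table can also be scanned programmatically. The sketch below, with rows transcribed from the table above, finds the epoch with the lowest validation loss and the one with the highest F1; the variable names are illustrative.

```python
# (epoch, validation loss, F1) transcribed from the training results table.
ROWS = [
    (1.0, 2.4142, 0.3995),
    (2.0, 2.1013, 0.4672),
    (3.0, 2.1138, 0.4861),
    (4.0, 2.2694, 0.4828),
    (5.0, 2.5089, 0.4781),
]

best_by_loss = min(ROWS, key=lambda row: row[1])
best_by_f1 = max(ROWS, key=lambda row: row[2])

print("lowest validation loss:", best_by_loss)
print("highest F1:", best_by_f1)
```

Validation loss bottoms out at epoch 2 and F1 peaks at epoch 3, whereas the metrics reported at the top of the card match the final epoch-5 row.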

### Framework versions

- Transformers 4.28.1

- Pytorch 2.0.0+cu118

- Datasets 2.12.0

- Tokenizers 0.13.3

|

Bryanwong/wangchanberta-ner

|

[] | null |

{

"architectures": null,

"model_type": null,

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

}

| 0 | null |

---

tags:

- autotrain

- summarization

language:

- unk

widget:

- text: "I love AutoTrain 🤗"

datasets:

- DmitriyVasiliev/autotrain-data-mbart-rua-sent

co2_eq_emissions:

emissions: 0.032307287679585996

---

# Model Trained Using AutoTrain

- Problem type: Summarization

- Model ID: 55454129221

- CO2 Emissions (in grams): 0.0323

## Validation Metrics

- Loss: 0.958

- Rouge1: 8.528

- Rouge2: 2.583

- RougeL: 8.489

- RougeLsum: 8.606

- Gen Len: 21.020

## Usage

You can use cURL to access this model:

```
$ curl -X POST -H "Authorization: Bearer YOUR_HUGGINGFACE_API_KEY" -H "Content-Type: application/json" -d '{"inputs": "I love AutoTrain"}' https://api-inference.huggingface.co/models/DmitriyVasiliev/autotrain-mbart-rua-sent-55454129221
```

|

CAMeL-Lab/bert-base-arabic-camelbert-da-pos-egy

|

[

"pytorch",

"tf",

"bert",

"token-classification",

"ar",

"arxiv:2103.06678",

"transformers",

"license:apache-2.0",

"autotrain_compatible"

] |

token-classification

|

{

"architectures": [

"BertForTokenClassification"

],

"model_type": "bert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

}

| 32 | null |

---

library_name: stable-baselines3

tags:

- LunarLander-v2

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:

- name: PPO

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: LunarLander-v2

type: LunarLander-v2

metrics:

- type: mean_reward

value: 292.45 +/- 20.23

name: mean_reward

verified: false

---

# **PPO** Agent playing **LunarLander-v2**

This is a trained model of a **PPO** agent playing **LunarLander-v2**

using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3).

## Usage (with Stable-baselines3)

TODO: Add your code

```python

from stable_baselines3 import ...

from huggingface_sb3 import load_from_hub

...

```

|

CAMeL-Lab/bert-base-arabic-camelbert-msa-ner

|

[

"pytorch",

"tf",

"bert",

"token-classification",

"ar",

"arxiv:2103.06678",

"transformers",

"license:apache-2.0",

"autotrain_compatible",

"has_space"

] |

token-classification

|

{

"architectures": [

"BertForTokenClassification"

],

"model_type": "bert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

}

| 229 | null |

---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- billsum

metrics:

- rouge

model-index:

- name: search_summarize_v1

results:

- task:

name: Sequence-to-sequence Language Modeling

type: text2text-generation

dataset:

name: billsum

type: billsum

config: default

split: ca_test

args: default

metrics:

- name: Rouge1

type: rouge

value: 0.1476

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# search_summarize_v1

This model is a fine-tuned version of [t5-small](https://huggingface.co/t5-small) on the billsum dataset.

It achieves the following results on the evaluation set:

- Loss: 2.5224

- Rouge1: 0.1476

- Rouge2: 0.0551

- Rougel: 0.1228

- Rougelsum: 0.1228

- Gen Len: 19.0

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 4

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Rouge1 | Rouge2 | Rougel | Rougelsum | Gen Len |

|:-------------:|:-----:|:----:|:---------------:|:------:|:------:|:------:|:---------:|:-------:|

| No log | 1.0 | 62 | 2.8176 | 0.1281 | 0.0401 | 0.1087 | 0.1086 | 19.0 |

| No log | 2.0 | 124 | 2.5989 | 0.1372 | 0.0476 | 0.1138 | 0.1137 | 19.0 |

| No log | 3.0 | 186 | 2.5386 | 0.1464 | 0.0541 | 0.1218 | 0.1219 | 19.0 |

| No log | 4.0 | 248 | 2.5224 | 0.1476 | 0.0551 | 0.1228 | 0.1228 | 19.0 |

### Framework versions

- Transformers 4.28.1

- Pytorch 2.0.0+cu118

- Datasets 2.12.0

- Tokenizers 0.13.3

|

CLTL/icf-domains

|

[

"pytorch",

"roberta",

"nl",

"transformers",

"license:mit",

"text-classification"

] |

text-classification

|

{

"architectures": [

"RobertaForMultiLabelSequenceClassification"

],

"model_type": "roberta",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

}

| 35 | null |

---

library_name: stable-baselines3

tags:

- LunarLander-v2

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:

- name: PPO

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: LunarLander-v2

type: LunarLander-v2

metrics:

- type: mean_reward

value: 264.55 +/- 21.90

name: mean_reward

verified: false

---

# **PPO** Agent playing **LunarLander-v2**

This is a trained model of a **PPO** agent playing **LunarLander-v2**

using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3).

## Usage (with Stable-baselines3)

TODO: Add your code

```python

from stable_baselines3 import ...

from huggingface_sb3 import load_from_hub

...

```

|

CLTL/icf-levels-adm

|

[

"pytorch",

"roberta",

"text-classification",

"nl",

"transformers",

"license:mit"

] |

text-classification

|

{

"architectures": [

"RobertaForSequenceClassification"

],

"model_type": "roberta",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

}

| 33 | null |

---

license: other

tags:

- generated_from_trainer

datasets:

- scene_parse_150

model-index:

- name: relu-segformer-b0-scene-parse-150-cvfinal

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# relu-segformer-b0-scene-parse-150-cvfinal

This model is a fine-tuned version of [nvidia/mit-b0](https://huggingface.co/nvidia/mit-b0) on the scene_parse_150 dataset.

It achieves the following results on the evaluation set:

- Loss: 2.3127

- Mean Iou: 0.0939

- Mean Accuracy: 0.1473

- Overall Accuracy: 0.5650

- Per Category Iou: [0.5336831298064109, 0.7946016311618073, 0.9542612124083791, 0.4698340415687516, 0.7895064764715527, 0.6196465123602583, 0.0, 0.28656549336868975, 0.10462468913822695, nan, 0.0, 0.0, 0.024119941721107298, 0.0, 0.0, 0.0, 0.0, nan, 0.11790298802632052, 0.0, nan, nan, 0.0, 0.0, 0.0, 0.0, nan, 0.0, 0.0, nan, 0.0, 0.0, nan, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan, nan, 0.0, 0.0, nan, nan, nan, nan, nan, nan, 0.0, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, 0.0, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, 0.0, nan, 0.0, nan, nan]

- Per Category Accuracy: [0.9584331199463405, 0.9359158986175116, 0.9802837552806004, 0.7382528506925844, 0.797905481540399, 0.704932715344484, nan, 0.3646332654803336, 0.13570692997334627, nan, 0.0, nan, 0.024543985001960842, nan, 0.0, 0.0, nan, nan, 0.39719777113785026, 0.0, nan, nan, 0.0, 0.0, nan, nan, nan, 0.0, 0.0, nan, 0.0, 0.0, nan, 0.0, nan, 0.0, 0.0, nan, 0.0, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan, nan, 0.0, 0.0, nan, nan, nan, nan, nan, nan, 0.0, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, 0.0, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan]
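Many entries in the two per-category lists are `nan`. The reported means are presumably taken over the non-`nan` entries only; that is an assumption about the metric, not something this card states. A minimal sketch of such a NaN-aware mean, using hypothetical scores in place of the full 150-entry lists:

```python
import math
from typing import Iterable


def nan_mean(values: Iterable[float]) -> float:
    """Mean of the entries that are not NaN; NaN if every entry is NaN."""
    kept = [v for v in values if not math.isnan(v)]
    return sum(kept) / len(kept) if kept else float("nan")


# Hypothetical per-category scores; nan is assumed to mark a category that could not be scored.
per_category = [0.5, float("nan"), 0.0, 1.0, float("nan")]
print(nan_mean(per_category))  # 0.5
```

Under this rule, categories scored `0.0` still pull the mean down, while `nan` categories are ignored.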

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 6e-05

- train_batch_size: 2

- eval_batch_size: 2

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 50

### Training results

| Training Loss | Epoch | Step | Validation Loss | Mean Iou | Mean Accuracy | Overall Accuracy | Per Category Iou | Per Category Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:--------:|:-------------:|:----------------:|:----------------:|:---------------------:|

| 4.8909 | 1.0 | 20 | 4.8871 | 0.0125 | 0.0437 | 0.2028 | [0.2508561911058272, 0.04515398550724638, 0.44804797891594955, 0.21111439623524775, 0.31233771405814414, 7.014449766519029e-05, 0.0, 0.0, 0.002980895171853123, nan, 0.0, nan, 0.027754770004437213, nan, 0.0, 0.0205260184545854, nan, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, nan, 0.0, 0.0, nan, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, nan, 0.0003843936190659235, nan, nan, 0.0, nan, 0.0, 0.0, 0.0, nan, nan, 0.0, 0.0, nan, nan, 0.0, 0.0, nan, 0.0, nan, 0.0, 0.0, nan, nan, 0.0, 0.0, 0.0, 0.0, nan, 0.0, 0.0, 0.0, 0.0, 0.004370956146657081, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, nan, nan, 0.0, nan, 0.0, nan, 0.0, 0.0, nan, nan, nan, 0.0, 0.0, nan, 0.0, 0.0, nan, 0.0, nan, nan, 0.0, 0.0, nan, nan, 0.0, 0.0, nan, 0.0, 0.0, 0.0, 0.0, 0.0, nan, 0.0, nan, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, nan, 0.0, 0.0, nan, 0.0, nan, 0.0, 0.0, 0.0, nan, nan, 0.0, 0.0, 0.0, 0.0, 0.0, nan, 0.0, 0.0, nan, nan, 0.0, nan, 0.0, 0.0, 0.0] | [0.39185298015397546, 0.06756573597180808, 0.6783385713684792, 0.21857860768857937, 0.32489891959965533, 7.836815118335908e-05, nan, 0.0, 0.0029985461594378483, nan, 0.0, nan, 0.0358977302074665, nan, 0.0, 0.06666893200584458, nan, nan, 0.0, 0.0, nan, nan, 0.0, 0.0, nan, nan, nan, 0.0, 0.0, nan, 0.0, 0.0, nan, 0.0, nan, 0.0, 0.0, nan, 0.0, nan, nan, nan, 0.0003843936190659235, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan, nan, 0.0, 0.0, nan, nan, nan, nan, nan, nan, 0.005191611448869459, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, 0.0, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan] |

| 4.7422 | 2.0 | 40 | 4.5099 | 0.0250 | 0.0841 | 0.3808 | [0.34984703137884793, 0.3589339267863093, 0.4974385245901639, 0.37037865924560065, 0.5684109932116259, 0.01841334153311869, 0.0, 0.001964517481175478, 0.0029039377395748637, nan, 0.0, nan, 0.06118068223901683, nan, 0.0, 0.03404671283875128, 0.0, 0.0, 0.0, 0.0, 0.0, nan, 0.03711163107543761, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, nan, 0.0, 0.0, nan, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, nan, 0.0, nan, nan, 0.0, nan, 0.0, 0.0, 0.0, nan, nan, 0.0, 0.0, nan, nan, nan, 0.0, nan, 0.0, nan, 0.0, 0.0, nan, nan, 0.0, 0.0, 0.0, 0.0, nan, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, nan, nan, 0.0, 0.0, nan, 0.0, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, 0.0, 0.0, nan, 0.0, 0.0, nan, 0.0, nan, nan, nan, 0.0, nan, 0.0, 0.0, 0.0, nan, 0.0, 0.0, 0.0, 0.0, nan, nan, nan, nan, 0.0, 0.0, nan, 0.0, 0.0, 0.0, nan, nan, 0.0, nan, 0.0, nan, nan, 0.0, 0.0, nan, nan, 0.0, nan, nan, nan, nan, nan, 0.0, 0.0, nan, nan, 0.0, nan, 0.0, 0.0, 0.0] | [0.7327161706761063, 0.5109870561127677, 0.9394270811424725, 0.4854978189331905, 0.5716510903426791, 0.02279393654418844, nan, 0.0019648747076680623, 0.003028834504482675, nan, 0.0, nan, 0.08533004294719121, nan, 0.0, 0.05562540351354107, nan, nan, 0.0, 0.0, nan, nan, 0.03711163107543761, 0.0, nan, nan, nan, 0.0, 0.0, nan, 0.0, 0.0, nan, 0.0, nan, 0.0, 0.0, nan, 0.0, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan, nan, 0.0, 0.0, nan, nan, nan, nan, nan, nan, 0.0, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, 0.0, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan] |

| 4.0553 | 3.0 | 60 | 3.8580 | 0.0431 | 0.0969 | 0.4522 | [0.39298635765482803, 0.5115893703108798, 0.7615359747458901, 0.3781477627471384, 0.608186554204912, 0.07249520170070661, 0.0, 0.00026263422123902746, 0.0002654632333421821, nan, 0.0, nan, 0.031058319540453704, nan, 0.0, 0.0, nan, 0.0, 0.0, 0.0, 0.0, nan, 0.004609370441682712, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, nan, 0.0, 0.0, nan, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, nan, nan, 0.0, nan, nan, nan, nan, 0.0, 0.0, nan, nan, nan, 0.0, 0.0, nan, nan, nan, 0.0, nan, nan, nan, 0.0, 0.0, nan, nan, nan, 0.0, 0.0, 0.0, nan, nan, nan, nan, 0.0, 0.0, 0.0, 0.0, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, 0.0, 0.0, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, 0.0, nan, 0.0, nan, nan, nan, nan, nan, 0.0, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, 0.0, nan, 0.0, 0.0, nan] | [0.890797371986272, 0.8525853889943074, 0.9625745894201705, 0.5214471569602817, 0.6093656790614437, 0.09429927677391908, nan, 0.00026265677326154047, 0.00027259510540344074, nan, 0.0, nan, 0.03661511090705616, nan, 0.0, 0.0, nan, nan, 0.0, 0.0, nan, nan, 0.004679678938482955, 0.0, nan, nan, nan, 0.0, 0.0, nan, 0.0, 0.0, nan, 0.0, nan, 0.0, 0.0, nan, 0.0, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan, nan, 0.0, 0.0, nan, nan, nan, nan, nan, nan, 0.0, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, 0.0, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan] |

| 4.187 | 4.0 | 80 | 3.6772 | 0.0470 | 0.1028 | 0.4582 | [0.41381878117227805, 0.6254016101621327, 0.723075600130345, 0.4342820137592303, 0.5828271454173473, 0.03514338327468898, 0.0, 0.0013178423739339867, 0.0032081449763366166, nan, 0.0, nan, 0.08433073627395674, nan, 0.0, 0.05683375940128191, 0.0, 0.0, 0.0013988020515763424, 0.0, nan, nan, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, nan, 0.0, 0.0, nan, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan, nan, 0.0, 0.0, 0.0, nan, nan, nan, nan, 0.0, 0.0, 0.0, 0.0, nan, 0.0, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, 0.0, 0.0, nan, 0.0, nan, nan, nan, nan, nan, nan, 0.0, 0.0, nan, 0.0, 0.0, 0.0, nan, nan, nan, nan, nan, 0.0, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan, 0.0, nan, 0.0, nan, nan] | [0.8694650748085648, 0.8541525481160206, 0.9600627362373881, 0.6586184025917706, 0.6089514151256048, 0.04550950493719352, nan, 0.0013183349581011937, 0.003664889750424037, nan, 0.0, nan, 0.10580887065147733, nan, 0.0, 0.10425090896734514, nan, nan, 0.0015747395623031575, 0.0, nan, nan, 0.0, 0.0, nan, nan, nan, 0.0, 0.0, nan, 0.0, 0.0, nan, 0.0, nan, 0.0, 0.0, nan, 0.0, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan, nan, 0.0, 0.0, nan, nan, nan, nan, nan, nan, 0.0, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, 0.0, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan] |

| 3.9225 | 5.0 | 100 | 3.4916 | 0.0535 | 0.1072 | 0.4745 | [0.45367797998078097, 0.6130674322082363, 0.7883869096437359, 0.44548332225614223, 0.6276848544314471, 0.03952981755468821, 0.0, 0.008173727017699651, 0.0006412792839502804, nan, 0.0, nan, 0.019051546391752577, nan, 0.0, 0.034960804888587126, 0.0, 0.0, 0.020120972719417356, 0.0, nan, nan, 0.0, 0.0, 0.0, nan, nan, 0.0, 0.0, nan, 0.0, 0.0, nan, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, 0.0, nan, 0.0, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan, nan, 0.0, 0.0, 0.0, nan, nan, nan, nan, 0.0, 0.0, 0.0, 0.0, nan, 0.0, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, 0.0, 0.0, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan, 0.0, nan, 0.0, nan, nan] | [0.8962918531278948, 0.9171862293304418, 0.9473472306518199, 0.7566005969235479, 0.6784317624444887, 0.0487561854862184, nan, 0.008182768705455683, 0.0007117761085534286, nan, 0.0, nan, 0.019885792992625325, nan, 0.0, 0.09623160827754935, nan, nan, 0.026326415246709197, 0.0, nan, nan, 0.0, 0.0, nan, nan, nan, 0.0, 0.0, nan, 0.0, 0.0, nan, 0.0, nan, 0.0, 0.0, nan, 0.0, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan, nan, 0.0, 0.0, nan, nan, nan, nan, nan, nan, 0.0, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, 0.0, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan] |

| 3.3489 | 6.0 | 120 | 3.4303 | 0.0520 | 0.1086 | 0.4474 | [0.42187173154853513, 0.708063699670283, 0.5798242120634122, 0.3861172591019147, 0.6270752544837616, 0.02814913099490091, 0.0, 0.00023740131429408467, 0.017860624731895643, nan, 0.0, nan, 0.027602765692416008, nan, 0.0, 0.0016909891128098215, 0.0, 0.0, 0.11093712358467839, 0.0, nan, nan, 0.00014647722279185587, 0.0, 0.0, 0.0, nan, 0.0, 0.0, nan, 0.0, 0.0, nan, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, 0.0, nan, 0.0, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan, nan, 0.0, 0.0, nan, nan, nan, nan, nan, 0.0, 0.0, 0.0, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, 0.0, 0.0, nan, 0.0, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan, 0.0, nan, 0.0, nan, nan] | [0.7836365221219719, 0.8768382352941176, 0.9973919993269675, 0.8288627841126502, 0.6859713660767548, 0.040852197666868185, nan, 0.00023740131429408467, 0.020808093045795978, nan, 0.0, nan, 0.029250002391268997, nan, 0.0, 0.0024805463998097115, nan, nan, 0.18594040216425745, 0.0, nan, nan, 0.00014809110564819477, 0.0, nan, nan, nan, 0.0, 0.0, nan, 0.0, 0.0, nan, 0.0, nan, 0.0, 0.0, nan, 0.0, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan, nan, 0.0, 0.0, nan, nan, nan, nan, nan, nan, 0.0, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, 0.0, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan] |

| 3.185 | 7.0 | 140 | 3.2048 | 0.0641 | 0.1138 | 0.4879 | [0.4625065290705925, 0.7300395796369592, 0.8077190100423401, 0.42056456498035616, 0.6794219083593365, 0.044713581380198694, 0.0, 0.028159846611837125, 0.024135304777162067, nan, 0.0, 0.0, 0.03210493557646753, nan, 0.0, 0.009216222146861098, 0.0, 0.0, 0.09707657301147606, 0.0, nan, nan, 0.0, 0.0, 0.0, nan, nan, 0.0, 0.0, nan, 0.0, 0.0, nan, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, 0.0, nan, 0.0, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan, nan, 0.0, 0.0, nan, nan, nan, nan, nan, nan, 0.0, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, 0.0, 0.0, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan, 0.0, nan, 0.0, nan, nan] | [0.9052804046916575, 0.9062415288696124, 0.9927588921405436, 0.7748463049412004, 0.7540763571286538, 0.06762051902106983, nan, 0.028190143299474182, 0.027834989096195785, nan, 0.0, nan, 0.033056902637091455, nan, 0.0, 0.015698800502905296, nan, nan, 0.15848340466768956, 0.0, nan, nan, 0.0, 0.0, nan, nan, nan, 0.0, 0.0, nan, 0.0, 0.0, nan, 0.0, nan, 0.0, 0.0, nan, 0.0, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan, nan, 0.0, 0.0, nan, nan, nan, nan, nan, nan, 0.0, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, 0.0, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan] |

| 3.0486 | 8.0 | 160 | 3.0909 | 0.0698 | 0.1105 | 0.4975 | [0.4651597922984161, 0.7260885140196046, 0.9206514284429353, 0.45898710456104685, 0.7276357698339558, 0.04093562606727295, 0.0, 0.015882548879086716, 0.005692492407655908, nan, 0.0, 0.0, 0.010177352573912554, nan, 0.0, 0.008494170905639253, 0.0, 0.0, 0.04026935665611418, 0.0, nan, nan, 0.0, 0.0, 0.0, nan, nan, 0.0, 0.0, nan, 0.0, 0.0, nan, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan, nan, 0.0, 0.0, nan, nan, nan, nan, nan, nan, 0.0, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, 0.0, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, 0.0, nan, 0.0, nan, nan] | [0.9556969599371216, 0.9443616156139876, 0.9841356641087428, 0.7372643555011352, 0.7457579372970107, 0.05770135017129039, nan, 0.01589578587411669, 0.006103101526532591, nan, 0.0, nan, 0.010368542378069194, nan, 0.0, 0.011859050596350538, nan, nan, 0.06060728417992409, 0.0, nan, nan, 0.0, 0.0, nan, nan, nan, 0.0, 0.0, nan, 0.0, 0.0, nan, 0.0, nan, 0.0, 0.0, nan, 0.0, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan, nan, 0.0, 0.0, nan, nan, nan, nan, nan, nan, 0.0, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, 0.0, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan] |

| 2.9697 | 9.0 | 180 | 2.9824 | 0.0718 | 0.1146 | 0.5030 | [0.47571929063641566, 0.7620331717703751, 0.9091288105282214, 0.45845890761854624, 0.6875787794176307, 0.06392846340574371, 0.0, 0.029610548726600787, 0.009870234653398776, nan, 0.0, 0.0, 0.04909003605961605, nan, 0.0, 0.0034267639047535366, 0.0, 0.0, 0.06778747181648857, 0.0, nan, nan, 0.0, 0.0, 0.0, nan, nan, 0.0, 0.0, nan, 0.0, 0.0, nan, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan, nan, 0.0, 0.0, nan, nan, nan, nan, nan, nan, 0.0, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, 0.0, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, 0.0, nan, 0.0, nan, nan] | [0.9398650535338866, 0.9216335727839523, 0.99588368557367, 0.8275235325629449, 0.7140916020414927, 0.09228409574348985, nan, 0.02978628830621739, 0.011115822631451418, nan, 0.0, nan, 0.04987230623547304, nan, 0.0, 0.005300893676305685, nan, nan, 0.11168537511103933, 0.0, nan, nan, 0.0, 0.0, nan, nan, nan, 0.0, 0.0, nan, 0.0, 0.0, nan, 0.0, nan, 0.0, 0.0, nan, 0.0, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan, nan, 0.0, 0.0, nan, nan, nan, nan, nan, nan, 0.0, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, 0.0, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan] |

| 2.975 | 10.0 | 200 | 2.9308 | 0.0751 | 0.1232 | 0.5169 | [0.49561224561439093, 0.7497106384686921, 0.9130948912615037, 0.3754963905075124, 0.7629068787092295, 0.22973931751081408, 0.0, 0.05083747710023554, 0.015496721582456333, nan, 0.0, 0.0, 0.012014619855137467, nan, 0.0, 0.0, 0.0, 0.0, 0.07644850942663618, 0.0, nan, nan, 0.0, 0.0, 0.0, nan, nan, 0.0, 0.0, nan, 0.0, 0.0, nan, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan, nan, 0.0, 0.0, nan, nan, nan, nan, nan, nan, 0.0, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, 0.0, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, 0.0, nan, 0.0, nan, nan] | [0.9386077791452011, 0.9492494578476552, 0.9605134275979352, 0.8774010867069718, 0.7647146550009942, 0.33713978639081077, nan, 0.05102107820605424, 0.017037194087715046, nan, 0.0, nan, 0.01204243067711173, nan, 0.0, 0.0, nan, nan, 0.14309941048211258, 0.0, nan, nan, 0.0, 0.0, nan, nan, nan, 0.0, 0.0, nan, 0.0, 0.0, nan, 0.0, nan, 0.0, 0.0, nan, 0.0, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan, nan, 0.0, 0.0, nan, nan, nan, nan, nan, nan, 0.0, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, 0.0, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan] |

| 2.5359 | 11.0 | 220 | 2.7183 | 0.0707 | 0.1091 | 0.4964 | [0.436494042818339, 0.7312176249886404, 0.8698091735772315, 0.449270633714399, 0.715452001258549, 0.07408947729180626, 0.0, 0.023337516948682666, 0.0007162605469365537, nan, 0.0, nan, 0.0, nan, 0.0, 0.0, 0.0, 0.0, 0.023290337478969417, 0.0, nan, nan, 0.0, 0.0, nan, nan, nan, 0.0, 0.0, nan, 0.0, 0.0, nan, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan, nan, 0.0, 0.0, nan, nan, nan, nan, nan, nan, 0.0, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, 0.0, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, 0.0, nan, 0.0, nan, nan] | [0.9563026973041733, 0.9542474247763622, 0.9920918689269339, 0.6937386801357108, 0.7159143633591833, 0.10770023062627349, nan, 0.023386555003864086, 0.0007572086261206688, nan, 0.0, nan, 0.0, nan, 0.0, 0.0, nan, nan, 0.028506823871436646, 0.0, nan, nan, 0.0, 0.0, nan, nan, nan, 0.0, 0.0, nan, 0.0, 0.0, nan, 0.0, nan, 0.0, 0.0, nan, 0.0, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan, nan, 0.0, 0.0, nan, nan, nan, nan, nan, nan, 0.0, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, 0.0, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan] |

| 2.5711 | 12.0 | 240 | 2.8566 | 0.0763 | 0.1213 | 0.5148 | [0.48463778209415787, 0.7972517840126281, 0.8992532843509164, 0.3620071881258745, 0.7991759196438913, 0.23374793779079467, 0.0, 0.05480187511495767, 0.017412369340310773, nan, 0.0, 0.0, 0.010156830611505994, nan, 0.0, 0.0024294237037948215, 0.0, 0.0, 0.07926785671727163, 0.0, nan, nan, 0.0, 0.0, 0.0, nan, nan, 0.0, 0.0, nan, 0.0, 0.0, nan, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan, nan, 0.0, 0.0, nan, nan, nan, nan, nan, nan, 0.0, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, 0.0, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, 0.0, nan, 0.0, nan, nan] | [0.9499483645829794, 0.9284274193548387, 0.9472931476885542, 0.8414836356215403, 0.8002750712533969, 0.2823492532634737, nan, 0.055683235931446584, 0.0197025684516598, nan, 0.0, nan, 0.010196371010167675, nan, 0.0, 0.0026844269258214687, nan, nan, 0.13429702010821287, 0.0, nan, nan, 0.0, 0.0, nan, nan, nan, 0.0, 0.0, nan, 0.0, 0.0, nan, 0.0, nan, 0.0, 0.0, nan, 0.0, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan, nan, 0.0, 0.0, nan, nan, nan, nan, nan, nan, 0.0, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, 0.0, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan] |

| 2.4239 | 13.0 | 260 | 2.8176 | 0.0785 | 0.1216 | 0.5215 | [0.4772270741554508, 0.7986058009962806, 0.9456717376101984, 0.38306746584232015, 0.7562297037577043, 0.16837090306762553, 0.0, 0.12381028314015488, 0.015467904098994586, nan, 0.0, 0.0, 0.0, nan, 0.0, 0.0001597290994473373, 0.0, nan, 0.09785982304164544, 0.0, nan, nan, 0.0, 0.0, 0.0, nan, nan, 0.0, 0.0, nan, 0.0, 0.0, nan, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan, nan, 0.0, 0.0, nan, nan, nan, nan, nan, nan, 0.0, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, 0.0, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, 0.0, nan, 0.0, nan, nan] | [0.9578687500092338, 0.9112649091894822, 0.9914008088407618, 0.8282059131144613, 0.7563299529396169, 0.2298761783211303, nan, 0.12549942670108144, 0.016355706324206444, nan, 0.0, nan, 0.0, nan, 0.0, 0.00016990043834313093, nan, nan, 0.17059678591617541, 0.0, nan, nan, 0.0, 0.0, nan, nan, nan, 0.0, 0.0, nan, 0.0, 0.0, nan, 0.0, nan, 0.0, 0.0, nan, 0.0, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan, nan, 0.0, 0.0, nan, nan, nan, nan, nan, nan, 0.0, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, 0.0, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan] |

| 2.5524 | 14.0 | 280 | 2.6836 | 0.0803 | 0.1330 | 0.5227 | [0.47872884863743637, 0.7690534821348954, 0.8143990086741016, 0.39197327448075703, 0.7833511205976521, 0.4254791369299206, 0.0, 0.05692139759856595, 0.015772870662460567, nan, 0.0, 0.0, 5.655842013479757e-05, nan, 0.0, 0.0, 0.0, nan, 0.12069485104184605, 0.0, nan, nan, 0.0, 0.0, 0.0, nan, nan, 0.0, 0.0, nan, 0.0, 0.0, nan, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan, nan, 0.0, 0.0, nan, nan, nan, nan, nan, nan, 0.0, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, 0.0, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, 0.0, nan, 0.0, nan, nan] | [0.910749769893671, 0.9497661968013011, 0.987344586595838, 0.8268475293997603, 0.790581295154769, 0.687467813080764, nan, 0.05742081150840754, 0.016658589774654713, nan, 0.0, nan, 5.739045596717266e-05, nan, 0.0, 0.0, nan, nan, 0.22639909553420012, 0.0, nan, nan, 0.0, 0.0, nan, nan, nan, 0.0, 0.0, nan, 0.0, 0.0, nan, 0.0, nan, 0.0, 0.0, nan, 0.0, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan, nan, 0.0, 0.0, nan, nan, nan, nan, nan, nan, 0.0, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, 0.0, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan] |

| 2.2365 | 15.0 | 300 | 2.6524 | 0.0783 | 0.1216 | 0.5209 | [0.4727612635992119, 0.7967315791368201, 0.9461993821443159, 0.4549778460982006, 0.7957215731446438, 0.1510305721400498, 0.0, 0.09256875307543579, 0.010900638156564927, nan, 0.0, 0.0, 0.0019851210451189653, nan, 0.0, 0.00021183876044062461, 0.0, 0.0, 0.11264323169547485, 0.0, nan, nan, 0.0, 0.0, 0.0, nan, nan, 0.0, 0.0, nan, 0.0, 0.0, nan, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan, nan, 0.0, 0.0, nan, nan, nan, nan, nan, nan, 0.0, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, 0.0, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, 0.0, nan, 0.0, nan, nan] | [0.9702627275023971, 0.9375, 0.9883661536797447, 0.8022116782735135, 0.8012858752568437, 0.16907368845301268, nan, 0.09407153356197942, 0.011433850254422099, nan, 0.0, nan, 0.002008665958851043, nan, 0.0, 0.0002378606136803833, nan, nan, 0.2107728337236534, 0.0, nan, nan, 0.0, 0.0, nan, nan, nan, 0.0, 0.0, nan, 0.0, 0.0, nan, 0.0, nan, 0.0, 0.0, nan, 0.0, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan, nan, 0.0, 0.0, nan, nan, nan, nan, nan, nan, 0.0, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, 0.0, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan] |

| 2.3117 | 16.0 | 320 | 2.5956 | 0.0860 | 0.1316 | 0.5415 | [0.4894104007776673, 0.779094307350649, 0.9424582493688185, 0.4388413214151246, 0.7654435517269941, 0.4694480829822636, 0.0, 0.14206474651680132, 0.012345500959083894, nan, 0.0, 0.0, 0.0, nan, 0.0, 0.0, 0.0, nan, 0.08905017355632691, 0.0, nan, nan, 0.0, 0.0, 0.0, nan, nan, 0.0, 0.0, nan, 0.0, 0.0, nan, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan, nan, 0.0, 0.0, nan, nan, nan, nan, nan, nan, 0.0, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, 0.0, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, 0.0, nan, 0.0, nan, nan] | [0.9692019484059504, 0.9569327731092437, 0.978030298477865, 0.8027792658350552, 0.7656591767747067, 0.5938290678668189, nan, 0.14709284411825616, 0.01296341167918585, nan, 0.0, nan, 0.0, nan, 0.0, 0.0, nan, nan, 0.17091980941613502, 0.0, nan, nan, 0.0, 0.0, nan, nan, nan, 0.0, 0.0, nan, 0.0, 0.0, nan, 0.0, nan, 0.0, 0.0, nan, 0.0, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan, nan, 0.0, 0.0, nan, nan, nan, nan, nan, nan, 0.0, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, 0.0, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan] |

| 2.8282 | 17.0 | 340 | 2.6868 | 0.0776 | 0.1316 | 0.5153 | [0.4743226115554707, 0.7954512666628195, 0.7070193198952633, 0.4438320057367676, 0.7353437159012003, 0.35684062059238364, 0.0, 0.14815248552530494, 0.0068802802972507135, nan, 0.0, 0.0, 0.0024155733434376926, 0.0, 0.0, 0.0, 0.0, nan, 0.13392355469165237, 0.0, nan, nan, 0.0, 0.0, 0.0, nan, nan, 0.0, 0.0, nan, 0.0, 0.0, nan, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan, nan, 0.0, 0.0, nan, nan, nan, nan, nan, nan, 0.0, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, 0.0, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, 0.0, nan, 0.0, nan, nan] | [0.8951394747222842, 0.9341539034968827, 0.9946517958548413, 0.7302237187826841, 0.7370252535295287, 0.5778195741250756, nan, 0.15975088015274502, 0.0073752120184153135, nan, 0.0, nan, 0.002439094378604838, nan, 0.0, 0.0, nan, nan, 0.35609303076798837, 0.0, nan, nan, 0.0, 0.0, nan, nan, nan, 0.0, 0.0, nan, 0.0, 0.0, nan, 0.0, nan, 0.0, 0.0, nan, 0.0, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan, nan, 0.0, 0.0, nan, nan, nan, nan, nan, nan, 0.0, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, 0.0, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan] |

| 2.0514 | 18.0 | 360 | 2.5944 | 0.0846 | 0.1299 | 0.5291 | [0.4706921853235463, 0.762537340607025, 0.9442168569685349, 0.4813733604418935, 0.7709313108564615, 0.30066122830344627, 0.0, 0.15233106243610275, 0.010987503600773652, nan, 0.0, 0.0, 0.0, nan, 0.0, 0.0013024142312579416, 0.0, nan, 0.16403877693519314, 0.0, nan, nan, 0.0, 0.0, 0.0, nan, nan, 0.0, 0.0, nan, 0.0, 0.0, nan, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan, nan, 0.0, 0.0, nan, nan, nan, nan, nan, nan, 0.0, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, 0.0, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, 0.0, nan, 0.0, nan, nan] | [0.9700204325555764, 0.9579323664949851, 0.9764679017613018, 0.6824889671181857, 0.7714423013190164, 0.3746669353574707, nan, 0.17009046505402142, 0.012130482190453113, nan, 0.0, nan, 0.0, nan, 0.0, 0.0013931835944136735, nan, nan, 0.40789792457401275, 0.0, nan, nan, 0.0, 0.0, nan, nan, nan, 0.0, 0.0, nan, 0.0, 0.0, nan, 0.0, nan, 0.0, 0.0, nan, 0.0, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan, nan, 0.0, 0.0, nan, nan, nan, nan, nan, nan, 0.0, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, 0.0, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan] |

| 2.1405 | 19.0 | 380 | 2.6256 | 0.0898 | 0.1426 | 0.5498 | [0.5185031999297933, 0.8150003672959671, 0.9434324339806381, 0.4280491080929272, 0.8144273942862782, 0.4402868608799049, 0.0, 0.23071341887392194, 0.0, nan, 0.0, 0.0, 0.0, nan, 0.0, 0.0, 0.0, nan, 0.11919999121834488, 0.0, nan, nan, 0.0, 0.0, 0.0, nan, nan, 0.0, 0.0, nan, 0.0, 0.0, nan, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan, nan, 0.0, 0.0, nan, nan, nan, nan, nan, nan, 0.0, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, 0.0, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, 0.0, nan, 0.0, nan, nan] | [0.9427297480575776, 0.9398380319869883, 0.9896881816706828, 0.7824609066095253, 0.8205408629946311, 0.6632744452654441, nan, 0.2698343747000914, 0.0, nan, 0.0, nan, 0.0, nan, 0.0, 0.0, nan, nan, 0.438464023257692, 0.0, nan, nan, 0.0, 0.0, nan, nan, nan, 0.0, 0.0, nan, 0.0, 0.0, nan, 0.0, nan, 0.0, 0.0, nan, 0.0, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan, nan, 0.0, 0.0, nan, nan, nan, nan, nan, nan, 0.0, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, 0.0, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan] |

| 2.7111 | 20.0 | 400 | 2.5802 | 0.0873 | 0.1327 | 0.5361 | [0.4831464880075981, 0.8199493255831284, 0.9468093671643164, 0.45873317459935703, 0.8320261007118376, 0.2898698861398996, 0.0, 0.17530824031501036, 0.004702239712946351, nan, 0.0, 0.0, 0.0, nan, 0.0, 0.0, 0.0, nan, 0.18089675000909852, 0.0, nan, nan, 0.0, 0.0, 0.0, nan, nan, 0.0, 0.0, nan, 0.0, 0.0, nan, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan, nan, 0.0, 0.0, nan, nan, nan, nan, nan, nan, 0.0, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, 0.0, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, 0.0, nan, 0.0, nan, nan] | [0.972520207250824, 0.9320700054215234, 0.9893276285822452, 0.7298410754827683, 0.836713727049778, 0.3873401849488368, nan, 0.18507705440530972, 0.004921856069784347, nan, 0.0, nan, 0.0, nan, 0.0, 0.0, nan, nan, 0.4013970766373254, 0.0, nan, nan, 0.0, 0.0, nan, nan, nan, 0.0, 0.0, nan, 0.0, 0.0, nan, 0.0, nan, 0.0, 0.0, nan, 0.0, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan, nan, 0.0, 0.0, nan, nan, nan, nan, nan, nan, 0.0, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, 0.0, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan] |

| 2.5598 | 21.0 | 420 | 2.5502 | 0.0870 | 0.1403 | 0.5487 | [0.5087946262173165, 0.7867801857585139, 0.876644631963781, 0.35241226393455155, 0.8468368479467259, 0.4543256761763616, 0.0, 0.2858426005132592, 0.0036147422467467318, nan, 0.0, 0.0, 0.0, nan, 0.0, 0.0, 0.0, nan, 0.14941308790216548, 0.0, nan, nan, 0.0, 0.0, 0.0, 0.0, nan, 0.0, 0.0, nan, 0.0, 0.0, nan, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan, nan, 0.0, 0.0, nan, nan, nan, nan, nan, nan, 0.0, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, 0.0, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, 0.0, nan, 0.0, nan, nan] | [0.9337426739020271, 0.8611073461642722, 0.991364753531918, 0.8818397489859953, 0.8471034665606151, 0.5491256353417971, nan, 0.303808018103113, 0.003710322267991277, nan, 0.0, nan, 0.0, nan, 0.0, 0.0, nan, nan, 0.38084470645239443, 0.0, nan, nan, 0.0, 0.0, nan, nan, nan, 0.0, 0.0, nan, 0.0, 0.0, nan, 0.0, nan, 0.0, 0.0, nan, 0.0, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan, nan, 0.0, 0.0, nan, nan, nan, nan, nan, nan, 0.0, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, 0.0, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan] |

| 1.9659 | 22.0 | 440 | 2.5646 | 0.0885 | 0.1421 | 0.5519 | [0.5045213604927574, 0.8042200331977878, 0.9418047369854599, 0.45272025606142935, 0.8257880700359456, 0.49502223166994386, 0.0, 0.2643218071018291, 0.009075791313171171, nan, 0.0, 0.0, 0.0, 0.0, 0.0, 0.00026377394572851066, 0.0, nan, 0.12570543441761717, 0.0, nan, nan, 0.0, 0.0, 0.0, 0.0, nan, 0.0, 0.0, nan, 0.0, 0.0, nan, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan, nan, 0.0, 0.0, nan, nan, nan, nan, nan, nan, 0.0, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, 0.0, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, 0.0, nan, 0.0, nan, nan] | [0.9617011469119953, 0.9398803876389266, 0.9765880861241144, 0.7279342363715211, 0.8260754291774375, 0.625702514498108, nan, 0.28985185147769693, 0.009752847104434213, nan, 0.0, nan, 0.0, nan, 0.0, 0.0002718407013490095, nan, nan, 0.46858596462892677, 0.0, nan, nan, 0.0, 0.0, nan, nan, nan, 0.0, 0.0, nan, 0.0, 0.0, nan, 0.0, nan, 0.0, 0.0, nan, 0.0, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan, nan, 0.0, 0.0, nan, nan, nan, nan, nan, nan, 0.0, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, 0.0, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan] |

| 1.7829 | 23.0 | 460 | 2.5177 | 0.0846 | 0.1345 | 0.5462 | [0.4930088449307022, 0.7882521985751578, 0.9101626308882405, 0.44989356331658825, 0.8198836997398983, 0.40375010070087813, 0.0, 0.255825791600409, 0.006403338369666883, nan, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, nan, 0.10222337548824124, 0.0, nan, nan, 0.0, 0.0, 0.0, 0.0, nan, 0.0, 0.0, nan, 0.0, 0.0, nan, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan, nan, 0.0, 0.0, nan, nan, nan, nan, nan, nan, 0.0, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, 0.0, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, 0.0, nan, 0.0, nan, nan] | [0.9612653114893605, 0.8876050420168067, 0.9877291765568382, 0.8127343690211984, 0.8200603168290581, 0.44887037907794275, nan, 0.28685150295236317, 0.006739156772473952, nan, 0.0, nan, 0.0, nan, 0.0, 0.0, nan, nan, 0.3022288621497214, 0.0, nan, nan, 0.0, 0.0, nan, nan, nan, 0.0, 0.0, nan, 0.0, 0.0, nan, 0.0, nan, 0.0, 0.0, nan, 0.0, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan, nan, 0.0, 0.0, nan, nan, nan, nan, nan, nan, 0.0, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, 0.0, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan] |

| 2.1919 | 24.0 | 480 | 2.5331 | 0.0882 | 0.1434 | 0.5555 | [0.5115400805821155, 0.7956842451360199, 0.8616338898084154, 0.45560905508889904, 0.8224094829727, 0.5002673724089801, 0.0, 0.3255104125870554, 0.012325574865934807, nan, 0.0, 0.0, 0.0007629074402548111, 0.0, 0.0, 0.0, 0.0, nan, 0.12330337703247325, 0.0, nan, nan, 0.0, 0.0, 0.0, 0.0, nan, 0.0, 0.0, nan, 0.0, 0.0, nan, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan, nan, 0.0, 0.0, nan, nan, nan, nan, nan, nan, 0.0, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, 0.0, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, 0.0, nan, 0.0, nan, nan] | [0.9425568912967359, 0.9430147058823529, 0.9791480130520218, 0.7440562740746409, 0.8231590110691324, 0.6179440675309554, nan, 0.3862317339893018, 0.013296583474678943, nan, 0.0, nan, 0.0007652060795623021, nan, 0.0, 0.0, nan, nan, 0.42990390050876204, 0.0, nan, nan, 0.0, 0.0, nan, nan, nan, 0.0, 0.0, nan, 0.0, 0.0, nan, 0.0, nan, 0.0, 0.0, nan, 0.0, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan, nan, 0.0, 0.0, nan, nan, nan, nan, nan, nan, 0.0, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, 0.0, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan] |

| 2.4307 | 25.0 | 500 | 2.4986 | 0.0878 | 0.1390 | 0.5565 | [0.5067241688681817, 0.8073821293627443, 0.9322059866647752, 0.44890528812665903, 0.8061694443524071, 0.41967209810856837, 0.0, 0.3408865002560274, 0.016331731047663174, nan, 0.0, 0.0, 0.000765023141950044, 0.0, 0.0, 0.0, 0.0, nan, 0.11318709117849012, 0.0, nan, nan, 0.0, 0.0, 0.0, 0.0, nan, 0.0, 0.0, nan, 0.0, 0.0, nan, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan, nan, 0.0, 0.0, nan, nan, nan, nan, nan, nan, 0.0, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, 0.0, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, 0.0, nan, 0.0, nan, nan] | [0.9637754280420943, 0.9159240309026837, 0.987182337706041, 0.7603568786510548, 0.8063564658315105, 0.4662569131904794, nan, 0.40015254297216346, 0.017082626605282286, nan, 0.0, nan, 0.0007652060795623021, nan, 0.0, 0.0, nan, nan, 0.3793911007025761, 0.0, nan, nan, 0.0, 0.0, nan, nan, nan, 0.0, 0.0, nan, 0.0, 0.0, nan, 0.0, nan, 0.0, 0.0, nan, 0.0, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan, nan, 0.0, 0.0, nan, nan, nan, nan, nan, nan, 0.0, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, 0.0, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan] |

| 2.7454 | 26.0 | 520 | 2.4647 | 0.0931 | 0.1433 | 0.5644 | [0.5157605506728834, 0.803062049148433, 0.9502783532598003, 0.4501630383564226, 0.8001457822543238, 0.5544864444466602, 0.0, 0.34386147348502605, 0.03533719952693749, nan, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, nan, 0.10768117416918258, 0.0, nan, nan, 0.0, 0.0, nan, 0.0, nan, 0.0, 0.0, nan, 0.0, 0.0, nan, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan, nan, 0.0, 0.0, nan, nan, nan, nan, nan, nan, 0.0, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, 0.0, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, 0.0, nan, 0.0, nan, nan] | [0.966183603428178, 0.9091047709406344, 0.9867737108724783, 0.7615685824341216, 0.8003579240405647, 0.6225789839009427, nan, 0.407542290266041, 0.03755754785558517, nan, 0.0, nan, 0.0, nan, 0.0, 0.0, nan, nan, 0.38451909876443513, 0.0, nan, nan, 0.0, 0.0, nan, nan, nan, 0.0, 0.0, nan, 0.0, 0.0, nan, 0.0, nan, 0.0, 0.0, nan, 0.0, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan, nan, 0.0, 0.0, nan, nan, nan, nan, nan, nan, 0.0, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, 0.0, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, nan, nan, 0.0, nan, nan, nan, nan, 0.0, nan, nan] |