LAE-DINO

The model, training, and evaluation data of LAE-DINO.

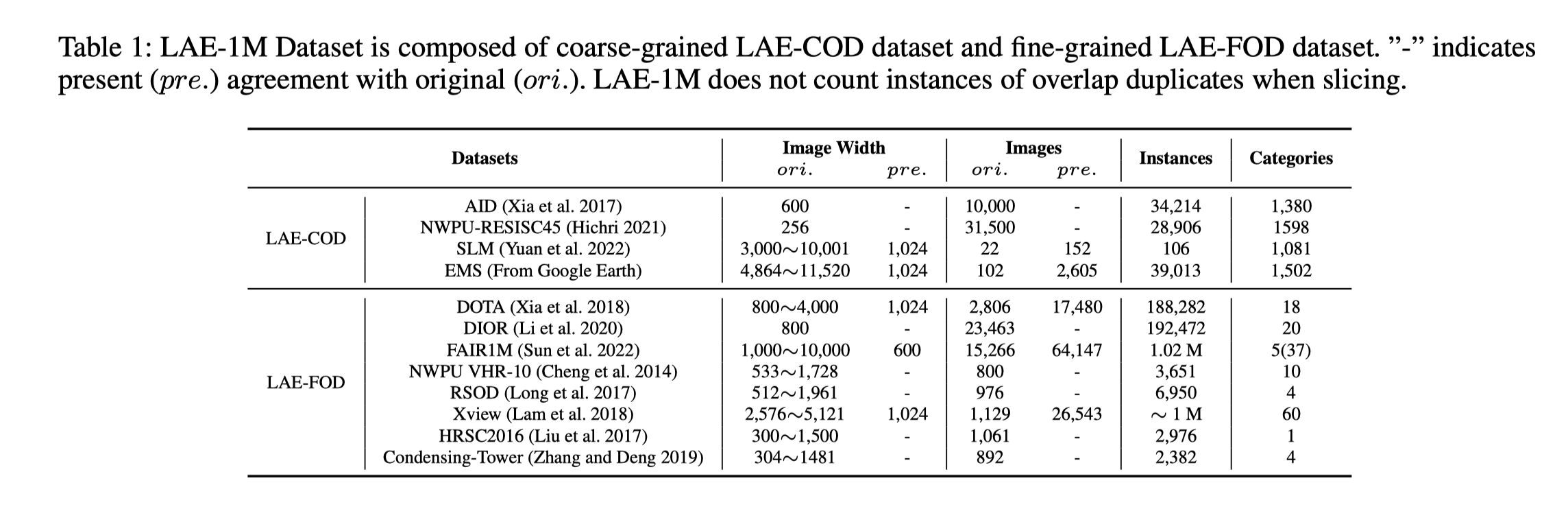

LAE-1M (Locate Anything on Earth - 1 Million) is a large-scale open-vocabulary remote sensing object detection dataset introduced in the paper "Locate Anything on Earth: Advancing Open-Vocabulary Object Detection for Remote Sensing Community" (AAAI 2025).

It contains over 1M images with coarse-grained (LAE-COD) and fine-grained (LAE-FOD) annotations, unified in COCO format, enabling zero-shot and few-shot detection in remote sensing.

| Subset | # Images | # Classes | Format | Description |
|---|---|---|---|---|
| LAE-COD | 400k+ | 20+ | COCO | Coarse-grained detection (AID, EMS, SLM) |
| LAE-FOD | 600k+ | 50+ | COCO | Fine-grained detection (DIOR, DOTAv2, FAIR1M) |
| LAE-80C | 20k (val) | 80 | COCO | Benchmark with 80 semantically distinct classes |
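Each subset pools the source datasets named in parentheses in the table into a single COCO file. The sketch below illustrates what such unification involves, assuming every source is already a COCO-style dict with `images`, `annotations`, and `categories` lists: categories are matched by name, and all ids are renumbered so they cannot collide. The helper `merge_coco` and the sample data are hypothetical; this is not the authors' LAE-Label Engine.

```python
from typing import Any, Dict, List


def merge_coco(sources: List[Dict[str, Any]]) -> Dict[str, Any]:
    """Merge COCO-style dicts into one, unifying categories by name.

    Hypothetical helper for illustration: image, annotation, and category
    ids are reassigned so they are unique in the merged result.
    """
    merged: Dict[str, Any] = {"images": [], "annotations": [], "categories": []}
    cat_id_by_name: Dict[str, int] = {}
    next_img_id = 1
    next_ann_id = 1

    for src in sources:
        # Map this source's category ids to unified ids shared by name
        cat_map: Dict[int, int] = {}
        for cat in src["categories"]:
            name = cat["name"]
            if name not in cat_id_by_name:
                cat_id_by_name[name] = len(cat_id_by_name) + 1
                merged["categories"].append({"id": cat_id_by_name[name], "name": name})
            cat_map[cat["id"]] = cat_id_by_name[name]

        # Renumber images so ids from different sources never collide
        img_map: Dict[int, int] = {}
        for img in src["images"]:
            img_map[img["id"]] = next_img_id
            merged["images"].append({**img, "id": next_img_id})
            next_img_id += 1

        # Rewrite annotations to point at the new image and category ids
        for ann in src["annotations"]:
            merged["annotations"].append({
                **ann,
                "id": next_ann_id,
                "image_id": img_map[ann["image_id"]],
                "category_id": cat_map[ann["category_id"]],
            })
            next_ann_id += 1

    return merged


# Two tiny hypothetical sources that both contain an "airplane" class
a = {
    "images": [{"id": 1, "file_name": "a.jpg"}],
    "annotations": [{"id": 1, "image_id": 1, "category_id": 5, "bbox": [0, 0, 10, 10]}],
    "categories": [{"id": 5, "name": "airplane"}],
}
b = {
    "images": [{"id": 1, "file_name": "b.jpg"}],
    "annotations": [{"id": 1, "image_id": 1, "category_id": 2, "bbox": [5, 5, 4, 4]}],
    "categories": [{"id": 2, "name": "airplane"}, {"id": 3, "name": "ship"}],
}
merged = merge_coco([a, b])
print(len(merged["images"]), len(merged["categories"]))  # 2 2
```

Matching categories by exact name is the simplest choice; real sources often label the same concept differently (for example "plane" vs "airplane"), so a practical pipeline would add a name-normalization step before merging.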

All annotations are in COCO JSON format with bounding boxes and categories.
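In COCO, each box is stored as `[x, y, width, height]` in pixels with `(x, y)` at the top-left corner, and annotations reference images and categories through integer ids. A minimal sketch of reading such a file with only the standard library, assuming the standard COCO layout; the function name `boxes_by_image` and the sample records are hypothetical and not taken from LAE-1M.

```python
import json
from collections import defaultdict
from typing import Dict, List, Tuple

Box = Tuple[str, float, float, float, float]  # (category name, x1, y1, x2, y2)


def boxes_by_image(coco_json: str) -> Dict[str, List[Box]]:
    """Group COCO annotations by image file name, with corner-format boxes."""
    coco = json.loads(coco_json)
    cat_names = {c["id"]: c["name"] for c in coco["categories"]}
    file_names = {img["id"]: img["file_name"] for img in coco["images"]}

    grouped: Dict[str, List[Box]] = defaultdict(list)
    for ann in coco["annotations"]:
        x, y, w, h = ann["bbox"]  # COCO: top-left corner plus width/height
        name = cat_names[ann["category_id"]]
        grouped[file_names[ann["image_id"]]].append((name, x, y, x + w, y + h))
    return dict(grouped)


# Hypothetical single-image example
sample = json.dumps({
    "images": [{"id": 7, "file_name": "scene_0001.png", "width": 800, "height": 800}],
    "annotations": [
        {"id": 1, "image_id": 7, "category_id": 1, "bbox": [100, 50, 40, 20]},
        {"id": 2, "image_id": 7, "category_id": 2, "bbox": [300, 300, 60, 60]},
    ],
    "categories": [{"id": 1, "name": "vehicle"}, {"id": 2, "name": "storage tank"}],
})
print(boxes_by_image(sample))
# {'scene_0001.png': [('vehicle', 100, 50, 140, 70), ('storage tank', 300, 300, 360, 360)]}
```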

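The dataset can be loaded with the Hugging Face `datasets` library:

```python
import io

from datasets import load_dataset
from PIL import Image  # requires Pillow

# Load the training split
dataset = load_dataset("jaychempan/LAE-1M", split="train")

# Access one example
example = dataset[0]
print(example.keys())  # e.g. image, annotations, category_id

# Show the image. With a datasets Image feature this is already a PIL image;
# if the column holds raw encoded bytes (or a {"bytes": ...} dict), decode it first
img = example["image"]
if isinstance(img, dict):
    img = img["bytes"]
if isinstance(img, (bytes, bytearray)):
    img = Image.open(io.BytesIO(img))
img.show()
```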
