---

license: apache-2.0

base_model:

- Qwen/Qwen-Image-Edit

pipeline_tag: image-to-image

tags:

- gguf-connector

- gguf-node

widget:

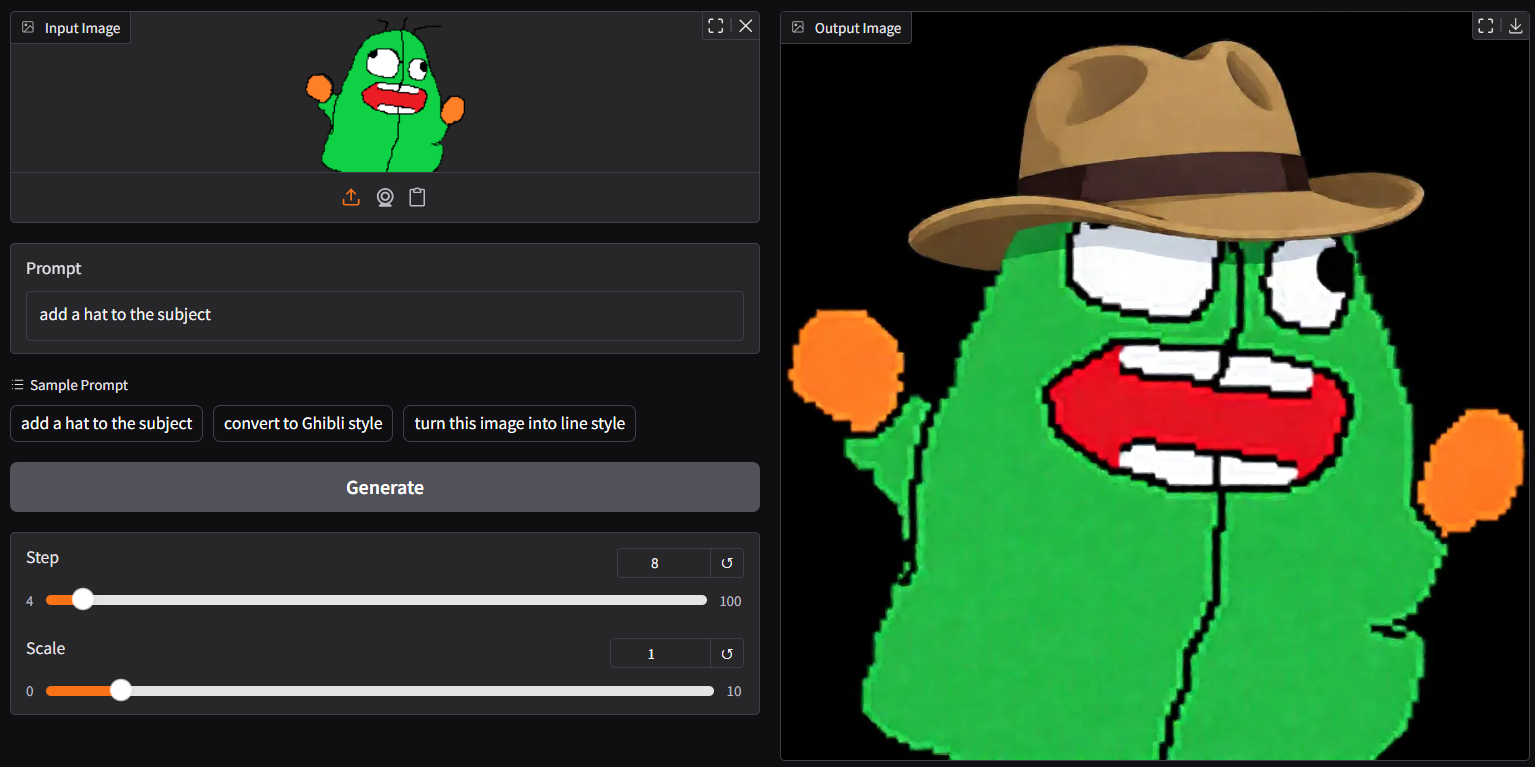

- text: >-

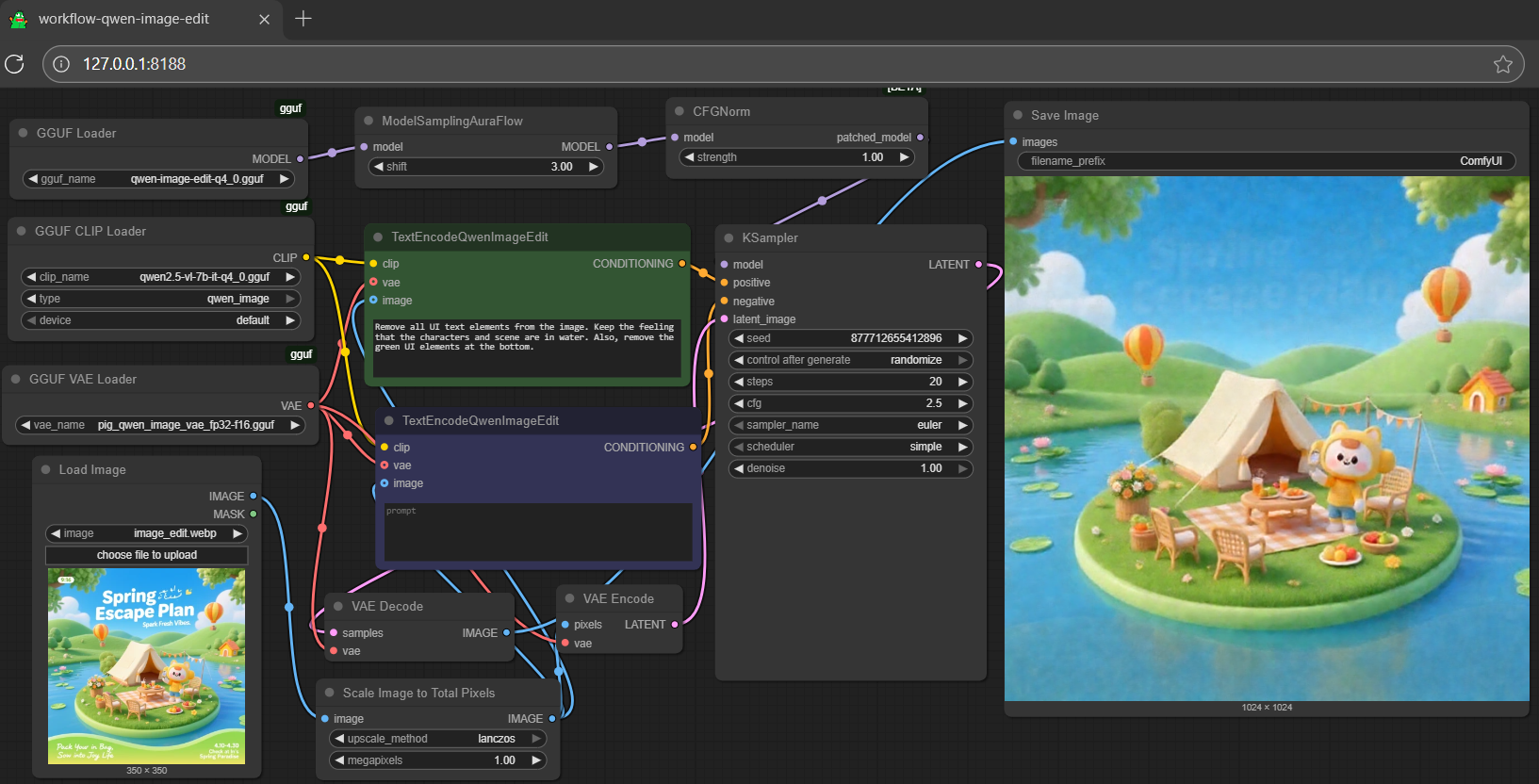

remove all UI text elements from the image. Keep the feeling that the characters and scene are in water. Also, remove the green UI elements at the bottom

output:

url: workflow-demo1.png

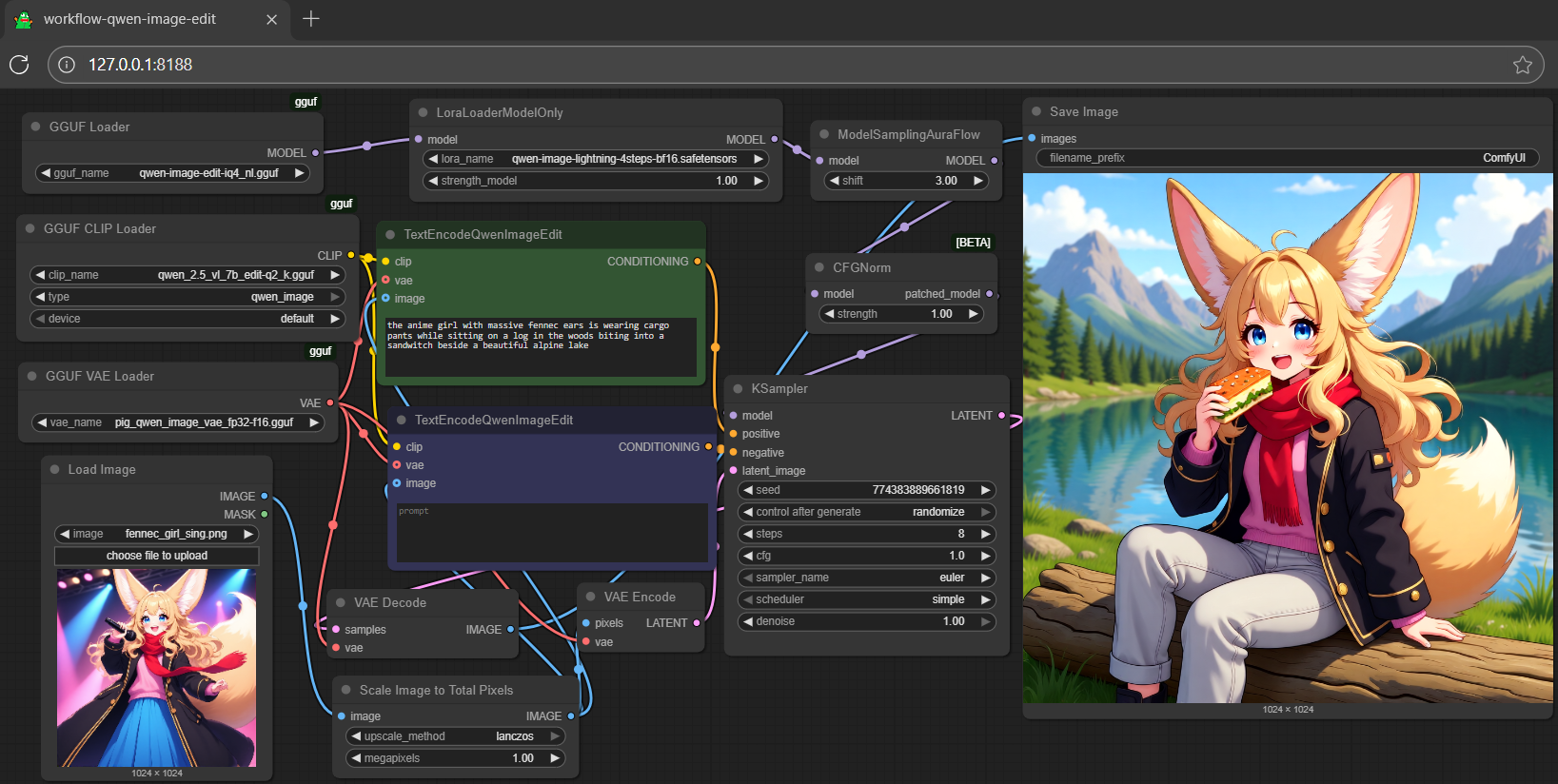

- text: >-

the anime girl with massive fennec ears is wearing cargo pants while sitting on a log in the woods biting into a sandwitch beside a beautiful alpine lake

output:

url: workflow-demo2.png

- text: >-

the anime girl with massive fennec ears is wearing a maid outfit with a long black gold leaf pattern dress and a white apron mouth open holding a fancy black forest cake with candles on top in the kitchen of an old dark Victorian mansion lit by candlelight with a bright window to the foggy forest and very expensive stuff everywhere

output:

url: workflow-demo3.png

---

## **qwen-image-edit-gguf**

- use 8 steps (lite-lora applied automatically); saves up to 70% of loading time

- run it with `gguf-connector`; simply execute the command below in console/terminal

```
ggc q6
```

>
>GGUF file(s) available. Select which one to use:
>
>1. qwen-image-edit-iq4_nl.gguf
>2. qwen-image-edit-q2_k.gguf
>3. qwen-image-edit-q4_0.gguf
>4. qwen-image-edit-q8_0.gguf
>
>Enter your choice (1 to 4): _
>

- pick a `gguf` file in your current directory to interact with; nothing else is needed
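
The selection step can be pictured with a short Python sketch. This is not the actual `gguf-connector` code: `list_gguf_files`, `format_menu` and `choose_file` are hypothetical helper names, and the sketch only assumes that the menu lists the `.gguf` files of the current directory in alphabetical order, as the sample output above suggests.

```python
from pathlib import Path


def list_gguf_files(directory="."):
    """Return the names of the .gguf files in `directory`, sorted alphabetically."""
    return sorted(p.name for p in Path(directory).glob("*.gguf") if p.is_file())


def format_menu(files):
    """Build the menu lines: a header, then one numbered entry per file."""
    lines = ["GGUF file(s) available. Select which one to use:"]
    lines += [f"{number}. {name}" for number, name in enumerate(files, start=1)]
    return lines


def choose_file(files, choice):
    """Map a 1-based menu choice (as typed by the user) to a file name."""
    index = int(choice)
    if not 1 <= index <= len(files):
        raise ValueError(f"choice must be between 1 and {len(files)}")
    return files[index - 1]
```

In a folder holding the four files listed above, `choose_file(list_gguf_files(), "4")` would pick `qwen-image-edit-q8_0.gguf`; an out-of-range choice raises `ValueError` instead of silently picking a file.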

## **run it with gguf-node via comfyui**

- drag **qwen-image-edit** to > `./ComfyUI/models/diffusion_models`

- pick either option 1 or 2 below and drag the file(s) to > `./ComfyUI/models/text_encoders`

- option 1: just **qwen2.5-vl-7b-edit** [[7.95GB](https://huggingface.co/calcuis/pig-encoder/blob/main/qwen_2.5_vl_7b_edit-q2_k.gguf)] *

- option 2: both **qwen2.5-vl-7b** [[4.43GB](https://huggingface.co/chatpig/qwen2.5-vl-7b-it-gguf/blob/main/qwen2.5-vl-7b-it-q4_0.gguf)] and **mmproj-clip** [[608MB](https://huggingface.co/chatpig/qwen2.5-vl-7b-it-gguf/blob/main/mmproj-qwen2.5-vl-7b-it-q4_0.gguf)]

- drag **pig** [[254MB](https://huggingface.co/calcuis/pig-vae/blob/main/pig_qwen_image_vae_fp32-f16.gguf)] to > `./ComfyUI/models/vae`

*note: option 1 (pig quant) is an all-in-one choice; for option 2 (llama.cpp quant), you need to prepare both text-model and mmproj-clip
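
For those who prefer a script to dragging files by hand, the placement steps above could be sketched as follows. This is a minimal sketch, not part of gguf-node or ComfyUI: `place_models` and `DESTINATIONS` are hypothetical names, and the filename patterns are assumptions derived from the file names in the links above.

```python
import shutil
from fnmatch import fnmatch
from pathlib import Path

# Filename pattern -> ComfyUI subfolder, following the steps above.
# The patterns are assumptions based on the linked file names.
DESTINATIONS = [
    ("qwen-image-edit-*.gguf", "models/diffusion_models"),
    ("qwen_2.5_vl_*.gguf", "models/text_encoders"),  # option 1 (pig quant)
    ("qwen2.5-vl-*.gguf", "models/text_encoders"),   # option 2: text model
    ("mmproj-*.gguf", "models/text_encoders"),       # option 2: mmproj-clip
    ("pig_qwen_image_vae*.gguf", "models/vae"),
]


def place_models(download_dir, comfyui_root):
    """Move recognized .gguf files into their ComfyUI folders.

    Returns a dict mapping each moved file name to its subfolder;
    files that match no pattern are left where they are.
    """
    moved = {}
    for path in sorted(Path(download_dir).glob("*.gguf")):
        for pattern, subfolder in DESTINATIONS:
            if fnmatch(path.name, pattern):
                target_dir = Path(comfyui_root) / subfolder
                target_dir.mkdir(parents=True, exist_ok=True)
                shutil.move(str(path), str(target_dir / path.name))
                moved[path.name] = subfolder
                break
    return moved
```

Calling `place_models("downloads", "./ComfyUI")` would move every matching file from a `downloads` folder and report where each one went, which makes it easy to spot a file that was not recognized.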

<Gallery />

- get more gguf encoders either [here](https://huggingface.co/calcuis/pig-encoder/tree/main) (pig quant) or [here](https://huggingface.co/chatpig/qwen2.5-vl-7b-it-gguf/tree/main) (llama.cpp quant)

### **reference**

- base model from [qwen](https://huggingface.co/Qwen)

- comfyui from [comfyanonymous](https://github.com/comfyanonymous/ComfyUI)

- gguf-node ([pypi](https://pypi.org/project/gguf-node)|[repo](https://github.com/calcuis/gguf)|[pack](https://github.com/calcuis/gguf/releases))

- gguf-connector ([pypi](https://pypi.org/project/gguf-connector))