Update README.md

README.md

CHANGED

|

@@ -21,7 +21,7 @@ widget:

|

|

| 21 |

url: workflow-demo3.png

|

| 22 |

---

|

| 23 |

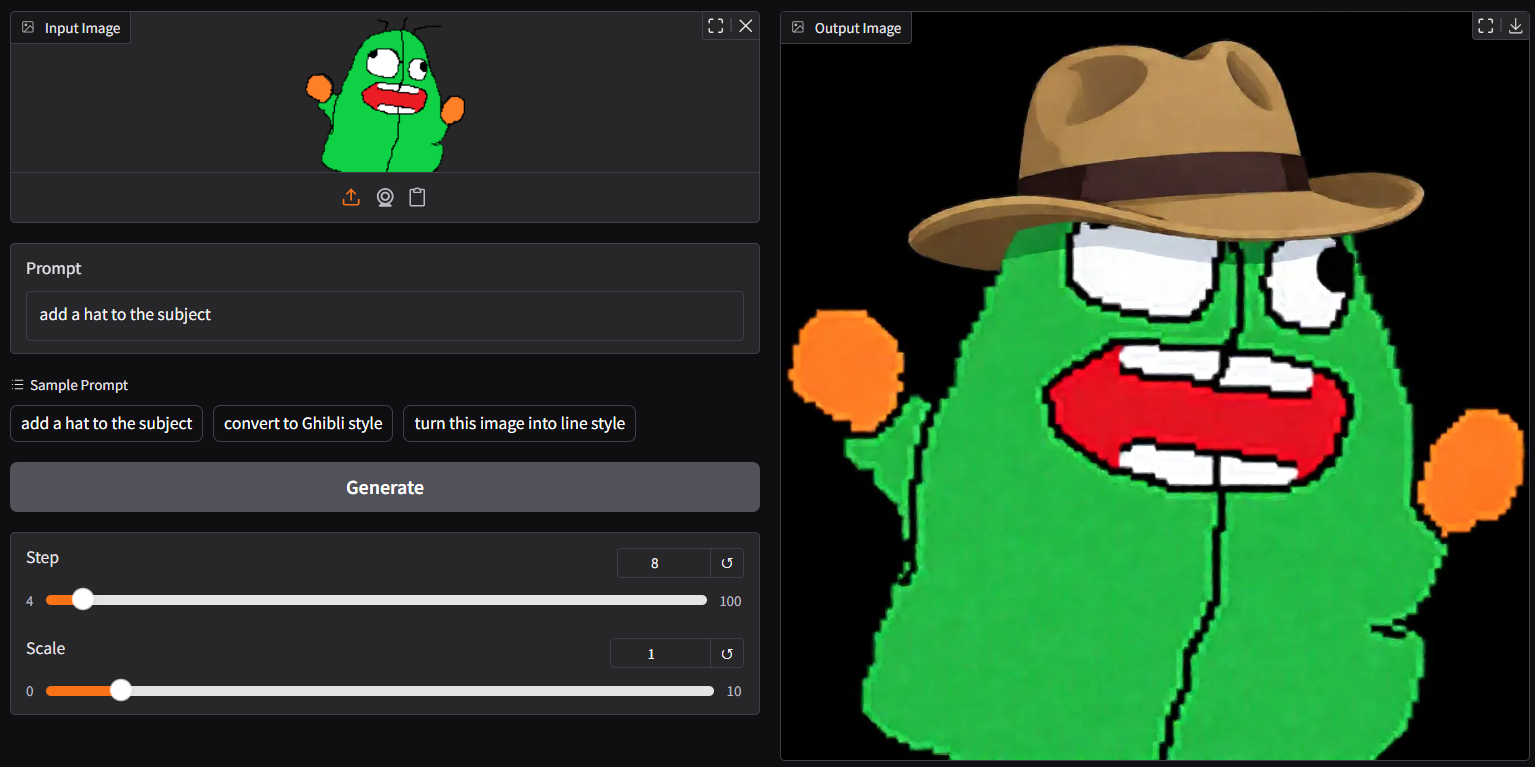

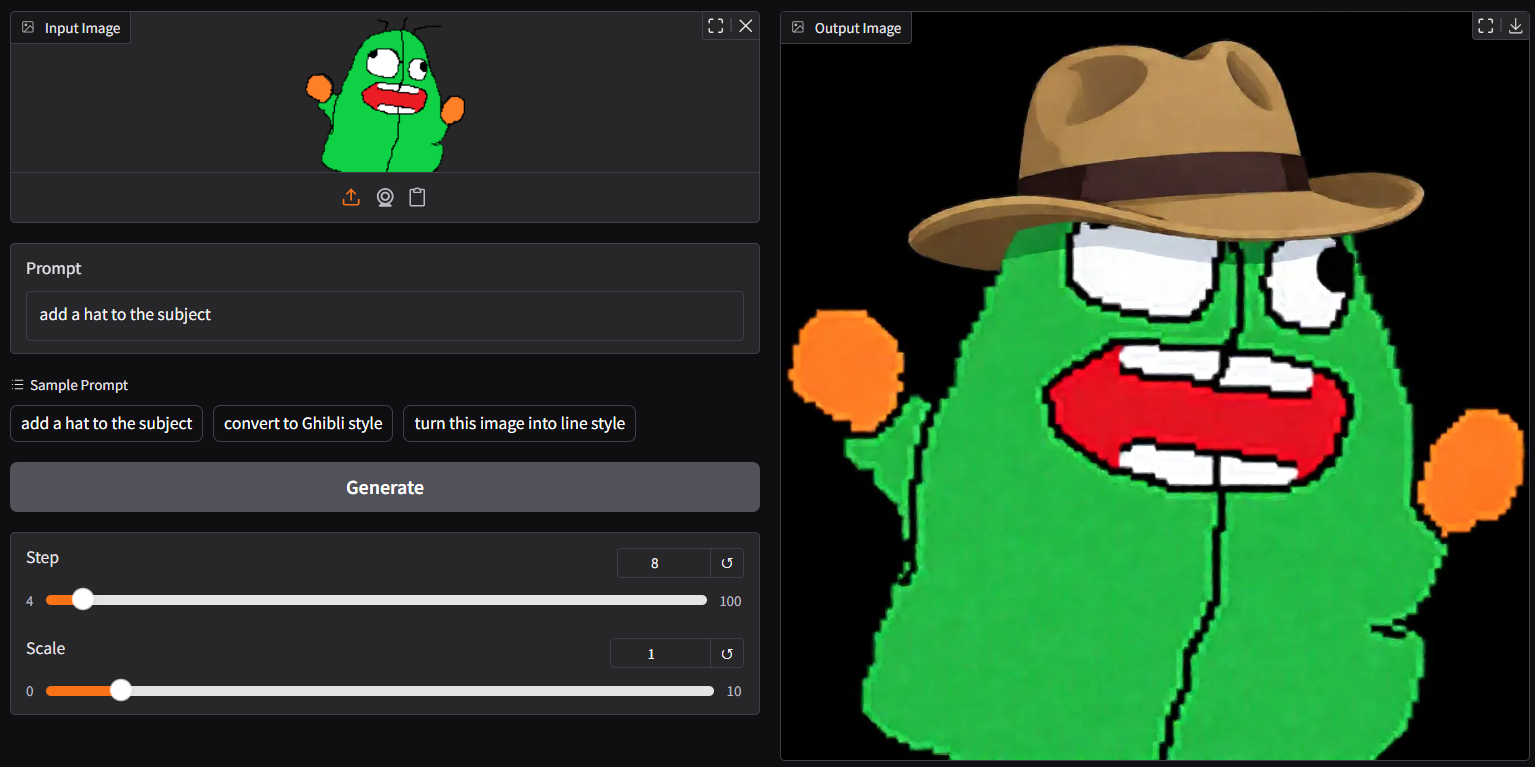

## **qwen-image-edit-gguf**

|

| 24 |

-

- use 8-step (lite-lora auto applied)

|

| 25 |

- run it with `gguf-connector`; simply execute the command below in console/terminal

|

| 26 |

```

|

| 27 |

ggc q6

|

|

@@ -36,20 +36,20 @@ ggc q6

|

|

| 36 |

>

|

| 37 |

>Enter your choice (1 to 4): _

|

| 38 |

>

|

| 39 |

-

- opt a `gguf` file in

|

| 40 |

|

| 41 |

|

| 42 |

|

| 43 |

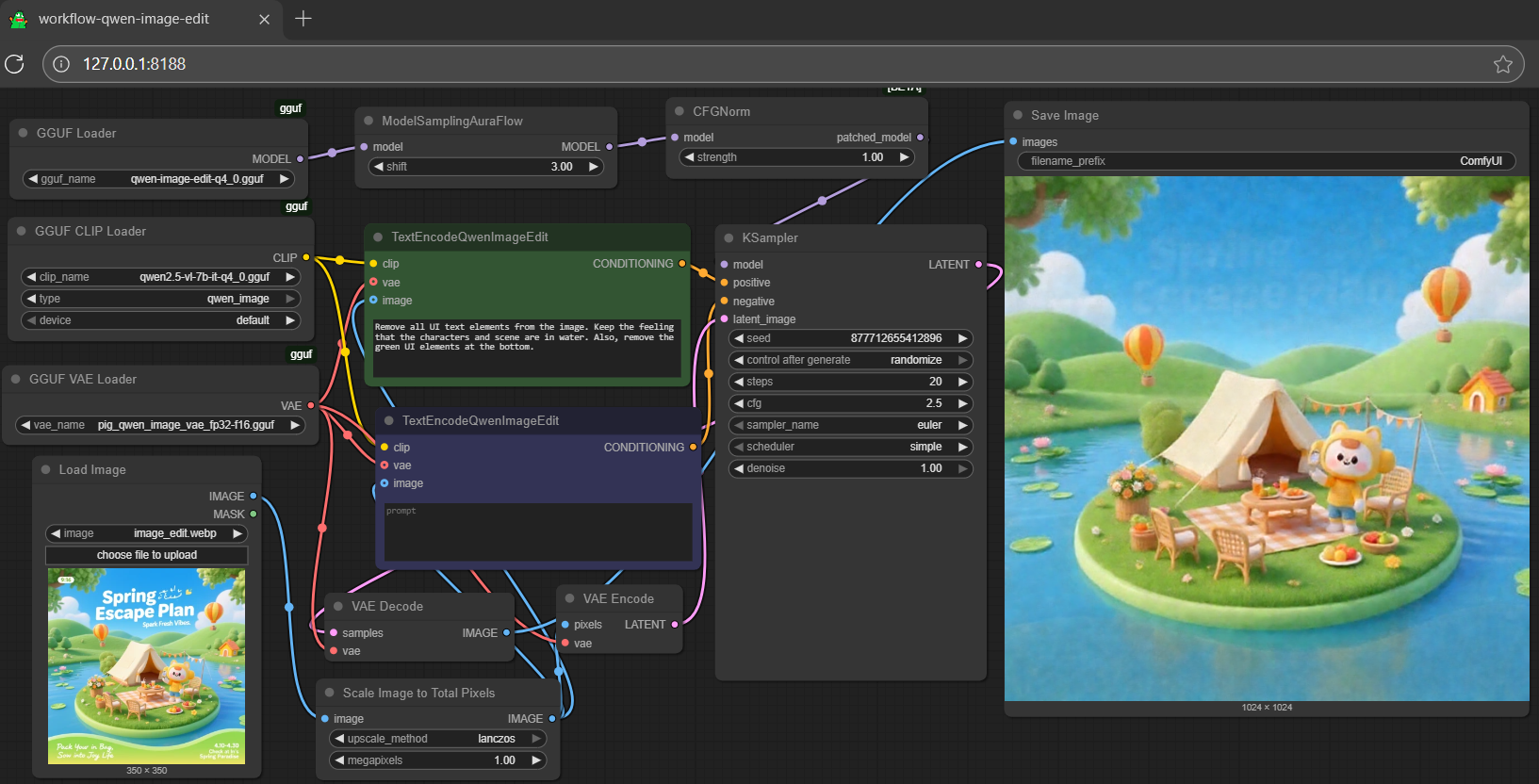

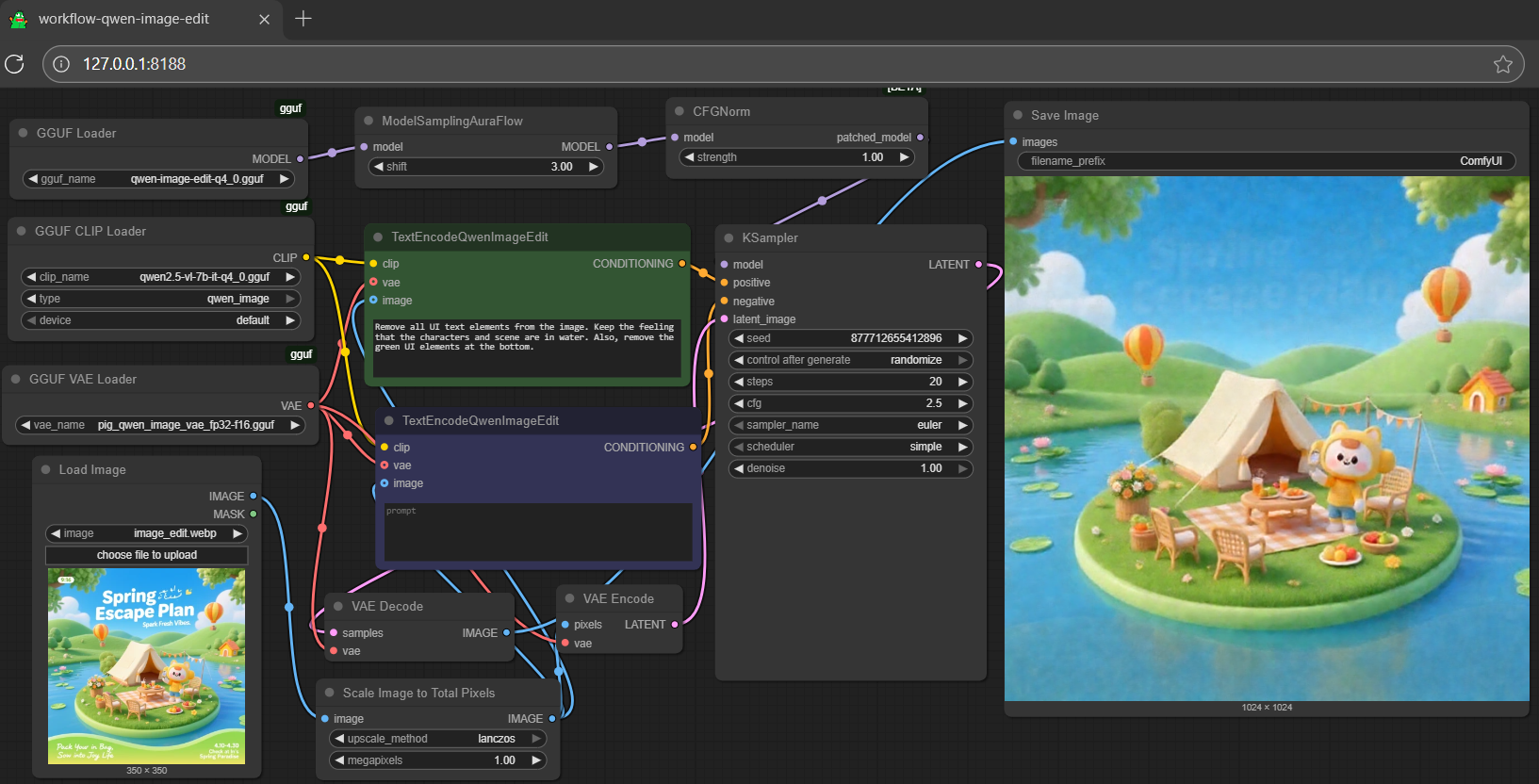

## **run it with gguf-node via comfyui**

|

| 44 |

- drag **qwen-image-edit** to > `./ComfyUI/models/diffusion_models`

|

| 45 |

- either 1 or 2 below, drag it to > `./ComfyUI/models/text_encoders`

|

| 46 |

-

- option 1: just **qwen2.5-vl-7b-edit** [[7.95GB](https://huggingface.co/calcuis/pig-encoder/blob/main/qwen_2.5_vl_7b_edit-q2_k.gguf)] (recommended)

|

| 47 |

- option 2: both **qwen2.5-vl-7b** [[4.43GB](https://huggingface.co/chatpig/qwen2.5-vl-7b-it-gguf/blob/main/qwen2.5-vl-7b-it-q4_0.gguf)] and **mmproj-clip** [[1.35GB](https://huggingface.co/chatpig/qwen2.5-vl-7b-it-gguf/blob/main/mmproj-qwen2.5-vl-7b-it-f16.gguf)]

|

| 48 |

- drag **pig** [[254MB](https://huggingface.co/calcuis/pig-vae/blob/main/pig_qwen_image_vae_fp32-f16.gguf)] to > `./ComfyUI/models/vae`

|

| 49 |

|

| 50 |

|

| 51 |

|

| 52 |

-

note: option 1 (pig quant) is an all-in-one choice; for option 2 (llama.cpp quant), you need to prepare both text-model and mmproj-clip

|

| 53 |

|

| 54 |

<Gallery />

|

| 55 |

|

|

@@ -57,7 +57,6 @@ note: option 1 (pig quant) is an all-in-one choice; for option 2 (llama.cpp quan

|

|

| 57 |

|

| 58 |

- get more gguf encoder either [here](https://huggingface.co/calcuis/pig-encoder/tree/main) (pig quant) or [here](https://huggingface.co/chatpig/qwen2.5-vl-7b-it-gguf/tree/main) (llama.cpp quant)

|

| 59 |

|

| 60 |

-

|

| 61 |

### **reference**

|

| 62 |

- base model from [qwen](https://huggingface.co/Qwen)

|

| 63 |

- comfyui from [comfyanonymous](https://github.com/comfyanonymous/ComfyUI)

|

|

|

|

| 21 |

url: workflow-demo3.png

|

| 22 |

---

|

| 23 |

## **qwen-image-edit-gguf**

|

| 24 |

+

- use 8-step (lite-lora auto applied); save up to 70% loading time

|

| 25 |

- run it with `gguf-connector`; simply execute the command below in console/terminal

|

| 26 |

```

|

| 27 |

ggc q6

|

|

|

|

| 36 |

>

|

| 37 |

>Enter your choice (1 to 4): _

|

| 38 |

>

|

| 39 |

+

- opt a `gguf` file in your current directory to interact with; nothing else

|

| 40 |

|

| 41 |

|

| 42 |

|

| 43 |

## **run it with gguf-node via comfyui**

|

| 44 |

- drag **qwen-image-edit** to > `./ComfyUI/models/diffusion_models`

|

| 45 |

- either 1 or 2 below, drag it to > `./ComfyUI/models/text_encoders`

|

| 46 |

+

- option 1: just **qwen2.5-vl-7b-edit** [[7.95GB](https://huggingface.co/calcuis/pig-encoder/blob/main/qwen_2.5_vl_7b_edit-q2_k.gguf)] (recommended*)

|

| 47 |

- option 2: both **qwen2.5-vl-7b** [[4.43GB](https://huggingface.co/chatpig/qwen2.5-vl-7b-it-gguf/blob/main/qwen2.5-vl-7b-it-q4_0.gguf)] and **mmproj-clip** [[1.35GB](https://huggingface.co/chatpig/qwen2.5-vl-7b-it-gguf/blob/main/mmproj-qwen2.5-vl-7b-it-f16.gguf)]

|

| 48 |

- drag **pig** [[254MB](https://huggingface.co/calcuis/pig-vae/blob/main/pig_qwen_image_vae_fp32-f16.gguf)] to > `./ComfyUI/models/vae`

|

| 49 |

|

| 50 |

|

| 51 |

|

| 52 |

+

*note: option 1 (pig quant) is an all-in-one choice; for option 2 (llama.cpp quant), you need to prepare both text-model and mmproj-clip

|

| 53 |

|

| 54 |

<Gallery />

|

| 55 |

|

|

|

|

| 57 |

|

| 58 |

- get more gguf encoder either [here](https://huggingface.co/calcuis/pig-encoder/tree/main) (pig quant) or [here](https://huggingface.co/chatpig/qwen2.5-vl-7b-it-gguf/tree/main) (llama.cpp quant)

|

| 59 |

|

|

|

|

| 60 |

### **reference**

|

| 61 |

- base model from [qwen](https://huggingface.co/Qwen)

|

| 62 |

- comfyui from [comfyanonymous](https://github.com/comfyanonymous/ComfyUI)

|
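The comfyui steps in the README above come down to putting each downloaded `gguf` file into the matching folder under `./ComfyUI/models`. Below is a minimal sketch of that mapping, not part of this commit or of `gguf-node`. The helper names `plan_placement` and `place_files` are made up for illustration, and the diffusion model file name is a hypothetical placeholder, since the README does not name that file. The other file names are taken from the download links in the README.

```python
from pathlib import Path
import shutil

# Folder layout taken from the README steps; the keys are illustrative labels.
MODEL_DIRS = {
    "diffusion": "models/diffusion_models",
    "text_encoder": "models/text_encoders",
    "vae": "models/vae",
}


def plan_placement(comfy_root, files):
    """Map each (source_path, kind) pair to its destination under comfy_root."""
    root = Path(comfy_root)
    plan = {}
    for src, kind in files:
        src = Path(src)
        plan[src] = root / MODEL_DIRS[kind] / src.name
    return plan


def place_files(plan):
    """Move every source file to its planned destination, creating folders as needed."""
    for src, dst in plan.items():
        dst.parent.mkdir(parents=True, exist_ok=True)
        shutil.move(str(src), str(dst))


# Option 1 from the README: a single all-in-one text encoder file.
downloads = [
    ("qwen-image-edit-PLACEHOLDER.gguf", "diffusion"),  # hypothetical file name
    ("qwen_2.5_vl_7b_edit-q2_k.gguf", "text_encoder"),
    ("pig_qwen_image_vae_fp32-f16.gguf", "vae"),
]

plan = plan_placement("./ComfyUI", downloads)
for src, dst in plan.items():
    print(f"{src} -> {dst}")
# Call place_files(plan) only after checking the printed plan.
```

For option 2, replace the single text encoder entry with two `text_encoder` entries, `qwen2.5-vl-7b-it-q4_0.gguf` and `mmproj-qwen2.5-vl-7b-it-f16.gguf`, since the README places both in `./ComfyUI/models/text_encoders`.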