Qwen-Image Image Structure Control Model

Model Introduction

This model is a LoRA for image structure control. It is trained on top of Qwen-Image using an In-Context approach and supports multiple control conditions: canny, depth, lineart, softedge, normal, and openpose. The training framework is built on DiffSynth-Studio, and the training dataset is Qwen-Image-Self-Generated-Dataset. We recommend starting the input prompt with "Context_Control. ".

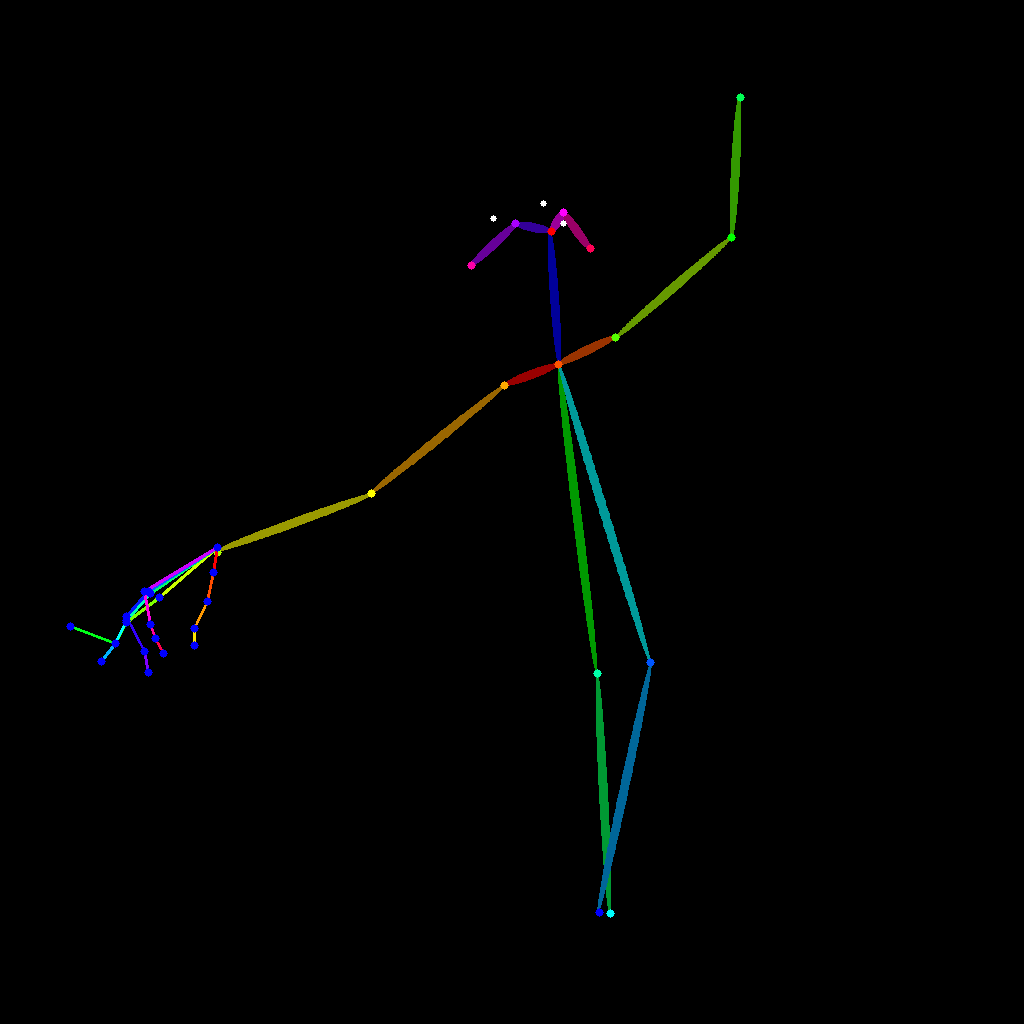

Please note that Openpose control, because of the nature of pose conditions (a keypoint skeleton rather than dense structure), cannot achieve the same "point-to-point" control as the other control types.
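The prompt convention and the list of supported conditions can be captured in a small helper. The following is a minimal sketch; `build_control_prompt`, `check_condition`, and the two constants are hypothetical names, not part of DiffSynth-Studio. It only prepends the recommended "Context_Control. " prefix when it is missing and rejects condition names outside the six listed for this LoRA.

```python
# Conditions listed in this model card. "openpose" is supported but gives
# looser, non point-to-point control than the others.
SUPPORTED_CONDITIONS = ("canny", "depth", "lineart", "softedge", "normal", "openpose")

# Prefix the model card recommends at the start of every prompt.
CONTROL_PREFIX = "Context_Control. "


def build_control_prompt(description: str) -> str:
    """Prepend the recommended trigger prefix unless it is already present."""
    text = description.strip()
    if text.startswith(CONTROL_PREFIX.strip()):
        return text
    return CONTROL_PREFIX + text


def check_condition(condition: str) -> str:
    """Normalize a condition name and reject ones this LoRA was not trained on."""
    name = condition.strip().lower()
    if name not in SUPPORTED_CONDITIONS:
        raise ValueError(
            f"unsupported condition {condition!r}; expected one of {SUPPORTED_CONDITIONS}"
        )
    return name


print(build_control_prompt("A girl dancing under a starry sky."))
# Context_Control. A girl dancing under a starry sky.
print(build_control_prompt("Context_Control. A girl dancing."))
# Context_Control. A girl dancing.
```

Making the prefix check idempotent means prompts that already follow the convention pass through unchanged.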

Effect Demonstration

[Demonstration table: for each control condition (canny, depth, lineart, softedge, normal, openpose), a control image followed by two generated images.]

Inference Code

```shell
git clone https://github.com/modelscope/DiffSynth-Studio.git
cd DiffSynth-Studio
pip install -e .
```

```python
from PIL import Image
import torch
from modelscope import dataset_snapshot_download, snapshot_download
from diffsynth.pipelines.qwen_image import QwenImagePipeline, ModelConfig
from diffsynth.controlnets.processors import Annotator

# Download the pretrained annotator (preprocessor) checkpoints into models/Annotators.
allow_file_pattern = ["sk_model.pth", "sk_model2.pth", "dpt_hybrid-midas-501f0c75.pt", "ControlNetHED.pth", "body_pose_model.pth", "hand_pose_model.pth", "facenet.pth", "scannet.pt"]
snapshot_download("lllyasviel/Annotators", local_dir="models/Annotators", allow_file_pattern=allow_file_pattern)

# Load the Qwen-Image base model (DiT, text encoder, VAE, tokenizer) in bfloat16 on the GPU.
pipe = QwenImagePipeline.from_pretrained(
    torch_dtype=torch.bfloat16,
    device="cuda",
    model_configs=[
        ModelConfig(model_id="Qwen/Qwen-Image", origin_file_pattern="transformer/diffusion_pytorch_model*.safetensors"),
        ModelConfig(model_id="Qwen/Qwen-Image", origin_file_pattern="text_encoder/model*.safetensors"),
        ModelConfig(model_id="Qwen/Qwen-Image", origin_file_pattern="vae/diffusion_pytorch_model.safetensors"),
    ],
    tokenizer_config=ModelConfig(model_id="Qwen/Qwen-Image", origin_file_pattern="tokenizer/"),
)

# Download the In-Context Control LoRA and attach it to the DiT.
snapshot_download("DiffSynth-Studio/Qwen-Image-In-Context-Control-Union", local_dir="models/DiffSynth-Studio/Qwen-Image-In-Context-Control-Union", allow_file_pattern="model.safetensors")
pipe.load_lora(pipe.dit, "models/DiffSynth-Studio/Qwen-Image-In-Context-Control-Union/model.safetensors")

# Download an example input image and resize it to the generation resolution.
dataset_snapshot_download(dataset_id="DiffSynth-Studio/examples_in_diffsynth", local_dir="./", allow_file_pattern="data/examples/qwen-image-context-control/image.jpg")
origin_image = Image.open("data/examples/qwen-image-context-control/image.jpg").resize((1024, 1024))

# Start the prompt with the recommended "Context_Control. " prefix.
control_prompt = "Context_Control. "
prompt = f"{control_prompt}A beautiful girl in light blue is dancing against a dreamy starry sky with interweaving light and shadow and exquisite details."
negative_prompt = "Mesh, regular grid, blurry, low resolution, low quality, distorted, deformed, wrong anatomy, distorted hands, distorted body, distorted face, distorted hair, distorted eyes, distorted mouth"

annotator_ids = ['openpose', 'canny', 'depth', 'lineart', 'softedge', 'normal']
for annotator_id in annotator_ids:
    # Extract the control image for this condition and save it for inspection.
    annotator = Annotator(processor_id=annotator_id, device="cuda")
    control_image = annotator(origin_image)
    control_image.save(f"{annotator.processor_id}.png")

    # Generate an image conditioned on the control image, passed as context_image.
    image = pipe(prompt, seed=1, negative_prompt=negative_prompt, context_image=control_image, height=1024, width=1024)
    image.save(f"image_{annotator.processor_id}.png")
```
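The script above downloads every annotator checkpoint even if you only need a few conditions. The sketch below computes a smaller `allow_file_pattern` for a chosen subset. The condition-to-checkpoint mapping is an assumption based on the default filenames used by the controlnet_aux detectors (Midas, HED, Lineart, NormalBae, Openpose, Canny); this model card does not document it, so check it against your installed versions. `checkpoints_for` and `ANNOTATOR_CHECKPOINTS` are hypothetical names.

```python
# ASSUMPTION: mapping inferred from controlnet_aux's default checkpoint
# filenames; verify against your installed versions before relying on it.
ANNOTATOR_CHECKPOINTS = {
    "canny": [],  # OpenCV-based edge detector, no learned weights
    "depth": ["dpt_hybrid-midas-501f0c75.pt"],
    "lineart": ["sk_model.pth", "sk_model2.pth"],
    "softedge": ["ControlNetHED.pth"],
    "normal": ["scannet.pt"],
    "openpose": ["body_pose_model.pth", "hand_pose_model.pth", "facenet.pth"],
}


def checkpoints_for(annotator_ids):
    """Return the de-duplicated checkpoint filenames needed for the given conditions."""
    patterns = []
    for annotator_id in annotator_ids:
        for name in ANNOTATOR_CHECKPOINTS[annotator_id]:
            if name not in patterns:
                patterns.append(name)
    return patterns


print(checkpoints_for(["canny", "depth"]))
# ['dpt_hybrid-midas-501f0c75.pt']
```

The returned list can be passed as `allow_file_pattern` to the `snapshot_download` call for `lllyasviel/Annotators`. For canny alone the list is empty; in that case the download step can likely be skipped, since the Canny detector relies on OpenCV rather than learned weights.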

license: apache-2.0


Base model: Qwen/Qwen-Image (model tree for SahilCarterr/Qwen-Image-In-Context-Control-Union)