Improve model card: Add tags, update paper link, add GitHub link, and expand content

#31

by

nielsr

HF Staff

- opened

README.md

CHANGED

|

@@ -1,16 +1,21 @@

|

|

| 1 |

---

|

| 2 |

-

license: apache-2.0

|

| 3 |

language:

|

| 4 |

- en

|

| 5 |

- zh

|

| 6 |

library_name: diffusers

|

|

|

|

| 7 |

pipeline_tag: text-to-image

|

|

|

|

|

|

|

|

|

|

|

|

|

| 8 |

---

|

|

|

|

| 9 |

<p align="center">

|

| 10 |

<img src="https://qianwen-res.oss-cn-beijing.aliyuncs.com/Qwen-Image/qwen_image_logo.png" width="400"/>

|

| 11 |

<p>

|

| 12 |

-

<p align="center">

|

| 13 |

-

|

| 14 |

<br>

|

| 15 |

🖥️ <a href="https://huggingface.co/spaces/Qwen/qwen-image">Demo</a>   |   💬 <a href="https://github.com/QwenLM/Qwen-Image/blob/main/assets/wechat.png">WeChat (微信)</a>   |   🫨 <a href="https://discord.gg/CV4E9rpNSD">Discord</a>

|

| 16 |

</p>

|

|

@@ -25,14 +30,22 @@ We are thrilled to release **Qwen-Image**, an image generation foundation model

|

|

| 25 |

|

| 26 |

|

| 27 |

## News

|

| 28 |

-

- 2025.08.

|

| 29 |

-

- 2025.08.

|

| 30 |

-

- 2025.08.

|

|

|

|

|

|

|

| 31 |

|

|

|

|

|

|

|

|

|

|

|

|

|

| 32 |

|

| 33 |

## Quick Start

|

| 34 |

|

| 35 |

-

|

|

|

|

|

|

|

| 36 |

```

|

| 37 |

pip install git+https://github.com/huggingface/diffusers

|

| 38 |

```

|

|

@@ -56,15 +69,15 @@ else:

|

|

| 56 |

pipe = DiffusionPipeline.from_pretrained(model_name, torch_dtype=torch_dtype)

|

| 57 |

pipe = pipe.to(device)

|

| 58 |

|

| 59 |

-

positive_magic =

|

| 60 |

-

"en": "Ultra HD, 4K, cinematic composition." # for english prompt

|

| 61 |

-

"zh": "超清,4K,电影级构图" # for chinese prompt

|

| 62 |

-

|

| 63 |

|

| 64 |

# Generate image

|

| 65 |

-

prompt = '''A coffee shop entrance features a chalkboard sign reading "Qwen Coffee 😊 $2 per cup," with a neon light beside it displaying "通义千问". Next to it hangs a poster showing a beautiful Chinese woman, and beneath the poster is written "π≈3.1415926-53589793-23846264-33832795-02384197".

|

| 66 |

|

| 67 |

-

negative_prompt = " "

|

| 68 |

|

| 69 |

|

| 70 |

# Generate with different aspect ratios

|

|

@@ -72,8 +85,10 @@ aspect_ratios = {

|

|

| 72 |

"1:1": (1328, 1328),

|

| 73 |

"16:9": (1664, 928),

|

| 74 |

"9:16": (928, 1664),

|

| 75 |

-

"4:3": (1472,

|

| 76 |

-

"3:4": (

|

|

|

|

|

|

|

| 77 |

}

|

| 78 |

|

| 79 |

width, height = aspect_ratios["16:9"]

|

|

@@ -93,7 +108,7 @@ image.save("example.png")

|

|

| 93 |

|

| 94 |

## Show Cases

|

| 95 |

|

| 96 |

-

One of its standout capabilities is high-fidelity text rendering across diverse images. Whether it

|

| 97 |

|

| 98 |

|

| 99 |

|

|

@@ -105,12 +120,90 @@ When it comes to image editing, Qwen-Image goes far beyond simple adjustments. I

|

|

| 105 |

|

| 106 |

|

| 107 |

|

| 108 |

-

But Qwen-Image doesn

|

| 109 |

|

| 110 |

|

| 111 |

|

| 112 |

Together, these features make Qwen-Image not just a tool for generating pretty pictures, but a comprehensive foundation model for intelligent visual creation and manipulation—where language, layout, and imagery converge.

|

| 113 |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 114 |

|

| 115 |

## License Agreement

|

| 116 |

|

|

@@ -121,10 +214,23 @@ Qwen-Image is licensed under Apache 2.0.

|

|

| 121 |

We kindly encourage citation of our work if you find it useful.

|

| 122 |

|

| 123 |

```bibtex

|

| 124 |

-

@

|

| 125 |

-

|

| 126 |

-

|

| 127 |

-

|

| 128 |

-

|

|

|

|

|

|

|

|

|

|

| 129 |

}

|

| 130 |

-

```

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

---

|

|

|

|

| 2 |

language:

|

| 3 |

- en

|

| 4 |

- zh

|

| 5 |

library_name: diffusers

|

| 6 |

+

license: apache-2.0

|

| 7 |

pipeline_tag: text-to-image

|

| 8 |

+

tags:

|

| 9 |

+

- qwen-image

|

| 10 |

+

- image-editing

|

| 11 |

+

- text-rendering

|

| 12 |

---

|

| 13 |

+

|

| 14 |

<p align="center">

|

| 15 |

<img src="https://qianwen-res.oss-cn-beijing.aliyuncs.com/Qwen-Image/qwen_image_logo.png" width="400"/>

|

| 16 |

<p>

|

| 17 |

+

<p align="center">  💜 <a href="https://chat.qwen.ai/"><b>Qwen Chat</b></a>   |

|

| 18 |

+

🤗 <a href="https://huggingface.co/Qwen/Qwen-Image">Hugging Face</a>   |   🤖 <a href="https://modelscope.cn/models/Qwen/Qwen-Image">ModelScope</a>   |   📑 <a href="https://huggingface.co/papers/2508.02324">Tech Report</a>    |    📑 <a href="https://qwenlm.github.io/blog/qwen-image/">Blog</a>    |    💻 <a href="https://github.com/QwenLM/Qwen-Image">GitHub</a>

|

| 19 |

<br>

|

| 20 |

🖥️ <a href="https://huggingface.co/spaces/Qwen/qwen-image">Demo</a>   |   💬 <a href="https://github.com/QwenLM/Qwen-Image/blob/main/assets/wechat.png">WeChat (微信)</a>   |   🫨 <a href="https://discord.gg/CV4E9rpNSD">Discord</a>

|

| 21 |

</p>

|

|

|

|

| 30 |

|

| 31 |

|

| 32 |

## News

|

| 33 |

+

- 2025.08.05: Qwen-Image is now natively supported in ComfyUI, see [Qwen-Image in ComfyUI: New Era of Text Generation in Images!](https://blog.comfy.org/p/qwen-image-in-comfyui-new-era-of)

|

| 34 |

+

- 2025.08.05: Qwen-Image is now on Qwen Chat. Click [Qwen Chat](https://chat.qwen.ai/) and choose "Image Generation".

|

| 35 |

+

- 2025.08.05: We released our [Technical Report](https://arxiv.org/abs/2508.02324) on Arxiv!

|

| 36 |

+

- 2025.08.04: We released Qwen-Image weights! Check at [Huggingface](https://huggingface.co/Qwen/Qwen-Image) and [Modelscope](https://modelscope.cn/models/Qwen/Qwen-Image)!

|

| 37 |

+

- 2025.08.04: We released Qwen-Image! Check our [Blog](https://qwenlm.github.io/blog/qwen-image) for more details!

|

| 38 |

|

| 39 |

+

> [!NOTE]

|

| 40 |

+

> The editing version of Qwen-Image will be released soon. Stay tuned!

|

| 41 |

+

>

|

| 42 |

+

> Due to heavy traffic, if you'd like to experience our demo online, we also recommend visiting DashScope, WaveSpeed, and LibLib. Please find the links below in the community support.

|

| 43 |

|

| 44 |

## Quick Start

|

| 45 |

|

| 46 |

+

1. Make sure your transformers>=4.51.3 (Supporting Qwen2.5-VL)

|

| 47 |

+

|

| 48 |

+

2. Install the latest version of diffusers

|

| 49 |

```

|

| 50 |

pip install git+https://github.com/huggingface/diffusers

|

| 51 |

```

|

|

|

|

| 69 |

pipe = DiffusionPipeline.from_pretrained(model_name, torch_dtype=torch_dtype)

|

| 70 |

pipe = pipe.to(device)

|

| 71 |

|

| 72 |

+

positive_magic = {

|

| 73 |

+

"en": "Ultra HD, 4K, cinematic composition.", # for english prompt

|

| 74 |

+

"zh": "超清,4K,电影级构图" # for chinese prompt

|

| 75 |

+

}

|

| 76 |

|

| 77 |

# Generate image

|

| 78 |

+

prompt = '''A coffee shop entrance features a chalkboard sign reading "Qwen Coffee 😊 $2 per cup," with a neon light beside it displaying "通义千问". Next to it hangs a poster showing a beautiful Chinese woman, and beneath the poster is written "π≈3.1415926-53589793-23846264-33832795-02384197".'''

|

| 79 |

|

| 80 |

+

negative_prompt = " " # Recommended if you don't use a negative prompt.

|

| 81 |

|

| 82 |

|

| 83 |

# Generate with different aspect ratios

|

|

|

|

| 85 |

"1:1": (1328, 1328),

|

| 86 |

"16:9": (1664, 928),

|

| 87 |

"9:16": (928, 1664),

|

| 88 |

+

"4:3": (1472, 1104),

|

| 89 |

+

"3:4": (1104, 1472),

|

| 90 |

+

"3:2": (1584, 1056),

|

| 91 |

+

"2:3": (1056, 1584),

|

| 92 |

}

|

| 93 |

|

| 94 |

width, height = aspect_ratios["16:9"]

|

|

|

|

| 108 |

|

| 109 |

## Show Cases

|

| 110 |

|

| 111 |

+

One of its standout capabilities is high-fidelity text rendering across diverse images. Whether it's alphabetic languages like English or logographic scripts like Chinese, Qwen-Image preserves typographic details, layout coherence, and contextual harmony with stunning accuracy. Text isn't just overlaid, it's seamlessly integrated into the visual fabric.

|

| 112 |

|

| 113 |

|

| 114 |

|

|

|

|

| 120 |

|

| 121 |

|

| 122 |

|

| 123 |

+

But Qwen-Image doesn't just create or edit, it understands. It supports a suite of image understanding tasks, including object detection, semantic segmentation, depth and edge (Canny) estimation, novel view synthesis, and super-resolution. These capabilities, while technically distinct, can all be seen as specialized forms of intelligent image editing, powered by deep visual comprehension.

|

| 124 |

|

| 125 |

|

| 126 |

|

| 127 |

Together, these features make Qwen-Image not just a tool for generating pretty pictures, but a comprehensive foundation model for intelligent visual creation and manipulation—where language, layout, and imagery converge.

|

| 128 |

|

| 129 |

+

### Advanced Usage

|

| 130 |

+

|

| 131 |

+

#### Prompt Enhance

|

| 132 |

+

For enhanced prompt optimization and multi-language support, we recommend using our official Prompt Enhancement Tool powered by Qwen-Plus .

|

| 133 |

+

|

| 134 |

+

You can integrate it directly into your code:

|

| 135 |

+

```python

|

| 136 |

+

from tools.prompt_utils import rewrite

|

| 137 |

+

prompt = rewrite(prompt)

|

| 138 |

+

```

|

| 139 |

+

|

| 140 |

+

Alternatively, run the example script from the command line:

|

| 141 |

+

|

| 142 |

+

```bash

|

| 143 |

+

cd src

|

| 144 |

+

DASHSCOPE_API_KEY=sk-xxxxxxxxxxxxxxxxxxxx python examples/generate_w_prompt_enhance.py

|

| 145 |

+

```

|

| 146 |

+

|

| 147 |

+

|

| 148 |

+

## Deploy Qwen-Image

|

| 149 |

+

|

| 150 |

+

Qwen-Image supports Multi-GPU API Server for local deployment:

|

| 151 |

+

|

| 152 |

+

### Multi-GPU API Server Pipeline & Usage

|

| 153 |

+

|

| 154 |

+

The Multi-GPU API Server will start a Gradio-based web interface with:

|

| 155 |

+

- Multi-GPU parallel processing

|

| 156 |

+

- Queue management for high concurrency

|

| 157 |

+

- Automatic prompt optimization

|

| 158 |

+

- Support for multiple aspect ratios

|

| 159 |

+

|

| 160 |

+

Configuration via environment variables:

|

| 161 |

+

```bash

|

| 162 |

+

export NUM_GPUS_TO_USE=4 # Number of GPUs to use

|

| 163 |

+

export TASK_QUEUE_SIZE=100 # Task queue size

|

| 164 |

+

export TASK_TIMEOUT=300 # Task timeout in seconds

|

| 165 |

+

```

|

| 166 |

+

|

| 167 |

+

```bash

|

| 168 |

+

# Start the gradio demo server, api key for prompt enhance

|

| 169 |

+

cd src

|

| 170 |

+

DASHSCOPE_API_KEY=sk-xxxxxxxxxxxxxxxxx python examples/demo.py

|

| 171 |

+

```

|

| 172 |

+

|

| 173 |

+

|

| 174 |

+

## AI Arena

|

| 175 |

+

|

| 176 |

+

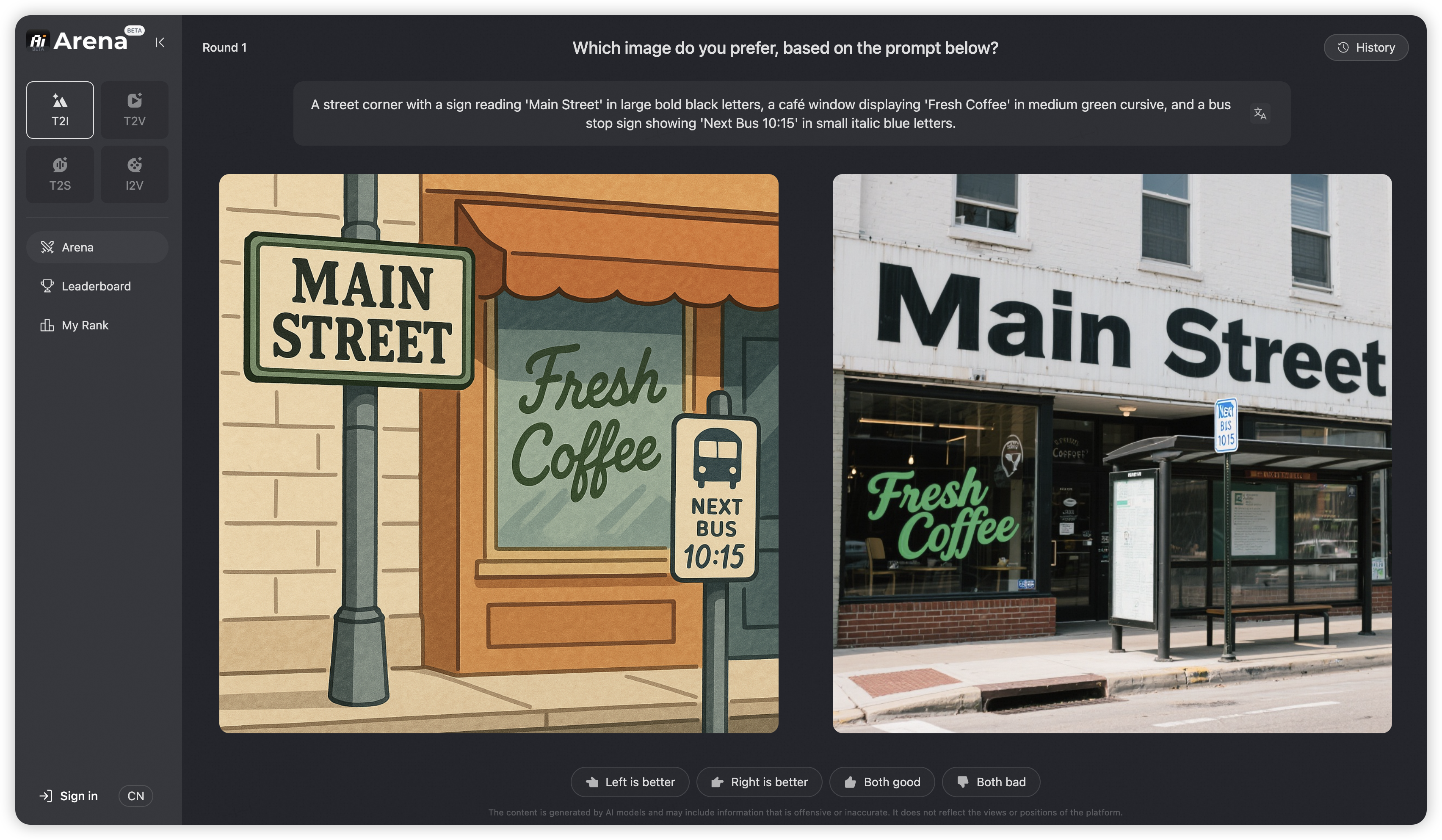

To comprehensively evaluate the general image generation capabilities of Qwen-Image and objectively compare it with state-of-the-art closed-source APIs, we introduce [AI Arena](https://aiarena.alibaba-inc.com), an open benchmarking platform built on the Elo rating system. AI Arena provides a fair, transparent, and dynamic environment for model evaluation.

|

| 177 |

+

|

| 178 |

+

In each round, two images—generated by randomly selected models from the same prompt—are anonymously presented to users for pairwise comparison. Users vote for the better image, and the results are used to update both personal and global leaderboards via the Elo algorithm, enabling developers, researchers, and the public to assess model performance in a robust and data-driven way. AI Arena is now publicly available, welcoming everyone to participate in model evaluations.

|

| 179 |

+

|

| 180 |

+

|

| 181 |

+

|

| 182 |

+

The latest leaderboard rankings can be viewed at [AI Arena Learboard](https://aiarena.alibaba-inc.com/corpora/arena/leaderboard?arenaType=text2image)

|

| 183 |

+

|

| 184 |

+

If you wish to deploy your model on AI Arena and participate in the evaluation, please contact [email protected].

|

| 185 |

+

|

| 186 |

+

## Community Support

|

| 187 |

+

|

| 188 |

+

### Huggingface

|

| 189 |

+

|

| 190 |

+

Diffusers has supported Qwen-Image since day 0. Support for LoRA and finetuning workflows is currently in development and will be available soon.

|

| 191 |

+

|

| 192 |

+

### Modelscope

|

| 193 |

+

* **[DiffSynth-Studio](https://github.com/modelscope/DiffSynth-Studio)** provides comprehensive support for Qwen-Image, including low-GPU-memory layer-by-layer offload (inference within 4GB VRAM), FP8 quantization, LoRA / full training.

|

| 194 |

+

* **[DiffSynth-Engine](https://github.com/modelscope/DiffSynth-Engine)** delivers advanced optimizations for Qwen-Image inference and deployment, including FBCache-based acceleration, classifier-free guidance (CFG) parallel, and more.

|

| 195 |

+

* **[ModelScope AIGC Central](https://www.modelscope.cn/aigc)** provides hands-on experiences on Qwen Image, including:

|

| 196 |

+

- [Image Generation](https://www.modelscope.cn/aigc/imageGeneration): Generate high fidelity images using the Qwen Image model.

|

| 197 |

+

- [LoRA Training](https://www.modelscope.cn/aigc/modelTraining): Easily train Qwen Image LoRAs for personalized concepts.

|

| 198 |

+

|

| 199 |

+

### WaveSpeedAI

|

| 200 |

+

|

| 201 |

+

WaveSpeed has deployed Qwen-Image on their platform from day 0, visit their [model page](https://wavespeed.ai/models/wavespeed-ai/qwen-image/text-to-image) for more details.

|

| 202 |

+

|

| 203 |

+

### LiblibAI

|

| 204 |

+

|

| 205 |

+

LiblibAI offers native support for Qwen-Image from day 0. Visit their [community](https://www.liblib.art/modelinfo/c62a103bd98a4246a2334e2d952f7b21?from=sd&versionUuid=75e0be0c93b34dd8baeec9c968013e0c) page for more details and discussions.

|

| 206 |

+

|

| 207 |

|

| 208 |

## License Agreement

|

| 209 |

|

|

|

|

| 214 |

We kindly encourage citation of our work if you find it useful.

|

| 215 |

|

| 216 |

```bibtex

|

| 217 |

+

@misc{wu2025qwenimagetechnicalreport,

|

| 218 |

+

title={Qwen-Image Technical Report},

|

| 219 |

+

author={Chenfei Wu and Jiahao Li and Jingren Zhou and Junyang Lin and Kaiyuan Gao and Kun Yan and Sheng-ming Yin and Shuai Bai and Xiao Xu and Yilei Chen and Yuxiang Chen and Zecheng Tang and Zekai Zhang and Zhengyi Wang and An Yang and Bowen Yu and Chen Cheng and Dayiheng Liu and Deqing Li and Hang Zhang and Hao Meng and Hu Wei and Jingyuan Ni and Kai Chen and Kuan Cao and Liang Peng and Lin Qu and Minggang Wu and Peng Wang and Shuting Yu and Tingkun Wen and Wensen Feng and Xiaoxiao Xu and Yi Wang and Yichang Zhang and Yongqiang Zhu and Yujia Wu and Yuxuan Cai and Zenan Liu},

|

| 220 |

+

year={2025},

|

| 221 |

+

eprint={2508.02324},

|

| 222 |

+

archivePrefix={arXiv},

|

| 223 |

+

primaryClass={cs.CV},

|

| 224 |

+

url={https://arxiv.org/abs/2508.02324},

|

| 225 |

}

|

| 226 |

+

```

|

| 227 |

+

|

| 228 |

+

|

| 229 |

+

## Contact and Join Us

|

| 230 |

+

|

| 231 |

+

|

| 232 |

+

If you'd like to get in touch with our research team, we'd love to hear from you! Join our [Discord](https://discord.gg/z3GAxXZ9Ce) or scan the QR code to connect via our [WeChat groups](https://github.com/QwenLM/Qwen-Image/blob/main/assets/wechat.png) — we're always open to discussion and collaboration.

|

| 233 |

+

|

| 234 |

+

If you have questions about this repository, feedback to share, or want to contribute directly, we welcome your issues and pull requests on GitHub. Your contributions help make Qwen-Image better for everyone.

|

| 235 |

+

|

| 236 |

+

If you're passionate about fundamental research, we're hiring full-time employees (FTEs) and research interns. Don't wait — reach out to us at [email protected]

|