---

license: apache-2.0

pipeline_tag: image-to-image

library_name: transformers

---

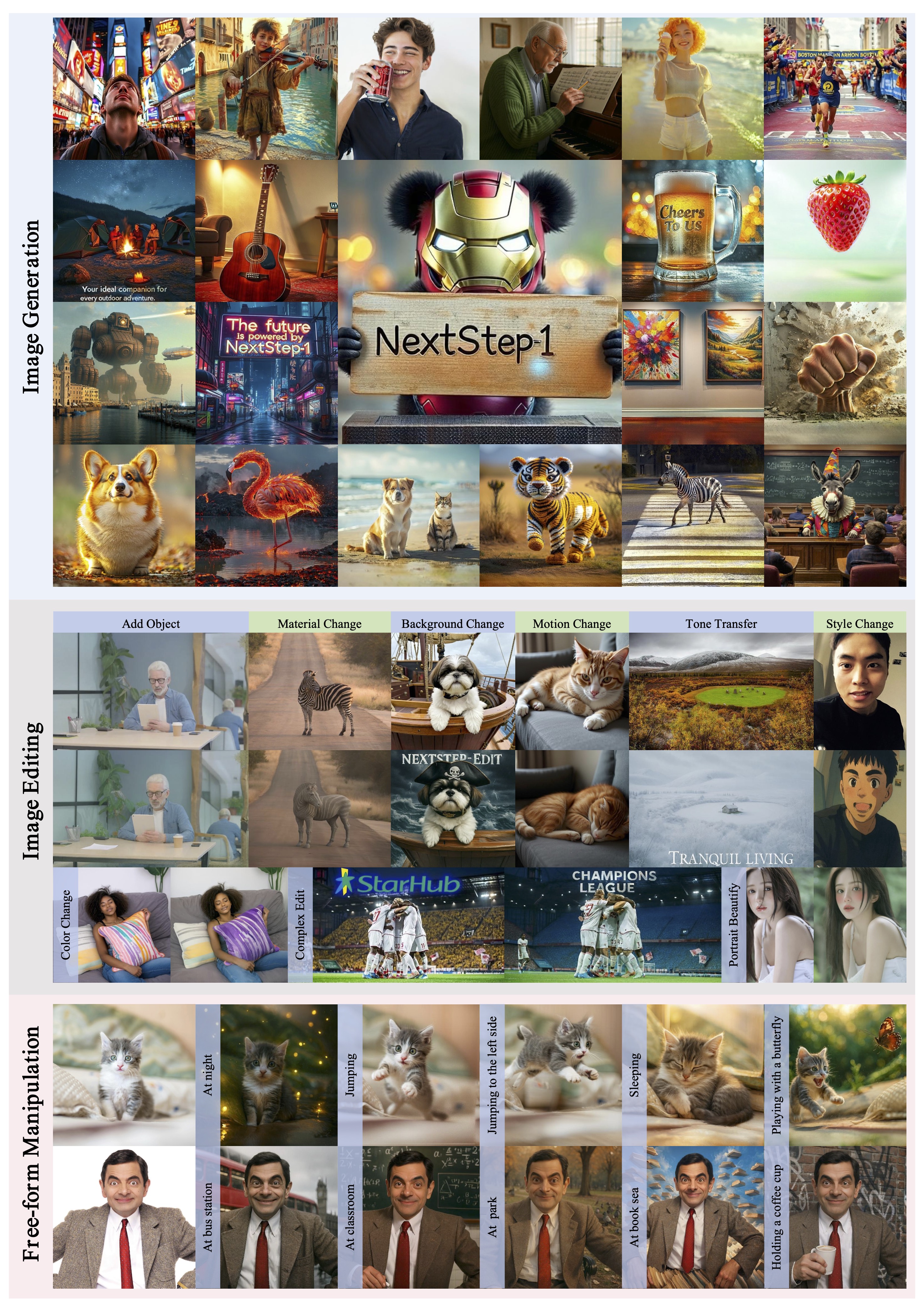

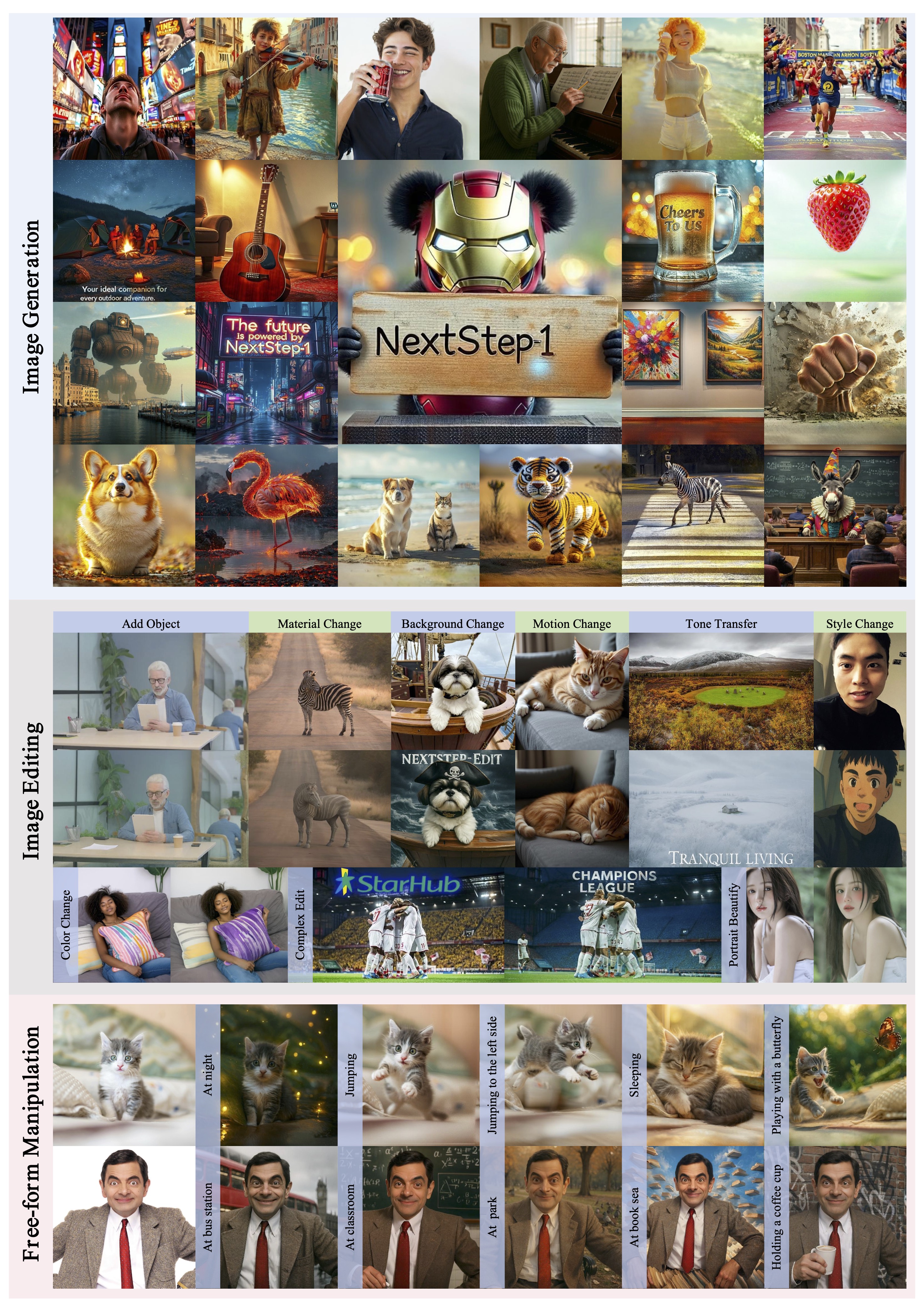

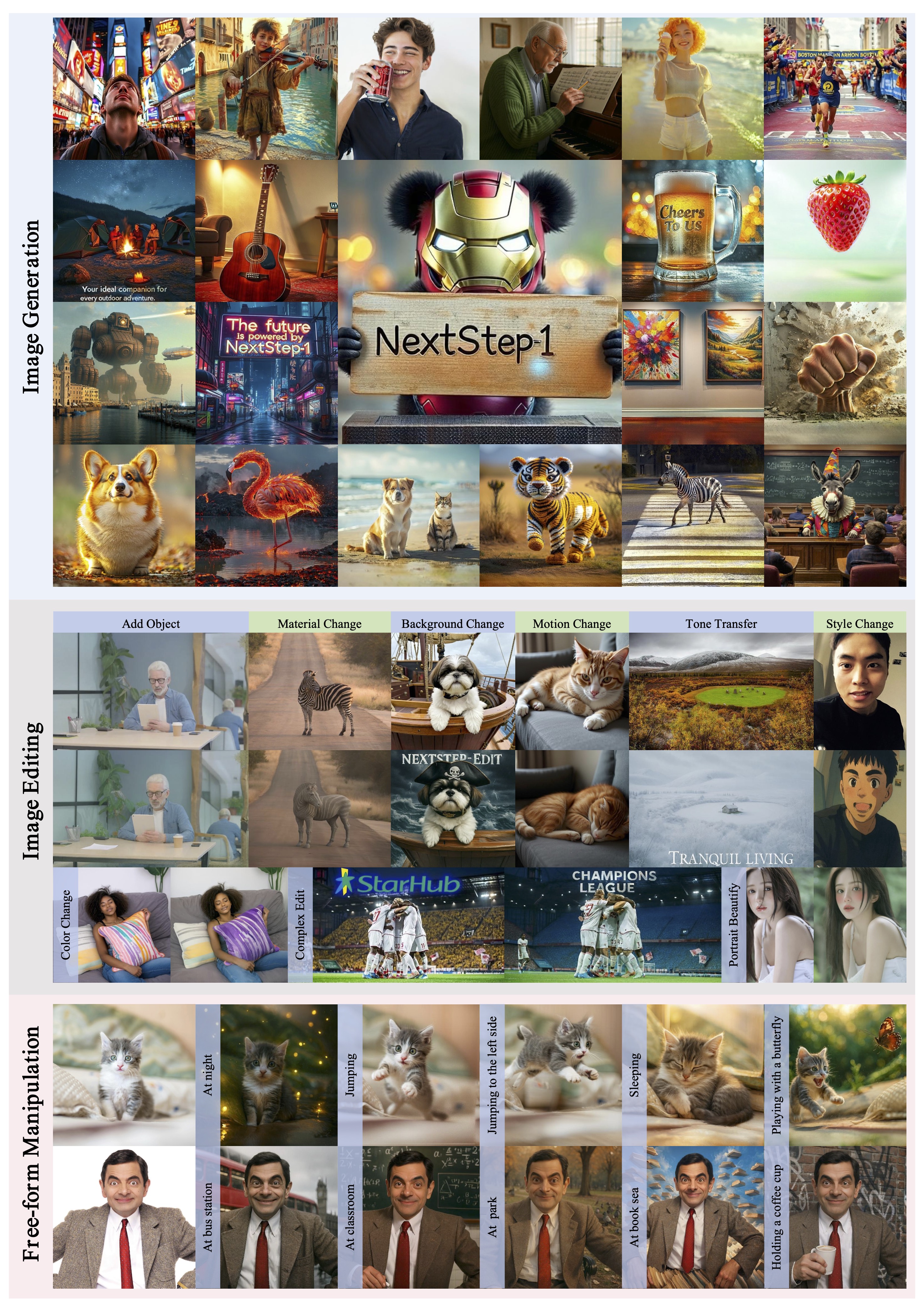

## NextStep-1: Toward Autoregressive Image Generation with Continuous Tokens at Scale

[Homepage](https://stepfun.ai/research/en/nextstep1)

| [GitHub](https://github.com/stepfun-ai/NextStep-1)

| [Paper](https://arxiv.org/abs/2508.10711)

We introduce **NextStep-1**, a 14B autoregressive model paired with a 157M flow matching head, training on discrete text tokens and continuous image tokens with next-token prediction objectives.

**NextStep-1** achieves state-of-the-art performance for autoregressive models in text-to-image generation tasks, exhibiting strong capabilities in high-fidelity image synthesis.

## Environment Setup

To avoid potential errors when loading and running your models, we recommend using the following settings:

```shell

conda create -n nextstep python=3.11 -y

conda activate nextstep

pip install uv # optional

GIT_LFS_SKIP_SMUDGE=1 git clone https://huggingface.co/stepfun-ai/NextStep-1-Large-Edit && cd NextStep-1-Large-Edit

uv pip install -r requirements.txt

hf download stepfun-ai/NextStep-1-Large-Edit "vae/checkpoint.pt" --local-dir ./

```

## Usage

```python

from PIL import Image

from transformers import AutoTokenizer, AutoModel

from models.gen_pipeline import NextStepPipeline

from utils.aspect_ratio import center_crop_arr_with_buckets

HF_HUB = "stepfun-ai/NextStep-1-Large-Edit"

# load model and tokenizer

tokenizer = AutoTokenizer.from_pretrained(HF_HUB, local_files_only=True, trust_remote_code=True,force_download=True)

model = AutoModel.from_pretrained(HF_HUB, local_files_only=True, trust_remote_code=True,force_download=True)

pipeline = NextStepPipeline(tokenizer=tokenizer, model=model).to(device=f"cuda")

# set prompts

positive_prompt = None

negative_prompt = "Copy original image."

example_prompt = "" + "Add a pirate hat to the dog's head. Change the background to a stormy sea with dark clouds. Include the text 'NextStep-Edit' in bold white letters at the top portion of the image."

# load and preprocess reference image

IMG_SIZE = 512

ref_image = Image.open("./assets/origin.jpg")

ref_image = center_crop_arr_with_buckets(ref_image, buckets=[IMG_SIZE])

# generate edited image

image = pipeline.generate_image(

example_prompt,

images=[ref_image],

hw=(IMG_SIZE, IMG_SIZE),

num_images_per_caption=1,

positive_prompt=positive_prompt,

negative_prompt=negative_prompt,

cfg=7.5,

cfg_img=2,

cfg_schedule="constant",

use_norm=True,

num_sampling_steps=50,

timesteps_shift=3.2,

seed=42,

)[0]

image.save(f"./assets/output.jpg")

```

## Citation

If you find NextStep useful for your research and applications, please consider starring this repository and citing:

```bibtex

@article{nextstepteam2025nextstep1,

title={NextStep-1: Toward Autoregressive Image Generation with Continuous Tokens at Scale},

author={NextStep Team and Chunrui Han and Guopeng Li and Jingwei Wu and Quan Sun and Yan Cai and Yuang Peng and Zheng Ge and Deyu Zhou and Haomiao Tang and Hongyu Zhou and Kenkun Liu and Ailin Huang and Bin Wang and Changxin Miao and Deshan Sun and En Yu and Fukun Yin and Gang Yu and Hao Nie and Haoran Lv and Hanpeng Hu and Jia Wang and Jian Zhou and Jianjian Sun and Kaijun Tan and Kang An and Kangheng Lin and Liang Zhao and Mei Chen and Peng Xing and Rui Wang and Shiyu Liu and Shutao Xia and Tianhao You and Wei Ji and Xianfang Zeng and Xin Han and Xuelin Zhang and Yana Wei and Yanming Xu and Yimin Jiang and Yingming Wang and Yu Zhou and Yucheng Han and Ziyang Meng and Binxing Jiao and Daxin Jiang and Xiangyu Zhang and Yibo Zhu},

journal={arXiv preprint arXiv:2508.10711},

year={2025}

}

```