
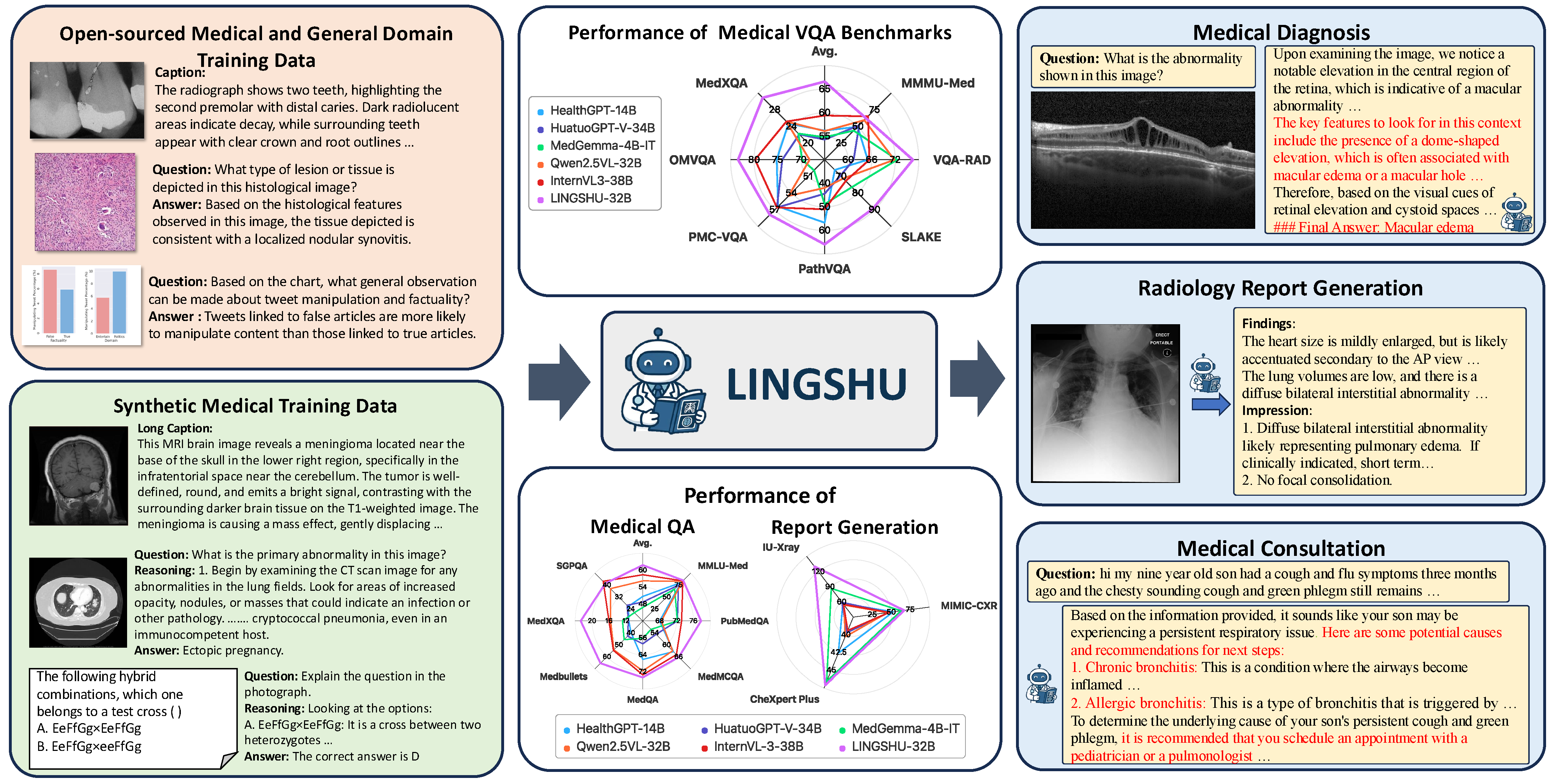

# *Lingshu* - SOTA Multimodal Large Language Models for Medical Domain

# BIG NEWS: Lingshu is released with state-of-the-art performance on medical VQA tasks and report generation.

This repository contains the model from the paper [Lingshu: A Generalist Foundation Model for Unified Multimodal Medical Understanding and Reasoning](https://huggingface.co/papers/2506.07044). We also release [MedEvalKit](https://github.com/alibaba-damo-academy/MedEvalKit), a comprehensive medical evaluation toolkit that supports fast evaluation of major multimodal and textual medical tasks.

| Models | MMMU-Med | VQA-RAD | SLAKE | PathVQA | PMC-VQA | OmniMedVQA | MedXpertQA | Avg. |
|---|---|---|---|---|---|---|---|---|
| Proprietary Models | | | | | | | | |
| GPT-4.1 | 75.2 | 65.0 | 72.2 | 55.5 | 55.2 | 75.5 | 45.2 | 63.4 |
| Claude Sonnet 4 | 74.6 | 67.6 | 70.6 | 54.2 | 54.4 | 65.5 | 43.3 | 61.5 |
| Gemini-2.5-Flash | 76.9 | 68.5 | 75.8 | 55.4 | 55.4 | 71.0 | 52.8 | 65.1 |
| Open-source Models (<10B) | | | | | | | | |
| BiomedGPT | 24.9 | 16.6 | 13.6 | 11.3 | 27.6 | 27.9 | - | - |
| Med-R1-2B | 34.8 | 39.0 | 54.5 | 15.3 | 47.4 | - | 21.1 | - |
| MedVLM-R1-2B | 35.2 | 48.6 | 56.0 | 32.5 | 47.6 | 77.7 | 20.4 | 45.4 |
| MedGemma-4B-IT | 43.7 | 72.5 | 76.4 | 48.8 | 49.9 | 69.8 | 22.3 | 54.8 |
| LLaVA-Med-7B | 29.3 | 53.7 | 48.0 | 38.8 | 30.5 | 44.3 | 20.3 | 37.8 |
| HuatuoGPT-V-7B | 47.3 | 67.0 | 67.8 | 48.0 | 53.3 | 74.2 | 21.6 | 54.2 |
| BioMediX2-8B | 39.8 | 49.2 | 57.7 | 37.0 | 43.5 | 63.3 | 21.8 | 44.6 |
| Qwen2.5VL-7B | 50.6 | 64.5 | 67.2 | 44.1 | 51.9 | 63.6 | 22.3 | 52.0 |
| InternVL2.5-8B | 53.5 | 59.4 | 69.0 | 42.1 | 51.3 | 81.3 | 21.7 | 54.0 |
| InternVL3-8B | 59.2 | 65.4 | 72.8 | 48.6 | 53.8 | 79.1 | 22.4 | 57.3 |
| Lingshu-7B | 54.0 | 67.9 | 83.1 | 61.9 | 56.3 | 82.9 | 26.7 | 61.8 |
| Open-source Models (>10B) | | | | | | | | |
| HealthGPT-14B | 49.6 | 65.0 | 66.1 | 56.7 | 56.4 | 75.2 | 24.7 | 56.2 |
| HuatuoGPT-V-34B | 51.8 | 61.4 | 69.5 | 44.4 | 56.6 | 74.0 | 22.1 | 54.3 |
| MedDr-40B | 49.3 | 65.2 | 66.4 | 53.5 | 13.9 | 64.3 | - | - |
| InternVL3-14B | 63.1 | 66.3 | 72.8 | 48.0 | 54.1 | 78.9 | 23.1 | 58.0 |
| Qwen2.5VL-32B | 59.6 | 71.8 | 71.2 | 41.9 | 54.5 | 68.2 | 25.2 | 56.1 |
| InternVL2.5-38B | 61.6 | 61.4 | 70.3 | 46.9 | 57.2 | 79.9 | 24.4 | 57.4 |
| InternVL3-38B | 65.2 | 65.4 | 72.7 | 51.0 | 56.6 | 79.8 | 25.2 | 59.4 |
| Lingshu-32B | 62.3 | 76.5 | 89.2 | 65.9 | 57.9 | 83.4 | 30.9 | 66.6 |
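
The `Avg.` column in the table above appears to be the unweighted mean of the seven benchmark scores, rounded to one decimal, with `-` reported whenever any benchmark is missing. That reading is an assumption checked against the listed rows, not something the README states. A minimal sketch of the arithmetic, using a hypothetical helper named `benchmark_average`:

```python
from typing import Optional, Sequence


def benchmark_average(scores: Sequence[Optional[float]]) -> Optional[float]:
    """Unweighted mean rounded to one decimal.

    Returns None when the row is empty or any score is missing,
    mirroring the "-" entries in the table (assumed convention).
    """
    if not scores or any(s is None for s in scores):
        return None
    return round(sum(scores) / len(scores), 1)


# Scores copied from the table, in column order:
# MMMU-Med, VQA-RAD, SLAKE, PathVQA, PMC-VQA, OmniMedVQA, MedXpertQA
lingshu_7b = [54.0, 67.9, 83.1, 61.9, 56.3, 82.9, 26.7]
lingshu_32b = [62.3, 76.5, 89.2, 65.9, 57.9, 83.4, 30.9]
med_r1_2b = [34.8, 39.0, 54.5, 15.3, 47.4, None, 21.1]  # OmniMedVQA is missing

print(benchmark_average(lingshu_7b))   # 61.8
print(benchmark_average(lingshu_32b))  # 66.6
print(benchmark_average(med_r1_2b))    # None
```

The two Lingshu results match the reported `Avg.` values (61.8 and 66.6), which is consistent with a plain mean rather than a weighted one.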

| Models | MMLU-Med | PubMedQA | MedMCQA | MedQA | Medbullets | MedXpertQA | SuperGPQA-Med | Avg. |
|---|---|---|---|---|---|---|---|---|
| Proprietary Models | | | | | | | | |
| GPT-4.1 | 89.6 | 75.6 | 77.7 | 89.1 | 77.0 | 30.9 | 49.9 | 70.0 |
| Claude Sonnet 4 | 91.3 | 78.6 | 79.3 | 92.1 | 80.2 | 33.6 | 56.3 | 73.1 |
| Gemini-2.5-Flash | 84.2 | 73.8 | 73.6 | 91.2 | 77.6 | 35.6 | 53.3 | 69.9 |
| Open-source Models (<10B) | | | | | | | | |
| Med-R1-2B | 51.5 | 66.2 | 39.1 | 39.9 | 33.6 | 11.2 | 17.9 | 37.0 |
| MedVLM-R1-2B | 51.8 | 66.4 | 39.7 | 42.3 | 33.8 | 11.8 | 19.1 | 37.8 |
| MedGemma-4B-IT | 66.7 | 72.2 | 52.2 | 56.2 | 45.6 | 12.8 | 21.6 | 46.8 |
| LLaVA-Med-7B | 50.6 | 26.4 | 39.4 | 42.0 | 34.4 | 9.9 | 16.1 | 31.3 |
| HuatuoGPT-V-7B | 69.3 | 72.8 | 51.2 | 52.9 | 40.9 | 10.1 | 21.9 | 45.6 |
| BioMediX2-8B | 68.6 | 75.2 | 52.9 | 58.9 | 45.9 | 13.4 | 25.2 | 48.6 |
| Qwen2.5VL-7B | 73.4 | 76.4 | 52.6 | 57.3 | 42.1 | 12.8 | 26.3 | 48.7 |
| InternVL2.5-8B | 74.2 | 76.4 | 52.4 | 53.7 | 42.4 | 11.6 | 26.1 | 48.1 |
| InternVL3-8B | 77.5 | 75.4 | 57.7 | 62.1 | 48.5 | 13.1 | 31.2 | 52.2 |
| Lingshu-7B | 74.5 | 76.6 | 55.9 | 63.3 | 56.2 | 16.5 | 26.3 | 52.8 |
| Open-source Models (>10B) | | | | | | | | |
| HealthGPT-14B | 80.2 | 68.0 | 63.4 | 66.2 | 39.8 | 11.3 | 25.7 | 50.7 |
| HuatuoGPT-V-34B | 74.7 | 72.2 | 54.7 | 58.8 | 42.7 | 11.4 | 26.5 | 48.7 |
| MedDr-40B | 65.2 | 77.4 | 38.4 | 59.2 | 44.3 | 12.0 | 24.0 | 45.8 |
| InternVL3-14B | 81.7 | 77.2 | 62.0 | 70.1 | 49.5 | 14.1 | 37.9 | 56.1 |
| Qwen2.5VL-32B | 83.2 | 68.4 | 63.0 | 71.6 | 54.2 | 15.6 | 37.6 | 56.2 |
| InternVL2.5-38B | 84.6 | 74.2 | 65.9 | 74.4 | 55.0 | 14.7 | 39.9 | 58.4 |
| InternVL3-38B | 83.8 | 73.2 | 64.9 | 73.5 | 54.6 | 16.0 | 42.5 | 58.4 |
| Lingshu-32B | 84.7 | 77.8 | 66.1 | 74.7 | 65.4 | 22.7 | 41.1 | 61.8 |

| Models | MIMIC-CXR | | | | | CheXpert Plus | | | | | IU-Xray | | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| | ROUGE-L | CIDEr | RaTE | SembScore | RadCliQ-v1⁻¹ | ROUGE-L | CIDEr | RaTE | SembScore | RadCliQ-v1⁻¹ | ROUGE-L | CIDEr | RaTE | SembScore | RadCliQ-v1⁻¹ |
| Proprietary Models | | | | | | | | | | | | | | | |
| GPT-4.1 | 9.0 | 82.8 | 51.3 | 23.9 | 57.1 | 24.5 | 78.8 | 45.5 | 23.2 | 45.5 | 30.2 | 124.6 | 51.3 | 47.5 | 80.3 |
| Claude Sonnet 4 | 20.0 | 56.6 | 45.6 | 19.7 | 53.4 | 22.0 | 59.5 | 43.5 | 18.9 | 43.3 | 25.4 | 88.3 | 55.4 | 41.0 | 72.1 |
| Gemini-2.5-Flash | 25.4 | 80.7 | 50.3 | 29.7 | 59.4 | 23.6 | 72.2 | 44.3 | 27.4 | 44.0 | 33.5 | 129.3 | 55.6 | 50.9 | 91.6 |
| Open-source Models (<10B) | | | | | | | | | | | | | | | |
| Med-R1-2B | 19.3 | 35.4 | 40.6 | 14.8 | 42.4 | 18.6 | 37.1 | 38.5 | 17.8 | 37.6 | 16.1 | 38.3 | 41.4 | 12.5 | 43.6 |
| MedVLM-R1-2B | 20.3 | 40.1 | 41.6 | 14.2 | 48.3 | 20.9 | 43.5 | 38.9 | 15.5 | 40.9 | 22.7 | 61.1 | 46.1 | 22.7 | 54.3 |
| MedGemma-4B-IT | 25.6 | 81.0 | 52.4 | 29.2 | 62.9 | 27.1 | 79.0 | 47.2 | 29.3 | 46.6 | 30.8 | 103.6 | 57.0 | 46.8 | 86.7 |
| LLaVA-Med-7B | 15.0 | 43.4 | 12.8 | 18.3 | 52.9 | 18.4 | 45.5 | 38.8 | 23.5 | 44.0 | 18.8 | 68.2 | 40.9 | 16.0 | 58.1 |
| HuatuoGPT-V-7B | 23.4 | 69.5 | 48.9 | 20.0 | 48.2 | 21.3 | 64.7 | 44.2 | 19.3 | 39.4 | 29.6 | 104.3 | 52.9 | 40.7 | 63.6 |
| BioMediX2-8B | 20.0 | 52.8 | 44.4 | 17.7 | 53.0 | 18.1 | 47.9 | 40.8 | 21.6 | 43.3 | 19.6 | 58.8 | 40.1 | 11.6 | 53.8 |
| Qwen2.5VL-7B | 24.1 | 63.7 | 47.0 | 18.4 | 55.1 | 22.2 | 62.0 | 41.0 | 17.2 | 43.1 | 26.5 | 78.1 | 48.4 | 36.3 | 66.1 |
| InternVL2.5-8B | 23.2 | 61.8 | 47.0 | 21.0 | 56.2 | 20.6 | 58.5 | 43.1 | 19.7 | 42.7 | 24.8 | 75.4 | 51.1 | 36.7 | 67.0 |
| InternVL3-8B | 22.9 | 66.2 | 48.2 | 21.5 | 55.1 | 20.9 | 65.4 | 44.3 | 25.2 | 43.7 | 22.9 | 76.2 | 51.2 | 31.3 | 59.9 |
| Lingshu-7B | 30.8 | 109.4 | 52.1 | 30.0 | 69.2 | 26.5 | 79.0 | 45.4 | 26.8 | 47.3 | 41.2 | 180.7 | 57.6 | 48.4 | 108.1 |
| Open-source Models (>10B) | | | | | | | | | | | | | | | |
| HealthGPT-14B | 21.4 | 64.7 | 48.4 | 16.5 | 52.7 | 20.6 | 66.2 | 44.4 | 22.7 | 42.6 | 22.9 | 81.9 | 50.8 | 16.6 | 56.9 |
| HuatuoGPT-V-34B | 23.5 | 68.5 | 48.5 | 23.0 | 47.1 | 22.5 | 62.8 | 42.9 | 22.1 | 39.7 | 28.2 | 108.3 | 54.4 | 42.2 | 59.3 |
| MedDr-40B | 15.7 | 62.3 | 45.2 | 12.2 | 47.0 | 24.1 | 66.1 | 44.7 | 24.2 | 44.7 | 19.4 | 62.9 | 40.3 | 7.3 | 48.9 |
| InternVL3-14B | 22.0 | 63.7 | 48.6 | 17.4 | 46.5 | 20.4 | 60.2 | 44.1 | 20.7 | 39.4 | 24.8 | 93.7 | 55.0 | 38.7 | 55.0 |
| Qwen2.5VL-32B | 15.7 | 50.2 | 47.5 | 17.1 | 45.2 | 15.2 | 54.8 | 43.4 | 18.5 | 40.3 | 18.9 | 73.3 | 51.3 | 38.1 | 54.0 |
| InternVL2.5-38B | 22.7 | 61.4 | 47.5 | 18.2 | 54.9 | 21.6 | 60.6 | 42.6 | 20.3 | 45.4 | 28.9 | 96.5 | 53.5 | 38.5 | 69.7 |
| InternVL3-38B | 22.8 | 64.6 | 47.9 | 18.1 | 47.2 | 20.5 | 62.7 | 43.8 | 20.2 | 39.4 | 25.5 | 90.7 | 53.5 | 33.1 | 55.2 |
| Lingshu-32B | 28.8 | 96.4 | 50.8 | 30.1 | 67.1 | 25.3 | 75.9 | 43.4 | 24.2 | 47.1 | 42.8 | 189.2 | 63.5 | 54.6 | 130.4 |