Wan 2.2, FLUX, FLUX Krea & Qwen Image Just got Upgraded: Ultimate Tutorial for Open Source SOTA Image & Video Gen Models

By Furkan Gözükara - PhD Computer Engineer, SECourses 10 min read

Tutorial Video https://youtu.be/3BFDcO2Ysu4

Info

Wan 2.2, Qwen Image, FLUX, FLUX Krea, all these models are the SOTA open-source models and in this master tutorial I will show you how to use these models in the easiest, most performant, and most accurate way. After doing almost one week of research, I have determined the very best presets and prepared this tutorial. With literally one click you will be able to install, download models, set presets, and use these amazing models. Wan 2.2 is currently the king of video generation models and now it is super fast with lightx2v Wan2.2-Lightning LoRAs. Moreover, Qwen Image is now ultra-fast with the recently released 8-step LoRA with almost no quality loss. Furthermore, I have updated FLUX and FLUX Krea presets to improve image generation quality. Finally, I have trained FLUX Krea with our existing DreamBooth and LoRA training workflows, analyzed and shared the results in this tutorial. As additional information, I have shown the upcoming Qwen Image editing/inpainting model and the Qwen Image training application I am developing.

- ▶️ SwarmUI Installers, Presets and Model Downloader App : 🔗 https://www.patreon.com/posts/114517862

- ▶️ ComfyUI Backend Installer : 🔗 https://www.patreon.com/posts/105023709

- ▶️ FLUX / FLUX Krea DreamBooth Training : 🔗 https://www.patreon.com/posts/112099700

- ▶️ FLUX / FLUX Krea LoRA Training : 🔗 https://www.patreon.com/posts/110879657

- ▶️ Main SwarmUI Installation Tutorial : 🔗 https://youtu.be/fTzlQ0tjxj0

- ▶️ RunPod SwarmUI Installation Tutorial : 🔗 https://youtu.be/R02kPf9Y3_w

- ▶️ Massed Compute SwarmUI Installation Tutorial (starting 21:32) : 🔗 https://youtu.be/8cMIwS9qo4M

Video Chapters

- 0:00 Introduction to New State-of-the-Art AI Models

- 0:43 Wan 2.2 vs Wan 2.1 Image-to-Video Comparison

- 1:43 Huge Improvement with New Wan 2.2 Text-to-Video Presets

- 2:44 More Examples of New Wan 2.2 Presets (Text & Image-to-Video)

- 3:08 Using RIFE for Smooth Frame Interpolation (2x FPS)

- 4:30 Image Generation: Wan 2.2 Realism vs FLUX Dev & Krea Dev

- 5:08 Introducing Ultra-Fast Qwen Image 8-Step Preset

- 5:44 Coming Soon: Qwen Image Editing Capabilities Preview

- 6:10 Comparing Qwen Image Presets (High Quality, Fast & Realism)

- 7:14 Behind the Scenes: The Extensive Testing Process for Presets

- 7:42 FLUX Krea Dev Training Experiments (DreamBooth & LoRA)

- 8:21 Updates: Qwen Training App, ComfyUI & SwarmUI Installers

- 8:59 How to Update SwarmUI and ComfyUI Installations

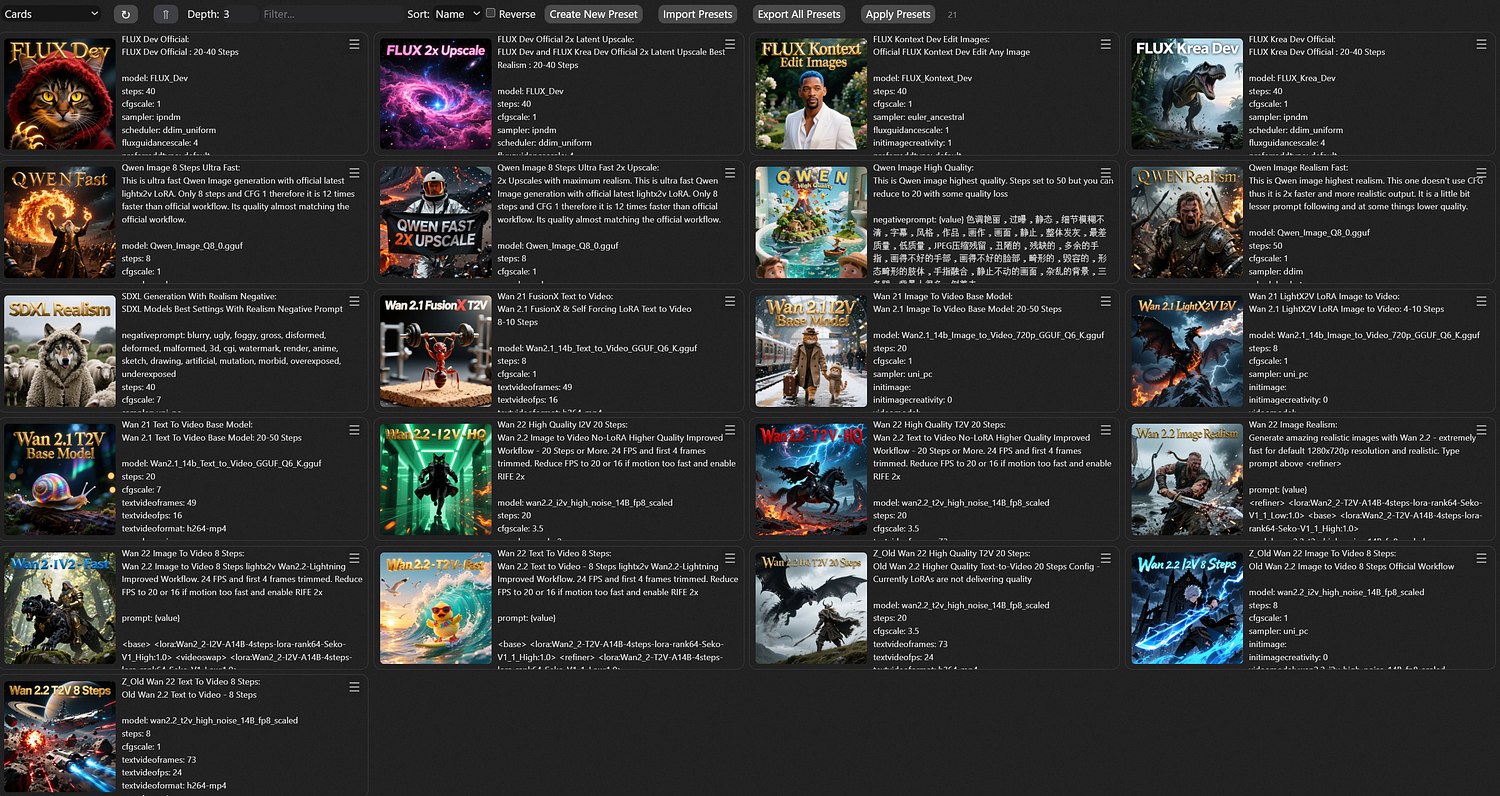

- 10:05 Importing New Presets into SwarmUI

- 10:51 Easiest Way: Using the Automatic Preset Import Script

- 12:22 Using the Model Downloader for Required AI Models

- 13:22 Configuring Downloader for ComfyUI & Forge WebUI

- 15:31 Demo: Generating a Wan 2.2 Image-to-Video (8-Steps)

- 17:02 Using Google Studio AI for High-Quality Prompt Generation

- 18:19 Starting the Generation & Multi-GPU Trick

- 19:46 Advanced Video Options: Frames, FPS, and RIFE Settings

- 21:11 Demo: Generating a Wan 2.2 Text-to-Video (8-Steps)

- 22:27 Live Result: Image-to-Video Generation Finished

- 23:34 Demo: Ultra-Fast Image Generation with Qwen (8-Steps)

- 24:50 Live Result: Text-to-Video Generation Finished (Amazing Quality)

- 25:21 Generation Speed Analysis & Downloading Your Video

- 26:38 Comparing FLUX Krea Dev & Qwen Realism Presets

- 28:46 How to Upscale Images to 2x High Resolution

- 29:47 Summary of New Presets and Recommendations

- 30:40 In-Depth: Training on FLUX Krea Dev (LoRA & DreamBooth)

- 33:48 Coming Soon: One-Click Qwen Image Training Application

- 36:11 Join The Community (Discord & Reddit) & Final Words

Exploring Cutting-Edge AI Image Generation Models: Qwen-Image, FLUX.1, FLUX.1 Krea, and Wan 2.2

In the rapidly evolving landscape of artificial intelligence, image generation models have emerged as transformative tools, enabling creators, designers, and developers to produce stunning visuals from simple text descriptions. These models leverage advanced deep learning techniques to bridge the gap between human imagination and digital reality, offering capabilities ranging from photorealistic rendering to intricate editing and even video extension. As of 2025, several standout models have pushed the boundaries of what’s possible, including Alibaba’s Qwen-Image, Black Forest Labs’ FLUX.1 series, the specialized FLUX.1 Krea variant, and Alibaba’s Wan 2.2. This article delves into each model in ultra-detailed depth, exploring their architectures, capabilities, performance metrics, and practical applications. By examining these innovations, we uncover how they are reshaping creative workflows across industries such as advertising, entertainment, and education.

Qwen-Image: Alibaba’s Multimodal Powerhouse for Generation and Editing

Qwen-Image represents a significant leap in open-source image generation technology, developed by Alibaba’s Qwen team. Released in mid-2025, this model stands out for its seamless integration of text-to-image generation with advanced editing and understanding features, making it a versatile foundation for multimodal AI applications.

Architecture and Parameters

At its core, Qwen-Image employs a Multimodal Diffusion Transformer (MMDiT) architecture, which combines diffusion-based generation with transformer layers optimized for handling both visual and textual data. The model boasts 20 billion parameters, striking a balance between computational efficiency and high-fidelity output. This architecture allows for flexible handling of various aspect ratios and styles, supported by a robust Vision-Language (VL) component derived from Qwen2.5-VL for semantic control and a Variational Autoencoder (VAE) for appearance fidelity.

Capabilities

Qwen-Image excels in a broad spectrum of tasks:

- Text-to-Image Generation: It generates high-resolution images (up to 4K) from detailed text prompts in English and Chinese, supporting aspect ratios like 1:1, 16:9, 9:16, 4:3, 3:4, 3:2, and 2:3. Styles range from photorealistic and cinematic to impressionist, anime, and minimalist. For instance, it can create complex scenes such as a coffee shop entrance with specific signage, neon lights, and posters incorporating precise text like mathematical constants.

- Image Editing: Building on its foundation, the Qwen-Image-Edit variant extends capabilities to semantic and appearance editing. Semantic edits include style transfers (e.g., converting portraits to Studio Ghibli aesthetics), object rotation (90 or 180 degrees), and IP creation like themed emoji packs. Appearance edits allow precise modifications, such as adding reflective signboards, removing fine details like hair strands, changing colors of specific elements (e.g., turning a single letter blue), or altering backgrounds while preserving overall composition. Text editing is bilingual, maintaining font, size, and style during additions, deletions, or modifications — ideal for editing posters or calligraphy.

- Image Understanding and Additional Features: The model supports object detection, semantic segmentation, depth estimation, edge detection (Canny), novel view synthesis, and super-resolution. It can even perform chained edits, iteratively correcting errors in artworks like ancient Chinese calligraphy.

Training and Benchmarks

While specific training data details are not publicly disclosed, Qwen-Image was trained on diverse datasets emphasizing complex text rendering and multilingual support. Benchmarks on the AI Arena platform use Elo ratings for pairwise comparisons, where Qwen-Image ranks highly against state-of-the-art closed-source APIs. It achieves superior performance in text rendering accuracy and editing precision, outperforming many competitors in tasks requiring bilingual text handling and fine-grained modifications.

Use Cases

Qwen-Image is ideal for graphic designers creating multilingual marketing materials, artists experimenting with style transfers, and developers building apps for image enhancement. For example, e-commerce platforms could use it to generate and edit product visuals dynamically, while educators might create customized illustrations for bilingual content.

Licensed under Apache 2.0, Qwen-Image is accessible via Hugging Face and ModelScope, with easy integration into frameworks like Diffusers. A sample inference takes about 50 steps on a GPU, producing results in seconds to minutes depending on hardware.

FLUX.1: Black Forest Labs’ High-Performance Text-to-Image Foundation

FLUX.1, developed by Black Forest Labs in 2024 and refined into 2025, is a family of text-to-image models renowned for their speed, quality, and accessibility. It includes variants like FLUX.1 [dev] and FLUX.1 [schnell], each tailored for different use scenarios, emphasizing rectified flow transformers for efficient generation.

Architecture and Parameters

FLUX.1 models utilize a rectified flow transformer architecture, which optimizes the diffusion process for faster sampling. Both [dev] and [schnell] variants feature 12 billion parameters, enabling complex scene understanding and generation. The [schnell] version incorporates latent adversarial diffusion distillation, allowing images to be generated in just 1 to 4 steps, while [dev] uses guidance distillation for enhanced efficiency without sacrificing quality.

Capabilities

- Text-to-Image Generation: FLUX.1 produces high-quality, diverse images from text prompts, supporting resolutions up to 1024x1024 and beyond. It excels in photorealism, intricate details, and prompt adherence, generating everything from abstract art to realistic scenes. For example, it can create a frog holding a sign saying “hello world” with cinematic composition.

- Variants-Specific Features: [schnell] prioritizes speed for quick iterations, ideal for real-time applications. [dev] offers slightly higher quality, second only to the proprietary [pro] variant, with better handling of nuanced prompts.

- Additional Tools: Supports image-to-image editing via dedicated endpoints, transforming existing images while maintaining fidelity.

Training and Benchmarks

Trained on vast datasets with distillation techniques to reduce biases and improve efficiency, FLUX.1 matches or exceeds closed-source models like Midjourney in output quality and prompt following. Benchmarks highlight its competitive edge in visual appeal and diversity, with low failure rates on complex prompts. Inference speed is a standout: [schnell] completes generations in under a second on high-end GPUs.

Use Cases

FLUX.1 is perfect for rapid prototyping in game development, social media content creation, and scientific visualization. Artists can use [dev] for detailed artworks, while [schnell] suits mobile apps needing instant results. It’s available under Apache 2.0 for [schnell] and a non-commercial license for [dev], integrable via Diffusers or ComfyUI.

FLUX.1 Krea: The Aesthetic-Focused Variant for Photorealistic Excellence

FLUX.1 Krea [dev], a 2025 collaboration between Black Forest Labs and Krea AI, refines the standard FLUX.1 [dev] into an “opinionated” model emphasizing aesthetics and photorealism. This variant addresses common critiques of AI-generated art, such as oversaturation, by delivering more natural, visually compelling outputs.

Architecture and Parameters

Like its parent, it uses a 12 billion parameter rectified flow transformer with guidance distillation. The fine-tuning introduces specialized layers for aesthetic enhancement, making it a drop-in replacement for FLUX.1 [dev] in existing systems.

Capabilities

- Text-to-Image Generation: Generates images with a strong focus on aesthetic photography, producing photorealistic results that avoid the “AI look.” It handles complex prompts with superior texture, lighting, and composition, such as detailed landscapes or portraits.

- Differences from Standard FLUX.1 [dev]: Krea is fine-tuned for resilience against violative inputs and emphasizes photorealism over general versatility. It filters NSFW content and undergoes targeted fine-tuning to prevent abuse, resulting in cleaner, more artistic outputs.

- Editing and Extensions: Supports style-specific generations and can be used in workflows for video or enhanced editing via Krea’s platform.

Training and Benchmarks

Pre-release evaluations show higher resilience and quality in aesthetic categories compared to other open models. Training includes mitigation against biases, CSAM, and NCII, with multiple fine-tuning rounds. It outperforms standard FLUX in photorealistic benchmarks, achieving higher Elo scores in user preference tests.

Use Cases

Ideal for photographers, advertisers, and filmmakers seeking hyper-realistic visuals. Krea’s integration allows for intuitive interfaces, making it accessible for non-technical users. Licensed under the FLUX.1 [dev] non-commercial policy, it’s available on Hugging Face and Krea.ai.

Wan 2.2: Alibaba’s Versatile Model for Image and Video Generation

Wan 2.2, also known as Tongyi Wanxiang 2.2 from Alibaba’s Tongyi Lab, is a 2025 open-source series excelling in both image and video generation. With a focus on realism and control, it introduces innovative architectures like Mixture of Experts (MoE) for video tasks, while its image component delivers un-distilled, high-quality results.

Architecture and Parameters

The text-to-image variant is a 14 billion parameter model supporting LoRA for fine-tuning on both low-noise and high-noise transformers. For video, it employs MoE in diffusion models, enabling efficient handling of cinematic elements like lighting, color, and lens language. Variants include a 5B parameter version for lighter deployments.

Capabilities

- Text-to-Image Generation: Produces hyper-realistic images with fine details, excelling in portraits, textures, and styles. It supports high resolutions (e.g., 720P equivalents) and complex prompts, maintaining identity in full-body shots or style consistency in artistic renders.

- Image-to-Video and Text-to-Video: Extends images to 24fps videos at 720P, incorporating control signals for motion, with flexible combinations of over 100 cinematic styles.

- Editing and Fine-Tuning: LoRA enables custom training for specific styles or subjects, such as historical art (e.g., Thomas Cole paintings) or virtual photography.

Training and Benchmarks

As an un-distilled model, Wan 2.2 achieves superior quality post-training compared to distilled alternatives. Benchmarks demonstrate excellence in realism and prompt adherence, rivaling models like Sora in video coherence and FLUX in image detail. It handles diverse inputs with low VRAM requirements (e.g., 24GB for full use), making it accessible.

Use Cases

Wan 2.2 suits video production, e-commerce visuals, and AI art training. Filmmakers can generate short clips from stills, while designers fine-tune for branded styles. Open-sourced under permissive licenses.

Comparative Analysis

To highlight the strengths of these models, the following table provides a side-by-side comparison:

Qwen-Image shines in editing precision, FLUX.1 in efficiency, Krea in visual appeal, and Wan 2.2 in multimodal extension. Challenges include potential biases in all models and hardware demands for high-res outputs.

Conclusion

The Qwen-Image, FLUX.1, FLUX.1 Krea, and Wan 2.2 models exemplify the pinnacle of AI-driven creativity in 2025, democratizing access to professional-grade image and video tools. Whether you’re generating intricate scenes, editing with surgical accuracy, or extending visuals into motion, these models offer unparalleled flexibility. As AI continues to advance, integrating them into workflows will unlock new realms of innovation, empowering users to turn abstract ideas into tangible masterpieces.