---
base_model: meta-llama/Meta-Llama-3.1-8B-Instruct
library_name: peft
license: openrail
language:
- en
pipeline_tag: text-classification
---

<h1 align="center">RoGuard 1.0: Advancing Safety for LLMs with Robust Guardrails</h1>

<div align="center" style="line-height: 1;">

<a href="https://huggingface.co/Roblox/RoGuard" target="_blank"><img alt="Hugging Face" src="https://img.shields.io/badge/%F0%9F%A4%97%20Hugging%20Face-RoGuard%201.0-ffc107?color=ffc107&logoColor=white"/></a>

<a href="https://github.com/Roblox/RoGuard-1.0"><img alt="github" src="https://img.shields.io/badge/🤖%20Github-RoGuard%201.0-ff6b6b?color=1783ff&logoColor=white"/></a>

<a href="https://github.com/Roblox/RoGuard/blob/main/LICENSE"><img src="https://img.shields.io/badge/Model%20License-RAIL_MS-green" alt="Model License"></a>

</div>

<div align="center" style="line-height: 1;">

<a href="https://huggingface.co/datasets/Roblox/RoGuard-Eval" target="_blank"><img alt="Hugging Face" src="https://img.shields.io/badge/%F0%9F%A4%97%20Hugging%20Face-RoGuardEval-ffc107?color=1783ff&logoColor=white"/></a>

<a href="https://creativecommons.org/licenses/by-nc-sa/4.0/"><img src="https://img.shields.io/badge/Data%20License-CC_BY_NC_4.0-blue" alt="Data License"></a>

</div>

<div align="center" style="line-height: 1;">

<a href="https://corp.roblox.com/newsroom/2025/07/roguard-advancing-safety-for-llms-with-robust-guardrails" target="_blank"><img alt="Roblox Blog" src="https://img.shields.io/badge/Roblox-Blog-000000.svg?logo=Roblox" height="22px"></a>

<img alt="ArXiv report (coming soon)" src="https://img.shields.io/badge/ArXiv-Report%20(coming%20soon)-b5212f.svg?logo=arxiv" height="22px">

</div>

RoGuard 1.0 is a state-of-the-art (SOTA), instruction fine-tuned LLM designed to help safeguard our Text Generation API. It performs safety classification at both the prompt and response levels, deciding whether each input or output violates our policies. This dual-level assessment is essential for moderating both user queries and the responses generated by the underlying model. At the heart of the system is an LLM fine-tuned from Llama-3.1-8B-Instruct; we trained it with a particular focus on high-quality instruction tuning to optimize safety-judgment performance.
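
The dual-level flow described above can be pictured as a gate wrapped around a text generator. The following is a minimal, hypothetical sketch: `classify` and `generate` are placeholder callables standing in for a RoGuard-style classifier and the underlying generation model, the refusal message is illustrative, and the keyword-based `toy_classify` is only a stand-in for a real safety model. It does not reflect the actual Text Generation API or RoGuard's prompt format.

```python
from dataclasses import dataclass
from typing import Callable, Optional

# Hypothetical classifier signature: returns True when the text violates policy.
# `response` is None for a prompt-level check and the generated text for a
# response-level check.
Classifier = Callable[[str, Optional[str]], bool]
Generator = Callable[[str], str]

REFUSAL = "Sorry, I can't help with that."  # illustrative placeholder message


@dataclass
class ModerationResult:
    output: str
    prompt_violating: bool
    response_violating: bool


def moderated_generate(prompt: str, classify: Classifier, generate: Generator) -> ModerationResult:
    """Gate a generator with prompt-level and response-level safety checks."""
    # 1. Prompt-level check: block a violating user input before any generation.
    if classify(prompt, None):
        return ModerationResult(REFUSAL, prompt_violating=True, response_violating=False)

    # 2. Generate a candidate response only for non-violating prompts.
    response = generate(prompt)

    # 3. Response-level check: judge the output together with its prompt.
    if classify(prompt, response):
        return ModerationResult(REFUSAL, prompt_violating=False, response_violating=True)

    return ModerationResult(response, prompt_violating=False, response_violating=False)


# Toy stand-ins (hypothetical): flag any text containing the word "forbidden".
def toy_classify(prompt: str, response: Optional[str] = None) -> bool:
    text = response if response is not None else prompt
    return "forbidden" in text.lower()


def toy_generate(prompt: str) -> str:
    return f"Echo: {prompt}"


print(moderated_generate("Hello there", toy_classify, toy_generate).output)  # prints: Echo: Hello there
```

Checking the prompt first avoids spending generation compute on inputs that would be refused anyway, while the second check catches unsafe outputs produced from prompts that looked benign.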

## 📊 Model Benchmark Results

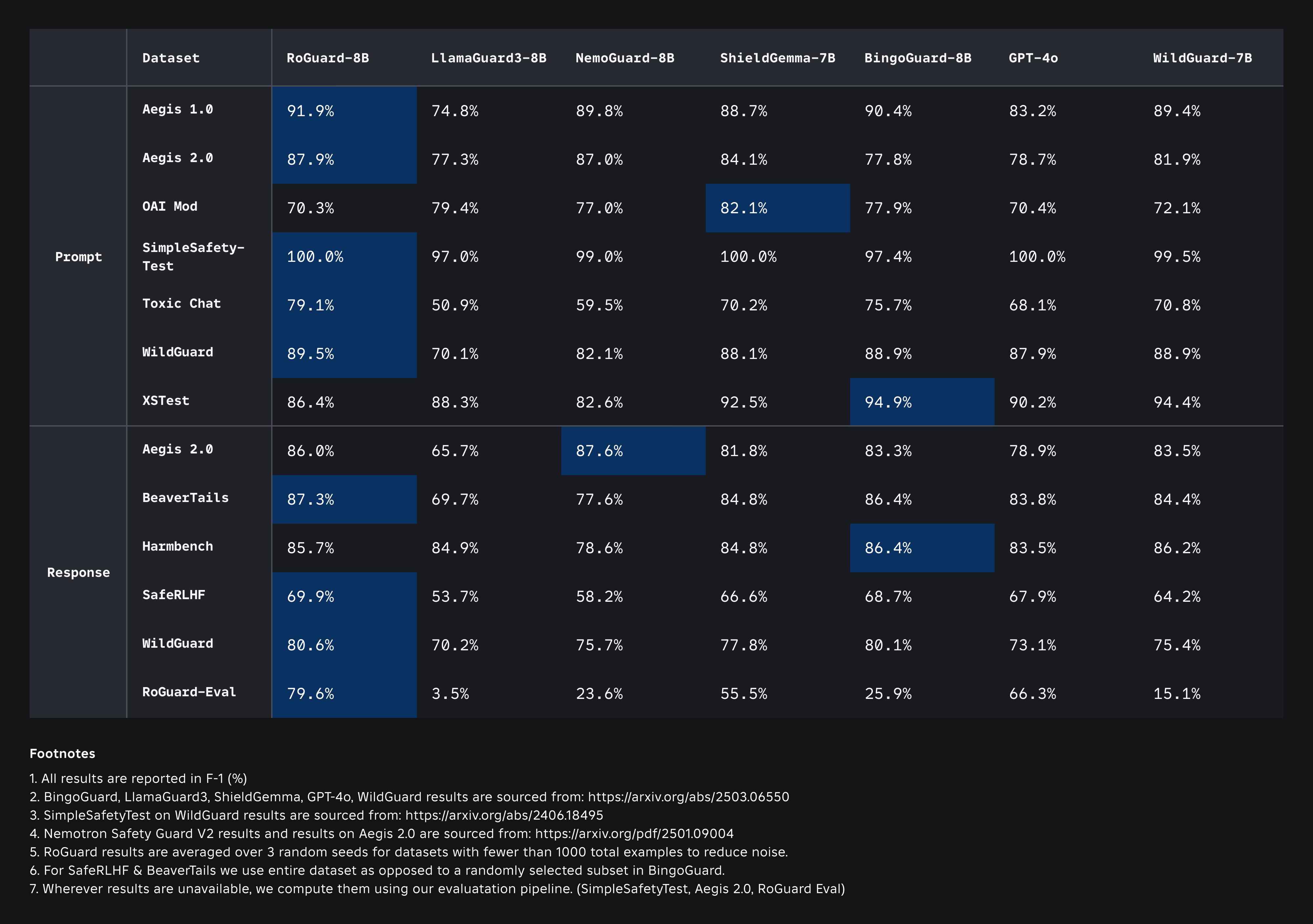

We benchmark RoGuard 1.0 on a comprehensive set of open-source prompt and response datasets, as well as on RoGuard-Eval, which lets us evaluate the model on both in-domain and out-of-domain data. We report F-1 scores for binary violating/non-violating classification. In the table above, we compare our performance with that of several well-known models: RoGuard 1.0 outperforms them while also generalizing to out-of-domain datasets.

- **Prompt Metrics**: These evaluate how well the model classifies potentially harmful **user inputs**.

- **Response Metrics**: These measure how well the model classifies LLM-generated **responses**, i.e., whether those outputs are safe and aligned.
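
For reference, the F-1 score for this binary task treats "violating" as the positive class and is the harmonic mean of precision and recall. The sketch below assumes labels are encoded as booleans (`True` = violating); it illustrates the standard formula only and is not RoGuard's evaluation code. The function name `binary_f1` and the toy labels are made up for illustration.

```python
from typing import Sequence


def binary_f1(y_true: Sequence[bool], y_pred: Sequence[bool]) -> float:
    """F-1 score with 'violating' (True) as the positive class.

    F1 = 2 * precision * recall / (precision + recall) = 2TP / (2TP + FP + FN)
    """
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")

    tp = sum(t and p for t, p in zip(y_true, y_pred))        # violating, flagged
    fp = sum((not t) and p for t, p in zip(y_true, y_pred))  # non-violating, but flagged
    fn = sum(t and (not p) for t, p in zip(y_true, y_pred))  # violating, missed

    denom = 2 * tp + fp + fn
    # Convention chosen here: with no positives in labels or predictions, return 0.0.
    return 2 * tp / denom if denom else 0.0


# Made-up toy labels: True = violating, False = non-violating.
labels = [True, True, False, False, True]
preds = [True, False, False, True, True]
print(round(binary_f1(labels, preds), 3))  # prints: 0.667 (TP=2, FP=1, FN=1)
```

Using the `2TP / (2TP + FP + FN)` form avoids computing precision and recall separately, so it only needs a single zero-denominator guard.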

## 🔗 GitHub Repository

You can find the full source code and evaluation framework on GitHub:

👉 [Roblox/RoGuard on GitHub](https://github.com/Roblox/RoGuard)